- What Is Hadoop Backup and Recovery?
- Why Is Hadoop Backup Important?
- How to Back Up and Recover Hadoop Data With Native Tools?
- How to Protect Hadoop HDFS Files with Vinchin Backup & Recovery?
- Hadoop Backup and Recovery FAQs
- Conclusion
Hadoop powers many of today’s largest data lakes and analytics platforms. With so much critical data at stake, protecting it is not optional—it’s essential. What if someone deletes a key directory? What if hardware fails or ransomware strikes? That’s where Hadoop backup and recovery come into play. In this guide, we’ll break down what these terms mean, why they matter for every administrator, and how you can implement them using both native tools and modern solutions.

What Is Hadoop Backup and Recovery?

Hadoop backup and recovery refer to the strategies that protect your data stored in Hadoop clusters—and restore it after loss or corruption. Unlike traditional databases that store everything in one place, Hadoop uses its distributed file system (HDFS) to spread data across many nodes. This architecture brings speed but also unique challenges when it comes to safeguarding information.

A backup creates a point-in-time copy of your data—think of it as a safety net you can fall back on if something goes wrong. Recovery is about getting your data back into a usable state after an incident like accidental deletion or corruption. Both processes are vital for business continuity.

Backing up Hadoop isn’t just about copying files; you need to consider metadata (like NameNode information), application states (such as Hive schemas), user permissions, and even job configurations. Each piece plays a role in ensuring full recovery.

Why Is Hadoop Backup Important?

Many administrators believe that Hadoop’s built-in replication offers enough protection against failure—but does it really? Replication helps guard against disk or node failures by keeping multiple copies of each block across different servers. However, this feature alone cannot save you from every threat.

Imagine an employee accidentally deletes an important folder, or worse, a script runs amok because of a bug or malware. Every replica is deleted or corrupted together, because replication propagates each change to all copies. In such cases, there’s no way back unless you have true backups stored elsewhere.

Without proper backup strategies:

- Data loss could be permanent
- Downtime may stretch from hours into days
- Compliance requirements might be violated
- Business reputation could suffer

For example: If ransomware encrypts your HDFS directories overnight, replication won’t help—you need clean backups outside the affected cluster to recover quickly.

That’s why every organization running Hadoop should design robust backup policies that go beyond simple replication and cover accidental deletions, software bugs, cyberattacks, hardware failures, and natural disasters.

How to Back Up and Recover Hadoop Data With Native Tools?

Hadoop provides several native tools designed for backup and recovery tasks. Each tool has strengths—and limitations—so understanding how they work is crucial before relying on them for critical workloads.

HDFS Replication: Not a Backup

By default, HDFS stores three copies of each data block across different nodes within the cluster. This setup protects against single-node failures but doesn’t count as true backup because all replicas reflect changes immediately—including deletions or corruptions.

If someone deletes a file by mistake or malware corrupts files:

- Every replica gets affected at once
- There’s no historical version to roll back

Replication ensures availability—not recoverability—so always pair it with real backups stored separately from production clusters.

HDFS Snapshots

HDFS snapshots offer point-in-time read-only copies of directories within your cluster. They’re quick to create without interrupting running jobs—a lifesaver when users make mistakes like deleting files they shouldn’t have touched!

However:

- Snapshots reside on the same physical cluster as live data; if the whole cluster is lost (fire, flood, ransomware), both the primary data and the snapshots go with it.
- Snapshots operate at the file level; they don’t capture database schemas or application-specific states.
- A new snapshot takes almost no extra space, but it keeps the blocks of every file that is later changed or deleted, so long-lived snapshots on busy directories steadily increase storage use. Monitor usage closely!

To use HDFS snapshots:

1. Enable snapshots on your chosen directory (this requires HDFS superuser privileges):

hdfs dfsadmin -allowSnapshot /data/important

2. Create a snapshot at any time:

hdfs dfs -createSnapshot /data/important my_snapshot_202406

3. Restore individual files by copying them out of the read-only .snapshot directory:

hdfs dfs -cp /data/important/.snapshot/my_snapshot_202406/file.txt /data/important/file.txt

4. Delete old snapshots:

hdfs dfs -deleteSnapshot /data/important my_snapshot_202406
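The snapshot steps above are easy to wrap in a small rotation script. The following is a minimal sketch, not a production tool: the names SNAP_DIR, PREFIX, KEEP, and DRY_RUN are assumptions introduced for illustration, and the script only prints the hdfs commands unless DRY_RUN is set to 0. It assumes snapshot names end in a YYYYMMDD date so that a plain sort is chronological.

```shell
#!/bin/sh
# Sketch only: SNAP_DIR, PREFIX, KEEP and DRY_RUN are illustrative names.
set -eu

SNAP_DIR="${SNAP_DIR:-/data/important}"
PREFIX="${PREFIX:-daily_}"
KEEP="${KEEP:-7}"          # number of most recent snapshots to retain
DRY_RUN="${DRY_RUN:-1}"    # 1 = print commands instead of running them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Build a snapshot name such as daily_20240601 from a YYYYMMDD date.
snapshot_name() {
  echo "${PREFIX}$1"
}

# Read snapshot names on stdin and print all but the newest $KEEP.
# The date suffix makes a plain sort chronological.
snapshots_to_prune() {
  sort | awk -v keep="$KEEP" \
    '{ names[NR] = $0 } END { for (i = 1; i <= NR - keep; i++) print names[i] }'
}

# List existing snapshot names that start with $PREFIX (needs a live cluster).
list_snapshots() {
  hdfs dfs -ls "$SNAP_DIR/.snapshot" |
    awk -F/ -v p="$PREFIX" 'index($NF, p) == 1 { print $NF }'
}

run hdfs dfs -createSnapshot "$SNAP_DIR" "$(snapshot_name "$(date +%Y%m%d)")"

# Pruning has to list snapshots on the cluster, so it is skipped in dry-run mode.
if [ "$DRY_RUN" != "1" ]; then
  list_snapshots | snapshots_to_prune | while read -r name; do
    run hdfs dfs -deleteSnapshot "$SNAP_DIR" "$name"
  done
fi
```

Run it once with the default dry-run setting to review the generated commands, then schedule it with DRY_RUN=0 once the retention count suits your storage budget.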

Snapshots provide fast rollback options but should never be your only line of defense—always combine them with off-cluster backups!

DistCp (Distributed Copy)

DistCp is a command-line utility designed for copying large datasets between Hadoop clusters—or out to external storage systems like cloud buckets or NFS shares—using parallel MapReduce jobs for efficiency.

DistCp shines when:

- Migrating entire directories between clusters
- Creating offsite backups outside production environments

It can handle petabytes efficiently thanks to parallelism but requires careful planning around bandwidth usage so as not to overload networks during big transfers.

A typical DistCp command looks like this:

hadoop distcp hdfs://source-cluster:8020/data/important hdfs://backup-cluster:8020/backup/important

You can also copy directly from HDFS into cloud storage endpoints if configured properly—for example:

hadoop distcp hdfs://mycluster/data gs://mybucket/hadoop-backup/
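Because large transfers can saturate the network, it helps to cap a DistCp job before launching it. The sketch below uses DistCp's -m option (maximum number of parallel copy tasks) and -bandwidth option (MB per second per task) and prints the resulting worst-case throughput. The values 20 and 50, and the helper names, are assumptions chosen for illustration; tune them to your own links.

```shell
#!/bin/sh
# Sketch only: MAX_MAPS, BANDWIDTH_MB and the helper names are illustrative.
set -eu

SRC="${SRC:-hdfs://source-cluster:8020/data/important}"
DST="${DST:-hdfs://backup-cluster:8020/backup/important}"
MAX_MAPS="${MAX_MAPS:-20}"          # upper bound on parallel copy tasks (-m)
BANDWIDTH_MB="${BANDWIDTH_MB:-50}"  # per-task limit in MB/s (-bandwidth)

# Worst-case aggregate throughput the job may use, in MB/s.
peak_mb_per_sec() {
  echo $(( $MAX_MAPS * $BANDWIDTH_MB ))
}

# Assemble the DistCp command line with both limits applied.
distcp_command() {
  echo "hadoop distcp -m $MAX_MAPS -bandwidth $BANDWIDTH_MB $SRC $DST"
}

echo "Peak network usage: $(peak_mb_per_sec) MB/s"
echo "Command: $(distcp_command)"
```

The script only prints the command so it can be reviewed first. If the peak figure exceeds what the link between clusters can spare, lower either value before running the job.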

For incremental backups (copying only changed files), combine DistCp with HDFS snapshots—a powerful approach explained below!

Handling Incremental Backups with DistCp and Snapshots

Incremental backups save time and bandwidth by transferring only new or modified files since your last snapshot rather than duplicating everything each run—a must-have feature for large-scale environments where full copies would take too long!

Here’s how admins typically set up incremental DistCp jobs:

1. Take an initial snapshot (SNAPSHOT_A) of your source directory using hdfs dfs -createSnapshot

2. After some time passes (daily/hourly/etc.), take another snapshot (SNAPSHOT_B)

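3. Run DistCp with the -update flag and the -diff option, which takes the two snapshot names as separate arguments:

hadoop distcp -update -diff SNAPSHOT_A SNAPSHOT_B hdfs://source-cluster/data hdfs://backup-cluster/data

This command copies only the files that changed between SNAPSHOT_A and SNAPSHOT_B, saving resources while keeping remote sites up to date. For -diff to work, the backup directory must already hold a snapshot named SNAPSHOT_A (created after the initial full copy) and must not have been modified since then. After a successful run, create SNAPSHOT_B on the backup directory so it can serve as the baseline for the next cycle. Schedule these jobs via cron or orchestration tools like Apache Oozie for automation, and always check the logs afterward so nothing slips through unnoticed.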
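One full incremental cycle can be sketched as a short script. This is an illustration under stated assumptions rather than a finished job: the function name incremental_sync and the DRY_RUN switch are hypothetical, the previous snapshot is assumed to exist on both clusters, and the backup directory is assumed to be unchanged since that snapshot. By default the script only prints the commands it would run.

```shell
#!/bin/sh
# Sketch only: incremental_sync and DRY_RUN are illustrative names.
set -eu

SRC="${SRC:-hdfs://source-cluster/data}"
DST="${DST:-hdfs://backup-cluster/data}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands instead of running them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# incremental_sync PREV NEW
# PREV must already exist as a snapshot on both SRC and DST.
incremental_sync() {
  prev="$1"
  new="$2"
  # 1. Freeze the current source state under the new snapshot name.
  run hdfs dfs -createSnapshot "$SRC" "$new"
  # 2. Ship only the differences between the two snapshots.
  run hadoop distcp -update -diff "$prev" "$new" "$SRC" "$DST"
  # 3. Record the same state on the target as the next baseline.
  run hdfs dfs -createSnapshot "$DST" "$new"
}

incremental_sync SNAPSHOT_A SNAPSHOT_B
```

Because of set -e, a failed DistCp run stops the script before the target snapshot is created, so a broken transfer never becomes the baseline for the next cycle.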

NameNode Metadata Backup

The NameNode acts as the brain of any Hadoop cluster: it stores critical metadata about the file system namespace, such as the directory tree and the file-to-block mapping (fsimage), plus recent changes (edit logs). If this information is lost to disk failure, corruption, or human error, the entire filesystem becomes inaccessible even though the raw blocks still exist on DataNodes!

Regularly backing up NameNode metadata is non-negotiable:

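1. Fetch the latest fsimage using hdfs dfsadmin -fetchImage /backup/fsimage.backup (the argument is a local directory that receives the image file)

2. Also copy the edit logs manually from their configured directory (dfs.namenode.edits.dir); rolling the edits beforehand with hdfs dfsadmin -rollEdits closes the current log segment so the copy is complete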

3. Store several generations securely offsite—in case recent ones get corrupted too!

4. Test restoring metadata periodically by spinning up test NameNodes using these saved images/logs
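The metadata steps above can be collected into one script that writes each backup into its own timestamped directory. Treat this as a sketch: BACKUP_ROOT, EDITS_DIR, and the function names are assumptions made for illustration, and EDITS_DIR in particular must be replaced with the value of dfs.namenode.edits.dir on your NameNode host (edit log segments sit in its current subdirectory). The script prints the commands unless DRY_RUN is set to 0.

```shell
#!/bin/sh
# Sketch only: BACKUP_ROOT, EDITS_DIR and the helper names are illustrative.
# EDITS_DIR must point at the "current" directory under dfs.namenode.edits.dir.
set -eu

BACKUP_ROOT="${BACKUP_ROOT:-/backup/namenode}"
EDITS_DIR="${EDITS_DIR:-/hadoop/dfs/name/current}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands instead of running them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Directory that holds one backup generation, e.g. /backup/namenode/20240601-020000.
generation_dir() {
  echo "$BACKUP_ROOT/$1"
}

# backup_metadata LABEL: fetch the fsimage, roll the edit log, copy the segments.
backup_metadata() {
  dest="$(generation_dir "$1")"
  run mkdir -p "$dest/edits"
  run hdfs dfsadmin -fetchImage "$dest"
  run hdfs dfsadmin -rollEdits
  run cp "$EDITS_DIR"/edits_* "$dest/edits/"
}

backup_metadata "$(date +%Y%m%d-%H%M%S)"
```

Keeping every generation in a separate directory makes it straightforward to retain several of them and to ship the whole tree offsite with whatever copy tool you already use.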

Neglecting this step risks total cluster downtime during disaster events—even if all raw blocks survive physically intact!

How to Protect Hadoop HDFS Files with Vinchin Backup & Recovery?

While robust storage architectures like Hadoop HDFS provide inherent resilience, comprehensive backup remains essential for true data protection. Vinchin Backup & Recovery is an enterprise-grade solution purpose-built for safeguarding mainstream file storage—including Hadoop HDFS environments—as well as Windows/Linux file servers, NAS devices, and S3-compatible object storage. Specifically optimized for large-scale platforms like Hadoop HDFS, Vinchin Backup & Recovery delivers exceptionally fast backup speeds that surpass competing products thanks to advanced technologies such as simultaneous scanning/data transfer and merged file transmission.

Among its extensive capabilities, five stand out as particularly valuable for protecting critical big-data assets: incremental backup (capturing only changed files), wildcard filtering (targeting specific datasets), multi-level compression (reducing space usage), cross-platform restore (recovering backups onto any supported target including other file servers/NAS/Hadoop/object storage), and integrity check (verifying backups remain unchanged). Together these features ensure efficient operations while maximizing security and flexibility across diverse infrastructures.

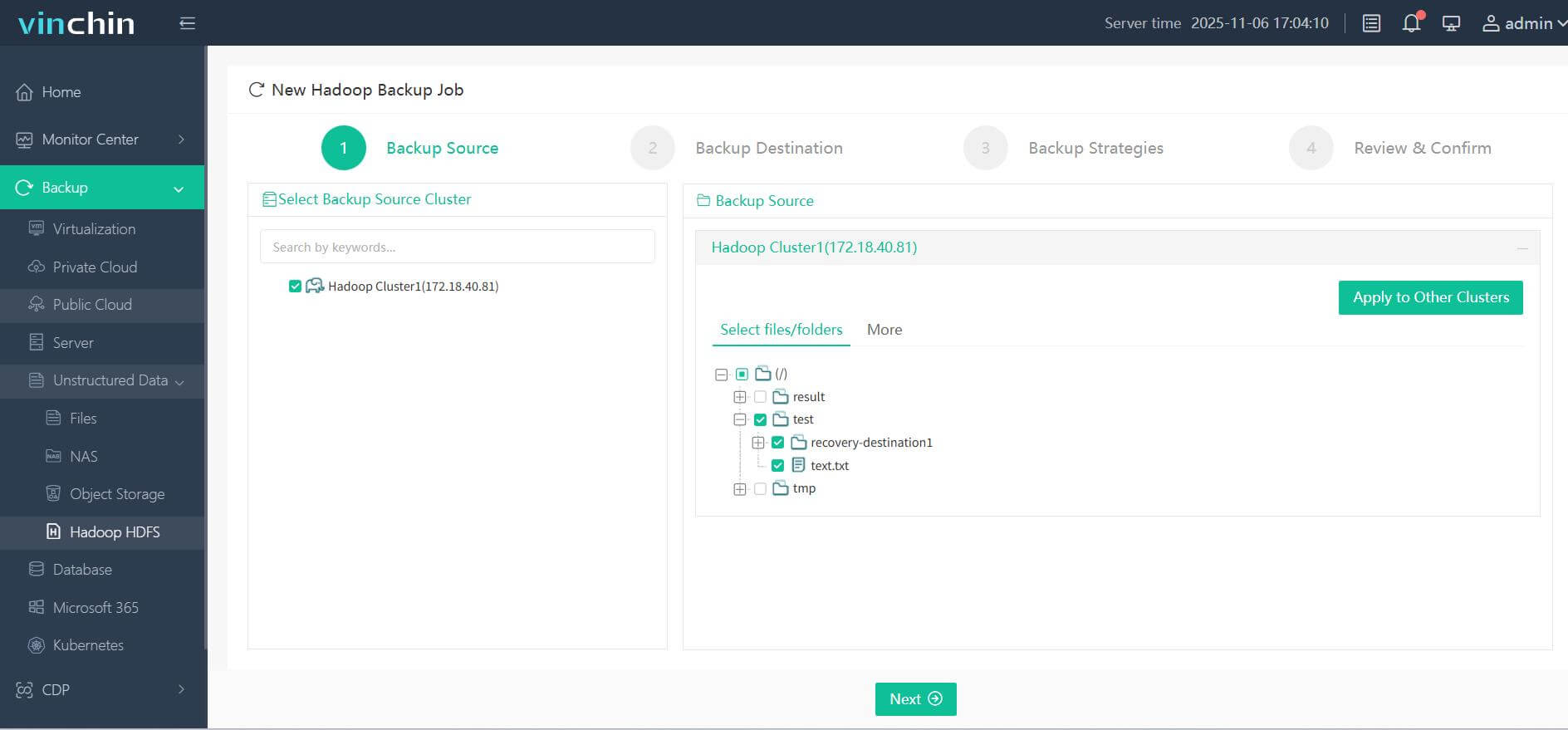

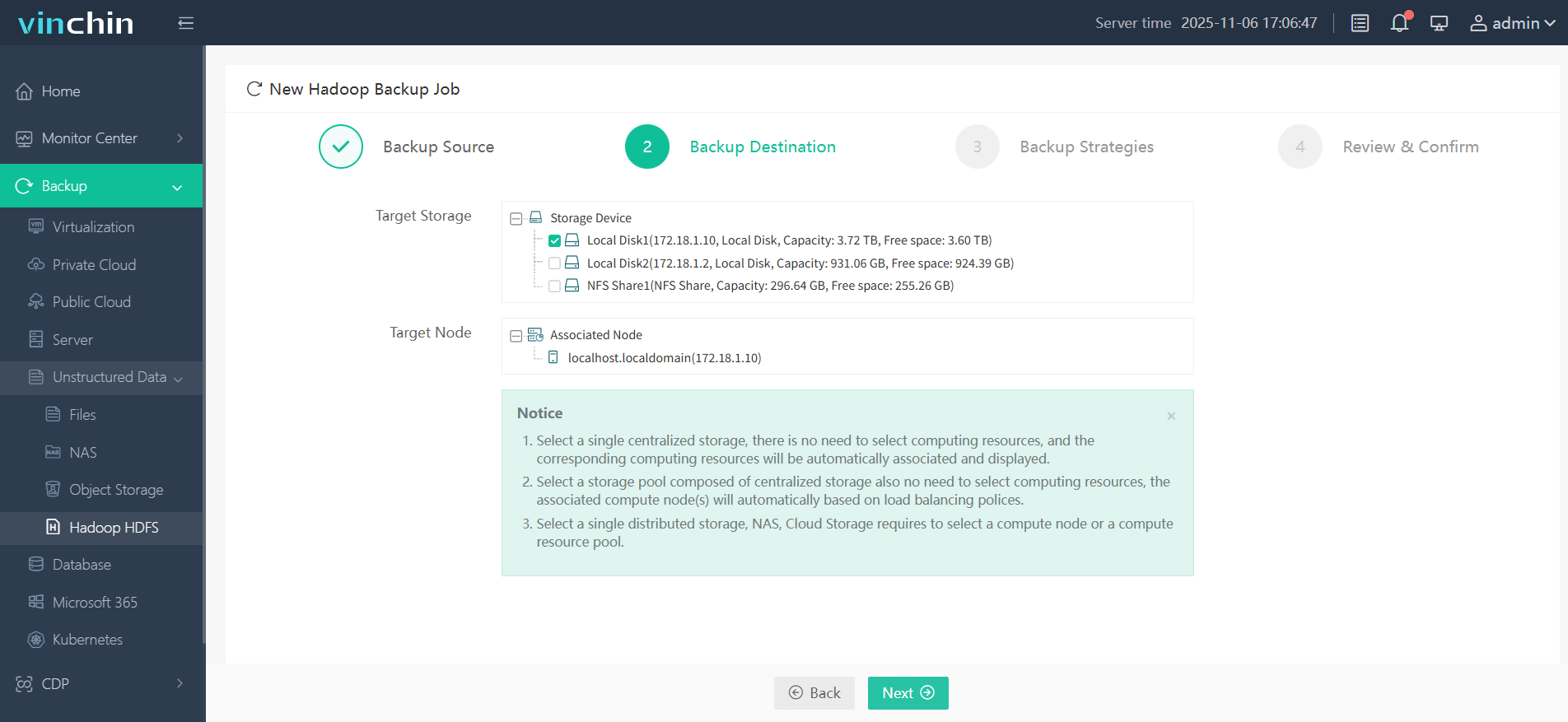

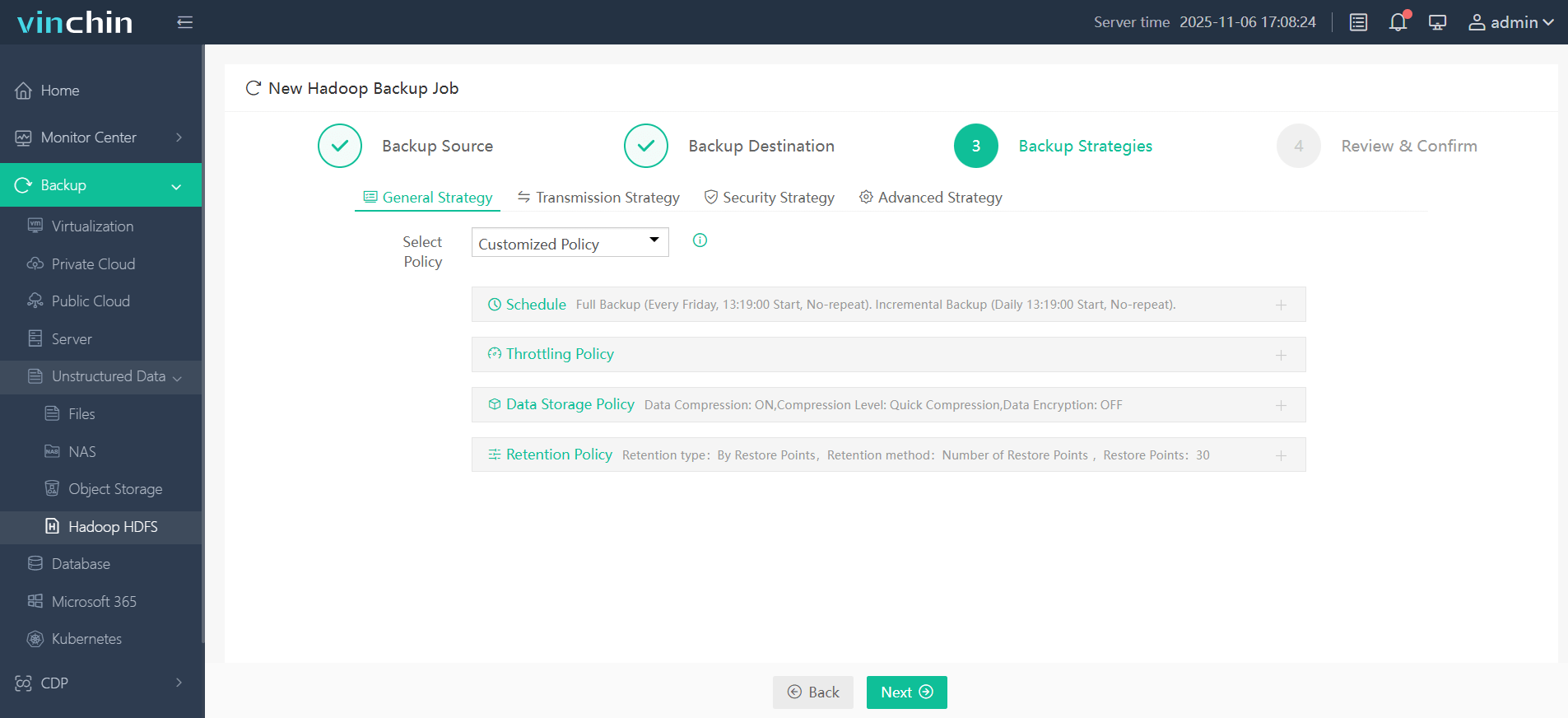

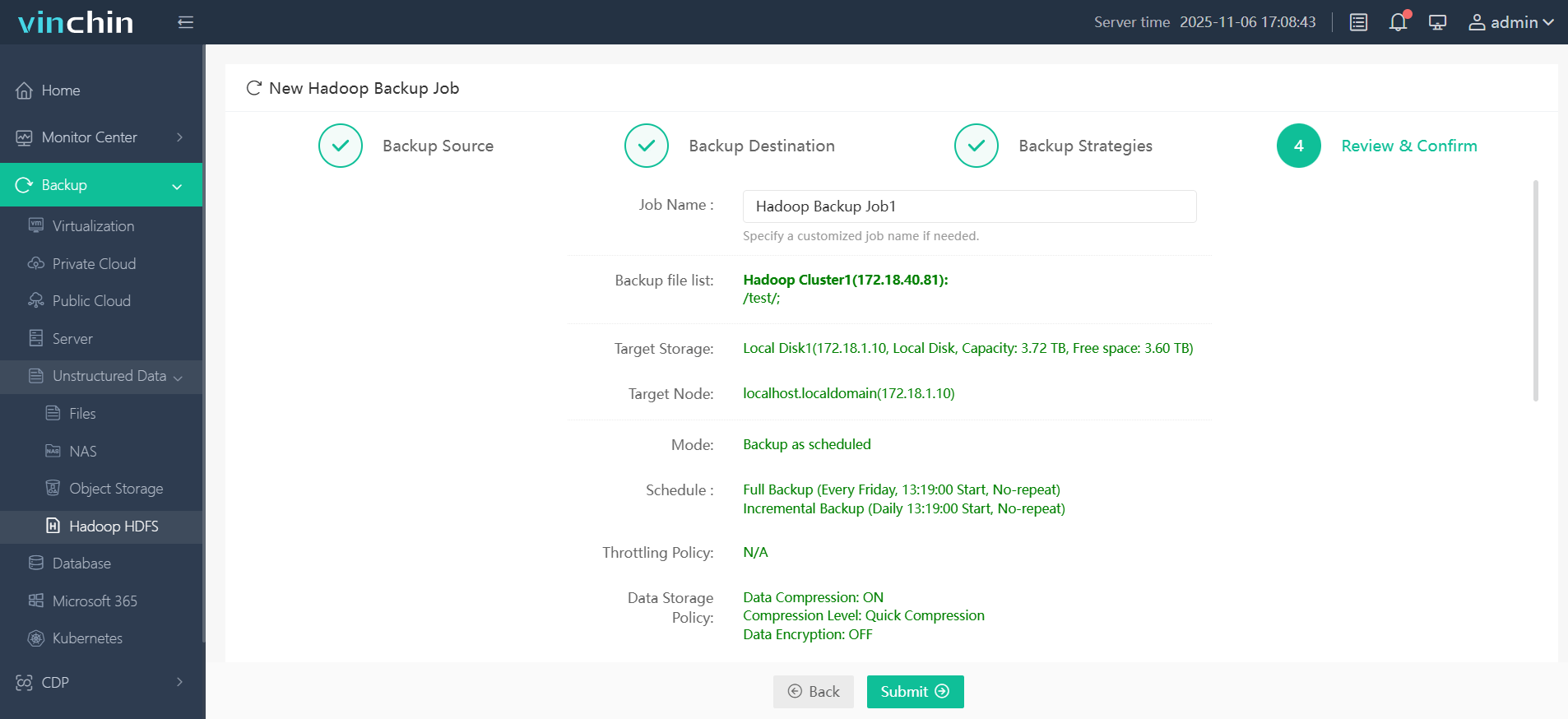

Vinchin Backup & Recovery offers an intuitive web console designed for simplicity. To back up your Hadoop HDFS files:

Step 1. Select the Hadoop HDFS files you wish to back up

Step 2. Choose your desired backup destination

Step 3. Define backup strategies tailored for your needs

Step 4. Submit the job

Join thousands of global enterprises who trust Vinchin Backup & Recovery, renowned worldwide with top ratings, for reliable data protection. Try all features free with a 60-day trial!

Hadoop Backup and Recovery FAQs

Q1: How do I automate daily incremental backups between two clusters?

A1: Schedule a recurring cron job that takes a new HDFS snapshot and runs DistCp with the snapshot diff option, so only changed files transfer each day without manual intervention.

Q2: What should I do if my restored dataset shows missing permissions?

A2: After restoring files or directories, verify that ACLs and user/group ownership match the originals using hdfs dfs -getfacl, and correct any differences with hdfs dfs -setfacl, hdfs dfs -chown, or hdfs dfs -chmod before resuming production workloads.
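One way to make that verification repeatable is to save an ACL listing alongside each backup and compare it with a fresh listing after the restore. The sketch below assumes the data is restored to its original path, so the path lines in both listings match; the function names dump_acls and acl_changes and the file names are hypothetical.

```shell
#!/bin/sh
# Sketch only: the helper names and file paths are illustrative.
set -eu

# Save the ACLs, owner and group of an HDFS tree to a local file (needs a live cluster).
dump_acls() {
  hdfs dfs -getfacl -R "$1" > "$2"
}

# Print the differences between two saved listings; prints nothing when they match.
# diff exits with 1 when the files differ, which is not an error here.
acl_changes() {
  diff "$1" "$2" || [ "$?" -eq 1 ]
}

# Typical use, with the restore written back to the original path:
#   dump_acls /data/important acls.before    # taken alongside each backup
#   dump_acls /data/important acls.after     # taken after the restore
#   acl_changes acls.before acls.after
```

Any line the comparison prints points at a file whose owner, group, or ACL entries need to be corrected before users return to the cluster.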

Q3: Can I perform consistent backups while active jobs write new data?

A3: Yes. Take an HDFS snapshot first, which captures the current state of the directory almost instantly, then run your backup tools against that read-only view to avoid conflicts and inconsistencies.

Conclusion

Protecting big data means planning ahead, not just hoping replication saves you! Native tools like snapshots and DistCp cover the basics, but advanced needs demand more flexibility and reliability. Vinchin delivers enterprise-grade simplicity plus peace of mind. Try our free trial today and safeguard what matters most!
