- What is Hadoop?
- What are Hadoop Components?
- Why Use Hadoop?
- How to Protect Hadoop Data with Vinchin Backup & Recovery?
- What is Hadoop FAQs
- Conclusion

Data is growing at an incredible rate worldwide. Every day, organizations collect logs, transactions, images, videos—more than traditional systems can handle alone. As these volumes explode, storing and processing such massive information becomes a challenge for IT teams everywhere. That’s where Hadoop steps in as a solution built for big data needs. But what is Hadoop exactly? Let’s break it down from basics to advanced details so you can see why it matters—and how to manage it well.

What is Hadoop?

Hadoop is an open-source software framework designed to store and process large datasets across clusters of computers using simple programming models. It solves the problem of handling data that outgrows single servers by connecting many standard machines—called nodes—to work together as one system.

Think of Hadoop’s storage layer like a distributed RAID array at the software level: files are split into blocks and spread across different servers so no single failure brings down your data. This approach makes it possible to store petabytes of information efficiently while keeping costs low since you use regular hardware instead of expensive specialized gear.

The project began in the mid-2000s, when Doug Cutting and Mike Cafarella drew inspiration from Google's published papers on the Google File System and MapReduce. They named it Hadoop after a toy elephant belonging to Cutting's son, and it became an Apache Software Foundation project, where it continues to evolve today.

Industries from finance to healthcare rely on Hadoop because it scales out as data grows and keeps data available when individual machines fail.

What are Hadoop Components?

To understand what makes Hadoop powerful, you need to know its core modules—each plays a unique role in managing big data workloads:

Hadoop Distributed File System (HDFS):

This is Hadoop's backbone for storage. HDFS breaks large files into blocks (often 128MB or 256MB each) and distributes them across multiple nodes in your cluster. Each block is replicated, three copies by default, so if one node fails, the data is still available on other nodes and HDFS restores the missing copies automatically.
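
As a rough illustration of the splitting and replication described above, the sketch below models a file being cut into blocks and each block being copied to several nodes. The round-robin placement, the node names, and the replication factor of 3 are assumptions chosen for illustration; real HDFS placement is rack-aware and decided by the NameNode.

```python
BLOCK_SIZE_MB = 128   # one of the block sizes mentioned above
REPLICATION = 3       # assumed replication factor


def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the size in MB of each block a file would be split into."""
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    sizes = [block_size_mb] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block only holds the leftover data
    return sizes


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Toy round-robin placement: each block goes to `replication` distinct nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


def survives_failure(placement, failed_node):
    """True if every block still has a replica on some other node."""
    return all(set(replicas) - {failed_node} for replicas in placement.values())


blocks = split_into_blocks(1000)  # a 1000 MB file
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
raw_storage_mb = sum(blocks) * REPLICATION  # space consumed across the cluster
```

Under these assumptions, a 1000 MB file becomes eight blocks (seven full blocks plus a 104 MB remainder) and consumes 3000 MB of raw capacity, which is the price paid for surviving the loss of any single node.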

MapReduce:

MapReduce is Hadoop's original engine for processing data at scale. It divides a job into smaller tasks that run in parallel across many nodes, then combines their results. This parallelism can shorten analysis of large datasets dramatically compared with processing them on a single machine.

The "Map" phase processes input data into key-value pairs.

The "Reduce" phase aggregates those pairs into final results.
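
The two phases can be sketched in plain Python with the classic word-count example. This is a single-process illustration of the programming model only, not the Hadoop API: the function names are hypothetical, and the `shuffle` step stands in for the grouping that the framework performs between the map and reduce phases.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: aggregate all values for one key into a final result."""
    return key, sum(values)


def word_count(lines):
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["big data big clusters", "data nodes"])
```

On a real cluster, each call to the map function would run on a different slice of the input, typically on the node that stores it, and the reducers would each receive a subset of the keys.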

While MapReduce remains foundational in classic Hadoop deployments, many modern clusters also run alternative engines such as Apache Spark, which is faster for some workloads. Keep this in mind if you are planning upgrades or migrations.

YARN (Yet Another Resource Negotiator):

YARN manages resources across the cluster, scheduling jobs according to the CPU and memory available on each node. It balances load to keep utilization high and helps the cluster stay responsive during peak demand.

The ResourceManager handles cluster-wide job assignment, while a NodeManager runs on each server and manages the work placed on that node. This split lets the cluster support diverse applications beyond batch analytics, including streaming and interactive queries.
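
To make the idea of resource-based scheduling concrete, here is a toy allocator that places units of work ("containers") on whichever node has the most free memory and still fits the request. It is a simplified sketch under assumed names and numbers; YARN's actual schedulers use queues, priorities, and locality preferences that are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_mem_mb: int
    free_vcores: int


def allocate(nodes, mem_mb, vcores):
    """Place one container on the fitting node with the most free memory."""
    candidates = [
        n for n in nodes if n.free_mem_mb >= mem_mb and n.free_vcores >= vcores
    ]
    if not candidates:
        return None  # nothing fits; the request would wait for resources
    chosen = max(candidates, key=lambda n: n.free_mem_mb)
    chosen.free_mem_mb -= mem_mb
    chosen.free_vcores -= vcores
    return chosen.name


nodes = [Node("node1", 8192, 4), Node("node2", 4096, 2)]
# Four identical requests of 4 GB and 2 vcores each.
placements = [allocate(nodes, 4096, 2) for _ in range(4)]
```

With these numbers, the first three requests fill both nodes and the fourth returns `None`, which mirrors a job waiting in the queue until capacity is released.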

Hadoop Common:

This module provides the shared libraries and utilities that the other modules depend on, such as configuration handling, file system abstractions, and communication between services. Keeping these in one place helps the components stay compatible with one another, which matters when you maintain large production environments over the long term.

An entire ecosystem has grown around these four pillars: Hive enables SQL-style queries, HBase offers NoSQL storage, Pig simplifies complex data-flow scripts, and Spark adds fast in-memory processing and near-real-time streaming analytics on top of the same infrastructure. All of them build on the foundation laid by HDFS, MapReduce, YARN, and Common.

Why Use Hadoop?

Hadoop addresses the fundamental challenges of storing and processing “big” data. Its value can be summarized in several areas:

1. Scalability: Hadoop is designed for horizontal scaling. Instead of buying bigger, more expensive machines, you add inexpensive commodity nodes to expand both storage and compute capacity. This makes it straightforward to go from tens of terabytes to many petabytes.

2. Cost-efficiency: Running on standard x86 hardware reduces capital and operational expenses compared with proprietary high-end systems. These economies of scale are one of Hadoop's strongest selling points.

3. Fault tolerance and availability: HDFS automatically replicates data across multiple nodes, and the compute framework can reschedule failed tasks. This built-in resilience reduces the need for expensive high-availability hardware.

4. Data locality: Hadoop schedules computation on or near the nodes that hold the data, significantly reducing network I/O and improving overall throughput for large batch jobs.

5. Schema-on-read and data variety: Hadoop accommodates structured, semi-structured, and unstructured data, enabling analytics without rigid upfront schemas.

6. Ecosystem and flexibility: A rich ecosystem (Hive, Pig, HBase, Spark, Flume, Sqoop, etc.) supports batch ETL, interactive queries, NoSQL storage, stream ingestion, and machine learning workloads, letting teams compose end-to-end data platforms.

7. Maturity and community: Years of production use, broad vendor support, and a large community provide stable, battle-tested patterns and integrations.
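
The data locality point in the list above can be sketched as a simple preference order: run the task on a node that already holds the block, otherwise on a node in the same rack, otherwise anywhere free. The function, node names, and rack map below are hypothetical and only illustrate the idea; they are not Hadoop's scheduler code.

```python
def pick_node(block_replicas, free_nodes, rack_of):
    """Choose where to run a task that reads one block.

    block_replicas: set of nodes storing a copy of the block
    free_nodes:     list of nodes that currently have spare capacity
    rack_of:        mapping of node name to rack name
    """
    # Best case: a free node already stores the block, so no network transfer.
    for node in free_nodes:
        if node in block_replicas:
            return node, "node-local"
    # Next best: a free node in the same rack as some replica.
    replica_racks = {rack_of[n] for n in block_replicas}
    for node in free_nodes:
        if rack_of[node] in replica_racks:
            return node, "rack-local"
    # Otherwise read across racks, or wait if nothing is free.
    if free_nodes:
        return free_nodes[0], "off-rack"
    return None, "wait"


rack_of = {"n1": "r1", "n2": "r1", "n3": "r2", "n4": "r2"}
local = pick_node({"n1", "n3"}, ["n2", "n3"], rack_of)
same_rack = pick_node({"n1"}, ["n4", "n2"], rack_of)
remote = pick_node({"n1"}, ["n3"], rack_of)
```

The further down this preference order a task falls, the more data has to cross the network, which is why locality matters most for large batch jobs.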

How to Protect Hadoop Data with Vinchin Backup & Recovery?

While robust storage architectures like Hadoop HDFS provide inherent resilience, comprehensive backup remains essential for true data protection. Vinchin Backup & Recovery is an enterprise-grade solution purpose-built for safeguarding mainstream file storage—including Hadoop HDFS environments—as well as Windows/Linux file servers, NAS devices, and S3-compatible object storage. Specifically optimized for large-scale platforms like Hadoop HDFS, Vinchin Backup & Recovery delivers exceptionally fast backup speeds that surpass competing products thanks to advanced technologies such as simultaneous scanning/data transfer and merged file transmission.

Among its extensive capabilities, five stand out as particularly valuable for protecting critical big-data assets: incremental backup (capturing only changed files), wildcard filtering (targeting specific datasets), multi-level compression (reducing space usage), cross-platform restore (recovering backups onto any supported target including other file servers/NAS/Hadoop/object storage), and integrity check (verifying backups remain unchanged). Together these features ensure efficient operations while maximizing security and flexibility across diverse infrastructures.
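
To show what incremental backup, wildcard filtering, and integrity checking mean in general terms, here is a small generic sketch over an in-memory file table. It is not Vinchin's implementation or API; the paths, timestamps, and function names are made up for illustration.

```python
import fnmatch
import hashlib


def select_for_backup(files, pattern, last_backup_time):
    """Return paths that match the wildcard and changed since the last backup."""
    return sorted(
        path
        for path, (mtime, _data) in files.items()
        if fnmatch.fnmatchcase(path, pattern) and mtime > last_backup_time
    )


def checksum(data):
    """SHA-256 digest used to detect later changes to backed-up bytes."""
    return hashlib.sha256(data).hexdigest()


def verify(backup, manifest):
    """Return paths whose stored bytes no longer match the recorded checksum."""
    return [p for p, data in backup.items() if checksum(data) != manifest[p]]


# Hypothetical file table: path -> (modification time, contents)
files = {
    "/data/logs/a.log": (100, b"old"),
    "/data/logs/b.log": (250, b"new"),
    "/data/tmp/c.tmp": (300, b"tmp"),
}
changed = select_for_backup(files, "/data/logs/*.log", last_backup_time=200)
backup = {p: files[p][1] for p in changed}
manifest = {p: checksum(d) for p, d in backup.items()}
intact = verify(backup, manifest)
```

Only `b.log` is selected here: `a.log` has not changed since the last backup, and `c.tmp` does not match the wildcard. A later mismatch between stored bytes and the manifest would show up in the list returned by `verify`.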

Vinchin Backup & Recovery offers an intuitive web console designed for simplicity. To back up your Hadoop HDFS files:

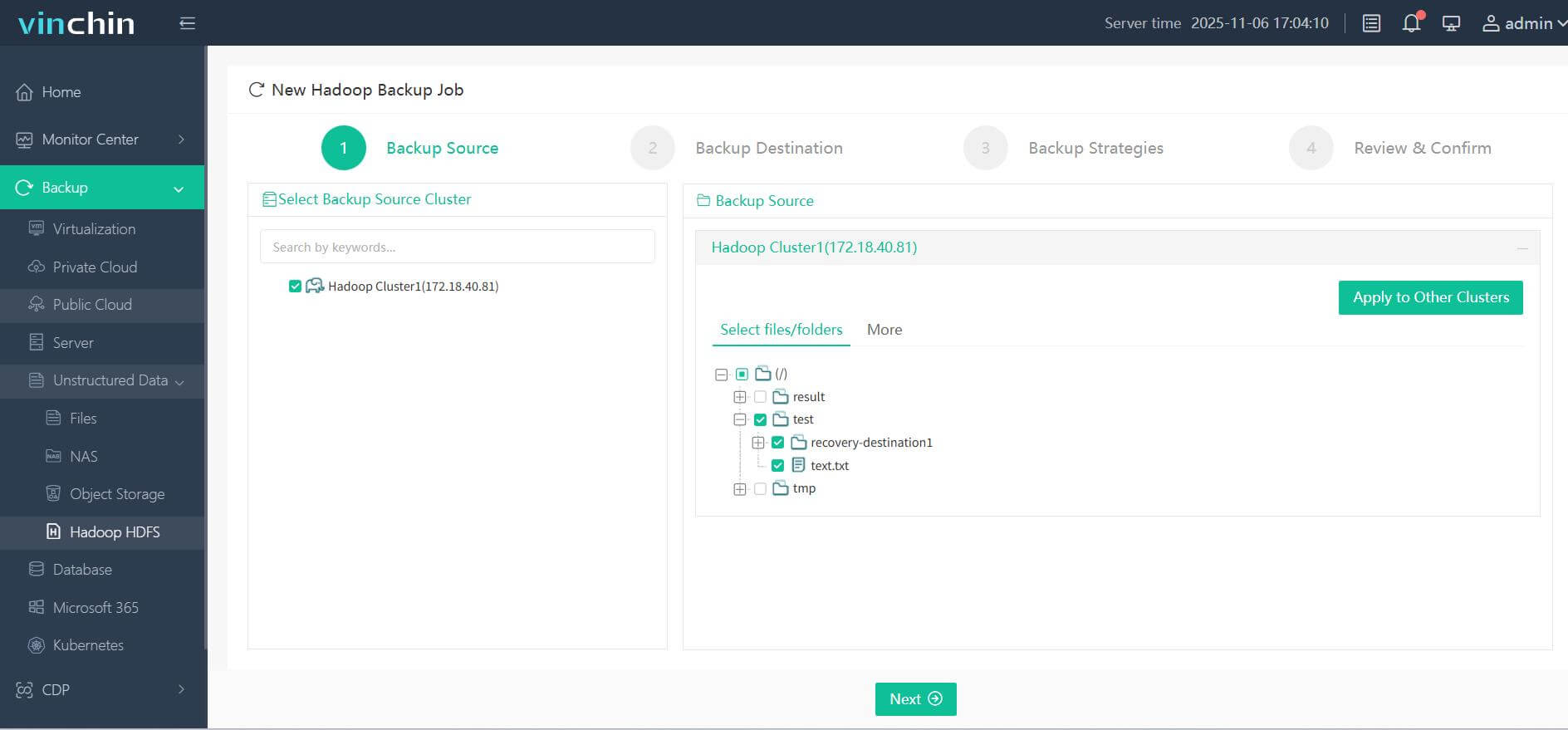

Step 1. Select the Hadoop HDFS files you wish to back up

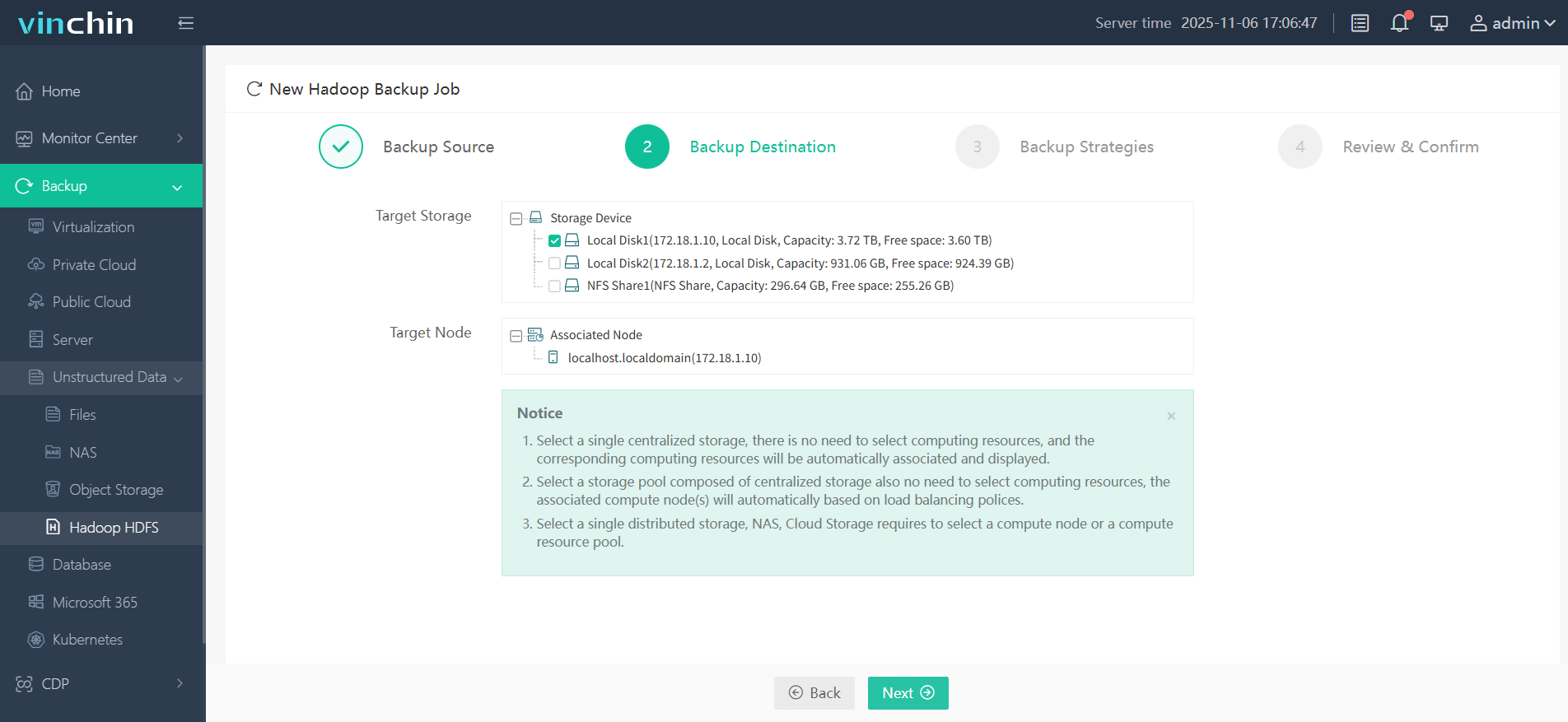

Step 2. Choose your desired backup destination

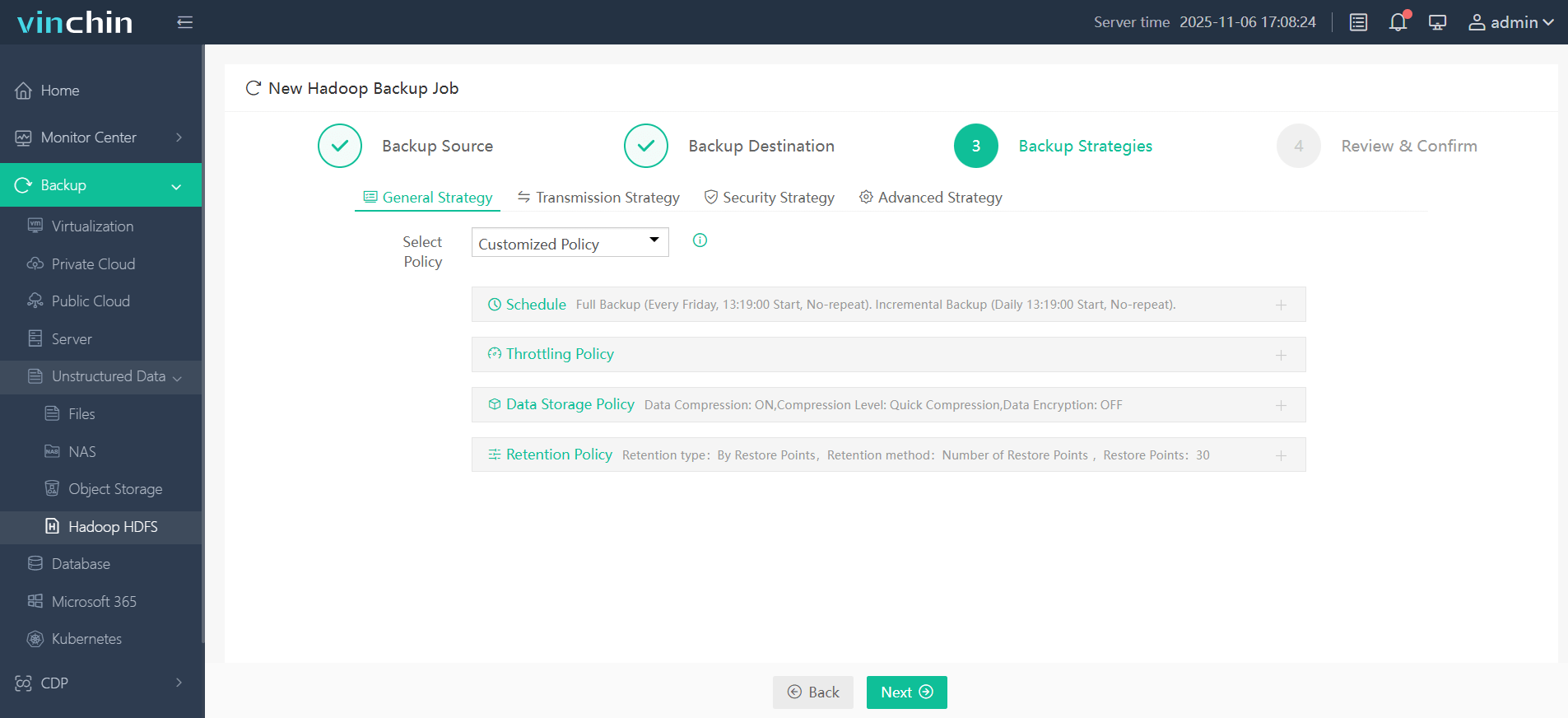

Step 3. Define backup strategies tailored for your needs

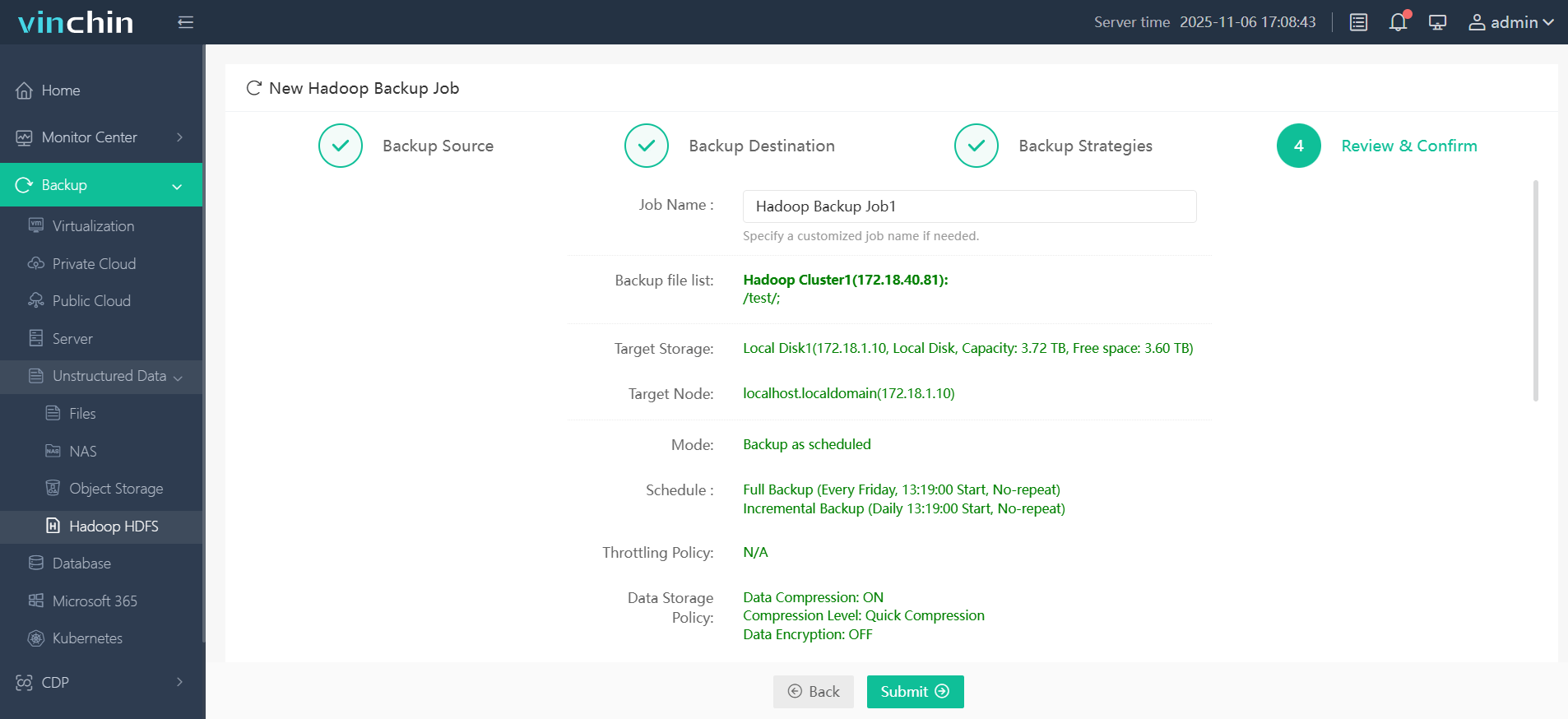

Step 4. Submit the job

Join thousands of global enterprises who trust Vinchin Backup & Recovery for reliable data protection. You can try all features free with a 60-day trial.

What is Hadoop FAQs

Q1: How does Hadoop handle data consistency during node failures?

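A1: If a DataNode fails during a write, the HDFS client removes it from the write pipeline and continues with the remaining DataNodes; the NameNode later re-replicates any under-replicated blocks from healthy copies. Namespace changes are recorded in the NameNode's edit log, so metadata stays consistent. If issues arise, check the HDFS logs (on many packaged installations these are under /var/log/hadoop-hdfs).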

Q2: Can I migrate my on-premises Hadoop cluster to cloud infrastructure?

A2: Yes, with careful planning around networking, firewall rules, data transfer speeds, and cloud provider compatibility. Test restores before switching production workloads over fully.

Q3: How do I monitor resource usage trends in my cluster?

A3: Use built-in web UIs such as the ResourceManager UI, or integrate third-party monitoring tools, and review cluster and application metrics regularly to spot trends.
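
For scripted monitoring, the ResourceManager also exposes cluster metrics over its REST API. The sketch below assumes the ResourceManager web address is reachable at a URL you supply (the host in the comment is a placeholder, and 8088 is only the usual default port) and computes memory utilization from the returned fields.

```python
import json
from urllib.request import urlopen


def fetch_cluster_metrics(rm_url):
    """Fetch cluster metrics from a ResourceManager.

    rm_url is a placeholder such as 'http://rm-host:8088'; adjust it to
    your own cluster's ResourceManager web address.
    """
    with urlopen(f"{rm_url}/ws/v1/cluster/metrics", timeout=10) as resp:
        return json.load(resp)["clusterMetrics"]


def memory_utilization(metrics):
    """Fraction of cluster memory currently allocated (0.0 to 1.0)."""
    total = metrics["totalMB"]
    return metrics["allocatedMB"] / total if total else 0.0


# Sample values standing in for a live response, so this runs without a cluster.
sample = {"totalMB": 16384, "allocatedMB": 4096, "availableMB": 12288}
utilization = memory_utilization(sample)
```

Recording this value at regular intervals, for example from a scheduled job, gives a simple trend line without depending on any particular dashboard.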

Conclusion

In short, Hadoop provides a scalable, cost-effective and fault-tolerant platform for storing and processing massive, diverse datasets. Backed by a rich ecosystem and data-local processing, it remains a practical foundation for large-scale batch analytics, ETL, and historical data workloads as organizations continue to grow their data.
