- What Is HDFS Backup and Restore?
- Why Perform HDFS Backup and Restore?
- Method 1: How to Use DistCp for HDFS Backup and Restore
- Method 2: How to Use HDFS Snapshots for Backup and Restore
- Introducing Vinchin Backup & Recovery – Enterprise File Protection Made Simple
- HDFS Backup And Restore FAQs
- Conclusion

Data drives every modern business decision. Hadoop Distributed File System (HDFS) stores massive datasets across many servers, making it powerful but also complex to protect. Backing up petabytes of distributed data is not simple—especially when you consider NameNode metadata, block distribution across nodes, or network bottlenecks that can slow down recovery after failure.

What happens if hardware fails or someone deletes critical files? That's where HDFS backup and restore comes in. In this guide, we'll explore why these processes matter, how they work, and which tools best fit your needs, from built-in commands to enterprise solutions.

What Is HDFS Backup and Restore?

HDFS backup and restore means creating safe copies of your Hadoop data so you can recover from loss or disaster. Unlike single-server file systems, HDFS spreads data blocks over many machines for speed and reliability—but this makes traditional backups tricky.

A good backup captures both your actual files (data blocks) and crucial metadata managed by the NameNode. Restoring means bringing back lost or corrupted data into a working state without missing dependencies or breaking applications that rely on it.

Why does this matter? Because even though HDFS replicates files across nodes for fault tolerance, replication alone cannot protect against accidental deletion, corruption from software bugs, ransomware attacks, or site-wide disasters like fire or flood.

Why Perform HDFS Backup and Restore?

Many admins think built-in replication is enough protection, but it isn't! Replication helps if one server goes down, but it won't save you from human error or from malware that corrupts every copy at once.

Here are some reasons why regular HDFS backup and restore procedures are essential:

Accidental deletions happen—even experts make mistakes.

Ransomware can encrypt all replicas unless you have isolated backups.

Hardware failures sometimes affect multiple disks at once.

Compliance rules may require offsite copies for legal reasons.

Testing restores ensures your business can bounce back quickly after an outage.

Without tested backups—and a plan to restore them—you risk losing valuable business data forever.

Method 1: How to Use DistCp for HDFS Backup and Restore

DistCp (“Distributed Copy”) is Hadoop’s standard tool for moving large amounts of data between clusters—or from one part of an HDFS system to another location such as cloud storage or local disk arrays. It uses MapReduce jobs under the hood so transfers run in parallel—ideal for big datasets spread over many servers.

Using DistCp for Backups

To perform an hdfs backup using DistCp:

1. Make sure the source ("production") and destination ("backup") clusters can reach each other over the network; DistCp's map tasks connect directly to the NameNode and DataNodes on both sides.

2. Check permissions: the user running the commands needs read access to the source paths and write access to the destination locations.

3. Create target directories ahead of time if needed, using hdfs dfs -mkdir -p.
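The three checks above can be scripted before each run. The sketch below assumes the hdfs client is on the PATH and configured for both clusters; the function name preflight is hypothetical, and hdfs dfs -test -e only proves that the path exists, not that every file under it is readable.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helper: preflight SRC_URI DST_URI
# Returns non-zero if the source is missing or the target cannot be created.
preflight() {
  local src="$1" dst="$2"
  # The source path must exist (and be visible to the user running the job).
  if ! hdfs dfs -test -e "$src"; then
    echo "source not found or not accessible: $src" >&2
    return 1
  fi
  # Create the target directory and any missing parents; this also fails
  # early if the user lacks write access on the destination cluster.
  if ! hdfs dfs -test -d "$dst"; then
    if ! hdfs dfs -mkdir -p "$dst"; then
      echo "cannot create target directory: $dst" >&2
      return 1
    fi
  fi
  echo "preflight ok: $src -> $dst"
}
```

For example, preflight hdfs://source-namenode:8020/source-path hdfs://dest-namenode:8020/dest-path && hadoop distcp ... runs the copy only when the checks pass.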

The basic command looks like this:

hadoop distcp hdfs://source-namenode:8020/source-path hdfs://dest-namenode:8020/dest-path

Replace source-namenode with your live cluster address; dest-namenode points to where backups go; adjust paths as needed per directory structure.

Want to keep big jobs from swamping production traffic? Use -bandwidth <MB/s> to cap the transfer rate of each map task, and -m <maps> to limit how many map tasks run at once:

hadoop distcp -m 20 -bandwidth 100 hdfs://source-namenode:8020/data hdfs://backup-cluster:8020/backup
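Because -bandwidth limits each map task rather than the whole job, the aggregate ceiling is roughly the number of maps times the per-map value: with -m 20 -bandwidth 100, up to about 20 × 100 = 2,000 MB/s. The hypothetical helper below works backward from a total budget you choose; it is a sketch, not part of DistCp.

```bash
#!/usr/bin/env bash
# per_map_bandwidth TOTAL_MB_PER_S MAPS (hypothetical helper)
# -bandwidth caps each map task, so the aggregate ceiling is roughly
# MAPS x bandwidth; this splits a total budget evenly across the maps.
per_map_bandwidth() {
  local total="$1" maps="$2"
  if (( maps < 1 )); then
    echo "maps must be >= 1" >&2
    return 1
  fi
  local bw=$(( total / maps ))   # integer MB/s per map
  if (( bw < 1 )); then
    bw=1                          # never pass 0 to -bandwidth
  fi
  echo "$bw"
}
```

For example, per_map_bandwidth 1000 20 prints 50, which you would pass as -m 20 -bandwidth 50 for a job-wide ceiling of about 1,000 MB/s.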

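For ongoing protection without re-copying unchanged files each time:

Add -update so that only files that are missing at the destination, or that differ from their destination copies, are transferred.

Use -overwrite if you want destination files replaced unconditionally.

With either flag, DistCp copies the contents of the source directory directly into the target directory instead of creating the source directory inside it, so keep the same source/target pair on every run.

Example:

hadoop distcp -update hdfs://prod:8020/user/data hdfs://backup:8020/archive/data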
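A minimal wrapper for the recurring incremental run might look like this. The function name is hypothetical; -pugp (preserve user, group, and permission) is a standard DistCp -p option, but preserving ownership generally requires running the job as the HDFS superuser on the destination.

```bash
#!/usr/bin/env bash
# incremental_backup SRC_URI DST_URI (hypothetical wrapper)
incremental_backup() {
  local src="$1" dst="$2"
  # -update copies only files that are missing from DST or differ from it.
  # -pugp preserves the owner (u), group (g) and permission bits (p), so a
  # later restore does not leave files owned by the backup user.
  # Keep the same SRC/DST pair on every run so the target layout stays stable.
  hadoop distcp -update -pugp "$src" "$dst"
}
```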

Handling Errors During DistCp Transfers

Network hiccups happen! If a job fails mid-transfer:

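1. Check the task logs with yarn logs -applicationId <application ID> to find the failed tasks.

2. Re-run the job with the -update flag, which skips files that were already copied intact.

3. If slow or overloaded nodes hold the job back, add -strategy dynamic: DistCp then splits the file list into small chunks that map tasks claim as they finish, so faster maps take on more of the work.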
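The recovery steps above can be combined into a retry loop. This is a sketch under assumptions: the job uses -update from the very first attempt (so the target layout never changes between attempts), the retry count and the RETRY_DELAY back-off are hypothetical values you would tune, and hadoop is on the PATH.

```bash
#!/usr/bin/env bash
# distcp_with_retry MAX_ATTEMPTS SRC_URI DST_URI (hypothetical helper)
distcp_with_retry() {
  local max="$1" src="$2" dst="$3" attempt=1
  while :; do
    # -update skips files that already arrived intact on an earlier attempt;
    # -strategy dynamic lets faster map tasks pick up more of the remaining work.
    if hadoop distcp -update -strategy dynamic "$src" "$dst"; then
      echo "distcp succeeded on attempt $attempt"
      return 0
    fi
    if (( attempt >= max )); then
      echo "distcp failed after $attempt attempts" >&2
      return 1
    fi
    attempt=$(( attempt + 1 ))
    sleep "${RETRY_DELAY:-60}"   # hypothetical back-off in seconds
  done
}
```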

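Always check the exit code. Testing the distcp command directly in the if statement avoids reading a stale $? left by some later command:

if hadoop distcp -update hdfs://prod:8020/user/data hdfs://backup:8020/archive/data; then echo "Backup succeeded"; else echo "Backup failed"; fi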

This helps automate alerts in scripts so issues don’t go unnoticed overnight!
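A slightly fuller version of the exit-code check records every run and raises an alert on failure. The alert function here is a hypothetical placeholder that only writes to stderr; replace it with your mail, chat, or monitoring hook.

```bash
#!/usr/bin/env bash
# Hypothetical alert hook: replace with mail, chat, or monitoring integration.
alert() { echo "ALERT: $*" >&2; }

# run_logged LOGFILE CMD [ARGS...]: runs a backup command, appends a
# timestamped line with its exit code to LOGFILE, and alerts on failure.
run_logged() {
  local log="$1"; shift
  local status=0
  "$@" || status=$?   # capture the exit code immediately
  printf '%s exit=%d cmd=%s\n' "$(date -u +%FT%TZ)" "$status" "$*" >> "$log"
  if (( status != 0 )); then
    alert "backup command failed with exit code $status: $*"
  fi
  return "$status"
}
```

For example, run_logged /var/log/hdfs-backup.log hadoop distcp -update hdfs://prod:8020/user/data hdfs://backup:8020/archive/data (the log path is only an illustration).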

Using DistCp for Restores

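Restoring works just like backing up: reverse the source and destination paths. Keep -update so the backup's contents land back in /user/data itself rather than in a nested subdirectory:

hadoop distcp -update hdfs://backup:8020/archive/data hdfs://prod:8020/user/data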

You can target specific folders/files by adjusting path arguments accordingly—for example restoring only /user/data/reports/2024.
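Restoring a single subtree, such as reports/2024, can be wrapped the same way. The sketch assumes the backup mirrors the production layout under a common root, as in the -update backup example; the function and argument names are hypothetical. Using -update with matching paths matters: without it, when the target directory already exists, DistCp creates the source directory inside it, producing a nested .../2024/2024. Files that exist only on the production side are left untouched.

```bash
#!/usr/bin/env bash
# restore_subtree BACKUP_ROOT PROD_ROOT REL_PATH (hypothetical helper)
restore_subtree() {
  local backup_root="$1" prod_root="$2" rel="$3"
  # With -update and identical relative paths, the contents of the backup
  # directory are copied into the production directory of the same name.
  hadoop distcp -update "${backup_root}/${rel}" "${prod_root}/${rel}"
}
```

For example, restore_subtree hdfs://backup:8020/archive/data hdfs://prod:8020/user/data reports/2024 restores only /user/data/reports/2024.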

DistCp shines when moving terabytes between clusters—but remember both ends must be available during transfer! Also ensure enough bandwidth exists between sites; otherwise jobs may take hours—or days—to complete depending on dataset size.

Method 2: How to Use HDFS Snapshots for Backup and Restore

Snapshots offer point-in-time "pictures" of any snapshottable directory in HDFS, a lifesaver when someone accidentally deletes important files! Creating one is nearly instantaneous because HDFS copies no data blocks: it only records metadata, and the snapshot keeps a reference to any block that is later deleted or truncated in the live directory, so that block is not freed until the snapshot itself is deleted.

Enabling Snapshots

Only users with superuser privileges can enable snapshots:

hdfs dfsadmin -allowSnapshot /path/to/directory

This step must be done before any snapshots are created.
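To make scripted setup safe to re-run, you can check whether a directory is already snapshottable before calling -allowSnapshot. The sketch parses hdfs lsSnapshottableDir, assuming its usual layout with the directory path as the last field on each line; the function names are hypothetical, and it should run as the HDFS superuser.

```bash
#!/usr/bin/env bash
# is_snapshottable DIR: succeeds if DIR is listed by `hdfs lsSnapshottableDir`
# (the directory path is assumed to be the last field of each line).
is_snapshottable() {
  hdfs lsSnapshottableDir 2>/dev/null \
    | awk -v d="$1" '$NF == d { found = 1 } END { exit !found }'
}

# ensure_snapshottable DIR: enables snapshots only if they are not enabled yet.
ensure_snapshottable() {
  local dir="$1"
  if is_snapshottable "$dir"; then
    echo "already snapshottable: $dir"
  else
    hdfs dfsadmin -allowSnapshot "$dir"
  fi
}
```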

Creating Snapshots

Once enabled:

hdfs dfs -createSnapshot /path/to/directory [snapshotName]

If you omit snapshotName, HDFS generates a default name from the current timestamp in the form sYYYYMMDD-HHMMSS.SSS (for example, s20130412-151029.033).
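For scheduled snapshots you may prefer your own predictable names over the default. The sketch below uses a hypothetical prefix-plus-UTC-timestamp convention (for example nightly-20240101-020000) so that names sort chronologically; the names have only one-second resolution, so it is meant for scheduled jobs rather than rapid-fire use.

```bash
#!/usr/bin/env bash
# snapshot_name [PREFIX]: hypothetical convention, PREFIX plus a UTC timestamp,
# so names sort chronologically (useful for later cleanup).
snapshot_name() {
  echo "${1:-nightly}-$(date -u +%Y%m%d-%H%M%S)"
}

# create_snapshot DIR [PREFIX]: creates the snapshot and prints its name.
create_snapshot() {
  local dir="$1" name
  name=$(snapshot_name "${2:-nightly}")
  # Discard the "Created snapshot ..." message so callers capture only the name.
  hdfs dfs -createSnapshot "$dir" "$name" > /dev/null || return 1
  echo "$name"
}
```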

Restoring Data from Snapshots

To recover lost/deleted items:

hdfs dfs -cp /path/to/directory/.snapshot/snapshotName/file /path/to/directory/file

This command copies content out of snapshot view back into its original place—or anywhere else in the filesystem.
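Wrapping the copy avoids typos in the long .snapshot path. In this hypothetical helper, -f lets the copy replace a damaged file that still exists at the target, and -p preserves timestamps, ownership, and permissions (restoring another user's ownership may need superuser rights). Restore whole directories to a new path instead: if the target directory already exists, hdfs dfs -cp places the copy inside it.

```bash
#!/usr/bin/env bash
# restore_from_snapshot DIR SNAPSHOT REL_PATH [TARGET] (hypothetical helper)
# Copies REL_PATH out of DIR/.snapshot/SNAPSHOT, back to DIR/REL_PATH by
# default, or to TARGET if one is given.
restore_from_snapshot() {
  local dir="$1" snap="$2" rel="$3"
  local src="${dir}/.snapshot/${snap}/${rel}"
  local dst="${4:-${dir}/${rel}}"
  # -f overwrites an existing file at the target;
  # -p preserves timestamps, ownership and permissions.
  hdfs dfs -cp -f -p "$src" "$dst"
}
```

For example, restore_from_snapshot /data/warehouse nightly-20240101-020000 sales/orders.csv copies the file back to /data/warehouse/sales/orders.csv (directory and snapshot names are illustrative).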

Deleting Old Snapshots

Snapshots consume extra space only for blocks they keep alive after those blocks were deleted or truncated in the live directory, and that space grows over time, so clean them up periodically!

hdfs dfs -deleteSnapshot /path/to/directory snapshotName
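A simple retention policy keeps the newest N snapshots and deletes the rest. This sketch assumes timestamped snapshot names that sort chronologically (such as nightly-20240101-020000) and the usual hdfs dfs -ls output, where the last field is the snapshot path; the function name and default prefix are hypothetical. Snapshots with other names, such as manual ones, are never touched.

```bash
#!/usr/bin/env bash
# prune_snapshots DIR KEEP [PREFIX] (hypothetical retention helper)
# Keeps the newest KEEP snapshots whose names start with PREFIX
# (default "nightly-") and deletes the older ones.
prune_snapshots() {
  local dir="$1" keep="$2" prefix="${3:-nightly-}"
  local names total
  # Keep only lines whose last field is a .snapshot path (skips the
  # "Found N items" header), then strip everything up to the snapshot name.
  names=$(hdfs dfs -ls "${dir}/.snapshot" 2>/dev/null \
    | awk '$NF ~ /\/\.snapshot\// {print $NF}' | sed 's#.*/##' \
    | grep "^${prefix}" | sort)
  total=$(printf '%s\n' "$names" | grep -c .)
  if (( total <= keep )); then
    return 0
  fi
  # Oldest names sort first, so delete the first (total - keep) of them.
  printf '%s\n' "$names" | head -n "$(( total - keep ))" | while read -r snap; do
    hdfs dfs -deleteSnapshot "$dir" "$snap" < /dev/null
  done
}
```

For example, prune_snapshots /data/warehouse 7 keeps the seven newest nightly-* snapshots.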

Comparing DistCp vs Snapshots

Let’s summarize key differences:

| Feature | DistCp | Snapshots |
|---|---|---|
| Scope | Cluster-to-cluster/offsite | Same cluster only |
| Speed | Slower (large transfers) | Instant creation |
| Space efficiency | Full/incremental possible | Only changed blocks stored |
| Disaster recovery | Yes | No |
| Automation | Scriptable | Scriptable |

Both methods play vital roles—a robust plan often uses both together!
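One common way to combine the two is to take a snapshot and point DistCp at the read-only snapshot path, so the offsite copy reflects a single point in time even while jobs keep writing to the live directory. The sketch below is one possible arrangement, not a prescribed procedure; the function name and the offsite- naming convention are hypothetical.

```bash
#!/usr/bin/env bash
# consistent_offsite_backup DIR DST_URI (hypothetical helper)
# Takes a snapshot, then copies from the read-only snapshot path, so files that
# change in the live directory during the copy cannot make the backup inconsistent.
consistent_offsite_backup() {
  local dir="$1" dst="$2" name
  name="offsite-$(date -u +%Y%m%d-%H%M%S)"   # hypothetical naming convention
  hdfs dfs -createSnapshot "$dir" "$name" > /dev/null || return 1
  # With -update, the snapshot's contents land directly in DST.
  hadoop distcp -update "${dir}/.snapshot/${name}" "$dst" || return 1
  echo "$name"   # print the name so a retention job can prune it later
}
```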

Introducing Vinchin Backup & Recovery – Enterprise File Protection Made Simple

While native tools provide foundational coverage for HDFS environments, organizations managing large-scale file systems often need solutions designed specifically for enterprise requirements. Vinchin Backup & Recovery is a professional-grade file backup solution that supports most mainstream file storage, including Windows/Linux file servers, NAS devices, S3 object storage platforms, and, most relevant here, Hadoop-based infrastructure such as HDFS itself. Vinchin attributes its fast file-backup speeds to proprietary technologies such as simultaneous scanning and transmission and merged file transmission, which it says accelerate throughput even at scale.

Among its extensive feature set are incremental backups support (for efficient change-only protection), wildcard filtering (target precise datasets), multi-level compression (save space), cross-platform restore capabilities (restore any backup directly onto file server/NAS/Hadoop/object storage), and robust integrity checks ensuring restored data matches its original state exactly—all combining to deliver secure flexibility while minimizing resource impact across hybrid environments.

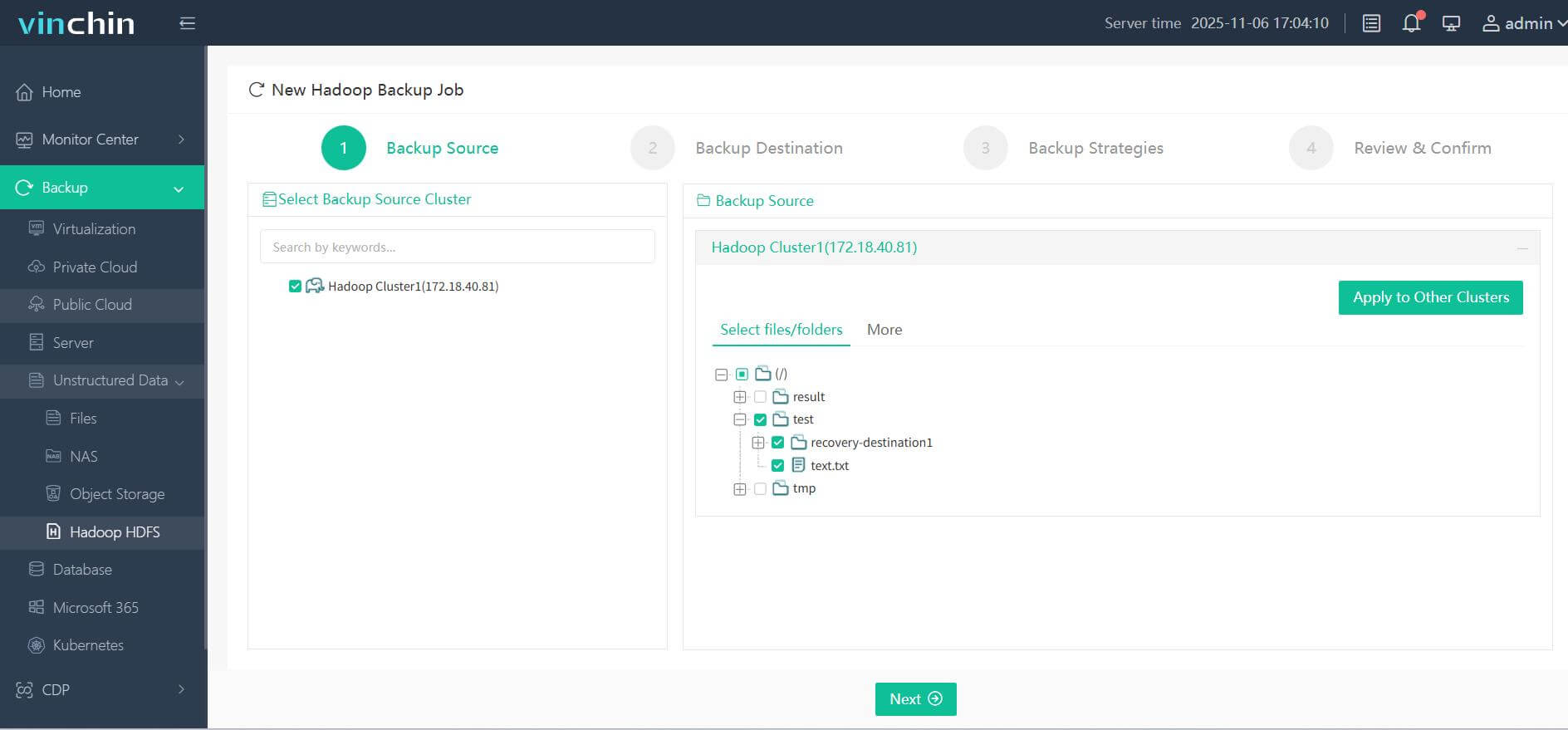

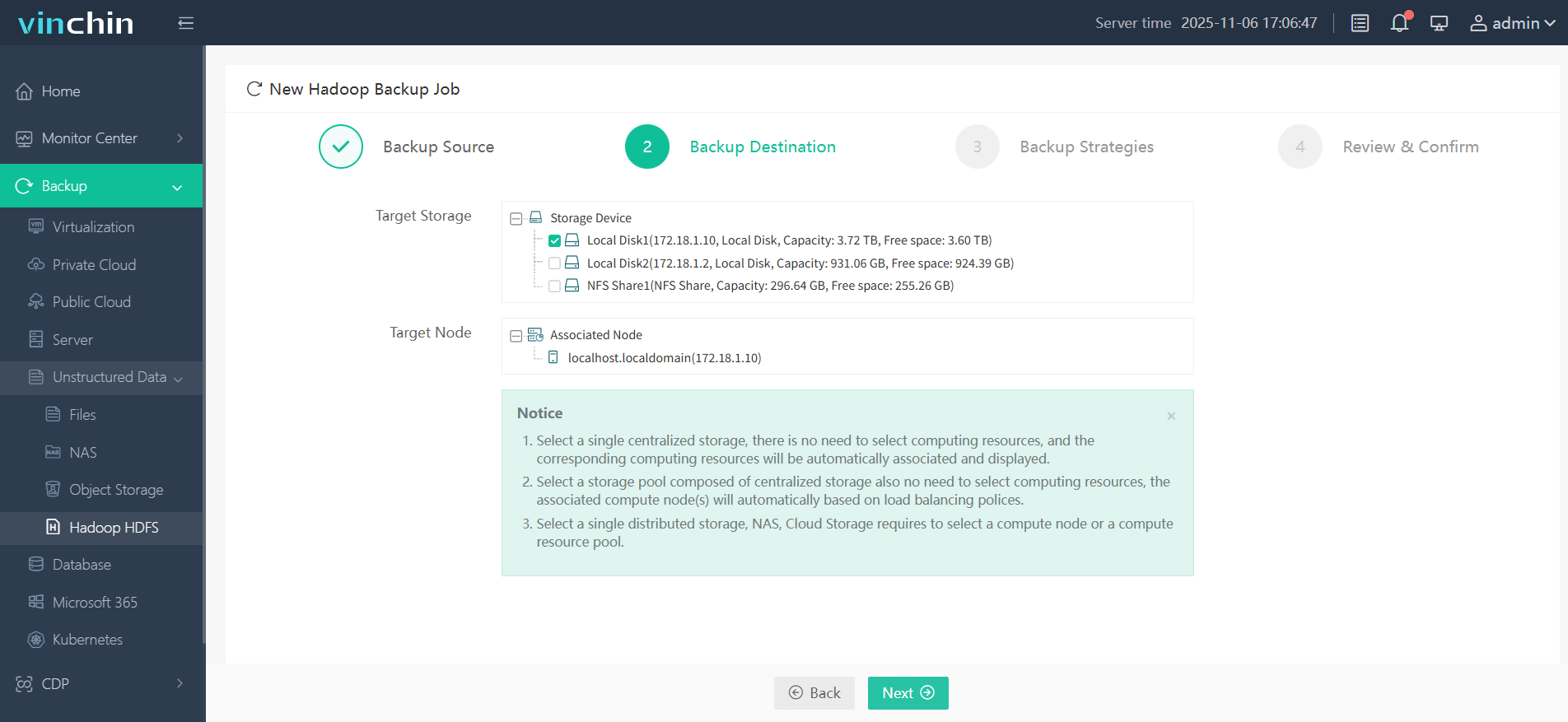

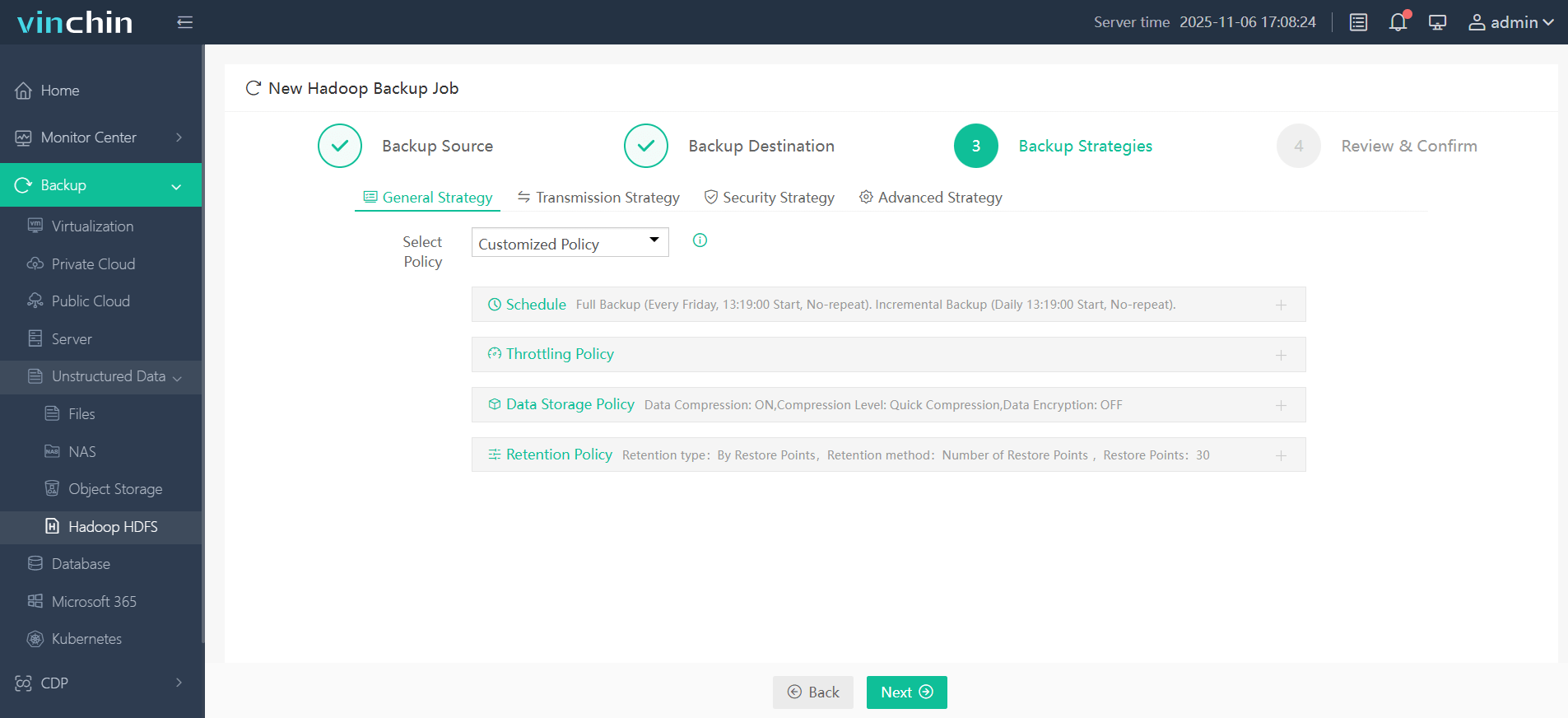

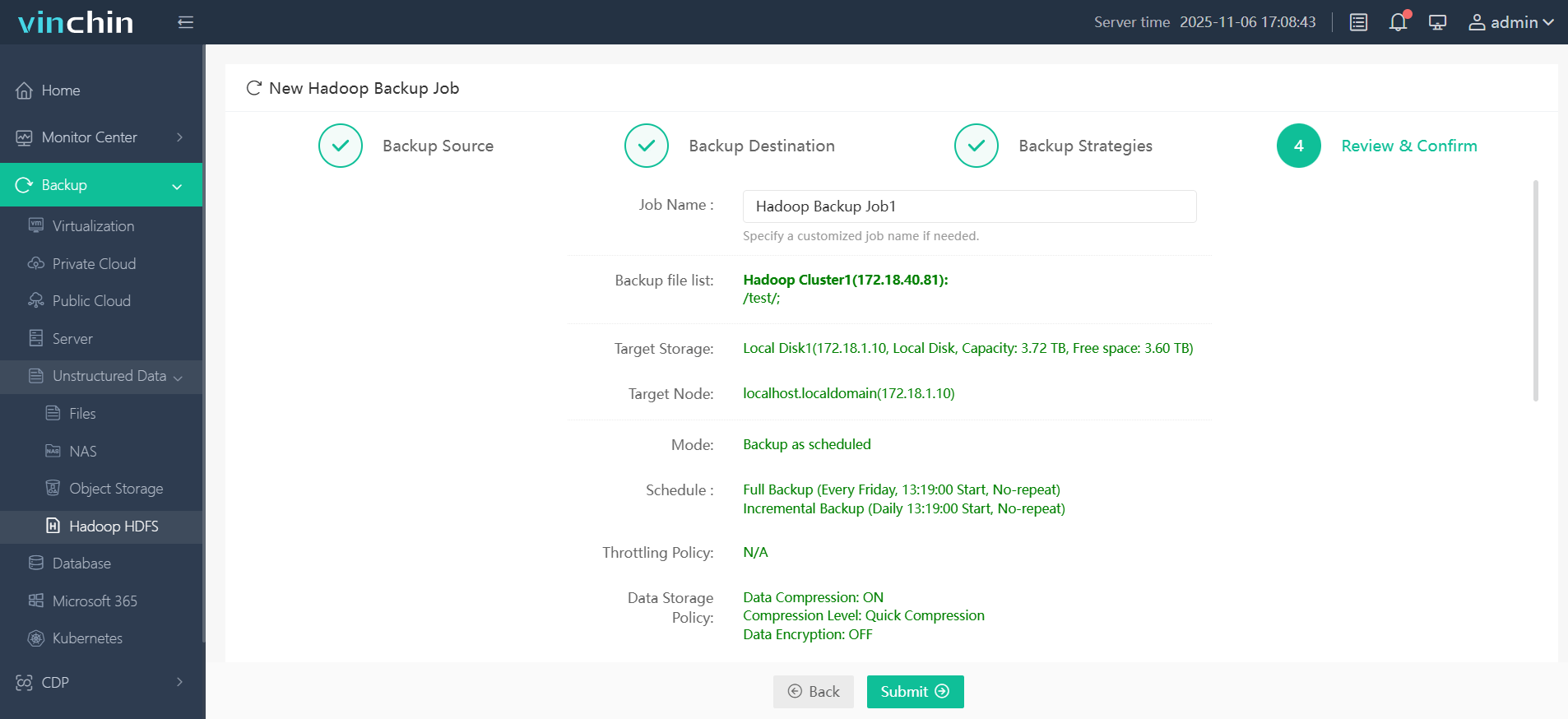

Vinchin Backup & Recovery features an intuitive web console that streamlines operations into four clear steps tailored perfectly for Hadoop clusters:

Step 1. Select the Hadoop HDFS files you wish to back up

Step 2. Choose your desired backup destination

Step 3. Define backup strategies tailored for your needs

Step 4. Submit the job

Recognized globally by thousands of enterprises, with top ratings in industry reviews, Vinchin Backup & Recovery offers a full-featured free trial valid for 60 days, so you can experience its enterprise data protection firsthand.

HDFS Backup And Restore FAQs

Q1: How do I verify integrity after running a DistCp-based backup?

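A1: DistCp already compares source and target checksums for each copied file unless you pass -skipcrccheck, so simply leave that flag off (it takes no true/false value). If the clusters use different block sizes, add -pb to preserve block size; otherwise the comparison can fail for identical data. After the copy, run hdfs fsck on the backup path to confirm that no blocks are missing or corrupt, and spot-check individual files by comparing hadoop fs -checksum output on both clusters.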
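To spot-check individual files after a copy, you can compare hadoop fs -checksum output from both clusters. The sketch assumes the usual output of path, algorithm name, and checksum value, and compares only the last two fields because the paths differ; the function name is hypothetical. Checksums are only comparable when block size and checksum settings match on both sides, for example copies made with -pb.

```bash
#!/usr/bin/env bash
# same_checksum SRC_URI DST_URI (hypothetical helper): succeeds when both files
# report the same checksum algorithm and value. `hadoop fs -checksum` prints
# <path> <algorithm> <checksum>; the path differs between clusters, so only
# the last two fields are compared.
same_checksum() {
  local a b
  a=$(hadoop fs -checksum "$1" 2>/dev/null | awk '{print $(NF-1), $NF}')
  b=$(hadoop fs -checksum "$2" 2>/dev/null | awk '{print $(NF-1), $NF}')
  # Empty output means no checksum could be read (missing file, directory, ...).
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```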

Q2: What's the recommended frequency for scheduling full versus incremental HDFS backups?

A2: Run full backups weekly/monthly depending on dataset growth; schedule incrementals daily/hourly based on RPO requirements set by business needs—not just technical limits!

Q3: What steps should I follow if my scheduled nightly snapshot fails due to quota limits?

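A3: First check the directory's quota and usage with hdfs dfs -count -q <directory>. Then either raise the space quota with hdfs dfsadmin -setSpaceQuota <N> <directory> (superuser only), or delete snapshots you no longer need with hdfs dfs -deleteSnapshot <directory> <snapshotName> to free blocks that only those snapshots still reference. Finally, rerun hdfs dfs -createSnapshot.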
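Checking the quota can be automated so that a nightly job verifies headroom before creating a snapshot. The sketch reads the fourth column (REM_SPACE_QUOTA) of hdfs dfs -count -q, which shows inf when no space quota is set; remember that space quota counts raw bytes across all replicas. The function names and the threshold are hypothetical.

```bash
#!/usr/bin/env bash
# remaining_space_quota DIR: prints the remaining space quota in bytes, or "inf"
# when no space quota is set. `hdfs dfs -count -q` prints the columns
# QUOTA REM_QUOTA SPACE_QUOTA REM_SPACE_QUOTA DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME.
remaining_space_quota() {
  hdfs dfs -count -q "$1" | awk '{print $4}'
}

# quota_low DIR MIN_BYTES: succeeds when a space quota is set and fewer than
# MIN_BYTES remain (MIN_BYTES is a threshold you choose).
quota_low() {
  local rem
  rem=$(remaining_space_quota "$1")
  [ -n "$rem" ] && [ "$rem" != "inf" ] && (( rem < $2 ))
}
```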

Conclusion

Protecting big-data environments requires more than default replication settings: robust HDFS backup and restore practices keep businesses resilient against loss or attack. Native tools cover the basics, while Vinchin delivers advanced automation plus security features trusted worldwide. Try it today!
