- What Is Hadoop HDFS?
- How Hadoop HDFS Works
- Features of Hadoop HDFS
- Why Choose Hadoop HDFS for Big Data
- How to Protect Hadoop HDFS Files with Vinchin Backup & Recovery
- Hadoop HDFS FAQs
- Conclusion

Managing massive data sets is a challenge for any modern enterprise. Hadoop HDFS makes it possible to store big data at scale, and keep it available for processing, using clusters of ordinary hardware instead of expensive servers. But what exactly is Hadoop HDFS, and why do so many organizations trust it with their most valuable information? Let’s break down its core concepts step by step, from basics to advanced operations, so you can make informed decisions about your storage strategy.

What Is Hadoop HDFS?

Hadoop HDFS stands for Hadoop Distributed File System, the primary storage layer of the Apache Hadoop ecosystem. Unlike traditional file systems that run on a single machine, HDFS spreads very large files (typically gigabytes to terabytes each, adding up to petabytes across a cluster) over many computers that work together as one unit.

This distributed approach means you don’t need high-end hardware; you can use regular servers connected through standard networks. Files stored in HDFS are split into large blocks (usually 128 MB or more), which are then distributed across multiple nodes in the cluster for both speed and safety.
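To make the block arithmetic concrete, here is a minimal Python sketch that maps a file of a given size onto fixed-size blocks. It assumes the 128 MB default (dfs.blocksize = 134217728 bytes in Hadoop 2.x and 3.x); the function name split_into_blocks is hypothetical and not part of any Hadoop API.

```python
DEFAULT_BLOCK_SIZE = 128 * 1024 * 1024  # 128 MiB, the usual dfs.blocksize default


def split_into_blocks(file_size: int, block_size: int = DEFAULT_BLOCK_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) pairs, one per block of the file.

    Hypothetical helper for illustration: every block is full-sized except
    possibly the last one, which holds only the remaining bytes.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    size = 1024**3 + 10 * 1024**2           # a 1 GiB + 10 MiB file
    blocks = split_into_blocks(size)
    print(len(blocks))                      # 9: eight full blocks plus a 10 MiB tail
    print(blocks[-1][1] // 1024**2)         # 10
```

Note that in HDFS the short final block only occupies as much disk space as the data it holds, so small tails do not waste a full block of storage.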

HDFS has evolved since its early days to meet growing enterprise demands. For example, HDFS Federation lets multiple independent NameNodes each manage a separate part of the namespace, so metadata capacity and throughput are no longer limited by a single NameNode.
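With Federation, each NameNode owns an independent namespace volume, and clients commonly see one unified directory tree through a client-side mount table (ViewFs). The Python sketch below models only the routing idea, a longest-prefix match from path to NameNode. The mount points and host names are hypothetical, and real ViewFs is configured through fs.viewfs.mounttable.* properties rather than code like this.

```python
# Hypothetical mount table: path prefix -> NameNode that owns that namespace.
MOUNT_TABLE = {
    "/user": "hdfs://nn1.example.com:8020",
    "/data": "hdfs://nn2.example.com:8020",
    "/data/logs": "hdfs://nn3.example.com:8020",
}


def resolve(path: str, mounts: dict[str, str] = MOUNT_TABLE) -> str:
    """Return the NameNode URI responsible for `path` (longest matching prefix)."""
    best = None
    for prefix in mounts:
        # Match whole path components only, so "/data" does not match "/database".
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    if best is None:
        raise KeyError(f"no mount point covers {path}")
    return mounts[best]


if __name__ == "__main__":
    print(resolve("/data/logs/2024/app.log"))  # hdfs://nn3.example.com:8020
    print(resolve("/data/events/part-0000"))   # hdfs://nn2.example.com:8020
```

The longest-prefix rule is what lets a busy subtree such as /data/logs be moved to its own NameNode without changing the paths applications use.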

How Hadoop HDFS Works

At its heart, Hadoop HDFS uses a master/worker architecture designed to stay reliable and efficient even when individual machines fail.

The central controller is the NameNode. It manages all file system metadata: the directory tree, permissions, the mapping of each file to its blocks, and the locations of those blocks on DataNodes throughout the cluster.

Worker nodes called DataNodes store the blocks on their local disks. They serve read and write requests directly from clients and, on instruction from the NameNode, create, delete, and replicate blocks.
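The division of labor can be pictured as two lookup tables held by the NameNode: file path to an ordered list of block IDs, and block ID to the DataNodes currently holding a replica. The Python sketch below is a simplified model with hypothetical class and method names. In real HDFS the file-to-block mapping is persisted in the fsimage and edit log, while block locations are rebuilt in memory from the block reports DataNodes send.

```python
from dataclasses import dataclass, field


@dataclass
class NameNodeModel:
    """Toy model of NameNode metadata (hypothetical, for illustration only)."""
    files: dict[str, list[int]] = field(default_factory=dict)     # path -> block IDs
    locations: dict[int, set[str]] = field(default_factory=dict)  # block ID -> DataNodes

    def add_file(self, path: str, block_ids: list[int]) -> None:
        self.files[path] = list(block_ids)

    def block_report(self, datanode: str, block_ids: list[int]) -> None:
        """Record that `datanode` holds these blocks, like a DataNode block report."""
        for block_id in block_ids:
            self.locations.setdefault(block_id, set()).add(datanode)

    def get_block_locations(self, path: str) -> list[tuple[int, list[str]]]:
        """What a client learns before reading: each block and where it lives."""
        return [(b, sorted(self.locations.get(b, set()))) for b in self.files[path]]


if __name__ == "__main__":
    nn = NameNodeModel()
    nn.add_file("/logs/app.log", [1001, 1002])
    nn.block_report("dn1", [1001, 1002])
    nn.block_report("dn2", [1001])
    print(nn.get_block_locations("/logs/app.log"))
    # [(1001, ['dn1', 'dn2']), (1002, ['dn1'])]
```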

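When you upload a file to Hadoop HDFS:

The client asks the NameNode to create the file, and the NameNode checks permissions and records the new entry in the namespace.

As the client writes, it splits the data into blocks and asks the NameNode to allocate each new block.

For each block, the NameNode tells the client which DataNodes should store its replicas.

The client writes the block directly to those DataNodes; file data never passes through the NameNode.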
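Under the hood, the client sends each block only to the first DataNode in the list; that node stores it and forwards it to the next, forming a replication pipeline, and acknowledgements travel back in the reverse direction. The Python sketch below models that hand-off in memory. It is a simplification that ignores packets, checksums, and failure recovery, and all names in it are hypothetical.

```python
def write_block_pipeline(data: bytes, pipeline: list[str],
                         storage: dict[str, dict[int, bytes]],
                         block_id: int) -> list[str]:
    """Simulate writing one block through a chain of DataNodes.

    Data flows client -> pipeline[0] -> pipeline[1] -> ...; each node keeps a
    copy and forwards it. Returns the order in which nodes acknowledge: the
    first node acks last, only after everything downstream has acked.
    """
    if not pipeline:
        raise ValueError("pipeline needs at least one DataNode")
    acks: list[str] = []

    def receive(index: int) -> None:
        node = pipeline[index]
        storage.setdefault(node, {})[block_id] = data  # store this node's replica
        if index + 1 < len(pipeline):
            receive(index + 1)                         # forward to the next node
        acks.append(node)                              # ack after downstream acked

    receive(0)
    return acks


if __name__ == "__main__":
    disks: dict[str, dict[int, bytes]] = {}
    print(write_block_pipeline(b"hello hdfs", ["dn1", "dn4", "dn5"], disks, block_id=7))
    # ['dn5', 'dn4', 'dn1']
```

Chaining the DataNodes means the client uploads each block once, while the cluster's internal links carry the extra copies.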

By default, every block is replicated three times (the dfs.replication setting), with each copy on a different DataNode, so the data survives if a disk or server fails later on.
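Which DataNodes receive the three replicas is not random. According to the HDFS architecture guide, the default policy for a replication factor of three puts one replica on the writer's own node if the writer runs on a DataNode (otherwise on a randomly chosen node), the second on a node in a different rack, and the third on a different node in that same remote rack. The Python sketch below approximates this rule. The topology dictionary and node names are hypothetical, and the real policy also weighs free space and node load.

```python
import random
from typing import Optional


def place_replicas(topology: dict[str, str], writer: Optional[str] = None,
                   rng: Optional[random.Random] = None) -> list[str]:
    """Pick 3 DataNodes for a block, approximating HDFS's default policy.

    topology maps DataNode name -> rack ID (hypothetical example data).
    """
    rng = rng if rng is not None else random.Random()
    nodes = sorted(topology)
    # 1st replica: the writer's own DataNode, else a random DataNode.
    first = writer if writer in topology else rng.choice(nodes)
    # 2nd replica: any DataNode on a different rack from the first.
    remote = [n for n in nodes if topology[n] != topology[first]]
    if not remote:
        raise ValueError("rack-aware placement needs at least two racks")
    second = rng.choice(remote)
    # 3rd replica: another DataNode on the same rack as the second.
    candidates = [n for n in nodes if topology[n] == topology[second] and n != second]
    if not candidates:
        # Simplification: fall back to any DataNode not yet used.
        candidates = [n for n in nodes if n not in (first, second)]
    if not candidates:
        raise ValueError("need at least three DataNodes")
    return [first, second, rng.choice(candidates)]


if __name__ == "__main__":
    racks = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}
    print(place_replicas(racks, writer="dn1", rng=random.Random(42)))
```

Spreading replicas over two racks protects against a whole-rack outage, while keeping two of them on one rack limits cross-rack write traffic.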

Reading works in a similar way: the client asks the NameNode where each block lives, receives the DataNodes holding replicas ordered by network distance, and reads directly from the closest one, falling back to another replica if that DataNode fails.
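Closeness is measured on the network topology tree. In Hadoop's network topology model, the distance between two nodes is the number of hops from each of them up to their closest common ancestor, so a replica on the same node scores 0, one on the same rack scores 2, and one on another rack in the same data center scores 4. The Python sketch below computes that distance from path-style locations and orders replicas for a reader; the "/dc/rack/host" paths are hypothetical.

```python
def distance(a: str, b: str) -> int:
    """Hops from `a` and `b` up to their closest common ancestor."""
    pa, pb = a.strip("/").split("/"), b.strip("/").split("/")
    common = 0
    for x, y in zip(pa, pb):
        if x != y:
            break
        common += 1
    return (len(pa) - common) + (len(pb) - common)


def sort_replicas(reader: str, replicas: list[str]) -> list[str]:
    """Order replica locations from closest to farthest for this reader."""
    return sorted(replicas, key=lambda r: distance(reader, r))


if __name__ == "__main__":
    reader = "/dc1/rack1/client-host"
    replicas = ["/dc1/rack2/dn7", "/dc1/rack1/dn3", "/dc1/rack2/dn8"]
    print(sort_replicas(reader, replicas))
    # ['/dc1/rack1/dn3', '/dc1/rack2/dn7', '/dc1/rack2/dn8']
```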

There’s also a component called the Secondary NameNode, but don’t let its name fool you: it is not a backup or failover node. It periodically merges the NameNode’s edit log into the fsimage (the on-disk snapshot of the namespace) to create a checkpoint, which keeps the edit log short so that a NameNode restart stays fast. For true high availability (HA), deployments run an Active and a Standby NameNode that share edits through a quorum of JournalNodes, with ZooKeeper (via the ZKFailoverController) handling automatic failover. This detail is worth knowing if uptime matters most in your environment.
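A checkpoint is easiest to picture as replaying a log. The NameNode persists its namespace as an fsimage plus an edit log of changes made since; a checkpoint (performed by the Secondary NameNode, or by the Standby NameNode in an HA pair) applies the pending edits to the last fsimage and writes a new one, so there is little left to replay at restart. The Python sketch below models this with a dictionary and a list of operations. The operation names are hypothetical and bear no relation to the real on-disk formats.

```python
def checkpoint(fsimage: dict[str, int], edit_log: list[tuple]) -> dict[str, int]:
    """Apply logged operations to a namespace image and return the new image.

    fsimage maps path -> replication factor (a stand-in for real metadata).
    Hypothetical ops: ("create", path, repl), ("delete", path),
    ("rename", src, dst), ("setrep", path, repl).
    """
    image = dict(fsimage)  # copy: the previous checkpoint stays untouched
    for op, *args in edit_log:
        if op == "create" or op == "setrep":
            path, repl = args
            image[path] = repl
        elif op == "delete":
            image.pop(args[0], None)
        elif op == "rename":
            src, dst = args
            image[dst] = image.pop(src)
        else:
            raise ValueError(f"unknown op {op!r}")
    return image


if __name__ == "__main__":
    old_image = {"/a.log": 3}
    edits = [("create", "/b.csv", 3), ("rename", "/a.log", "/archive/a.log"),
             ("setrep", "/b.csv", 2), ("delete", "/archive/a.log")]
    print(checkpoint(old_image, edits))  # {'/b.csv': 2}
    # Once the new image is saved, the replayed edits no longer need replaying at restart.
```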

Monitoring and Maintenance Best Practices

Keeping an eye on your Hadoop HDFS cluster ensures smooth operation and quick recovery when issues arise:

Run hdfs dfsadmin -report regularly to check overall health: it shows live and dead DataNodes plus configured, used, and remaining capacity at a glance.

Run hdfs fsck / periodically: it scans for missing, corrupt, and under-replicated blocks so you can act before users notice problems.

Run hdfs balancer when disk usage becomes uneven, for example after adding new DataNodes: it moves blocks from over-utilized to under-utilized nodes until each node's usage is within a threshold (10 percent by default) of the cluster average, then exits.

Set up alerts with monitoring tools such as Prometheus or Nagios: tracking metrics such as missed DataNode heartbeats, dead nodes, and rising error counts helps catch slowdowns and failures early.

Automate routine fixes where possible: the NameNode re-replicates under-replicated blocks on its own, but scripts can restart failed services or run the balancer on a schedule so downtime stays minimal, even during maintenance windows.
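The balancer's idea of "balanced" is a simple comparison: it computes overall cluster utilization (used space divided by capacity) and treats a DataNode as balanced when its own utilization is within the threshold, 10 percentage points by default and adjustable with the -threshold option, of that average. The Python sketch below classifies nodes the same way. The node names and sizes are hypothetical, and the real balancer also throttles block moves to limit network impact.

```python
def classify_nodes(nodes: dict[str, tuple[int, int]],
                   threshold_pct: float = 10.0) -> dict[str, str]:
    """Label each DataNode relative to cluster-wide utilization.

    nodes maps name -> (used_bytes, capacity_bytes). A node is "over-utilized"
    above average + threshold, "under-utilized" below average - threshold,
    and "balanced" otherwise.
    """
    total_used = sum(used for used, _ in nodes.values())
    total_cap = sum(cap for _, cap in nodes.values())
    avg_pct = 100.0 * total_used / total_cap
    labels = {}
    for name, (used, cap) in nodes.items():
        pct = 100.0 * used / cap
        if pct > avg_pct + threshold_pct:
            labels[name] = "over-utilized"
        elif pct < avg_pct - threshold_pct:
            labels[name] = "under-utilized"
        else:
            labels[name] = "balanced"
    return labels


if __name__ == "__main__":
    TB = 10**12
    cluster = {"dn1": (9 * TB, 10 * TB), "dn2": (5 * TB, 10 * TB), "dn3": (1 * TB, 10 * TB)}
    print(classify_nodes(cluster))
    # Average is 50%: dn1 (90%) is over-utilized, dn2 balanced, dn3 (10%) under-utilized.
```

A script like this, fed with figures from hdfs dfsadmin -report, could decide when a scheduled balancer run is actually worth starting.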

Data Replication and Consistency

Replication is central to how Hadoop HDFS protects your data:

Every block is stored on three separate DataNodes by default, but admins can adjust the replication factor per file (for example with hdfs dfs -setrep) depending on importance versus storage cost.

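If one copy becomes unavailable because of a hardware failure or network outage, clients simply read another replica. Meanwhile, once the NameNode stops receiving heartbeats from a DataNode and marks it dead, it schedules new copies of that node's blocks on healthy DataNodes to restore the target replication factor.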

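Consistency comes from write-once-read-many semantics: a file has a single writer at a time, and once it is written and closed, its existing bytes cannot be modified in place. You can append to a file (and, in recent versions, truncate it), or overwrite or delete it as a whole. This simplifies data coherency and recovery after crashes, but it requires careful planning for workloads that need in-place updates.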
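Re-replication is driven by heartbeats. DataNodes send a heartbeat every dfs.heartbeat.interval seconds (3 by default), and the NameNode declares a node dead after 2 × dfs.namenode.heartbeat.recheck-interval + 10 × dfs.heartbeat.interval, which with the defaults (300000 ms and 3 s) comes to 630 seconds, or 10.5 minutes. The Python sketch below applies that rule and lists blocks that fall below their target replica count once dead nodes are excluded. The block map and timestamps are hypothetical.

```python
DEFAULT_HEARTBEAT_S = 3              # dfs.heartbeat.interval (seconds)
DEFAULT_RECHECK_MS = 5 * 60 * 1000   # dfs.namenode.heartbeat.recheck-interval (ms)


def dead_after_seconds(heartbeat_s: int = DEFAULT_HEARTBEAT_S,
                       recheck_ms: int = DEFAULT_RECHECK_MS) -> float:
    """Silence after which the NameNode marks a DataNode dead."""
    return 2 * recheck_ms / 1000 + 10 * heartbeat_s


def under_replicated(block_map: dict[int, set[str]], last_heartbeat: dict[str, float],
                     now: float, target: int = 3) -> dict[int, int]:
    """Return block ID -> live replica count for blocks below `target`."""
    timeout = dead_after_seconds()
    live = {dn for dn, ts in last_heartbeat.items() if now - ts <= timeout}
    result = {}
    for block_id, holders in block_map.items():
        live_count = len(holders & live)
        if live_count < target:
            result[block_id] = live_count
    return result


if __name__ == "__main__":
    print(dead_after_seconds())  # 630.0
    blocks = {1: {"dn1", "dn2", "dn3"}, 2: {"dn2", "dn3", "dn4"}}
    beats = {"dn1": 1000.0, "dn2": 1000.0, "dn3": 1000.0, "dn4": 200.0}
    print(under_replicated(blocks, beats, now=1000.0))  # {2: 2}, dn4 silent for 800 s
```

The long default timeout is deliberate: it avoids triggering a flood of re-replication traffic when a DataNode is only briefly unreachable, for example during a quick restart.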

Features of Hadoop HDFS

Hadoop HDFS offers several features that make it well suited to storing big data reliably:

Fault tolerance comes first: it is built into every layer through the replication described above, plus automatic self-healing. When a disk or DataNode fails, the NameNode detects it and re-replicates the affected blocks in the background, usually without any user intervention.

High throughput follows closely: analytics workloads are dominated by large sequential reads and writes, and HDFS is optimized for this streaming access pattern rather than for low-latency random access. Because clients read different blocks from many DataNodes in parallel, aggregate throughput grows as the cluster grows.

Scalability is a hallmark feature: you can start small and add DataNodes as capacity needs grow, from terabytes to petabytes, without redesigning the system.

Data locality speeds up processing further: instead of moving huge datasets across the network to a central application, processing frameworks such as MapReduce and Spark run computation on, or close to, the nodes where the relevant blocks already reside, which reduces network traffic.

Security isn’t forgotten either: HDFS supports POSIX-style permissions (owner, group, and others) plus optional Kerberos authentication, so only authorized users and applications can access data according to the policies your IT team defines.

Snapshots round out the protection story: they capture read-only, point-in-time images of a directory almost instantly, because they record metadata rather than copying data blocks. They make it easy to recover from human error such as accidental deletion, but because they live on the same cluster, they complement rather than replace off-cluster backups as a defense against ransomware and other attacks.
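Snapshots are managed from the command line: an administrator first marks a directory as snapshottable with hdfs dfsadmin -allowSnapshot, users then create snapshots with hdfs dfs -createSnapshot, and files are restored by copying them out of the read-only .snapshot directory. The Python sketch below only builds those command lines, so it runs without a cluster; the directory /data/sales, the snapshot name, and the file path are hypothetical. On a host with the HDFS client installed, each list could be passed to subprocess.run.

```python
def snapshot_commands(directory: str, name: str) -> list[list[str]]:
    """Commands to enable snapshots on `directory` and take one named `name`."""
    return [
        ["hdfs", "dfsadmin", "-allowSnapshot", directory],  # admin step, once per directory
        ["hdfs", "dfs", "-createSnapshot", directory, name],
    ]


def restore_command(directory: str, name: str, relative_path: str) -> list[str]:
    """Command to copy one file from a snapshot back into the live tree."""
    source = f"{directory}/.snapshot/{name}/{relative_path}"
    # -f overwrites the destination if a damaged copy still exists there.
    return ["hdfs", "dfs", "-cp", "-f", source, f"{directory}/{relative_path}"]


if __name__ == "__main__":
    for cmd in snapshot_commands("/data/sales", "before-cleanup"):
        print(" ".join(cmd))
    print(" ".join(restore_command("/data/sales", "before-cleanup", "2024/q1.csv")))
    # hdfs dfsadmin -allowSnapshot /data/sales
    # hdfs dfs -createSnapshot /data/sales before-cleanup
    # hdfs dfs -cp -f /data/sales/.snapshot/before-cleanup/2024/q1.csv /data/sales/2024/q1.csv
```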

Optimizing HDFS for Performance

Getting top performance from Hadoop HDFS takes some tuning:

Adjust the block size (dfs.blocksize) to the workload: for example, use 256 MB or larger if you mostly store multi-gigabyte files, as in video analytics or log archiving. Avoid storing huge numbers of tiny files, such as individual transactional records, because every file and block consumes NameNode memory.

Tune the replication factor wisely: not every dataset needs triple redundancy. For data that is easy to regenerate, or where raw storage cost outweighs the risk, a lower factor can be acceptable.

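Enable short-circuit local reads where possible: when a client runs on the same host as the DataNode holding a block, it can read the block file directly from local disk instead of streaming it through the DataNode over a TCP socket, which reduces latency and CPU use under heavy load.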

Use management platforms such as Cloudera Manager or Apache Ambari if available: they simplify configuration changes and monitoring, freeing staff to focus on strategic projects instead of routine firefighting.
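The block size, replication, and short-circuit tips above all translate into hdfs-site.xml properties: dfs.blocksize, dfs.replication, dfs.client.read.shortcircuit, and dfs.domain.socket.path (the UNIX domain socket through which the DataNode hands open file descriptors to local clients; short-circuit reads also require the native libhadoop library). The Python sketch below renders such a file with the standard library. The socket path follows the example in the Hadoop documentation, and all values are placeholders to adapt to your cluster.

```python
import xml.etree.ElementTree as ET


def hdfs_site(properties: dict[str, str]) -> str:
    """Render a Hadoop-style <configuration> document from name/value pairs."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print (Python 3.9+)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(hdfs_site({
        "dfs.blocksize": "268435456",                       # 256 MiB
        "dfs.replication": "3",
        "dfs.client.read.shortcircuit": "true",
        "dfs.domain.socket.path": "/var/lib/hadoop-hdfs/dn_socket",
    }))
```

The short-circuit settings must be present on both the DataNodes and the clients for local reads to bypass the DataNode.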

Why Choose Hadoop HDFS for Big Data

Open-source and runs on commodity hardware — low entry cost and scalable.

Built-in redundancy and fault tolerance prevent permanent data loss.

Handles both structured and unstructured data at scale.

Integrates with the Apache ecosystem (Spark, Hive, Kafka, etc.).

Proven across industries — finance, healthcare, retail, media, research.

Often deployed in hybrid setups alongside cloud object stores (e.g., Amazon S3) to balance control and elasticity.

How to Protect Hadoop HDFS Files with Vinchin Backup & Recovery

While robust storage architectures like Hadoop HDFS provide inherent resilience, comprehensive backup remains essential for true data protection. Vinchin Backup & Recovery is an enterprise-grade solution purpose-built for safeguarding mainstream file storage—including Hadoop HDFS environments—as well as Windows/Linux file servers, NAS devices, and S3-compatible object storage. Specifically optimized for large-scale platforms like Hadoop HDFS, Vinchin Backup & Recovery delivers exceptionally fast backup speeds that surpass competing products thanks to advanced technologies such as simultaneous scanning/data transfer and merged file transmission.

Among its extensive capabilities, five stand out as particularly valuable for protecting critical big-data assets: incremental backup (capturing only changed files), wildcard filtering (targeting specific datasets), multi-level compression (reducing space usage), cross-platform restore (recovering backups onto any supported target including other file servers/NAS/Hadoop/object storage), and integrity check (verifying backups remain unchanged). Together these features ensure efficient operations while maximizing security and flexibility across diverse infrastructures.

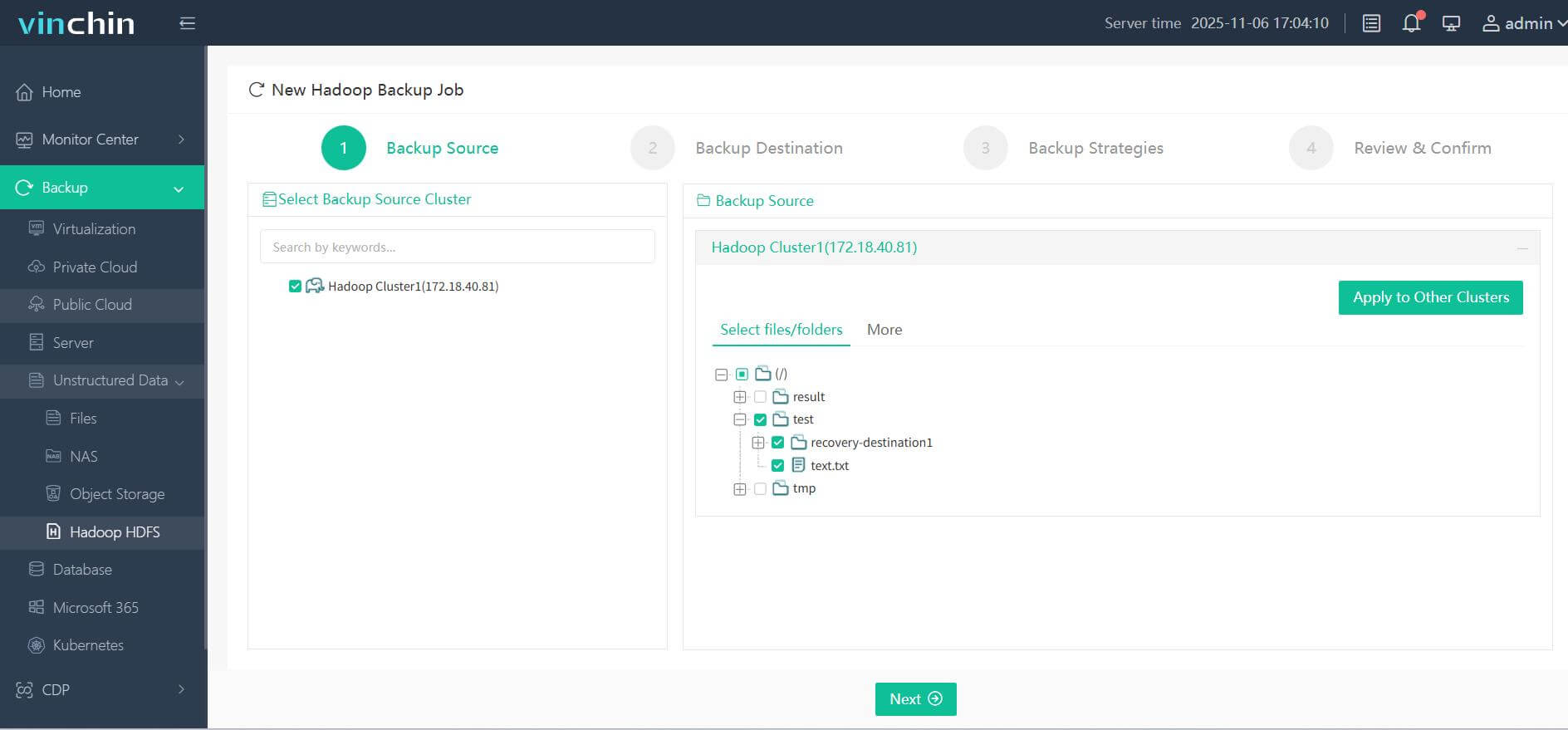

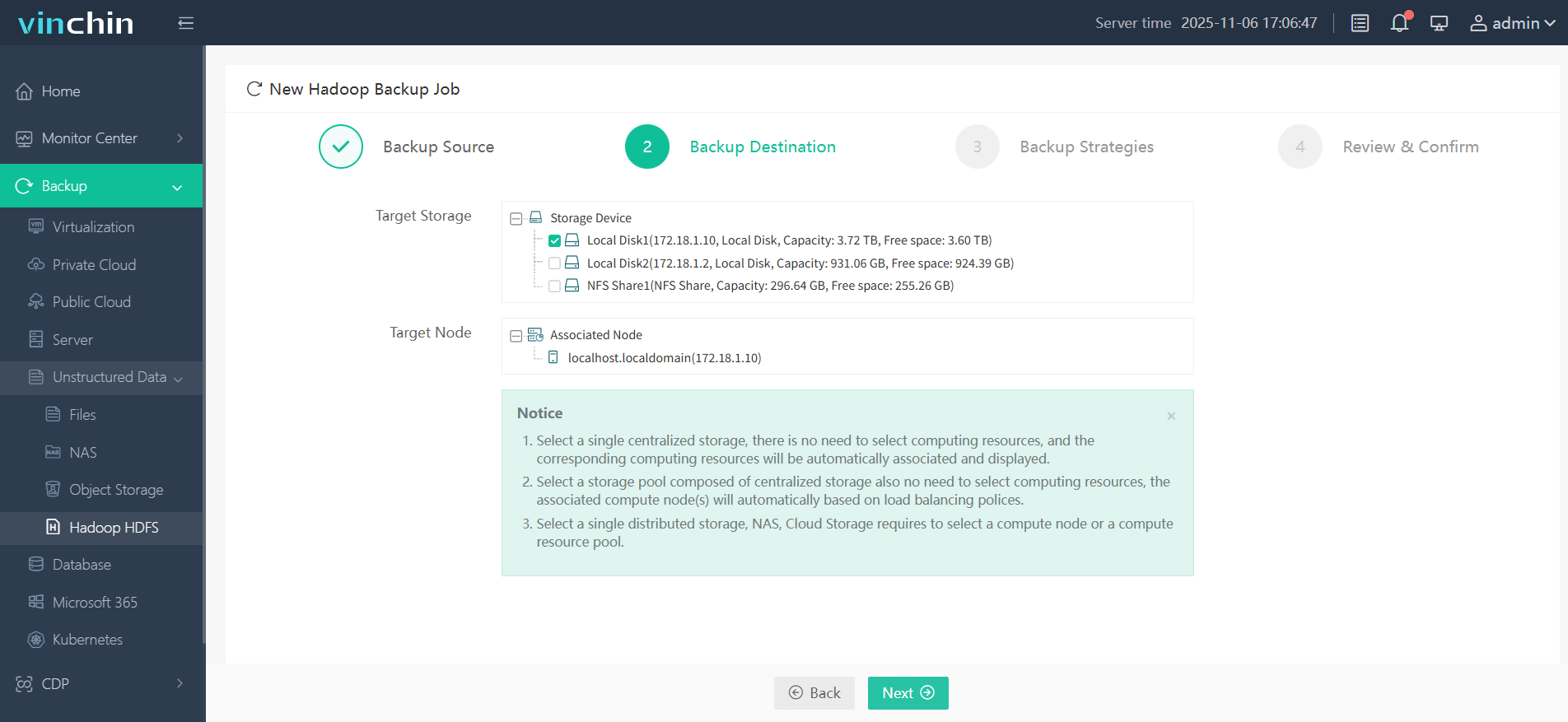

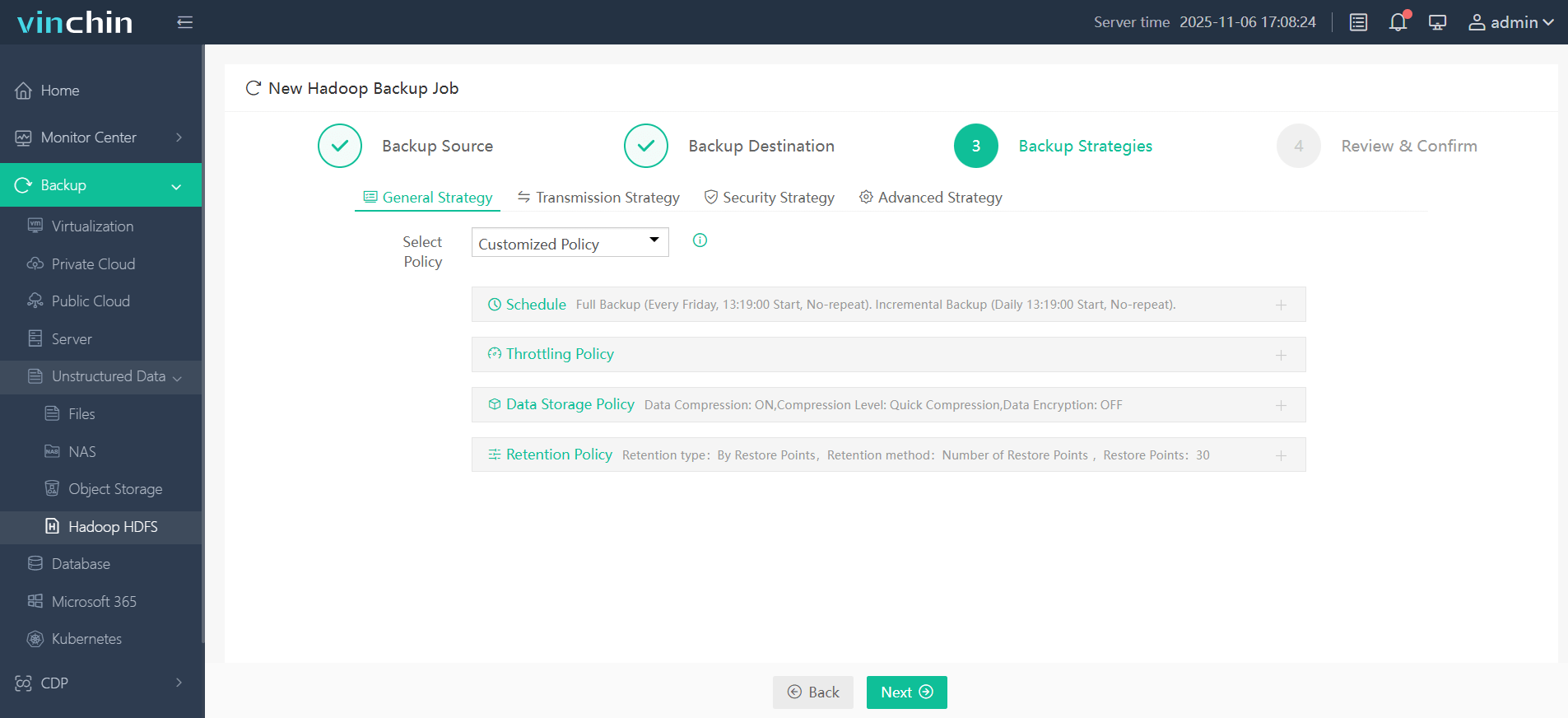

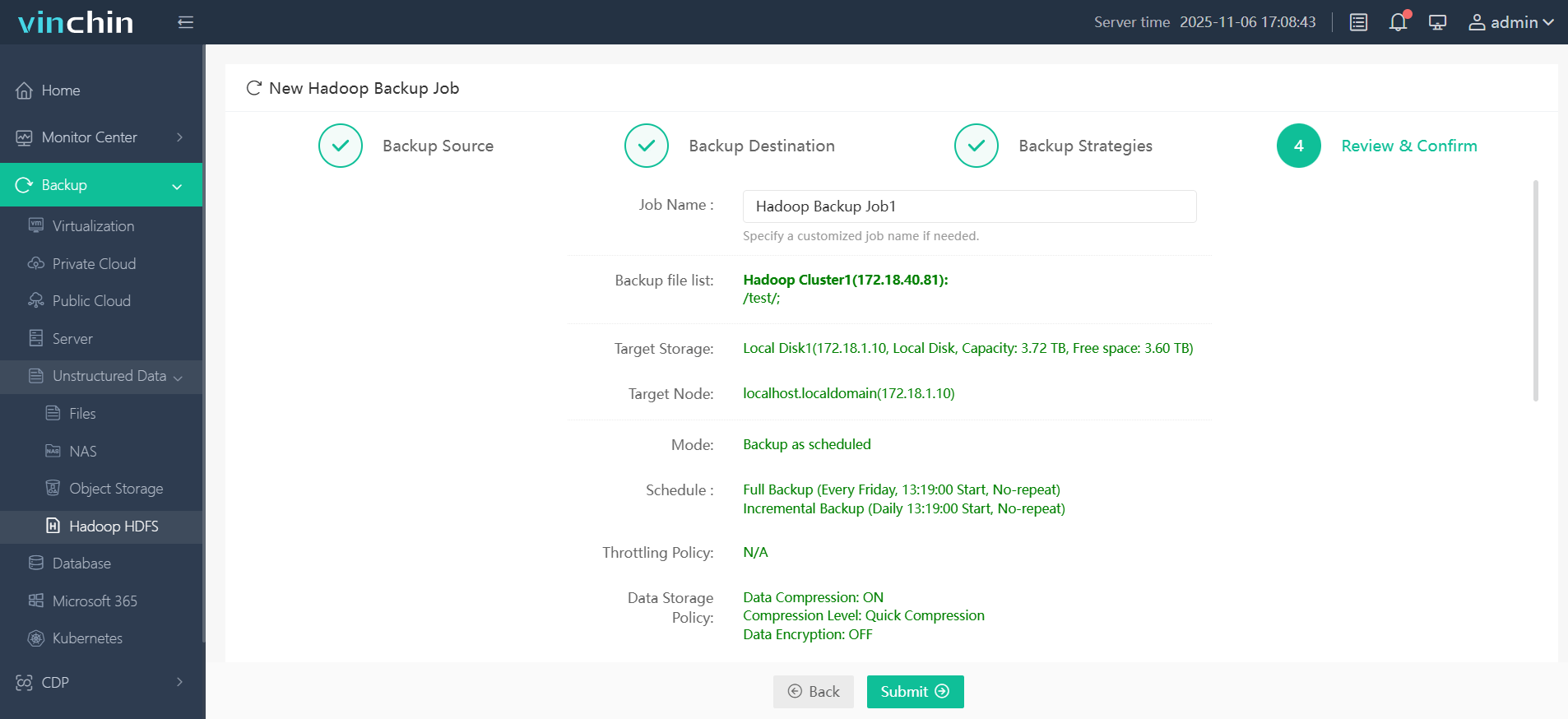

Vinchin Backup & Recovery offers an intuitive web console designed for simplicity. To back up your Hadoop HDFS files:

Step 1. Select the Hadoop HDFS files you wish to back up

Step 2. Choose your desired backup destination

Step 3. Define backup strategies tailored for your needs

Step 4. Submit the job

Join thousands of global enterprises who trust Vinchin Backup & Recovery, renowned worldwide with top ratings, for reliable data protection. Try all features free with a 60-day trial.

Hadoop HDFS FAQs

Q1: How do I check the live status of my entire cluster?

A1: Quick CLI (terminal):

hdfs dfsadmin -report

This prints a snapshot of cluster health as seen by the NameNode: live and dead DataNodes; configured, used, and remaining capacity; block counts; replication status; and more. Run it as a user with HDFS superuser rights (or via sudo -u hdfs ...) if needed.

GUI / web UIs:

NameNode Web UI (default: http://<namenode-host>:9870/ on Hadoop 3.x, port 50070 on Hadoop 2.x) for cluster health, the DataNode list, and basic graphs.

YARN ResourceManager UI (default port 8088) for job/container/resource views.
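For scripted checks, the NameNode web server also exposes metrics as JSON at /jmx; the Hadoop:service=NameNode,name=FSNamesystemState bean includes counts such as NumLiveDataNodes and NumDeadDataNodes. The Python sketch below fetches that bean with the standard library and summarizes DataNode health. The host name is a placeholder, the sketch assumes an unsecured (non-Kerberos) web UI on the Hadoop 3 default port, and the parsing step is separated so it can be tried offline.

```python
import json
import urllib.parse
import urllib.request

BEAN = "Hadoop:service=NameNode,name=FSNamesystemState"


def fetch_fsnamesystem_state(namenode_host: str, port: int = 9870) -> dict:
    """Fetch the FSNamesystemState bean from the NameNode's /jmx endpoint."""
    query = urllib.parse.urlencode({"qry": BEAN})
    url = f"http://{namenode_host}:{port}/jmx?{query}"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def summarize(jmx: dict) -> str:
    """Turn a /jmx JSON response into a one-line health summary."""
    bean = jmx["beans"][0]
    live, dead = bean["NumLiveDataNodes"], bean["NumDeadDataNodes"]
    status = "OK" if dead == 0 else "WARNING"
    return f"{status}: {live} live, {dead} dead DataNodes"


if __name__ == "__main__":
    # Offline example with an abbreviated, hypothetical response body.
    sample = {"beans": [{"name": BEAN, "NumLiveDataNodes": 11, "NumDeadDataNodes": 1}]}
    print(summarize(sample))  # WARNING: 11 live, 1 dead DataNodes
    # Live use (placeholder host):
    # print(summarize(fetch_fsnamesystem_state("namenode.example.com")))
```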

Q2: Can I recover an accidentally deleted directory if Trash was disabled?

A2: Not natively. If fs.trash.interval is 0 (Trash disabled) and you have no snapshots or external backups, HDFS does not provide a built-in way to restore the deleted data.
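Whether deleted files pass through Trash depends on fs.trash.interval in core-site.xml, given in minutes: 0 (the default) disables Trash, while a positive value makes hdfs dfs -rm move files into the user's .Trash directory for that long before they are purged (unless -skipTrash is used). The Python sketch below reads the setting from a core-site.xml string so you can audit it before an accident happens; the sample value of 1440 minutes (one day) is hypothetical.

```python
import xml.etree.ElementTree as ET


def trash_interval_minutes(core_site_xml: str) -> float:
    """Return fs.trash.interval (minutes) from core-site.xml text; 0 if unset."""
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name") == "fs.trash.interval":
            return float(prop.findtext("value", default="0"))
    return 0.0  # not set: Trash stays disabled


if __name__ == "__main__":
    sample = """<configuration>
  <property><name>fs.trash.interval</name><value>1440</value></property>
</configuration>"""
    minutes = trash_interval_minutes(sample)
    print("Trash disabled" if minutes == 0 else f"Deleted files kept ~{minutes / 60:.0f} h")
    # Deleted files kept ~24 h
```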

Q3: How do I change ownership recursively across nested folders/files?

A3: Command:

hdfs dfs -chown -R username:group /path/to/directory

Changing a file's owner requires HDFS superuser privileges, so you will usually run the command as the hdfs user:

sudo -u hdfs hdfs dfs -chown -R username:group /path/to/directory

This changes owner:group recursively for the path and all its contents.
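Ownership matters because it decides which permission bits apply. Like POSIX, HDFS uses the owner bits if the user owns the file, otherwise the group bits if the user belongs to the file's group, otherwise the "other" bits, and the superuser bypasses the check. The Python sketch below implements that rule for one octal mode. It assumes the superuser is named hdfs (in practice it is whichever user started the NameNode, plus members of the configured supergroup), uses hypothetical user and group names, and ignores ACLs.

```python
READ, WRITE, EXECUTE = 4, 2, 1


def is_permitted(user: str, user_groups: set[str], owner: str, group: str,
                 mode: int, wanted: int, superuser: str = "hdfs") -> bool:
    """Check `wanted` bits (READ/WRITE/EXECUTE) against an octal mode like 0o750."""
    if user == superuser:
        return True                 # the superuser skips permission checks
    if user == owner:
        bits = (mode >> 6) & 0o7    # owner class only, even if group bits are wider
    elif group in user_groups:
        bits = (mode >> 3) & 0o7    # group class
    else:
        bits = mode & 0o7           # other class
    return (bits & wanted) == wanted


if __name__ == "__main__":
    # /path/to/directory owned by alice:analytics with mode 750 (rwxr-x---)
    print(is_permitted("bob", {"analytics"}, "alice", "analytics", 0o750, READ))   # True
    print(is_permitted("bob", {"analytics"}, "alice", "analytics", 0o750, WRITE))  # False
```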

Conclusion

Hadoop HDFS provides a proven, cost-effective foundation for storing and protecting massive datasets by distributing data across commodity hardware and offering built-in replication, data locality, and strong ecosystem integration. With proper configuration, monitoring, and complementary tools (like Spark or cloud object stores), HDFS remains an excellent choice for large-scale batch, archival, and analytics workloads.
