- What Is Oracle RMAN Backup?
- Why Follow Oracle RMAN Backup Best Practices
- Essential Oracle RMAN Backup Best Practices
- How Vinchin Backup & Recovery Simplifies Oracle Database Protection?
- Oracle RMAN Backup Best Practices FAQs
- Conclusion

Protecting your Oracle database is not just a technical task—it’s a business necessity. Data loss or downtime can cost your company money, reputation, and even customers. Failing to follow proven backup practices can lead to extended outages or unrecoverable data. That’s why using Oracle Recovery Manager (RMAN) with best practices is so important. But what are those best practices, and how do you apply them? Let’s break it down step by step.

What Is Oracle RMAN Backup?

Oracle RMAN (Recovery Manager) is the built-in tool for backing up, restoring, and recovering Oracle databases. Unlike manual file copies, RMAN understands the database structure and ensures backups are consistent and reliable. It supports full backups and incremental backups (differential or cumulative), and it automates many tasks that would otherwise be error-prone if done by hand. RMAN also manages backup retention policies, compression options, and validation routines, and it integrates tightly with Oracle features like block change tracking. This makes it the go-to choice for DBAs worldwide.

Why Follow Oracle RMAN Backup Best Practices

Following best practices with RMAN is about more than just ticking boxes. It’s about meeting your business’s recovery time objectives (RTO) and recovery point objectives (RPO). A solid backup plan ensures you can restore data quickly after a failure, minimize data loss, and avoid surprises during a crisis. Best practices also help you optimize storage usage, reduce backup windows as your database grows in size or complexity, and keep your systems running smoothly.

Essential Oracle RMAN Backup Best Practices

Let’s walk through essential RMAN backup best practices—each building on the last to create a robust strategy from beginner basics to advanced techniques.

Define Your Backup and Recovery Objectives

Every strong backup plan starts here: know what you need before you start configuring jobs or scripts. How much recent data can your business afford to lose? How fast must systems be restored after an incident? These answers define your RPO and RTO. For example, if losing more than one hour of transactions is unacceptable but up to four hours of downtime is tolerable, then archived logs must be backed up at least hourly and a full restore must be proven to finish within four hours. Review these objectives regularly as business needs evolve.

Use a Tiered Backup Strategy

A tiered approach balances performance against recoverability, which is a must for growing environments. A common pattern is a weekly Level 0 backup plus daily Level 1 incrementals. This reduces both storage requirements and the daily impact on production workloads, and point-in-time recovery remains possible as long as the archived logs between backups are protected as well.

For example:

Level 0 (Full) Backup: Once per week

Level 1 (Incremental) Backups: Every day

Archived Log Backups: Several times per day based on transaction volume

Block change tracking further speeds up incremental jobs: once it is enabled, RMAN consults a tracking file to find the blocks changed since the last backup instead of scanning every datafile, which saves time on large databases.
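The schedule above could be expressed as a few RMAN commands, one per tier. This is a minimal sketch rather than a tuned production script: the tag names and the tracking-file path are placeholders, and block change tracking is assumed to be available in your edition (it is an Enterprise Edition feature).

```rman
# One-time setup: enable block change tracking (hypothetical file path).
SQL "ALTER DATABASE ENABLE BLOCK CHANGE TRACKING USING FILE ''/u01/app/oracle/bct/change_tracking.f''";

# Weekly job: level 0 baseline plus the archived logs needed to recover it.
BACKUP INCREMENTAL LEVEL 0 TAG 'WEEKLY_L0' DATABASE PLUS ARCHIVELOG;

# Daily job: level 1 incremental (differential unless CUMULATIVE is specified).
BACKUP INCREMENTAL LEVEL 1 TAG 'DAILY_L1' DATABASE PLUS ARCHIVELOG;

# Several times per day: archived logs that do not have a backup yet.
BACKUP ARCHIVELOG ALL NOT BACKED UP 1 TIMES;
```

Each job would normally live in its own command file so the scheduler can run the tiers at different frequencies.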

Enable and Test Backup Compression

Backup compression saves disk space, and often network bandwidth, especially when dealing with large databases or slow links between primary sites and offsite locations. RMAN offers several compression algorithms (BASIC, LOW, MEDIUM, HIGH). BASIC is included with the database, while LOW, MEDIUM, and HIGH require the Advanced Compression Option. In most cases LOW or MEDIUM provides a good balance between CPU usage during backups and the storage savings achieved. Always test different settings in your environment before deploying widely, and monitor CPU load closely during initial runs.
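A trial run of one compression setting might look like the sketch below. It assumes MEDIUM is licensed on your system, uses `users` as an example tablespace and `COMP_TEST_MEDIUM` as a made-up tag, and assumes Oracle Database 12c or later for running the `SELECT` at the RMAN prompt (on older releases, run the query from SQL*Plus).

```rman
# Choose the algorithm for compressed backup sets.
CONFIGURE COMPRESSION ALGORITHM 'MEDIUM';

# Confirm the persistent setting.
SHOW COMPRESSION ALGORITHM;

# Compressed backup of a single tablespace as a low-risk trial.
BACKUP AS COMPRESSED BACKUPSET TAG 'COMP_TEST_MEDIUM' TABLESPACE users;

# Compare input size, output size, ratio, and duration of recent jobs.
SELECT start_time, input_bytes_display, output_bytes_display,
       compression_ratio, time_taken_display
  FROM v$rman_backup_job_details
 ORDER BY start_time DESC;
```

Repeating the same backup under each candidate algorithm and comparing the rows gives a like-for-like view of the CPU-versus-storage trade-off.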

Parallelize and Tune Your Backups

Large databases demand efficient resource use during backups—otherwise jobs may run too long or impact production users. Use multiple channels in RMAN to parallelize work across CPUs/disks/networks; in clustered environments allocate at least one channel per instance for optimal throughput.

However: excessive parallelism can overload I/O subsystems or saturate network links without improving speed further! Monitor views like V$BACKUP_SYNC_IO or V$BACKUP_ASYNC_IO during test runs to spot bottlenecks early on.

For very large datafiles consider using SECTION SIZE within your BACKUP command—this splits files into smaller sections processed independently by different channels for even greater speed gains.
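As a rough illustration of the channel and section-size settings described above, the following sketch uses four disk channels and 32 GB sections. Both values are arbitrary examples to be replaced after testing, and the `SELECT` assumes Oracle Database 12c or later at the RMAN prompt (or SQL*Plus on older releases).

```rman
# Persistently use 4 disk channels (illustrative value, not a recommendation).
CONFIGURE DEVICE TYPE DISK PARALLELISM 4 BACKUP TYPE TO BACKUPSET;

# Multisection backup: large datafiles are split into 32 GB sections
# that different channels can process at the same time.
BACKUP SECTION SIZE 32G DATABASE;

# During or after the run: files with many long waits relative to their
# I/O count are likely bottlenecks.
SELECT filename, type, status, io_count, long_waits, effective_bytes_per_second
  FROM v$backup_async_io
 WHERE type <> 'AGGREGATE'
 ORDER BY long_waits DESC;
```

If throughput stops improving when the channel count goes up, the limit is probably in storage or the network rather than in RMAN.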

Automate Scheduling—and Monitor Everything Closely

Manual backups are risky—they’re easy to forget or misconfigure under pressure! Schedule all regular jobs using tools like cron on Linux or Task Scheduler on Windows servers so nothing gets missed overnight or over weekends.

But don’t stop there: always monitor logs generated by each job run; integrate output into central log aggregation tools where possible so failures trigger alerts immediately—not days later when it’s too late!

Regularly run CROSSCHECK BACKUP commands: this reconciles metadata in control files/recovery catalogs against actual files present on disk/tape/cloud storage; missing pieces get marked as EXPIRED so they’re not relied upon accidentally later.

Set up scripts that parse V$RMAN_OUTPUT or V$RMAN_STATUS views for quick status checks after each scheduled job completes—catch silent errors before they become disasters!
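A post-backup check along these lines could be saved as a command file and run by the scheduler after each job. The file and log paths in the comment are hypothetical, and the `SELECT` assumes Oracle Database 12c or later at the RMAN prompt.

```rman
# Example invocation from a scheduler (hypothetical paths):
#   rman TARGET / CMDFILE=/home/oracle/scripts/post_backup_check.rman LOG=/home/oracle/logs/post_backup_check.log

# Reconcile repository records with what is actually on the media.
CROSSCHECK BACKUP;
CROSSCHECK ARCHIVELOG ALL;

# RMAN operations from the last 24 hours that did not finish cleanly.
SELECT operation, status, start_time, end_time
  FROM v$rman_status
 WHERE start_time > SYSDATE - 1
   AND status NOT IN ('COMPLETED', 'RUNNING')
 ORDER BY start_time;
```

Any row returned by the query (for example a status of FAILED or COMPLETED WITH ERRORS) is a reasonable trigger for an alert.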

Manage Retention Policies—and Clean Up Old Files Promptly

Retention policies ensure enough history exists for restores, but not so much that old files fill up valuable disk space unnecessarily! Base the retention window on how far back you must be able to recover:

For example:

CONFIGURE RETENTION POLICY TO RECOVERY WINDOW OF 7 DAYS;

This keeps every backup and archived log needed to recover to any point within the last seven days; older pieces become eligible for deletion via

DELETE OBSOLETE;

After a crosscheck, use

DELETE EXPIRED BACKUP;

to clean out records pointing at missing physical files, keeping the RMAN repository tidy over time.

Review retention settings regularly as business requirements shift—or as available storage changes due to hardware upgrades/migrations!
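Put together, a cautious housekeeping pass might run in the order below. This is a sketch under the assumption that a retention policy is already configured; `NOPROMPT` suppresses the confirmation question, so it is worth running the `REPORT` step on its own first.

```rman
# Preview what the current policy considers obsolete; nothing is deleted.
SHOW RETENTION POLICY;
REPORT OBSOLETE;

# Mark records whose files are missing as EXPIRED, then drop those records.
CROSSCHECK BACKUP;
DELETE NOPROMPT EXPIRED BACKUP;

# Remove backups the retention policy no longer needs.
DELETE NOPROMPT OBSOLETE;
```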

Protect Archived Redo Logs Aggressively

Archived redo logs are vital: they let you roll forward from any valid base backup right up until disaster struck! Back them up frequently throughout each day based on transaction rates, and delete them from disk only once they are safely in a backup. The following command backs up every archived log and then deletes the source files it has just backed up:

BACKUP ARCHIVELOG ALL DELETE INPUT;

Never leave archived logs unprotected—even short gaps could mean lost transactions if disaster hits at just the wrong moment!
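Where you would rather keep recent logs on disk for a while after they are backed up, the backup and the deletion can be separated. The sketch below assumes backups go to disk; substitute your own device type if they go to tape or another media manager.

```rman
# Back up only the logs that have no backup yet; the source files stay on disk.
BACKUP ARCHIVELOG ALL NOT BACKED UP 1 TIMES;

# Later, remove only those logs that already have at least one disk backup.
DELETE NOPROMPT ARCHIVELOG ALL BACKED UP 1 TIMES TO DEVICE TYPE DISK;
```

The trade-off is extra disk usage in exchange for faster recovery, since recent logs do not have to be restored from backup first.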

Validate and Actually Test Your Recoveries Regularly

A backup isn’t truly useful unless it restores cleanly under pressure! Don’t rely solely on automated reports: schedule regular tests where teams actually restore onto non-production systems, and between those tests run both

RESTORE DATABASE VALIDATE;

(to check that the backups RMAN would choose for a restore exist and can be read)

and

VALIDATE BACKUPSET <backup_set_key>;

(to confirm there is no corruption inside a specific backup set; LIST BACKUP SUMMARY shows the keys).

Remember: validation checks aren’t substitutes for full-scale drills where teams practice restoring entire environments—including application connectivity testing—to prove RTOs/RPOs are realistic under fire!
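A lightweight check between full drills could look like the following sketch. The backup set key 42 is a made-up example; take a real key from the `LIST BACKUP SUMMARY` output.

```rman
# Show which backups RMAN would use for a full restore, without reading them.
RESTORE DATABASE PREVIEW SUMMARY;

# Read those backups and report any that are missing or corrupt; nothing is restored.
RESTORE DATABASE VALIDATE;

# Find backup set keys, then check one specific set (42 is a hypothetical key).
LIST BACKUP SUMMARY;
VALIDATE BACKUPSET 42;
```

None of these commands writes datafiles, so they are safe to run against production, but they do consume read I/O on the backup storage.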

Use an External Recovery Catalog When Appropriate

In larger shops—or anywhere multiple databases share infrastructure—a dedicated external recovery catalog brings major benefits beyond simple metadata tracking:

Stores longer history than control file alone allows

Centralizes management/reporting across many instances

Enables stored scripts/templates reused across sites/databases

Simplifies recovery if current control file/spfile copies are lost entirely

However: remember that catalog itself must be backed up regularly too—it becomes part of your overall DR plan!
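Setting up and using a catalog might be sketched as follows. The catalog owner `rco`, the net service name `catdb`, and the script name `daily_l1` are all hypothetical, and the sketch assumes the `rco` user already exists in the catalog database with the `RECOVERY_CATALOG_OWNER` role. RMAN prompts for the password when it is not supplied, so run this interactively.

```rman
# Run once: connect to the catalog database and create the catalog.
CONNECT CATALOG rco@catdb
CREATE CATALOG;

# Run once per target database: connect to it and register it.
CONNECT TARGET /
REGISTER DATABASE;

# A stored script that every registered database can reuse.
CREATE GLOBAL SCRIPT daily_l1
{
  BACKUP INCREMENTAL LEVEL 1 DATABASE PLUS ARCHIVELOG;
}

# Execute the stored script against the connected target.
RUN { EXECUTE GLOBAL SCRIPT daily_l1; }
```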

Document Everything—and Review Often

Clear documentation saves precious minutes during emergencies when stress runs high! Maintain updated records covering:

All active scripts/jobs used by automation tools

Current schedules/frequencies/policies set within RMAN/configuration files

Step-by-step procedures tested successfully during past drills—including screenshots/log samples where helpful!

Review/update these materials every quarter—or whenever significant changes occur in infrastructure/business priorities—to keep everyone ready when seconds count most!

How Vinchin Backup & Recovery Simplifies Oracle Database Protection?

To further strengthen your Oracle database protection strategy beyond native tools alone, consider Vinchin Backup & Recovery—a professional enterprise-level solution supporting today’s mainstream platforms including Oracle, MySQL, SQL Server, MariaDB, PostgreSQL, PostgresPro, and TiDB. For organizations relying heavily on Oracle databases specifically, Vinchin Backup & Recovery delivers advanced source-side compression and incremental backup capabilities tailored for high-performance environments alongside batch database operations—all complemented by flexible multi-level data compression options and granular retention policy controls designed around compliance needs.

Among its many features relevant to enterprise users are log/archived log backup support, any-point-in-time recovery options, cloud/tape archiving integration, robust storage protection against ransomware threats including WORM functionality, plus automated integrity checking with SQL-based verification routines—all working together to ensure reliability while reducing manual effort across complex deployments.

The intuitive web console streamlines every stage of safeguarding your Oracle environment:

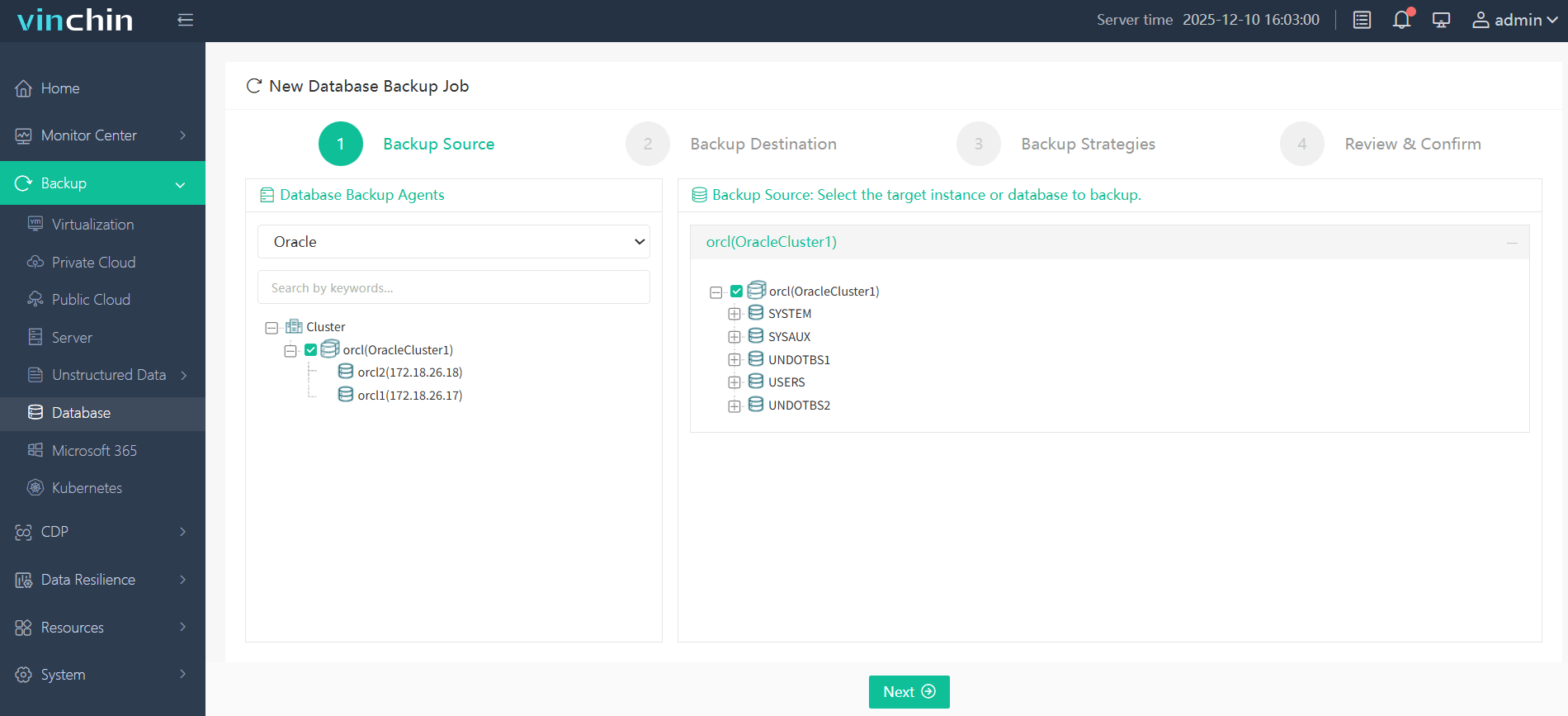

Step 1. Select the Oracle database to back up

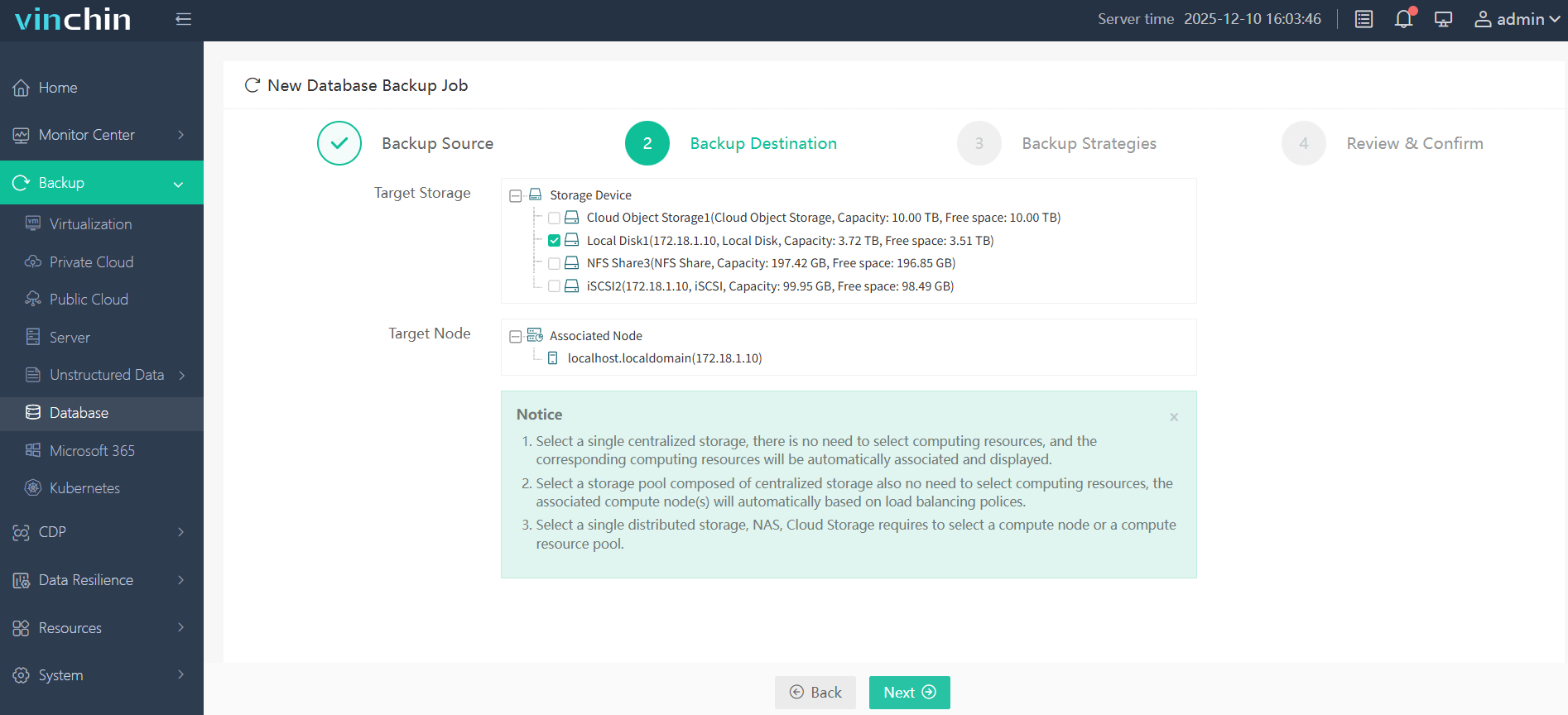

Step 2. Choose the backup storage

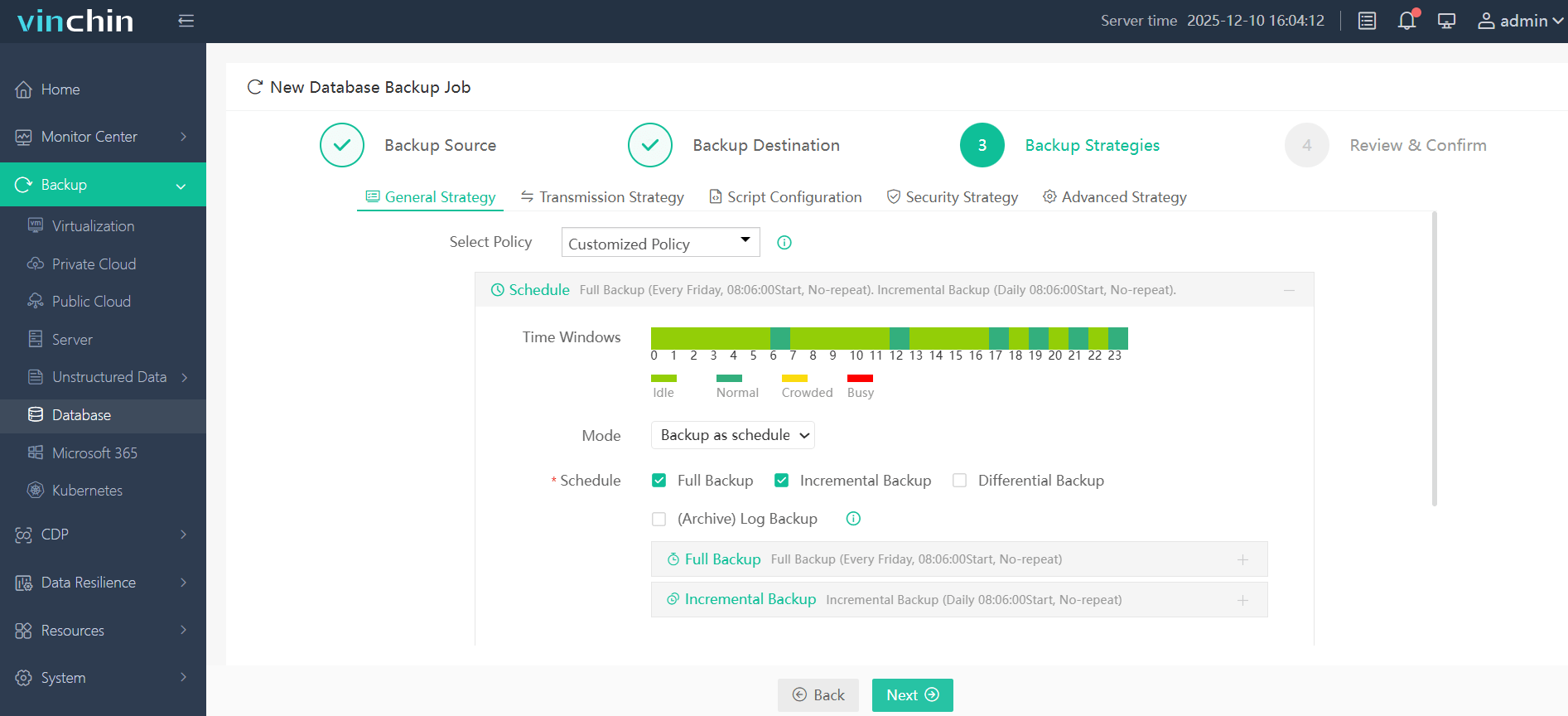

Step 3. Define the backup strategy

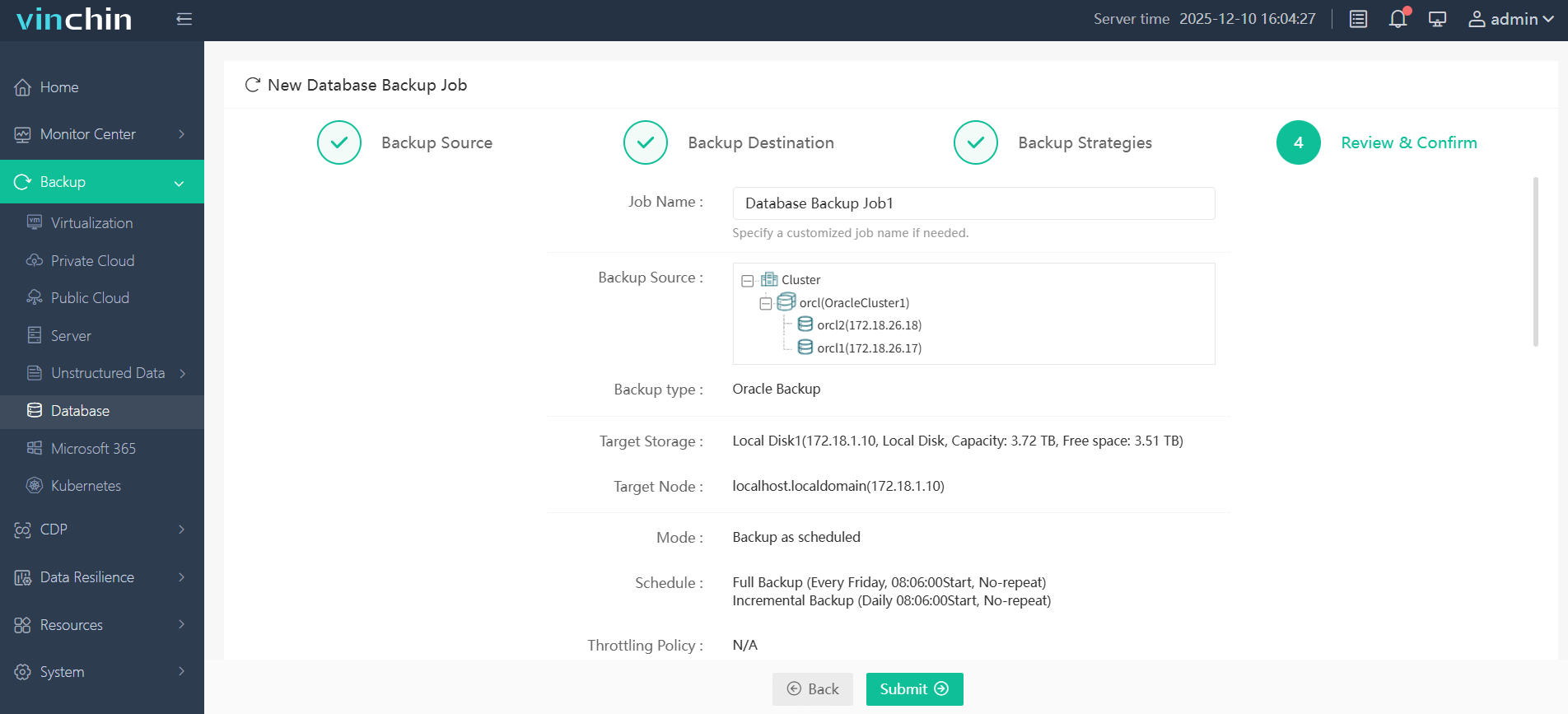

Step 4. Submit the job

Trusted globally by thousands of enterprises and highly rated by industry analysts, Vinchin Backup & Recovery can be downloaded and tried with every feature free for 60 days, with no obligation.

Oracle RMAN Backup Best Practices FAQs

Q1: How do I handle "RMAN-06169: could not read file header" errors?

A1: First check that the file exists and that the Oracle OS user can read it. Then run VALIDATE DATAFILE with the affected file number before attempting to restore the file from the last good backup.

Q2: What should I do if my FRA fills up unexpectedly?

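A2: Run CROSSCHECK BACKUP, then DELETE EXPIRED BACKUP and DELETE OBSOLETE to release space. If that is not enough, increase the FRA size (DB_RECOVERY_FILE_DEST_SIZE) or schedule jobs that move backups off the FRA more often.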

Q3: Can I back up directly from my primary site to cloud object storage?

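A3: Yes. RMAN writes to cloud object storage through its SBT (media management) interface, using a module such as the Oracle Database Cloud Backup Module, provided the module supports your database version and is configured correctly.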
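For the FRA question (Q2), the queries below are one way to see where the space has gone before and after cleanup. This is a sketch: 200G is an arbitrary example size, `SCOPE = BOTH` assumes the instance uses an spfile, and running these statements at the RMAN prompt assumes Oracle Database 12c or later (otherwise use SQL*Plus).

```rman
# How full is the FRA, and how much is reclaimable, by file type?
SELECT file_type, percent_space_used, percent_space_reclaimable, number_of_files
  FROM v$recovery_area_usage;

# Configured limit and current usage, in bytes.
SELECT name, space_limit, space_used, space_reclaimable
  FROM v$recovery_file_dest;

# If cleanup is not enough, raise the limit (illustrative value).
ALTER SYSTEM SET db_recovery_file_dest_size = 200G SCOPE = BOTH;
```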

Conclusion

Strong Oracle RMAN backup strategies defend against costly downtime/data loss better than anything else available today—with proven results across industries worldwide! By applying these layered best practices now you'll ensure rapid reliable recoveries tomorrow when stakes are highest—and Vinchin makes protecting critical assets even simpler along every step of that journey!
