- What Is RMAN Transportable Tablespace?
- Why Use RMAN Transportable Tablespace?
- Critical Pre-Migration Checks
- Method 1. How to Use RMAN for Transportable Tablespace Migration
- Method 2. How to Use Data Pump for Transportable Tablespace Migration
- Protecting Your Oracle Database After Migration With Vinchin Backup & Recovery
- RMAN Transportable Tablespace FAQs
- Conclusion

Moving large amounts of data between Oracle databases is a common challenge for IT operations teams. Downtime must be minimized to keep business running smoothly. The RMAN transportable tablespace feature offers an efficient solution for these migrations. It lets you move tablespaces quickly—even across different platforms—by working at the physical file level rather than exporting and importing every row.

In this guide, we’ll explain what RMAN transportable tablespace is, why it matters for database administrators, and how to use it step by step. We’ll also cover critical pre-migration checks that can save you hours of troubleshooting later. For comparison, we’ll look at using Data Pump as an alternative approach. Finally, we’ll show you how to protect your Oracle databases after migration with Vinchin.

What Is RMAN Transportable Tablespace?

RMAN transportable tablespace is an Oracle feature designed for moving one or more tablespaces from a source database to a target database efficiently. Unlike traditional export/import methods that operate logically at the row level, this feature copies data files directly along with their metadata. This makes it much faster when dealing with large datasets or entire application modules.

The process uses Recovery Manager (RMAN) to automate the restore and recovery steps from your existing backups. In one workflow, RMAN creates a temporary auxiliary instance, recovers the tablespaces in it, and exports their metadata with Data Pump. This reduces manual effort and the risk of error. When the source and target platforms differ in endian format, RMAN's separate CONVERT command handles the data file conversion.

This method is especially useful when migrating between systems that have compatible endian formats or when using RMAN’s built-in conversion features for cross-platform moves.

Why Use RMAN Transportable Tablespace?

For operations administrators tasked with moving large volumes of data while minimizing downtime, choosing the right tool matters. Let’s see why RMAN transportable tablespace stands out:

First: It dramatically reduces downtime because you don’t need to perform full logical exports and imports—data files are moved directly instead of being re-created row by row.

Second: You can migrate data between different operating systems or hardware platforms as long as endian formats match—or use RMAN’s conversion capabilities if they do not.

Third: With point-in-time recovery support built into RMAN workflows (using SCN or timestamp), you gain flexibility for reporting environments or cloning production data safely.

Fourth: For very large tablespaces or self-contained application schemas spanning multiple terabytes, copying data files avoids the row-by-row unloading, reloading, and index rebuilding that logical export/import tools must perform.

How does it compare with Data Pump? Here’s a quick side-by-side view:

| Feature | RMAN Transportable Tablespace | Data Pump Transportable Tablespace |
|---|---|---|
| Speed (large datasets) | Very fast | Slower |
| Downtime window | Minimal | Longer |
| Cross-platform support | Yes (with CONVERT) | Limited |
| Automation | High | Manual steps required |
| Granular object selection | Less granular | More control |

So when should you choose each? If speed and minimal downtime are top priorities—especially during cross-platform migrations—RMAN TTS is usually best. If you need fine-grained control over which objects move or are working with smaller sets of data where downtime isn’t critical, Data Pump may be preferable.

Critical Pre-Migration Checks

Before starting any migration using transportable tablespaces—whether through RMAN or Data Pump—it’s essential to verify several key requirements up front. Skipping these checks often leads to failed migrations or unexpected errors mid-process.

First: Check Self-Containment

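A transport set is self-contained when no object inside it references an object outside it. Two examples of violations: an index in the set whose table is stored in another tablespace, and a partitioned table with only some of its partitions in the set. To check this, run DBMS_TTS.TRANSPORT_SET_CHECK (the second argument, TRUE, also checks referential integrity constraints) and then query the violations view: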

EXEC DBMS_TTS.TRANSPORT_SET_CHECK('TBS1,TBS2', TRUE);

SELECT * FROM TRANSPORT_SET_VIOLATIONS;

If the query returns rows describing violations (for example, an index stored in a tablespace outside the set), resolve them. Either move those objects into included tablespaces or add tablespaces to the list until no violations remain.
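If you script the migration, this check can act as a hard gate. The sketch below assumes you spooled the output of the query above from SQL*Plus with SET HEADING OFF and SET FEEDBACK OFF, so that every non-blank line is one violation. The function name check_tts_violations is hypothetical.

```bash
# Hypothetical gate: succeed only when the spooled TRANSPORT_SET_VIOLATIONS
# output contains no rows. Assumes SQL*Plus spooled it with SET HEADING OFF
# and SET FEEDBACK OFF, so every non-blank line is one violation.
check_tts_violations() {
  local spool_file="$1" count
  if [ ! -r "$spool_file" ]; then
    echo "Cannot read $spool_file" >&2
    return 2
  fi
  # Count lines that contain at least one non-whitespace character.
  count=$(grep -c '[^[:space:]]' "$spool_file")
  if [ "$count" -gt 0 ]; then
    echo "Found $count transport set violation(s):" >&2
    grep '[^[:space:]]' "$spool_file" >&2
    return 1
  fi
  echo "Transport set is self-contained"
}
```

In a wrapper script, a line such as check_tts_violations /tmp/tts_violations.txt || exit 1 stops the run before any files are touched. The spool path is only illustrative.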

Second: Verify Platform Compatibility

Cross-platform moves require matching endian formats unless you plan on converting files during migration. Query supported platforms like this:

SELECT * FROM V$TRANSPORTABLE_PLATFORM;

Find each database’s own platform in this view (its name is in V$DATABASE.PLATFORM_NAME) and compare the ENDIAN_FORMAT values. If the source and target differ in endianness, plan on using RMAN’s CONVERT command during migration.
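The comparison itself can be scripted. This is a minimal sketch that assumes you have already read each database’s ENDIAN_FORMAT value. The comment shows one way to get it, by joining V$TRANSPORTABLE_PLATFORM to V$DATABASE on PLATFORM_NAME. The function name needs_convert is hypothetical.

```bash
# Hypothetical helper: print "yes" if RMAN CONVERT is needed, "no" otherwise.
# Read each side's endian format on its own database with a query such as:
#   SELECT tp.endian_format
#   FROM   v$transportable_platform tp
#   JOIN   v$database d ON tp.platform_name = d.platform_name;
needs_convert() {
  local src_endian="$1" tgt_endian="$2"
  # Compare case-insensitively; the view reports values like "Little" or "Big".
  if [ "$(printf '%s' "$src_endian" | tr '[:upper:]' '[:lower:]')" = \
       "$(printf '%s' "$tgt_endian" | tr '[:upper:]' '[:lower:]')" ]; then
    echo "no"
  else
    echo "yes"
  fi
}
```

For example, needs_convert Little Big prints yes, which means a CONVERT step belongs in the plan.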

Third: Block Size & Character Set Compatibility

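If a transported tablespace’s block size differs from the target’s DB_BLOCK_SIZE, the target can still plug it in. However, the target must first have a buffer cache configured for that size through the matching DB_nK_CACHE_SIZE parameter. The source and target database character sets (and national character sets) must also be compatible. Identical sets are the safest choice, because mismatches can cause import failures later on.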
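To make the block-size rule concrete, the hypothetical helper below prints the DB_nK_CACHE_SIZE parameter the target would need for a given tablespace block size. Sizes are in bytes, as reported by DBA_TABLESPACES.BLOCK_SIZE. The helper prints nothing when the size already matches the target’s DB_BLOCK_SIZE. It is a sketch; which nonstandard block sizes are available depends on your platform.

```bash
# Hypothetical helper: print the init parameter the target needs for a
# tablespace whose block size differs from the target's DB_BLOCK_SIZE.
# Prints nothing when the sizes match (the default cache already covers it).
required_cache_param() {
  local ts_block_size="$1" target_db_block_size="$2"
  if [ "$ts_block_size" -eq "$target_db_block_size" ]; then
    return 0
  fi
  case "$ts_block_size" in
    2048|4096|8192|16384|32768)
      # e.g. 16384 bytes -> DB_16K_CACHE_SIZE
      echo "DB_$((ts_block_size / 1024))K_CACHE_SIZE" ;;
    *)
      echo "unsupported block size: $ts_block_size" >&2
      return 1 ;;
  esac
}
```

For a 16 KB tablespace going into an 8 KB database, required_cache_param 16384 8192 prints DB_16K_CACHE_SIZE. You would set that parameter on the target (for example, ALTER SYSTEM SET DB_16K_CACHE_SIZE = 256M, where the size is only illustrative) before the import.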

Fourth: Space Requirements

Make sure there is enough disk space in each of these locations:

- AUXILIARY DESTINATION on the source server: temporary files for the auxiliary instance
- TABLESPACE DESTINATION on the source server: the transported data files and the export dump file
- The target server: the incoming data files plus room for growth
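A quick pre-flight check for each of these locations can be scripted with df. This sketch makes two assumptions. First, df is POSIX-compliant; the -P flag keeps each filesystem on one line. Second, you have estimated the required size yourself, for example from SUM(BYTES) in DBA_DATA_FILES for the relevant tablespaces. The function name has_free_space_mb is hypothetical.

```bash
# Hypothetical pre-flight check: does the filesystem holding DIR have at least
# REQUIRED_MB megabytes free? Uses "df -P -k": field 4 of line 2 is available KB.
has_free_space_mb() {
  local dir="$1" required_mb="$2" avail_kb
  avail_kb=$(df -P -k "$dir" 2>/dev/null | awk 'NR == 2 { print $4 }')
  if [ -z "$avail_kb" ]; then
    echo "Could not read free space for $dir" >&2
    return 2
  fi
  if [ "$avail_kb" -ge $((required_mb * 1024)) ]; then
    echo "OK: $dir has $((avail_kb / 1024)) MB free"
    return 0
  fi
  echo "Insufficient space in $dir: $((avail_kb / 1024)) MB free, ${required_mb} MB needed" >&2
  return 1
}
```

For example, has_free_space_mb /tmp/aux 20000 checks the auxiliary destination against a 20 GB estimate. The figure is only an example.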

Fifth: Privileges & Access

You need SYSDBA or SYSBACKUP privileges on both databases. The accounts that run Data Pump export and import also need the DATAPUMP_EXP_FULL_DATABASE and DATAPUMP_IMP_FULL_DATABASE roles.

Taking the time for these checks now saves hours of troubleshooting later.

Method 1. How to Use RMAN for Transportable Tablespace Migration

Once pre-checks are complete and prerequisites met—including backups—it’s time to start migrating using Recovery Manager (RMAN). This method works best when dealing with large self-contained application modules where minimizing downtime is crucial.

Here’s how it unfolds:

Step 1: Prepare Your Environment

Connect as a user with SYSDBA, SYSBACKUP, or equivalent privileges on both the source and target databases. Identify the tablespaces to move, and check their names against your containment check results so that none is missed.

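Make sure the backups RMAN will restore from are available. TRANSPORT TABLESPACE builds the transport set from existing backups, not from the live data files. You therefore need usable backups of the tablespaces being moved and of SYSTEM, SYSAUX, and undo, plus the archived redo logs required to recover them to the chosen point in time.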

Step 2: Run TRANSPORT TABLESPACE Command in RMAN

Start up the Recovery Manager client (rman) connected to your source database instance:

RMAN> TRANSPORT TABLESPACE tbs1,tbs2 AUXILIARY DESTINATION '/tmp/aux' TABLESPACE DESTINATION '/tmp/tts';

Here:

AUXILIARY DESTINATION points to a directory where RMAN creates the temporary auxiliary instance used during recovery. Make sure it has enough free space for that instance’s copies of SYSTEM, SYSAUX, undo, and temp.

TABLESPACE DESTINATION specifies where the final output lands: the transported .dbf data files, the exported metadata dump (dmpfile.dmp), and a sample import script (impscrpt.sql). Choose a location accessible from both servers if possible; otherwise, plan a secure transfer afterward.

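Note: TRANSPORT TABLESPACE does not convert data files itself. If the platforms differ in endian format, convert the files with RMAN’s separate CONVERT command. When run on the source, CONVERT TABLESPACE requires the tablespaces to be read-only. Alternatively, run CONVERT DATAFILE on the target against the copied files. A source-side example: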

RMAN> CONVERT TABLESPACE tbs1,tbs2 TO PLATFORM 'Linux x86 64-bit' FORMAT '/tmp/convert/%U';

This writes a converted copy of each data file, in the target platform’s format, to /tmp/convert, ready to be plugged in at the destination.

Step 3: Let RMAN Automate Core Steps

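Behind the scenes, RMAN then:

1. Starts an auxiliary instance automatically, with its files under /tmp/aux.

2. Restores the selected tablespaces from backup and recovers them to the SCN or time you specify (or to the most recent point if you specify none).

3. Makes each recovered tablespace read-only inside the auxiliary instance.

4. Exports the tablespace metadata by calling Data Pump internally.

5. Writes all output to /tmp/tts, then shuts down the auxiliary instance and removes its files.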

If this step fails, RMAN reports the error in its output. The most common cause is a lack of disk space in the auxiliary destination. Fix the cause before retrying.

Step 4: Move Files Between Servers

Copy the resulting .dbf files and the exported dump (dmpfile.dmp) from the source server to the target server. Use SCP, SFTP, an NFS mount, or whichever method fits your security policy.

After the transfer, check file integrity by comparing checksums. Otherwise, a corrupted copy only surfaces later as failed import attempts.
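One way to compare checksums is a SHA-256 manifest created on the source and checked on the target. This sketch assumes three things:

- GNU coreutils sha256sum is available (standard on Linux).
- The transport directory holds the .dbf files and the .dmp dump.
- The manifest is written outside the directory being hashed.

The function names make_manifest and verify_manifest are hypothetical.

```bash
# Hypothetical helpers around GNU coreutils sha256sum.
# Source side: hash every .dbf and .dmp file in DIR into MANIFEST.
# Run from inside DIR so the manifest stores relative file names.
make_manifest() {
  local dir="$1" manifest="$2"
  (cd "$dir" && sha256sum -- *.dbf *.dmp) > "$manifest"
}

# Target side: re-hash the copied files in DIR and compare with MANIFEST.
# Prints only failures (--quiet); non-zero exit status on any mismatch.
verify_manifest() {
  local dir="$1" manifest="$2"
  # Resolve a relative manifest path before changing directory.
  case "$manifest" in
    /*) ;;
    *) manifest="$PWD/$manifest" ;;
  esac
  (cd "$dir" && sha256sum --check --quiet "$manifest")
}
```

Typical use: run make_manifest /tmp/tts /tmp/tts.sha256 on the source and copy /tmp/tts.sha256 along with the files. Then run verify_manifest /tmp/tts /tmp/tts.sha256 on the target before starting the import.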

Step 5: Plug Tablespaces Into Target Database

On the target system, connect as a privileged user. Then either run the import script that RMAN generated (impscrpt.sql) or use the standard Data Pump Import utility:

impdp system/password \
  dumpfile=dmpfile.dmp \
  directory=DATA_PUMP_DIR \
  transport_datafiles='/tmp/tts/tbs1.dbf','/tmp/tts/tbs2.dbf' \
  logfile=tts_import.log

Adjust paths and user names to your local conventions. The dump file must be in the location the DATA_PUMP_DIR directory object points to, and the owners of the transported objects must already exist on the target. Watch the log output closely. Problems at this step usually trace back to missing privileges, mismatched block sizes, or dependencies that the containment check flagged but that were overlooked.
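Passing TRANSPORT_DATAFILES on the command line means fighting the shell over quotes and commas. Data Pump also accepts a parameter file through its PARFILE parameter, so a small script can build one from the copied files. This sketch makes three assumptions:

- All data files sit in one directory.
- Their paths contain no single quotes.
- The dump file is already in the DATA_PUMP_DIR location.

The function name write_import_parfile is hypothetical.

```bash
# Hypothetical helper: write an impdp parameter file listing every .dbf file
# in DATAFILE_DIR. Assumes no path contains a single quote.
write_import_parfile() {
  local datafile_dir="$1" dumpfile="$2" parfile="$3"
  local files="" f
  for f in "$datafile_dir"/*.dbf; do
    # An unmatched glob stays literal, so this also catches an empty directory.
    [ -e "$f" ] || { echo "No .dbf files in $datafile_dir" >&2; return 1; }
    # Append each path in single quotes, separated by commas.
    files="${files:+$files,}'$f'"
  done
  {
    echo "directory=DATA_PUMP_DIR"
    echo "dumpfile=$dumpfile"
    echo "transport_datafiles=$files"
    echo "logfile=tts_import.log"
  } > "$parfile"
}
```

For example, run write_import_parfile /tmp/tts dmpfile.dmp /tmp/tts_import.par, then impdp system parfile=/tmp/tts_import.par. When no password is given, impdp prompts for it, which also keeps the password out of the shell history.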

Step 6: Set Imported Tablespaces Back To Read Write

Transported tablespaces are plugged in as read-only. After a successful import, switch each one back to read-write mode:

ALTER TABLESPACE tbs1 READ WRITE;
ALTER TABLESPACE tbs2 READ WRITE;

Users and applications can now resume normal activity.
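With many tablespaces, it is easy to miss one. The hypothetical helper below prints one ALTER TABLESPACE statement per name, and it also covers the READ ONLY step of Method 2. It only generates SQL, so you can review the output before running it.

```bash
# Hypothetical helper: print one ALTER TABLESPACE statement per name.
# MODE must be "READ WRITE" or "READ ONLY"; remaining arguments are names.
tablespace_mode_sql() {
  local mode="$1"; shift
  case "$mode" in
    "READ WRITE"|"READ ONLY") ;;
    *) echo "mode must be READ WRITE or READ ONLY" >&2; return 1 ;;
  esac
  local ts
  for ts in "$@"; do
    printf 'ALTER TABLESPACE %s %s;\n' "$ts" "$mode"
  done
}
```

Assuming OS authentication is configured, the output can be piped straight into SQL*Plus: tablespace_mode_sql "READ WRITE" TBS1 TBS2 | sqlplus -s "/ as sysdba".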

Step 7: Validate Migration Success

Don’t skip validation. Run queries that compare object counts and types with the original environment. Then rerun the containment check, this time for the imported tablespaces only:

EXEC DBMS_TTS.TRANSPORT_SET_CHECK('TBS1,TBS2', TRUE);

SELECT * FROM TRANSPORT_SET_VIOLATIONS;

Finally, briefly test sample application connections and workflows before declaring the project finished.
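For the count comparison, one approach is to spool the same summary query on both databases and diff the results. This sketch assumes each file holds the output of a query such as SELECT owner, segment_type, COUNT(*) FROM dba_segments WHERE tablespace_name IN ('TBS1','TBS2') GROUP BY owner, segment_type; spooled with SET HEADING OFF. It compares segments because a transport set carries segment-based objects such as tables and indexes, but not storage-less objects such as sequences or standalone PL/SQL. The function names are hypothetical.

```bash
# Hypothetical helper: normalise a spooled "OWNER TYPE COUNT" listing.
# Drops blank lines, collapses SQL*Plus column padding, and sorts the rows.
normalise_counts() {
  awk 'NF { $1 = $1; print }' "$1" | sort
}

# Compare source and target listings; prints the diff and fails on mismatch.
compare_counts() {
  local src_file="$1" tgt_file="$2" a b rc
  a=$(mktemp); b=$(mktemp)
  normalise_counts "$src_file" > "$a"
  normalise_counts "$tgt_file" > "$b"
  if diff "$a" "$b"; then
    echo "Segment counts match"; rc=0
  else
    echo "Segment counts differ" >&2; rc=1
  fi
  rm -f "$a" "$b"
  return "$rc"
}
```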

Method 2. How to Use Data Pump for Transportable Tablespace Migration

Sometimes RMAN’s automation isn’t needed, or you want finer control over each step. In that case, you can run the transportable tablespace migration directly with Oracle’s Data Pump utilities.

In transportable mode, Data Pump exports only the metadata for the selected tablespaces; the data files themselves are copied as-is. You run each step yourself, including keeping the source tablespaces read-only during the export and copy. This approach therefore suits small and medium-sized workloads where that read-only window is acceptable, or migrations that need custom handling such as remapping schemas on import.

Here’s how typical workflow looks:

Step 1: Check Self Containment Again

As in the pre-migration checks, run:

EXEC DBMS_TTS.TRANSPORT_SET_CHECK('TBS1,TBS2', TRUE);

SELECT * FROM TRANSPORT_SET_VIOLATIONS;

Resolve any listed violations before proceeding.

Step 2: Make Source Tablespaces Read Only

Set the tablespaces to read-only so that no changes occur during the export and file copy:

ALTER TABLESPACE tbs1 READ ONLY;
ALTER TABLESPACE tbs2 READ ONLY;

To confirm the change, check the STATUS column in the DBA_TABLESPACES view.
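To confirm the status from a script, spool SELECT tablespace_name, status FROM dba_tablespaces WHERE tablespace_name IN ('TBS1','TBS2'); with headings off, then check every row. all_read_only is a hypothetical helper. It also fails on an empty file, so a query that returned nothing is not mistaken for success.

```bash
# Hypothetical helper: succeed only if every row of a spooled
# "TABLESPACE_NAME STATUS" listing has STATUS = READ ONLY.
# Fails on any other status and on input with no rows at all.
all_read_only() {
  awk '
    NF == 0 { next }                  # skip blank lines
    {
      seen = 1
      name = $1
      $1 = ""                         # drop the name; the rest is STATUS
      sub(/^[ \t]+/, "")              # trim the separator left behind
      if ($0 != "READ ONLY") { print name " is " $0; bad = 1 }
    }
    END { if (bad || !seen) exit 1; exit 0 }
  ' "$1"
}
```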

Step 3: Export Metadata Using expdp Utility

Run the export, specifying the tablespaces to transport and a log file:

expdp system/password \
  dumpfile=tts_exp.dmp \
  directory=DATA_PUMP_DIR \
  transport_tablespaces=TBS1,TBS2 \
  transport_full_check=Y \
  logfile=tts_export.log

TRANSPORT_FULL_CHECK=Y makes Data Pump verify that there are no dependencies, in either direction, between objects inside the set and objects outside it. Monitor the output closely, especially for ORA- errors that point to privilege problems or missing dependencies.
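As with the import, the export parameters can live in a parameter file. The following is a sketch; write_export_parfile is hypothetical, and the file names mirror the expdp command above.

```bash
# Hypothetical helper: write an expdp parameter file for a
# transportable-tablespace export.
# Usage: write_export_parfile DUMPFILE PARFILE TABLESPACE [TABLESPACE...]
write_export_parfile() {
  local dumpfile="$1" parfile="$2" list; shift 2
  [ "$#" -gt 0 ] || { echo "at least one tablespace name is required" >&2; return 1; }
  # Join the tablespace names with commas; IFS is changed only in the subshell.
  list=$(IFS=,; echo "$*")
  {
    echo "directory=DATA_PUMP_DIR"
    echo "dumpfile=$dumpfile"
    echo "transport_tablespaces=$list"
    echo "transport_full_check=Y"
    echo "logfile=tts_export.log"
  } > "$parfile"
}
```

For example, run write_export_parfile tts_exp.dmp /tmp/tts_export.par TBS1 TBS2, then expdp system parfile=/tmp/tts_export.par.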

Tip: For cross-platform moves, convert the data files with the RMAN CONVERT command described in Method 1 before plugging them in.

Step 4: Copy Output Files Over Network To Target Server

Transfer all relevant .dbf files and the exported dump using a secure mechanism that meets your company policy, and make sure they arrive intact and unmodified.

Whenever feasible, validate file integrity after the transfer, for example by comparing checksums.

Step 5: Plug Spaces Into New Database Instance

Create the necessary users and schemas on the target first. Then launch the import, referencing the correct paths, file names, and log location:

impdp system/password \
  dumpfile=tts_exp.dmp \
  directory=DATA_PUMP_DIR \
  transport_datafiles='/path/to/tbs1.dbf','/path/to/tbs2.dbf' \
  logfile=tts_import.log

Review the log output to confirm that the import completed successfully and that no unresolved warnings or errors remain.

Tip: If you encounter ORA- errors, review both the export and import logs carefully. They usually point directly to the root cause that needs fixing on the source or target side.
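A quick way to surface those codes is to grep both logs for Oracle message prefixes. This sketch looks for ORA- codes, plus UDE- (export client) and UDI- (import client) codes, each followed by five digits. scan_logs is hypothetical. A hit still needs human judgment, since some messages are only warnings.

```bash
# Hypothetical helper: list Oracle message codes found in Data Pump logs,
# with file name and line number.
# Exit status: 0 = no codes found, 1 = codes found, 2 = a log was unreadable.
scan_logs() {
  local rc
  grep -HnE '(ORA|UDE|UDI)-[0-9]{5}' "$@"
  rc=$?
  case "$rc" in
    0) return 1 ;;
    1) return 0 ;;
    *) echo "could not read one of: $*" >&2; return 2 ;;
  esac
}
```

Run it against the log files in the directory that DATA_PUMP_DIR points to, for example scan_logs tts_export.log tts_import.log.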

Step 6: Restore Read Write Status Post Import

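Switch the newly imported tablespaces to read-write mode:

ALTER TABLESPACE tbs1 READ WRITE;
ALTER TABLESPACE tbs2 READ WRITE;

If the source database stays in service, also return the source tablespaces to read-write once the data files have been copied.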

Protecting Your Oracle Database After Migration With Vinchin Backup & Recovery

After a successful migration, safeguarding your Oracle environment becomes paramount. Vinchin Backup & Recovery is an enterprise-level solution for today’s mainstream databases, including Oracle, MySQL, SQL Server, MariaDB, PostgreSQL, PostgresPro, and TiDB. Its Oracle support is tailored to the operational needs of Oracle environments like yours.

Key features such as incremental backup, advanced source-side compression, batch database backup, GFS retention policy, and comprehensive integrity checks help streamline protection while reducing storage costs and ensuring recoverability even in complex scenarios—all managed centrally through one intuitive platform.

With Vinchin Backup & Recovery’s web console, backing up your Oracle database takes just four straightforward steps:

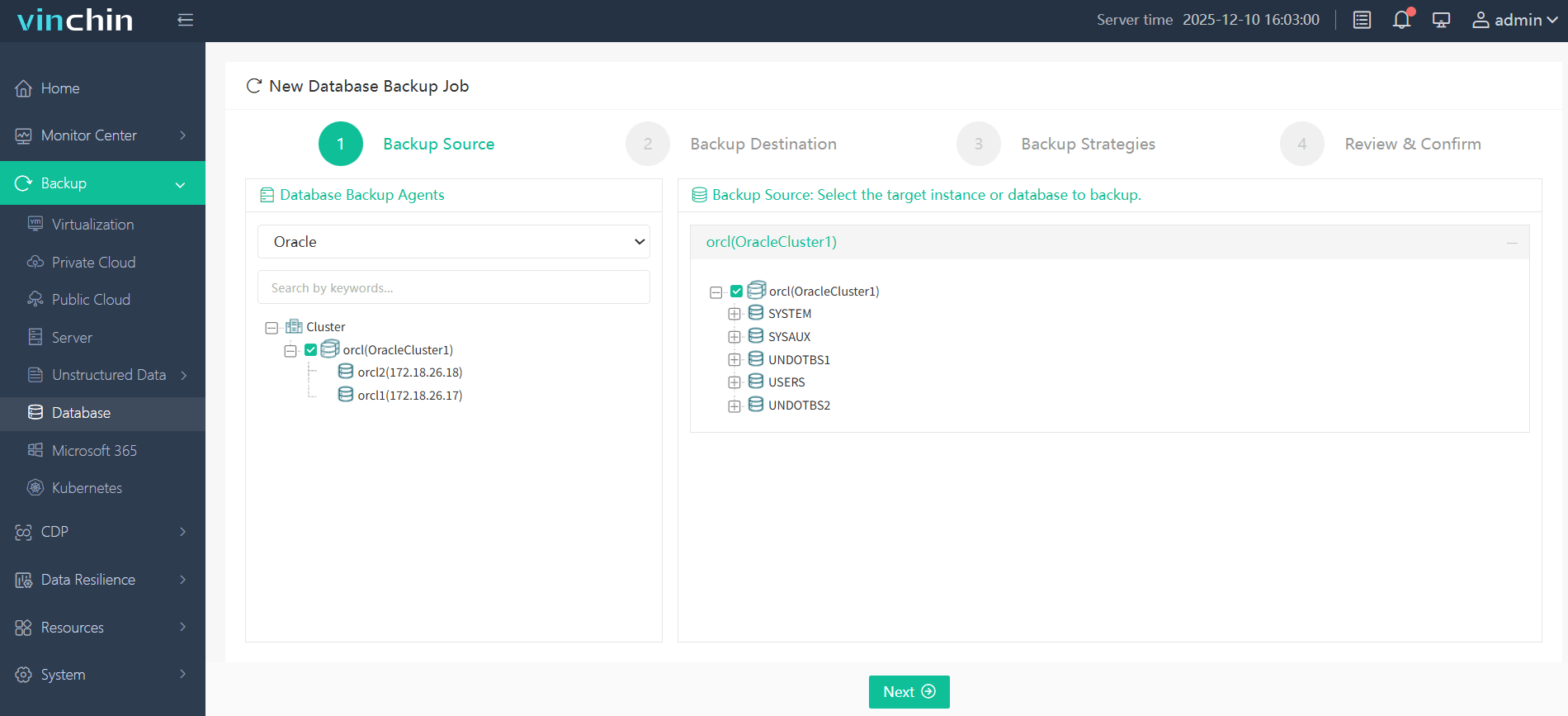

Step 1. Select the Oracle database to back up

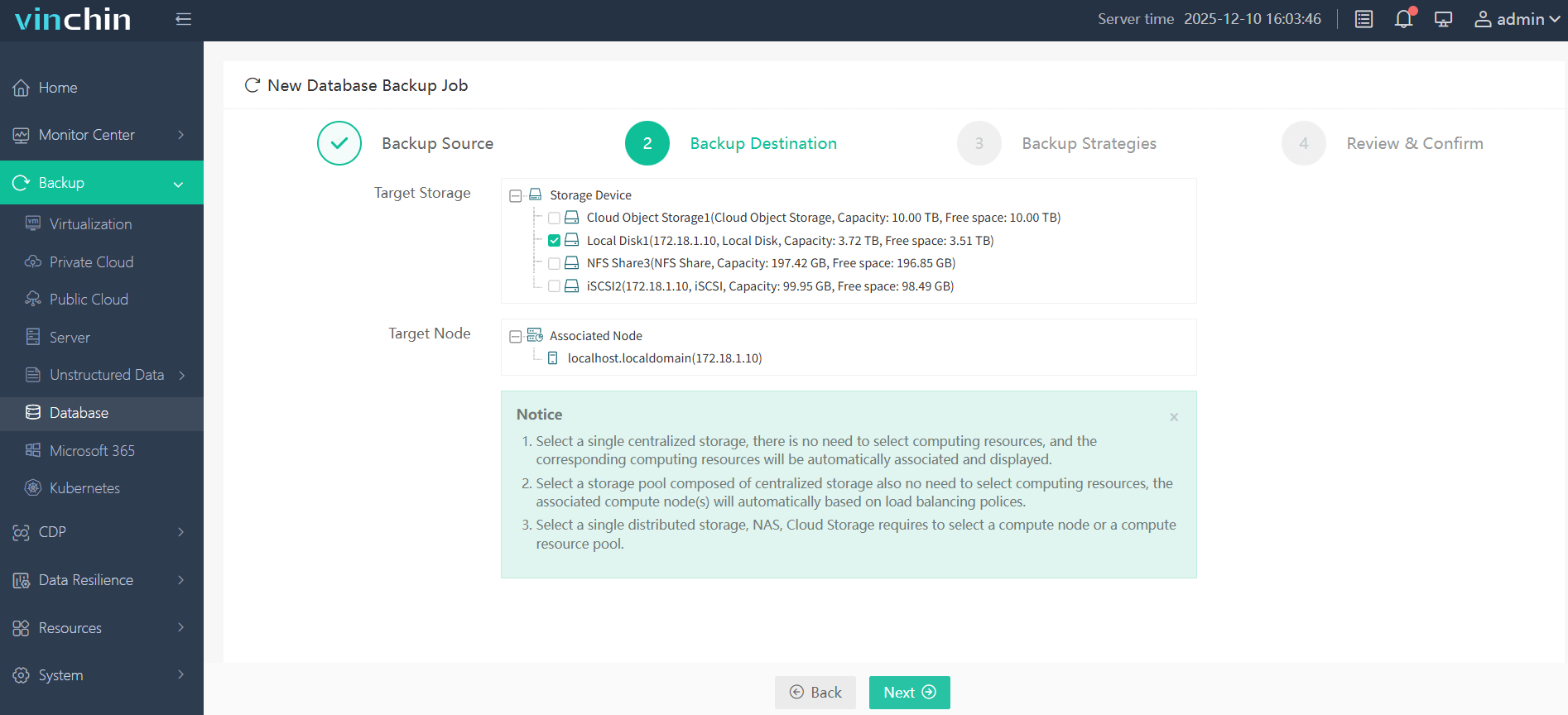

Step 2. Choose backup storage

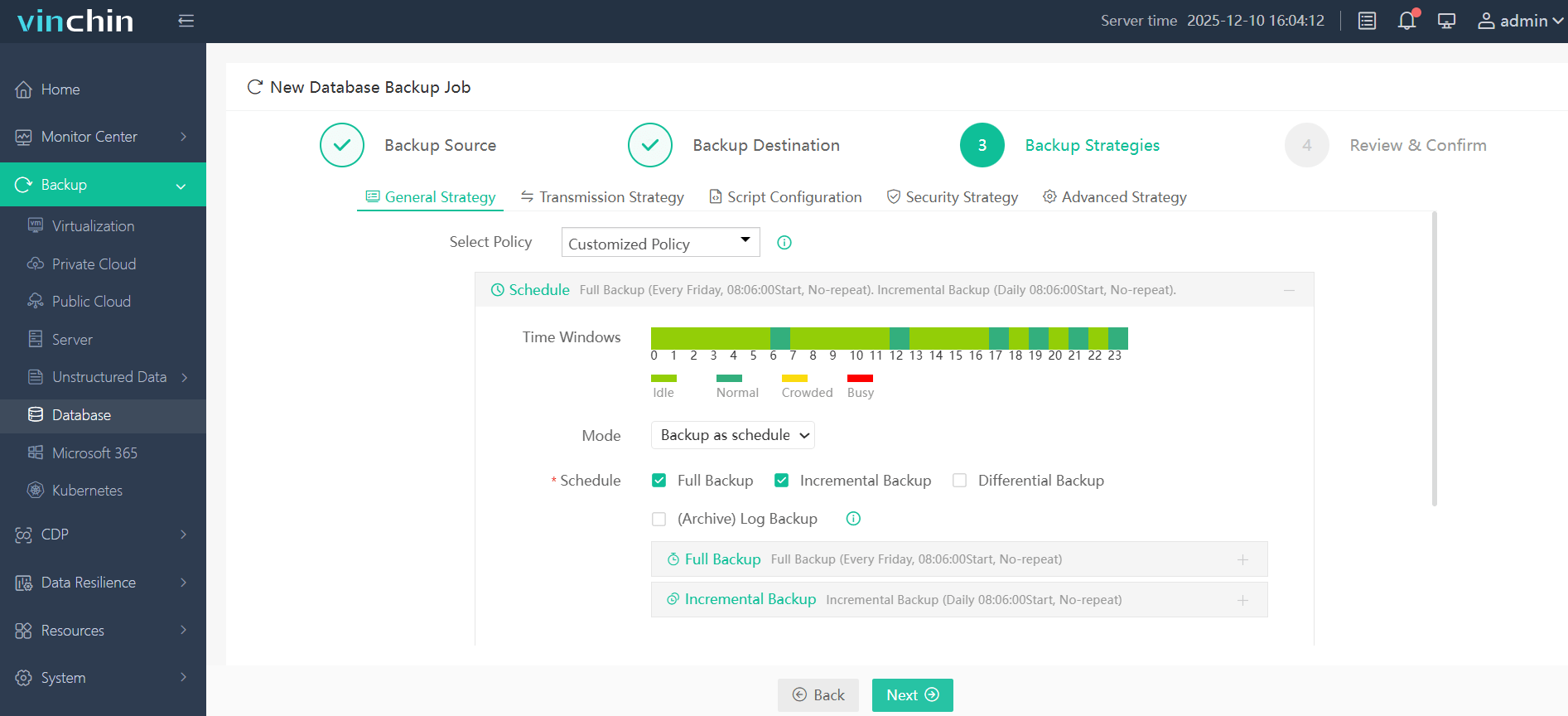

Step 3. Define your backup strategy

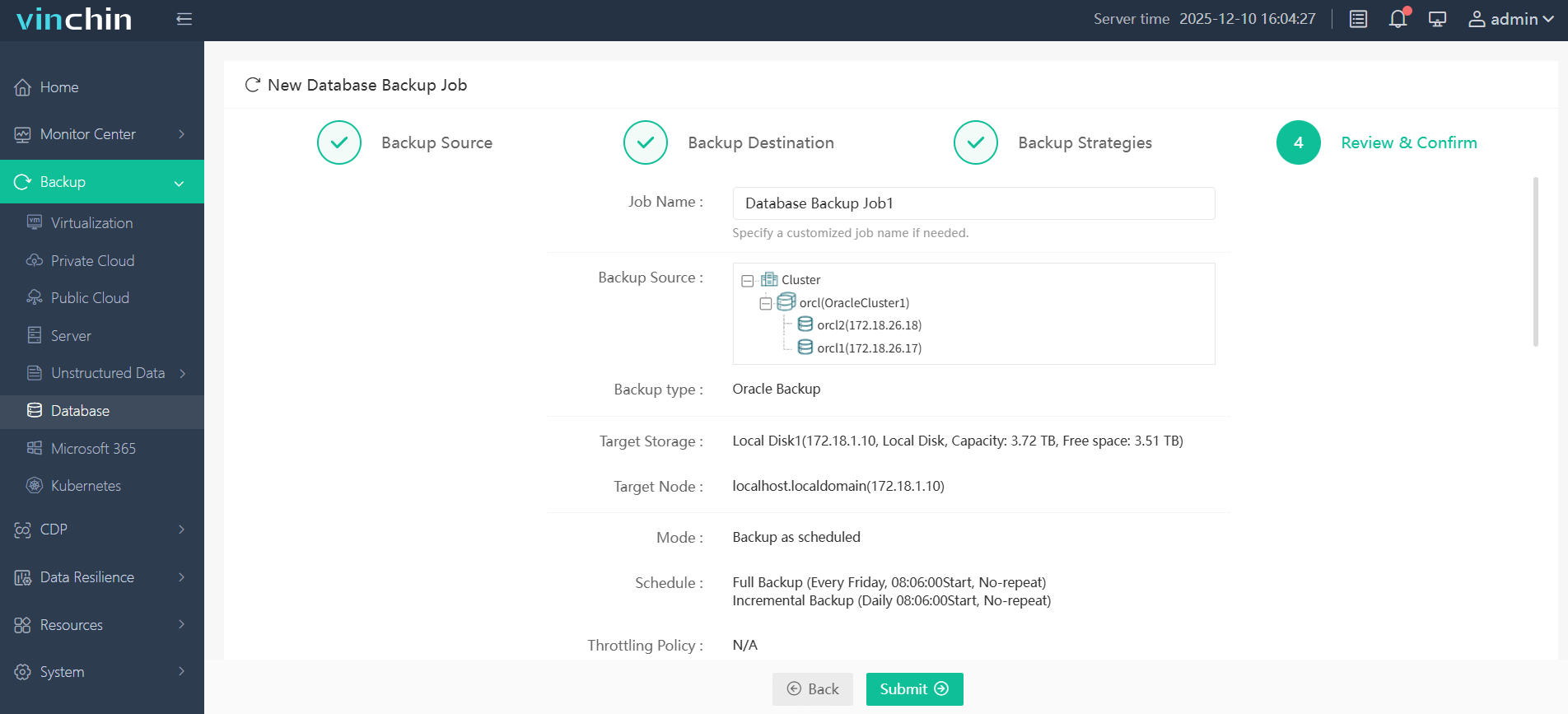

Step 4. Submit the job

Vinchin Backup & Recovery is recognized globally by enterprises for its reliability and ease of use and has excellent customer ratings. It offers a full-featured free trial, valid for 60 days, so you can experience its benefits firsthand.

RMAN Transportable Tablespace FAQs

Q1: Can I use RMAN transportable tablespace if my source contains encrypted spaces?

A1: Yes, but you also need to move the associated keystore and master keys to the new host before you can plug in the tablespaces there.

Q2: What happens if my two platforms differ in endian format?

A2: Use RMAN’s CONVERT command to convert the affected data files to the target platform’s format before plugging them into the target database.

Q3: What causes most frequent failures during TRANSPORT TABLESPACE runs?

A3: The usual causes are insufficient disk space in the AUXILIARY DESTINATION, missing archived redo logs, unresolved containment violations, and privilege gaps. Thorough pre-checks avoid all of them.

Conclusion

RMAN transportable tablespace gives operations teams a fast, reliable way to move large amounts of Oracle data, even across platforms, with less disruption than older row-level export and import methods. Data Pump remains a fallback when you need more control over each step. After the migration, solutions such as Vinchin can automate the protection of the new environment.
