
Managing backups for Oracle databases in the cloud brings unique challenges, especially when you rely on Amazon RDS for Oracle. AWS handles much of the infrastructure work behind the scenes, but you are still responsible for your data's recoverability and portability. So what happens when you need enterprise-grade backup strategies without direct server access? In this guide, we explain how to use familiar tools like RMAN (Recovery Manager) within these limits, cover the native AWS options, and walk through practical steps to protect or migrate your data on AWS RDS for Oracle.

What Is AWS RDS for Oracle?

Amazon Relational Database Service (RDS) is a managed platform that runs popular database engines such as Oracle without requiring you to manage hardware or operating systems yourself. With Amazon RDS for Oracle, AWS takes care of provisioning, software patching, health monitoring, and automated daily backups. It also lets you scale compute and storage from the console or API instead of rebuilding servers.

However, there’s a tradeoff: you do not have operating system access or SYSDBA privileges as you would on a traditional server. Instead of logging into Linux or Windows shells—or running scripts as root—you interact through SQL clients and special stored procedures provided by AWS.

This security model fundamentally changes your toolkit: all advanced management tasks must be performed at the database level using SQL commands or via the AWS Management Console/API—not by accessing underlying filesystems directly.

What Is RMAN in Oracle Backups?

Oracle Recovery Manager (RMAN) is the standard tool used by DBAs worldwide to back up and restore databases efficiently. It supports full backups at block level; incremental backups that capture only changed data; archivelog management; validation routines; point-in-time recovery; and more.

In classic environments where you control both database software and operating system access, running RMAN commands from command line interfaces is routine practice. But inside Amazon RDS for Oracle instances—where OS-level entry points are blocked—direct use of RMAN CLI is not allowed due to security restrictions set by AWS.

Instead, certain core features of RMAN are exposed through PL/SQL procedures inside your database using packages like rdsadmin.rdsadmin_rman_util. This lets you perform many essential operations—but always within boundaries set by Amazon’s managed service model.

Using RMAN with AWS RDS Oracle Databases

Can you use all features of RMAN on your Amazon RDS instance? The answer depends on what you're trying to achieve:

Supported: full/incremental database file backups (to disk), tablespace-level backups via stored procedures, archived redo log backups—all initiated through PL/SQL calls rather than shell scripts.

Not Supported: Restoring an external RMAN backup directly into an existing Amazon RDS instance is not allowed because file placement/control file management are automated by AWS behind the scenes—a manual restore could disrupt this process.

Use Cases: Use these procedures to back up the entire database when you plan to restore it later on a self-managed Oracle server, such as one hosted on Amazon EC2 or in your own datacenter.

Limitations: With no OS access:

All actions must go through stored procedures provided by rdsadmin.

Backup files remain stored locally within the instance's allocated storage until manually deleted or copied off-instance (for example, to S3).

These local files consume storage quota—which may increase costs if left unmanaged over time.

Directly restoring such physical-format backups into another live Amazon RDS instance is not supported; instead consider snapshots or Data Pump exports/imports when moving between managed environments.

For most day-to-day protection needs within Amazon itself—such as disaster recovery—you should rely primarily on automated snapshots plus other native features described below.
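The rules in this section come down to a small decision based on where the data is going and how much of it you need. The Python sketch below encodes them. The Method enum and choose_method function are hypothetical names, and the sketch deliberately ignores cost, downtime, and licensing, which a real decision would also weigh.

```python
from enum import Enum


class Method(Enum):
    """Backup/restore approaches discussed in this article."""
    SNAPSHOT = "RDS snapshot or point-in-time restore"
    DATA_PUMP = "Data Pump export/import"
    RMAN = "RMAN backup through rdsadmin.rdsadmin_rman_util"


def choose_method(target_is_rds: bool, whole_database: bool) -> Method:
    """Pick an approach from two facts about the restore target.

    Simplified rules:
    - Snapshots restore whole instances only, so a schema/table subset
      needs Data Pump wherever it is going.
    - RMAN backup sets cannot be restored into an RDS instance, so a
      whole-database copy that stays on RDS uses snapshots.
    - A whole-database copy leaving RDS (EC2 or on-premises) is the
      case the rdsadmin RMAN procedures are meant for.
    """
    if not whole_database:
        return Method.DATA_PUMP
    if target_is_rds:
        return Method.SNAPSHOT
    return Method.RMAN


if __name__ == "__main__":
    print(choose_method(target_is_rds=False, whole_database=True))
```

Keeping the rules in one function makes the trade-off explicit. For example, Data Pump also works for whole databases leaving RDS, but this sketch prefers RMAN there because that is the case the physical-backup procedures exist for.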

Method 1: Native Backup Options in AWS RDS Oracle

AWS provides built-in ways to safeguard your data without needing third-party tools—or even deep DBA skills—for most operational scenarios.

Automated Backups

By default when launching any new DB instance in Amazon RDS:

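Automated daily snapshots capture all data at a specific point in time, and RDS also backs up transaction logs every five minutes between snapshots

Retention period can be set anywhere from 0 to 35 days based on compliance needs; a value of 0 disables automated backups

Point-in-time recovery lets you restore to any second within this window, up to the latest restorable time; the result is a new DB instance rather than an in-place rollback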

Managed entirely via the AWS Management Console under Databases > [Your Instance] > Maintenance & backups, or programmatically via CLI/API
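To make the retention window concrete, here is a small Python sketch that checks whether a requested restore time falls inside it. It assumes you already know the instance's latest restorable time (the LatestRestorableTime field returned by describe_db_instances). It approximates the earliest restorable time as "now minus the retention period", although the real lower bound depends on when the oldest retained automated backup was taken. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple

MAX_RETENTION_DAYS = 35  # upper limit for automated backup retention

Window = Tuple[datetime, datetime]


def restorable_window(now: datetime, retention_days: int,
                      latest_restorable: datetime) -> Optional[Window]:
    """Approximate point-in-time restore window, or None if PITR is off."""
    if not 0 <= retention_days <= MAX_RETENTION_DAYS:
        raise ValueError("retention must be between 0 and 35 days")
    if retention_days == 0:
        return None  # 0 days disables automated backups, so no PITR
    # Approximation: assumes backups cover the full retention period.
    return now - timedelta(days=retention_days), latest_restorable


def can_restore_to(target: datetime, window: Optional[Window]) -> bool:
    """True if the target time lies inside the (approximate) window."""
    if window is None:
        return False
    earliest, latest = window
    return earliest <= target <= latest


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    window = restorable_window(now, 7, now - timedelta(minutes=5))
    print(can_restore_to(now - timedelta(days=2), window))
```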

Manual Snapshots

You can also create manual snapshots before major upgrades:

1. Go to Databases in the console

2. Select your DB instance

3. Choose Actions > Take snapshot

4. Enter a name then confirm

Manual snapshots are kept until you delete them, outside the automated retention period, which makes them ideal rollback points before risky deployments.
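The same manual snapshot can be taken programmatically. The sketch below uses the boto3 RDS client's create_db_snapshot call. It takes the client as a parameter so it can be exercised with a stand-in object. The naming scheme (instance, label, timestamp) and the helper names are assumptions for illustration. The validation mirrors the RDS snapshot identifier rules: 1 to 255 letters, digits, or hyphens, starting with a letter, with no trailing hyphen and no two consecutive hyphens.

```python
import re
from datetime import datetime

# Letter first, then letters/digits, with single hyphens only between them.
_SNAPSHOT_ID = re.compile(r"[A-Za-z][A-Za-z0-9]*(?:-[A-Za-z0-9]+)*")


def is_valid_snapshot_id(name: str) -> bool:
    """Check a name against the RDS snapshot identifier rules."""
    return len(name) <= 255 and _SNAPSHOT_ID.fullmatch(name) is not None


def take_manual_snapshot(rds_client, instance_id: str, label: str,
                         when: datetime) -> str:
    """Start a manual snapshot and return its identifier.

    rds_client is expected to be boto3.client("rds") or an object with
    the same create_db_snapshot method.
    """
    snapshot_id = f"{instance_id}-{label}-{when:%Y%m%d-%H%M}"
    if not is_valid_snapshot_id(snapshot_id):
        raise ValueError(f"invalid snapshot identifier: {snapshot_id}")
    rds_client.create_db_snapshot(
        DBSnapshotIdentifier=snapshot_id,
        DBInstanceIdentifier=instance_id,
    )
    return snapshot_id
```

With boto3 installed and credentials configured, calling take_manual_snapshot(boto3.client("rds"), "orcl-prod", "pre-upgrade", datetime.now()) would request the snapshot ("orcl-prod" is a placeholder instance name). The call returns as soon as the snapshot is requested, so wait for it to become available (for example with the client's db_snapshot_available waiter) before starting the risky change.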

Restoring from Snapshots

To recover:

1. In Snapshots, select one

2. Click Actions > Restore snapshot

3. Configure new DB settings as needed—this creates a brand-new DB instance cloned from that snapshot

These methods deliver fast whole-instance restores but do not support granular object-level recovery such as single table restores.
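A restore can be scripted the same way. This sketch calls boto3's restore_db_instance_from_db_snapshot, which, like the console flow above, creates a new DB instance rather than overwriting the original. The helper name and the optional instance-class override are illustrative. The client is passed in so the function can be tested without AWS.

```python
from typing import Optional


def restore_snapshot_to_new_instance(rds_client, snapshot_id: str,
                                     new_instance_id: str,
                                     instance_class: Optional[str] = None,
                                     wait: bool = False) -> str:
    """Create a new DB instance from a snapshot and return its identifier."""
    params = {
        "DBInstanceIdentifier": new_instance_id,  # must not clash with an existing instance
        "DBSnapshotIdentifier": snapshot_id,
    }
    if instance_class is not None:
        # Without this, RDS reuses the original instance's class.
        params["DBInstanceClass"] = instance_class
    response = rds_client.restore_db_instance_from_db_snapshot(**params)
    if wait:
        # Block until the new instance reports "available".
        rds_client.get_waiter("db_instance_available").wait(
            DBInstanceIdentifier=new_instance_id)
    return response["DBInstance"]["DBInstanceIdentifier"]
```

Because the restored instance gets its own endpoint, applications must be repointed to it (or instances renamed) once it is available.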

Method 2: Using Data Pump for Logical Backups in AWS RDS Oracle

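If flexibility matters, for example when migrating only selected schemas or tables, Oracle Data Pump (expdp/impdp) also works with Amazon RDS for Oracle. Because there is no host login, you run the expdp/impdp clients from another machine (or call the DBMS_DATAPUMP PL/SQL package), and the dump files are written to a directory object on the RDS instance.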

Data Pump creates logical exports containing schema objects which can be imported elsewhere—even across versions/platforms if compatible—with fine-grained control over what gets moved each time.

Exporting Data

To export a schema:

```sql
-- DATA_PUMP_DIR already exists on RDS for Oracle. Only run this block if you
-- want an additional directory object (EXPORT_DIR is an example name).
BEGIN
  rdsadmin.rdsadmin_util.create_directory(p_directory_name => 'EXPORT_DIR');
END;
/
```

```shell
# Run from a client host as the master user; replace credentials, TNS alias and schema names
expdp admin/password@dbinstance schemas=HR directory=DATA_PUMP_DIR dumpfile=hr_schema.dmp logfile=hr_export.log
```

The resulting dump file is written to DATA_PUMP_DIR on the instance's own storage. You cannot browse that storage from outside. You reach it through SQL (for example rdsadmin.rds_file_util.listdir) or by copying files out with the S3 procedures described below.

Importing Data

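To import into another environment, first make the dump file available in the target's DATA_PUMP_DIR (for example by downloading it from S3 with rdsadmin.rdsadmin_s3_tasks.download_from_s3, or copying it over a database link with DBMS_FILE_TRANSFER), then run: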

```shell
impdp admin/password@targetdb schemas=HR directory=DATA_PUMP_DIR dumpfile=hr_schema.dmp logfile=hr_import.log remap_schema=HR:NEW_HR
```

This method works well whether moving between cloud accounts/environments—or simply restoring selected objects after accidental deletion.
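Keeping Data Pump options in a parameter file avoids long command lines. It also keeps the password off the command line, since expdp and impdp prompt for it when the connect string omits it. The Python sketch below renders such a file from the values used in the commands above. build_parfile is a hypothetical helper, and it only covers the handful of parameters shown here.

```python
from typing import Mapping, Optional, Sequence


def build_parfile(schemas: Sequence[str], dumpfile: str, logfile: str,
                  directory: str = "DATA_PUMP_DIR",
                  remap_schema: Optional[Mapping[str, str]] = None) -> str:
    """Render a Data Pump parameter file, one KEY=VALUE per line."""
    if not schemas:
        raise ValueError("at least one schema is required")
    lines = [
        f"SCHEMAS={','.join(schemas)}",
        f"DIRECTORY={directory}",
        f"DUMPFILE={dumpfile}",
        f"LOGFILE={logfile}",
    ]
    # REMAP_SCHEMA is an import option; it may appear once per mapping.
    for source, target in (remap_schema or {}).items():
        lines.append(f"REMAP_SCHEMA={source}:{target}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_parfile(["HR"], "hr_schema.dmp", "hr_import.log",
                        remap_schema={"HR": "NEW_HR"}))
```

Saved as hr_import.par (a hypothetical file name), the import would then be started with impdp admin@targetdb parfile=hr_import.par, and impdp would prompt for the password.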

Transferring Dump Files to Amazon S3

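You will often want copies outside the instance's storage for long-term retention, offsite safety, or migration. Amazon S3 integration handles this, provided the instance's option group includes the S3_INTEGRATION option and an IAM role with access to the bucket is associated with the instance: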

```sql
-- Upload hr_schema.dmp from DATA_PUMP_DIR to s3://my-backup-bucket/oracle_exports/
-- p_prefix    = name prefix of the files to upload from the directory
-- p_s3_prefix = key prefix (folder) in the bucket
SELECT rdsadmin.rdsadmin_s3_tasks.upload_to_s3(
         p_bucket_name    => 'my-backup-bucket',
         p_prefix         => 'hr_schema.dmp',
         p_s3_prefix      => 'oracle_exports/',
         p_directory_name => 'DATA_PUMP_DIR') AS task_id
  FROM dual;
```

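upload_to_s3 starts an asynchronous task and returns its task ID; you can follow its progress in the log file dbtask-<task_id>.log in the BDUMP directory, readable with rdsadmin.rds_file_util.read_text_file. The procedure copies the files into your S3 bucket, making them available outside the instance, but the originals stay in DATA_PUMP_DIR and keep using storage until you remove them.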
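If you drive these steps from a script, it helps to generate the SQL with each parameter in the right role and the literals safely quoted. The Python sketch below builds the upload statement and the matching task-log query. The helper names are hypothetical, and it assumes the dbtask-<task_id>.log naming in the BDUMP directory that RDS uses for S3 transfer tasks.

```python
def sql_literal(value: str) -> str:
    """Quote a string as an Oracle SQL literal (single quotes doubled)."""
    return "'" + value.replace("'", "''") + "'"


def upload_to_s3_sql(bucket: str, file_prefix: str, s3_prefix: str,
                     directory: str = "DATA_PUMP_DIR") -> str:
    """SELECT statement that starts an asynchronous upload task.

    file_prefix selects files in the directory; s3_prefix is the key
    prefix they are written under in the bucket.
    """
    return (
        "SELECT rdsadmin.rdsadmin_s3_tasks.upload_to_s3(\n"
        f"  p_bucket_name    => {sql_literal(bucket)},\n"
        f"  p_prefix         => {sql_literal(file_prefix)},\n"
        f"  p_s3_prefix      => {sql_literal(s3_prefix)},\n"
        f"  p_directory_name => {sql_literal(directory)}) AS task_id\n"
        "FROM dual"
    )


def task_log_sql(task_id: str) -> str:
    """Query that reads the log of an S3 transfer task."""
    log_name = sql_literal(f"dbtask-{task_id}.log")
    return ("SELECT text FROM TABLE(rdsadmin.rds_file_util.read_text_file("
            f"'BDUMP', {log_name}))")
```

Generating the statements in one place keeps p_prefix (which local files) and p_s3_prefix (where in the bucket) from being swapped, a mistake that silently uploads nothing or uploads to the wrong key.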

Using RMAN Procedures on Amazon RDS for Oracle

Important: Use these PL/SQL-based procedures mainly when creating physical-format backups destined for restoration onto non-RDS targets such as EC2-hosted standalone servers or traditional datacenters—not routine day-to-day restores within managed service boundaries!

Let’s see how supported operations work step-by-step:

Full Database Backup Example

```sql
BEGIN
  rdsadmin.rdsadmin_rman_util.backup_database_full(
    p_owner               => 'SYS',             -- owner of the directory object
    p_directory_name      => 'DATA_PUMP_DIR',   -- directory that receives the backup files
    p_tag                 => 'FULL_DB_BACKUP',  -- RMAN tag that identifies this backup
    p_rman_to_dbms_output => FALSE);            -- TRUE also sends RMAN output to DBMS_OUTPUT
END;
/
```

This backs up every datafile into DATA_PUMP_DIR. You may then copy those files off-instance using S3 integration.

Incremental Backup Example

```sql
BEGIN
  rdsadmin.rdsadmin_rman_util.backup_database_incremental(
    p_owner               => 'SYS',
    p_directory_name      => 'DATA_PUMP_DIR',
    p_level               => 1,  -- 0 = incremental base, 1 = differential incremental
    p_tag                 => 'MY_INCREMENTAL_BACKUP',
    p_rman_to_dbms_output => FALSE);
END;
/
```

Set p_level to 0 for the base backup that later incrementals build on, or to 1 for a differential incremental that captures only blocks changed since the most recent level 0 or level 1 backup.
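The level you choose matters at restore time. With differential level 1 backups, a restore needs the most recent level 0 taken before the target time plus every level 1 taken after it, and then archived redo logs to roll forward to the exact point. The Python sketch below models that rule. BackupRun and restore_chain are hypothetical names. In RMAN, a full backup that is not a level 0 cannot serve as the base of an incremental chain, so only level 0 runs count as bases here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Sequence


@dataclass(frozen=True)
class BackupRun:
    tag: str
    level: int          # 0 = incremental base, 1 = differential incremental
    completed: datetime


def restore_chain(backups: Sequence[BackupRun], target: datetime) -> List[BackupRun]:
    """Backups needed to restore to `target` with differential incrementals.

    The chain is the latest level 0 completed at or before `target`,
    followed by every level 1 completed after it (up to `target`).
    """
    usable = sorted((b for b in backups if b.completed <= target),
                    key=lambda b: b.completed)
    base_positions = [i for i, b in enumerate(usable) if b.level == 0]
    if not base_positions:
        raise ValueError("no level 0 backup completed before the target time")
    # Everything after the latest level 0 is a level 1 built on it.
    return usable[base_positions[-1]:]
```

The same rule tells you which files must survive any cleanup: deleting the level 0 base makes every later level 1 in its chain useless.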

Archive Log Backup Example

First create target directory:

```sql
EXEC rdsadmin.rdsadmin_util.create_directory(p_directory_name => 'ARCHIVE_LOG_BACKUP');
```

Then back up the archived redo logs:

```sql
BEGIN
  rdsadmin.rdsadmin_rman_util.backup_archivelog_all(
    p_owner               => 'SYS',
    p_directory_name      => 'ARCHIVE_LOG_BACKUP',
    p_tag                 => 'LOG_BACKUP',
    p_rman_to_dbms_output => FALSE);
END;
/
```

> Note: These steps let you generate physical-format backup sets compatible with classic restores elsewhere—but never directly usable by another live Amazon-managed instance.

Managing and Pruning RMAN Backup Files

Backup jobs consume valuable local storage quota until cleaned up! Here’s how admins typically manage lifecycle safely:

1. List contents of working directories regularly:

```sql
SELECT * FROM TABLE(rdsadmin.rds_file_util.listdir('DATA_PUMP_DIR')) ORDER BY mtime;
```

2. Cross-reference job status and history so nothing critical gets deleted prematurely:

```sql
SELECT * FROM V$RMAN_BACKUP_JOB_DETAILS ORDER BY START_TIME DESC;
```

3. Remove obsolete files carefully after confirming they're no longer required:

```sql
EXEC UTL_FILE.FREMOVE('DATA_PUMP_DIR', '<filename>');
```

Always double-check filenames and dates before deleting anything. Removing a backup piece that a restore still depends on, such as part of the level 0 base for later incrementals, breaks your ability to restore from that backup set.
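The selection step can be made explicit before anything is deleted. The Python sketch below takes rows shaped like the filename, type, and mtime columns returned by rds_file_util.listdir; how you fetch those rows is left open. It applies an age cutoff and skips anything you mark as protected, for example the pieces of the current level 0 backup. It returns the names you would then pass to UTL_FILE.FREMOVE one at a time. files_to_prune is a hypothetical helper.

```python
from datetime import datetime, timedelta
from typing import AbstractSet, Iterable, List, Tuple

Row = Tuple[str, str, datetime]  # (filename, type, mtime) as listed by listdir


def files_to_prune(listing: Iterable[Row], now: datetime, keep_days: int,
                   protected: AbstractSet[str] = frozenset()) -> List[str]:
    """Names of plain files older than keep_days that are not protected."""
    cutoff = now - timedelta(days=keep_days)
    return sorted(
        name
        for name, kind, mtime in listing
        if kind == "file" and mtime < cutoff and name not in protected
    )
```

Producing a reviewable list first, rather than deleting inside the loop, leaves room for the cross-check against V$RMAN_BACKUP_JOB_DETAILS described in step 2.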

Vinchin: A Unified Solution for Diverse IT Environments

Given these complexities with native tools and limited direct access in cloud-managed databases, organizations often seek more flexible solutions that span multiple platforms and workloads. Vinchin stands out here—it is compatible with over 19 virtualization platforms including VMware, Hyper-V, Proxmox, along with support for physical servers, various databases, and both on-premises/cloud file storage systems, making it ideal for diverse IT architectures found across industries today.

When migrations are required, Vinchin Backup & Recovery delivers highly flexible migration capabilities, allowing seamless full-system transfers across any supported virtual, physical, or cloud hosts with minimal effort. For mission-critical workloads running virtually or physically, its combination of real-time backup and replication ensures additional recovery points and enables automated failover, significantly reducing both Recovery Point Objective (RPO) and Recovery Time Objective (RTO).

To guarantee reliability during disaster events, Vinchin offers features like automatic integrity checks of backup data plus isolated environment recoverability validation—ensuring restorability whenever needed. It also helps build robust DR systems through automated retention policies, cloud/tape archiving, remote replicas, or DR centers—all designed for rapid resumption of business operations after disruptions.

With its browser-based (B/S) web console and wizard-driven workflows, even complex jobs become straightforward:

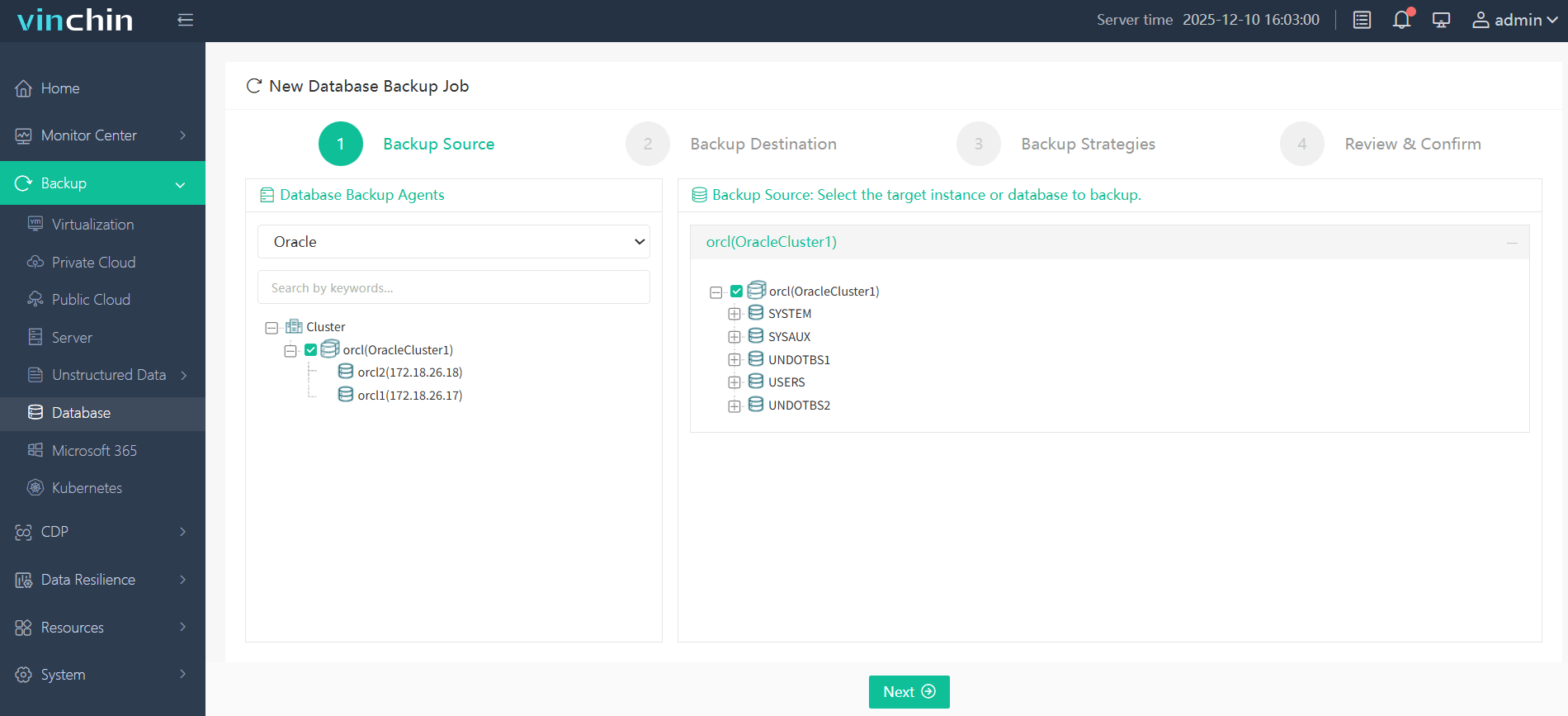

The intuitive web console makes safeguarding Oracle databases straightforward:

Step 1. Select the Oracle database to back up

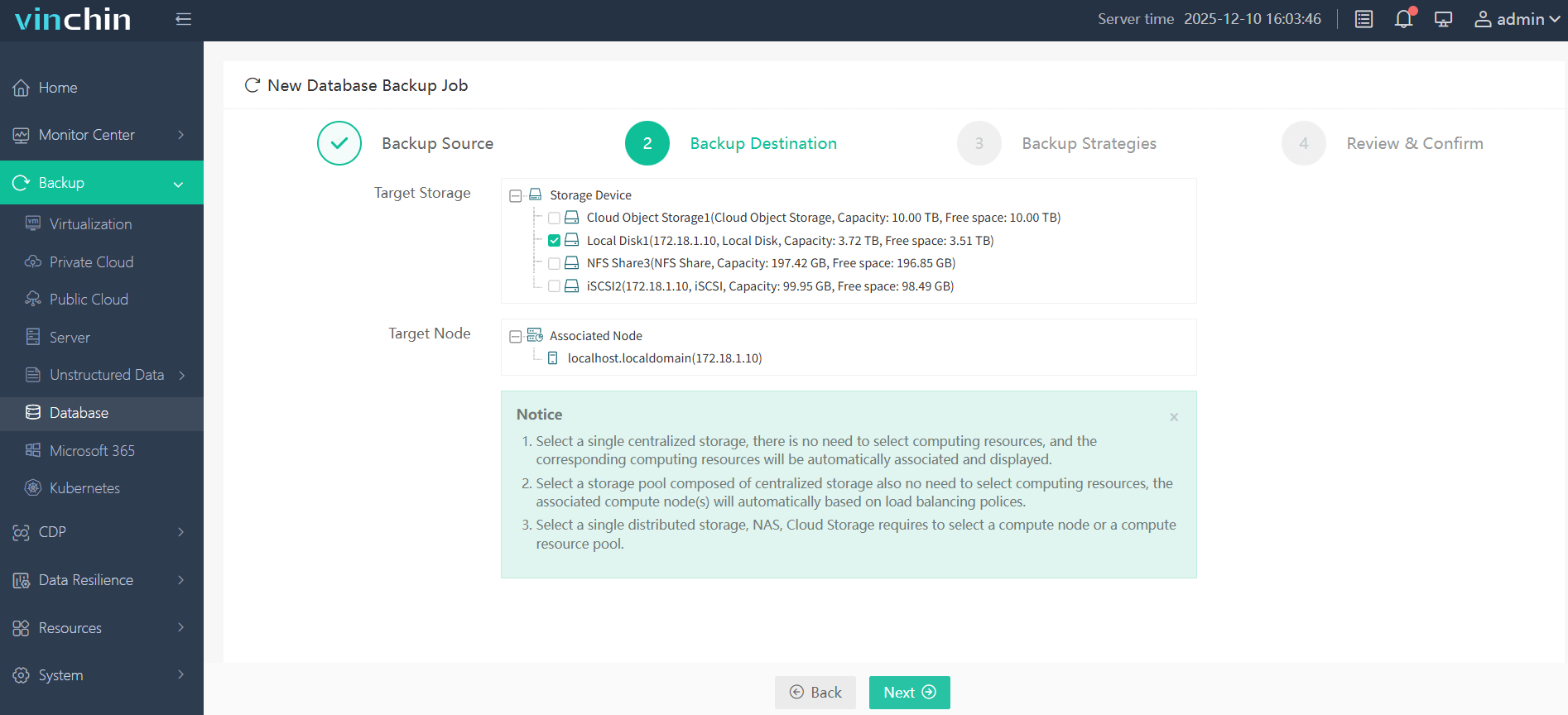

Step 2. Choose the backup storage

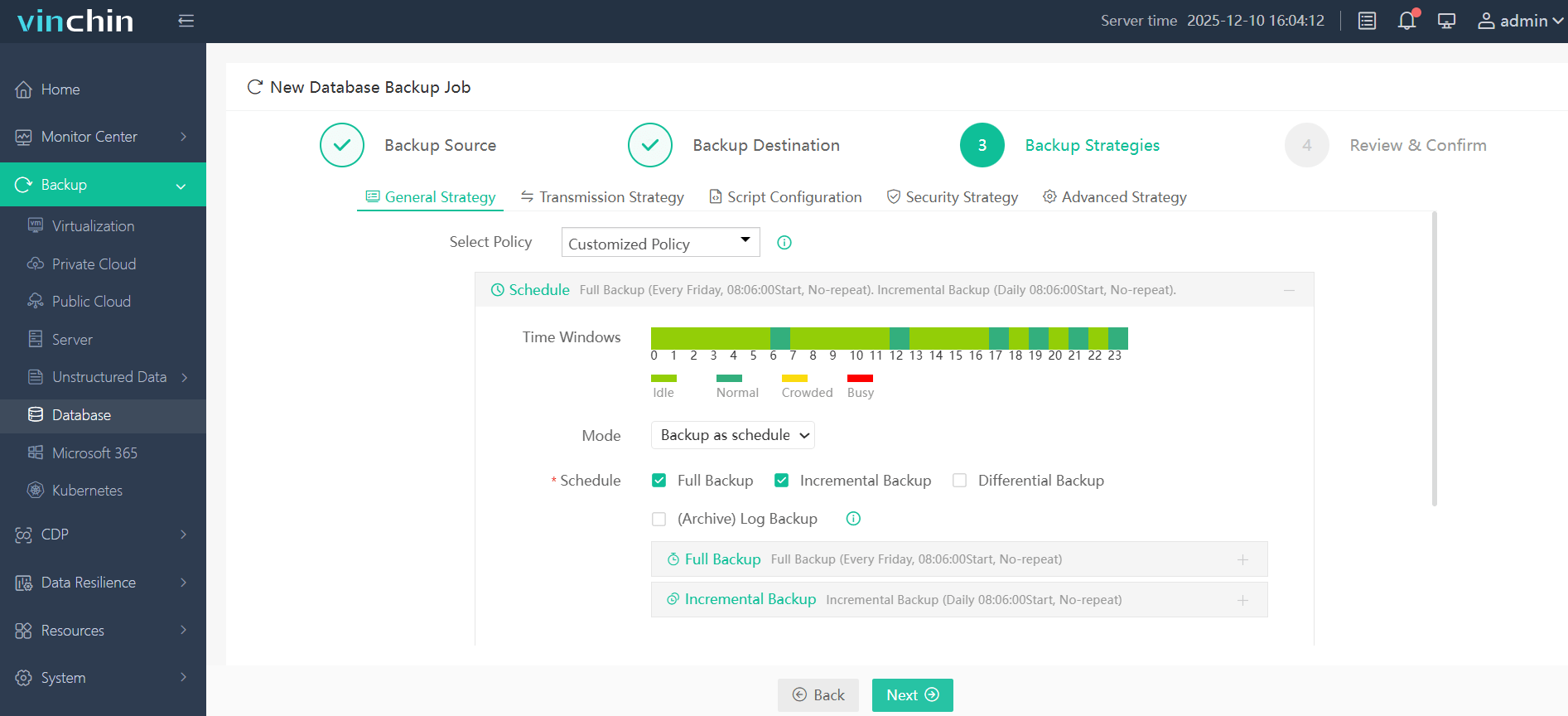

Step 3. Define the backup strategy

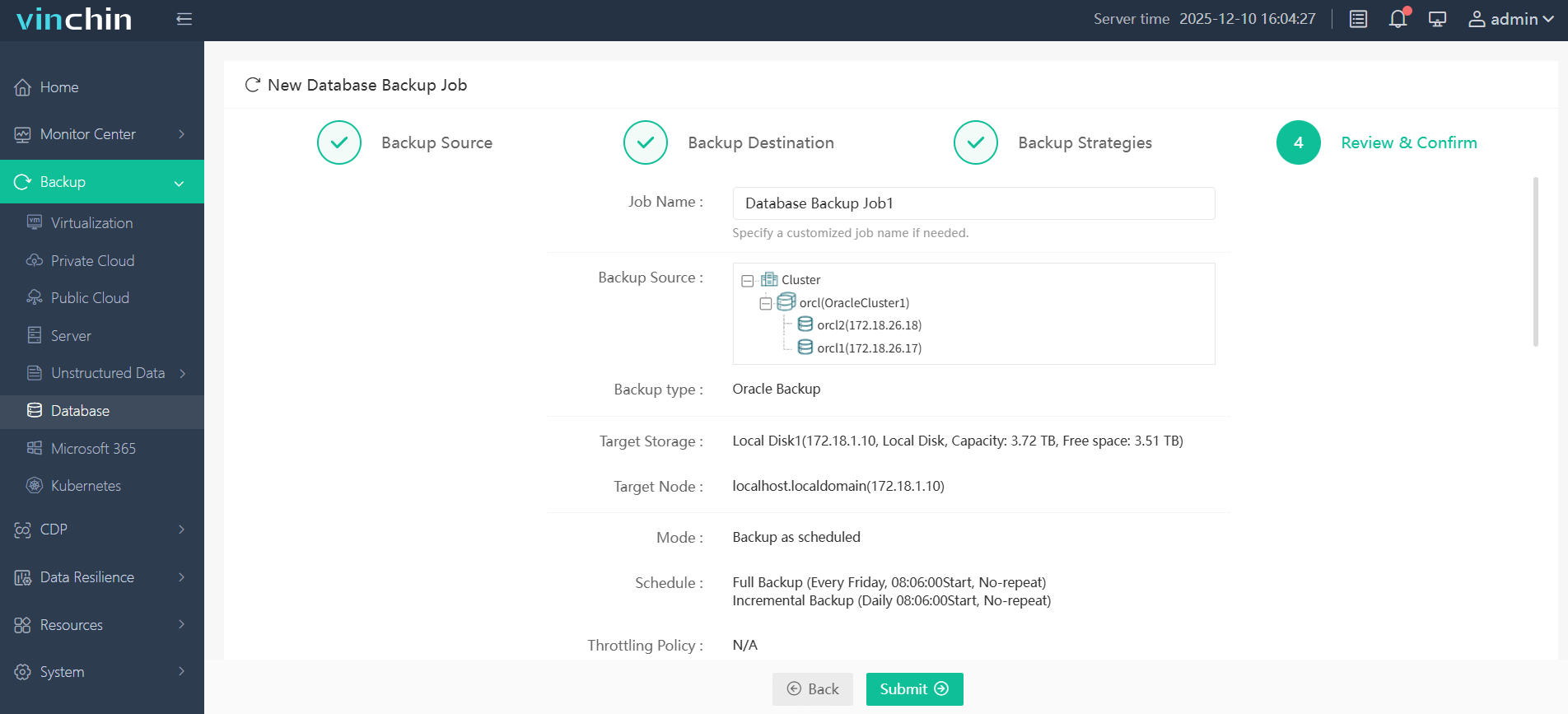

Step 4. Submit the job

A free 60-day trial is available alongside comprehensive documentation and responsive support engineers who help ensure smooth deployment tailored precisely to your environment's needs.

AWS RDS Oracle RMAN FAQs

Q1: Can I schedule automatic deletion of old exported dump files?

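Not with a built-in setting. Files in DATA_PUMP_DIR have no retention policy, so remove old dump files with UTL_FILE.FREMOVE after verifying they're no longer needed. If you want this automated, you have to script it yourself, for example as a DBMS_SCHEDULER job that runs the same checks.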

Q2: Is it possible to encrypt my Data Pump exports natively?

Yes. expdp supports the ENCRYPTION, ENCRYPTION_PASSWORD, and related parameters, but encrypting dump file contents requires the Oracle Advanced Security option. Use them only if your Oracle license, including how it is provided through AWS, covers that option.

Q3: What happens if my DATA_PUMP_DIR fills up during export?

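The export stops with an error once the instance's allocated storage is exhausted, because DATA_PUMP_DIR lives on that same storage, and a full volume can affect the database itself. Before large jobs, compare the expected dump size (expdp ESTIMATE_ONLY=YES reports an estimate) with free space, for example the FreeStorageSpace metric in Amazon CloudWatch.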

Conclusion

Choosing among backup options for AWS RDS for Oracle depends on whether you need fast full-instance rollback (snapshots), selective object migration (Data Pump), or cross-platform portability (RMAN). For complex hybrid setups spanning clouds and datacenters, Vinchin can unify protection so every workload stays safe wherever it lives.
