- What Is Big Data?
- What Is Hadoop?
- Why Use Hadoop for Big Data?
- Protecting Big Data and Hadoop with Vinchin Backup & Recovery
- Big Data And Hadoop: FAQs
- Conclusion

Are you struggling to manage exploding volumes of business data? You’re not alone: by common industry estimates, data volumes worldwide roughly double every two years. Traditional systems can’t keep up with this growth or complexity. That’s why big data and Hadoop have become essential tools for modern IT operations teams. In this guide, we’ll break down what these technologies mean for your daily work and how you can protect your critical information as it grows.

What Is Big Data?

Big data describes datasets that are too large or complex for traditional databases to handle efficiently. Experts often define big data by five key characteristics—the “five Vs”: volume (amount), velocity (speed), variety (types), veracity (accuracy), and value (usefulness).

Volume refers to massive amounts of information from sources like sensors or social media.

Velocity means new data arrives fast—sometimes thousands of records per second.

Variety covers different formats: structured tables from databases; semi-structured logs; unstructured images or videos.

Veracity highlights concerns about accuracy—how do you trust all this raw input?

Value focuses on extracting insights that drive decisions or profits.

For operations administrators, managing big data means more than just storage—it involves ensuring quality control across diverse sources while keeping everything secure. Poor governance can lead to compliance risks or unreliable analytics results.

Organizations use big data analytics to predict customer trends, detect fraud quickly, optimize supply chains—or even monitor equipment health in real time. But without scalable infrastructure like Hadoop behind the scenes, these projects would stall under their own weight.

What Is Hadoop?

Hadoop is an open-source software framework built specifically for storing and processing big data across clusters of computers. It solves many problems that traditional databases face when dealing with petabytes of information spread across hundreds—or thousands—of servers.

Let’s look at its core components:

Hadoop Distributed File System (HDFS): This system splits files into blocks stored across multiple machines (“DataNodes”). A central server called the “NameNode” keeps track of where each block lives.

MapReduce: This engine breaks large jobs into smaller map tasks that many nodes process in parallel, then combines the intermediate results in a reduce step.

YARN (Yet Another Resource Negotiator): YARN manages resources cluster-wide so jobs don’t compete unfairly for CPU or memory.

Hadoop Common: These libraries support all other modules with essential utilities.
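To make the MapReduce idea more concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce phases, using a word count as the example job. It is plain Python rather than Hadoop's actual API, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are illustrative choices, not anything Hadoop defines. On a real cluster, the map and reduce calls would run on different nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key, as the framework does between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence on one machine."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "big clusters"]))
```

Because each line is mapped independently and each key is reduced independently, both phases can be spread across many workers, which is where the parallel speedup comes from.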

Why does this matter? If one DataNode fails—a common event in large clusters—HDFS automatically recovers using copies stored elsewhere (“replication,” usually three times). This design makes Hadoop highly reliable even as hardware fails unpredictably.
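The arithmetic behind blocks and replication is easy to sketch. The example below assumes a 128 MiB block size (a common default in recent Hadoop releases, though not stated in this article and configurable per cluster) and the replication factor of three mentioned above. The function name `hdfs_footprint` is hypothetical.

```python
from typing import Tuple

MIB = 1024 * 1024


def hdfs_footprint(
    file_size_bytes: int,
    block_size_bytes: int = 128 * MIB,  # assumed default; check your cluster
    replication: int = 3,               # "usually three times"
) -> Tuple[int, int, int]:
    """Return (block_count, replica_count, raw_bytes_stored) for one file."""
    if file_size_bytes < 0 or block_size_bytes <= 0 or replication <= 0:
        raise ValueError("sizes must be non-negative and factors positive")
    # Ceiling division: a partially filled last block still counts as a block.
    blocks = (file_size_bytes + block_size_bytes - 1) // block_size_bytes
    replicas = blocks * replication
    # The last block only occupies its actual length, so raw usage is
    # simply the file size multiplied by the replication factor.
    raw_bytes = file_size_bytes * replication
    return blocks, replicas, raw_bytes


if __name__ == "__main__":
    blocks, replicas, raw = hdfs_footprint(1024 * MIB)
    print(blocks, replicas, raw // MIB)
```

Under these assumptions, a 1 GiB file becomes 8 blocks and 24 block replicas, and it consumes 3 GiB of raw disk across the cluster. That overhead is worth factoring into capacity planning.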

You can deploy Hadoop on-premises using commodity servers or run it on cloud platforms if you need elastic scaling without buying hardware upfront. Either way, it handles structured tables from relational databases alongside logs or multimedia files—all within one ecosystem.

Resource management becomes crucial as clusters grow larger; balancing workloads between nodes requires careful tuning so no single machine becomes a bottleneck. Security also matters: configuring Kerberos authentication helps prevent unauthorized access while encryption protects sensitive information both at rest and during transmission.

Why Use Hadoop for Big Data?

Why do so many organizations choose Hadoop as their foundation for big data? Let’s explore its advantages step by step:

First comes scalability—you can start small but add more nodes easily as your needs grow without replacing existing infrastructure. This flexibility keeps costs predictable since you avoid expensive proprietary appliances upfront.

Second is fault tolerance: HDFS replicates every block across several machines so losing one node doesn’t mean losing your only copy of important files—a lifesaver during hardware failures common at scale!

Third is speed through parallelism: MapReduce divides huge jobs among dozens or even hundreds of worker nodes that process chunks simultaneously and then merge the results. For example, analyzing terabytes of data might take hours rather than the days an older system relying on single-threaded queries would need.

Fourth comes flexibility: Hadoop handles not just structured tables but also logs from web servers or sensor readings from IoT devices, without requiring a rigid schema ahead of time. Structure is applied when the data is read (“schema-on-read”).
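Schema-on-read can be illustrated with a short sketch: raw records are stored exactly as they arrive, and a schema is applied only when a job reads them. The schema, field names, and the `read_with_schema` helper below are hypothetical and use plain Python rather than any Hadoop tool.

```python
import json
from typing import Any, Callable, Dict, Iterable, Iterator, Optional

# Hypothetical schema: field name -> converter applied at read time.
SENSOR_SCHEMA: Dict[str, Callable[[Any], Any]] = {
    "device_id": str,
    "temperature": float,
}


def read_with_schema(
    raw_lines: Iterable[str],
    schema: Dict[str, Callable[[Any], Any]],
) -> Iterator[Dict[str, Optional[Any]]]:
    """Parse raw JSON lines and project them onto the schema while reading."""
    for line in raw_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # malformed input is skipped, not rejected at write time
        if not isinstance(record, dict):
            continue
        row: Dict[str, Optional[Any]] = {}
        for field, convert in schema.items():
            value = record.get(field)
            try:
                row[field] = convert(value) if value is not None else None
            except (TypeError, ValueError):
                row[field] = None  # value present but not convertible
        yield row


if __name__ == "__main__":
    raw = [
        '{"device_id": "a1", "temperature": "21.5", "extra": 1}',
        "not json",
        '{"device_id": "b2"}',
    ]
    for row in read_with_schema(raw, SENSOR_SCHEMA):
        print(row)
```

The stored data never has to change when the schema does. A different job can read the same raw lines with a different schema, which is the trade-off schema-on-read makes against upfront validation.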

Fifth is ecosystem richness: tools like Hive let admins write SQL-like queries over massive datasets, while Spark enables advanced analytics, including machine learning. Both can run on top of HDFS storage, and the SQL-style workflows feel familiar to database professionals moving into big data roles.

To put things in perspective:

| Feature | Traditional Database | Hadoop |
|---|---|---|
| Scalability | Limited | Virtually unlimited |
| Fault Tolerance | Basic | Built-in via replication |
| Supported Data Types | Mostly structured | Structured + unstructured |
| Cost | High | Commodity hardware/cloud |

In summary: if your organization faces rapid growth in both size and diversity of digital assets—from spreadsheets to video streams—big data and Hadoop offer an affordable path forward that scales with demand rather than against it.

Protecting Big Data and Hadoop with Vinchin Backup & Recovery

While robust storage architectures like Hadoop HDFS provide inherent resilience, comprehensive backup remains essential for true data protection. Vinchin Backup & Recovery is an enterprise-grade solution purpose-built for safeguarding mainstream file storage—including Hadoop HDFS environments—as well as Windows/Linux file servers, NAS devices, and S3-compatible object storage. Specifically optimized for large-scale platforms like Hadoop HDFS, Vinchin Backup & Recovery delivers exceptionally fast backup speeds that surpass competing products thanks to advanced technologies such as simultaneous scanning/data transfer and merged file transmission.

Among its extensive capabilities, five stand out as particularly valuable for protecting critical big-data assets: incremental backup (capturing only changed files), wildcard filtering (targeting specific datasets), multi-level compression (reducing space usage), cross-platform restore (recovering backups onto any supported target including other file servers/NAS/Hadoop/object storage), and integrity check (verifying backups remain unchanged). Together these features ensure efficient operations while maximizing security and flexibility across diverse infrastructures.

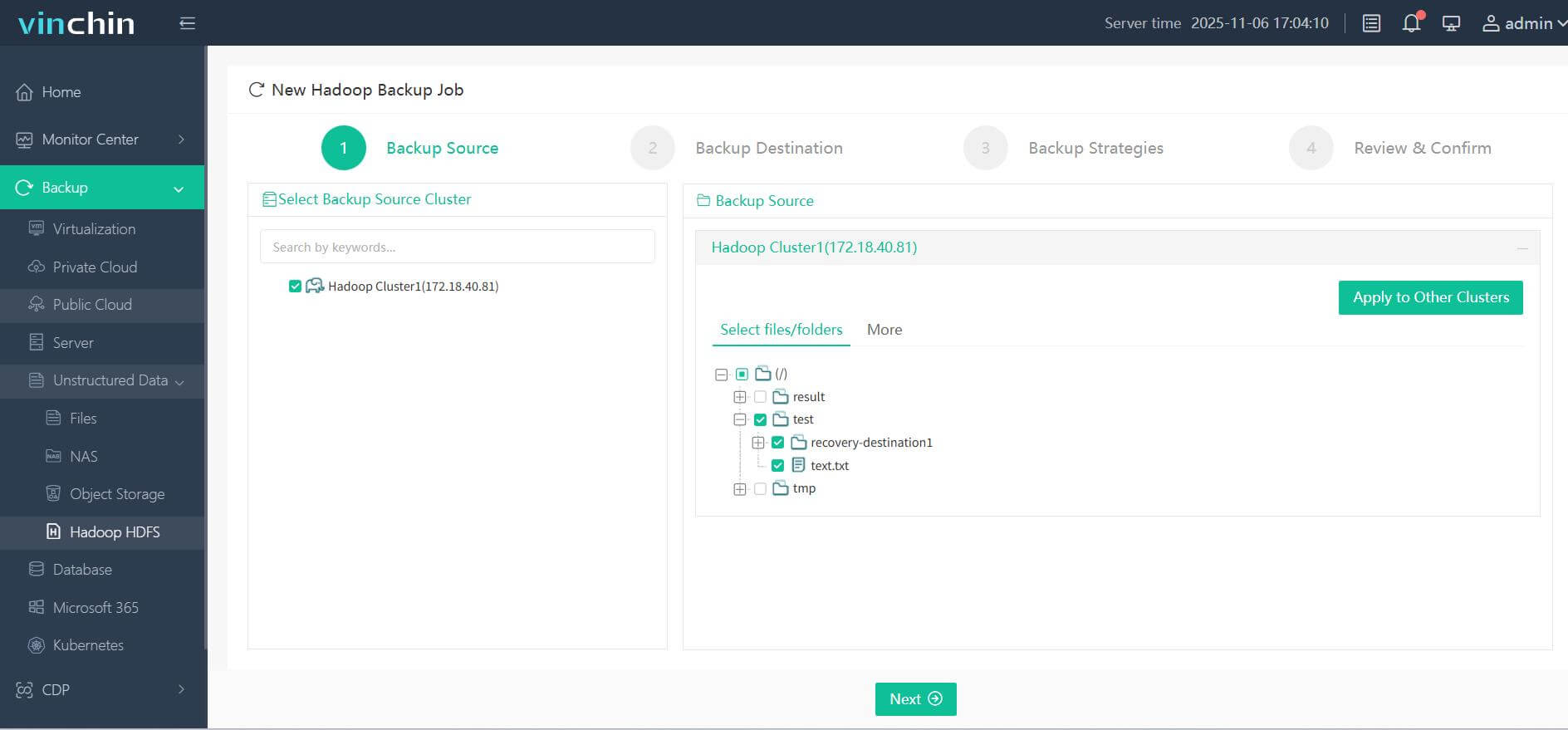

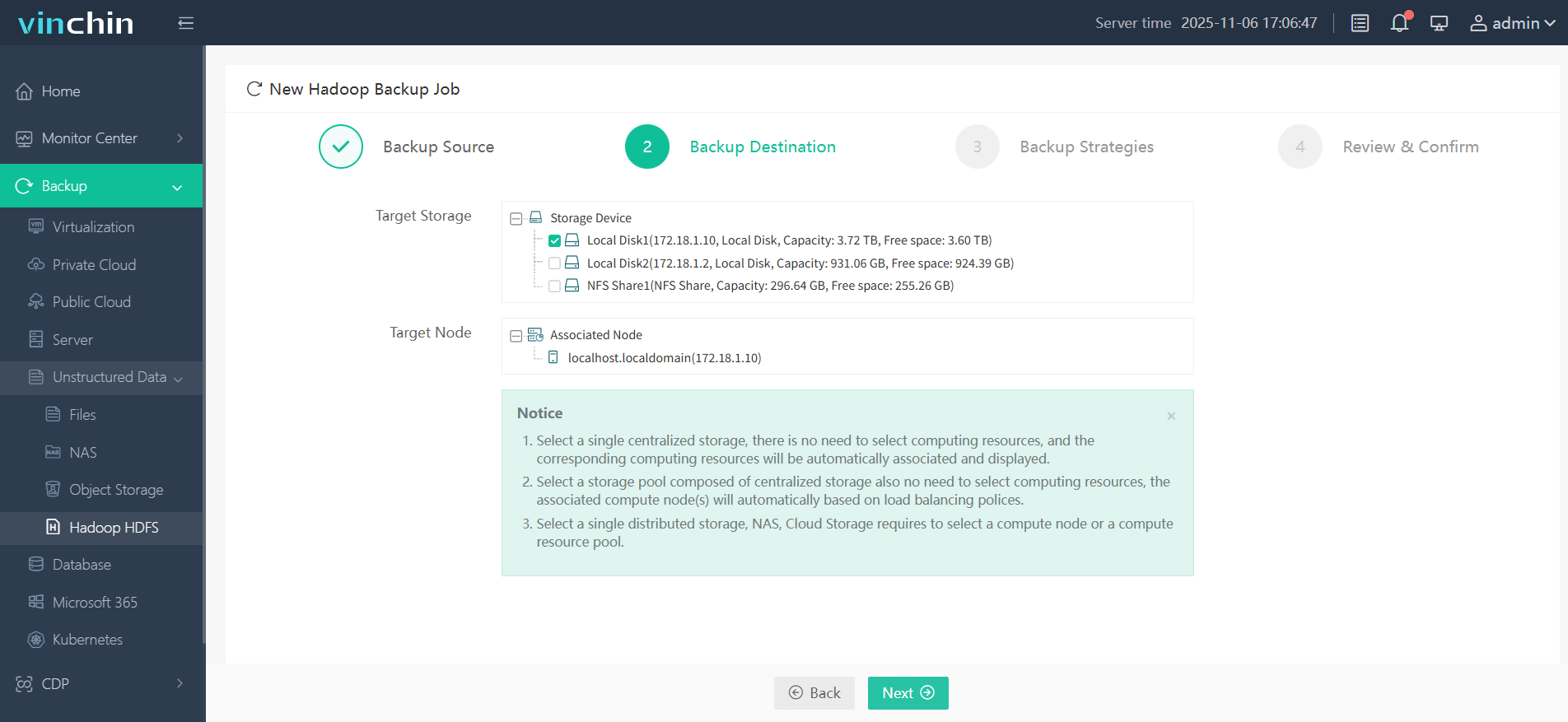

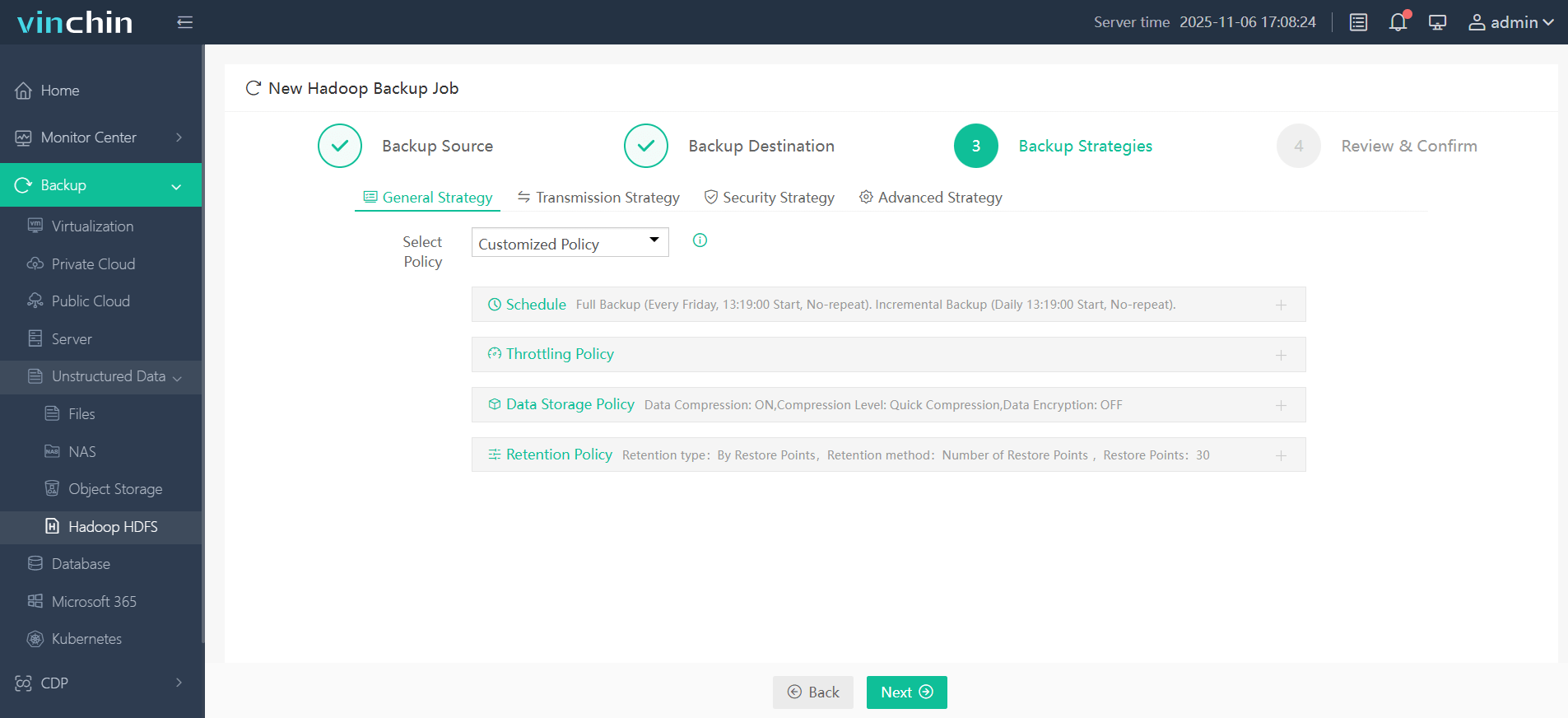

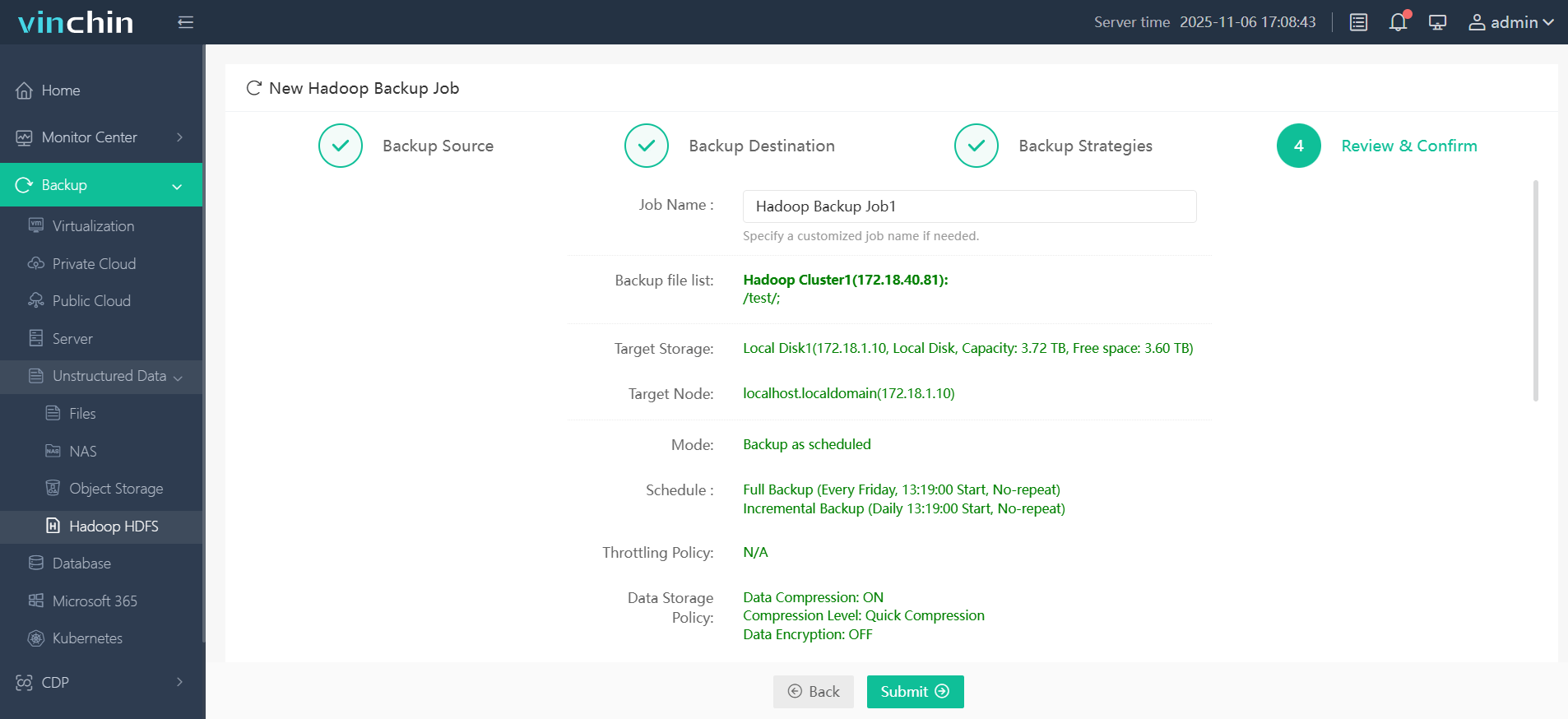

Vinchin Backup & Recovery offers an intuitive web console designed for simplicity. To back up your Hadoop HDFS files:

Step 1. Select the Hadoop HDFS files you wish to back up

Step 2. Choose your desired backup destination

Step 3. Define backup strategies tailored for your needs

Step 4. Submit the job

Join thousands of global enterprises who trust Vinchin Backup & Recovery, renowned worldwide with top ratings, for reliable data protection. Try all features free with a 60-day trial.

Big Data And Hadoop: FAQs

Q1: How do I improve MapReduce job performance?

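A1: Tune the HDFS block size (which determines the default input split size) and the container memory that YARN allocates to map and reduce tasks, increase parallelism where possible, and monitor slow tasks through the JobHistory Server UI or your dashboard tools.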

Q2: What steps should I take if NameNode enters Safe Mode unexpectedly?

A2: Check disk space on the NameNode host, review recent NameNode logs for errors, and restart the NameNode service if needed. Then run the fsck utility to check file system health, and exit Safe Mode manually only if it does not clear by itself.
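The last two steps can be scripted. The sketch below wraps the `hdfs dfsadmin -safemode get`, `hdfs fsck /`, and `hdfs dfsadmin -safemode leave` commands and leaves Safe Mode only when fsck reports a healthy file system. The helper names are hypothetical. The matched output strings ("Safe mode is ON", "is HEALTHY") reflect typical output and are an assumption, so verify them against your Hadoop version before relying on this.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], str]


def run_command(cmd: List[str]) -> str:
    """Run a command and return its stdout (needs the hdfs CLI on PATH)."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return result.stdout


def handle_safe_mode(run: Runner = run_command) -> str:
    """Leave Safe Mode only if fsck reports a healthy file system."""
    status = run(["hdfs", "dfsadmin", "-safemode", "get"])
    if "Safe mode is ON" not in status:  # assumed output text
        return "not-in-safe-mode"

    fsck_output = run(["hdfs", "fsck", "/"])
    if "is HEALTHY" not in fsck_output:  # assumed output text
        # Missing or corrupt blocks: forcing an exit could hide data loss.
        return "needs-investigation"

    run(["hdfs", "dfsadmin", "-safemode", "leave"])
    return "left-safe-mode"
```

The `run` parameter is injectable so the logic can be exercised with a fake runner before it touches a live cluster. Refusing to leave Safe Mode when fsck is unhappy is a deliberately conservative choice.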

Q3: Can I automate regular health checks across my entire Hadoop cluster?

A3: Yes. Schedule scripts that run hdfs dfsadmin commands (for example, hdfs dfsadmin -report) and feed the results into the alerting tools of your existing monitoring dashboards.
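As a rough illustration of such a script, the sketch below parses "Key: value" lines of the kind printed by `hdfs dfsadmin -report` and flags a few conditions worth alerting on. The sample text is hand-written and abbreviated, not captured from a real cluster. Field names can differ between Hadoop versions, and the function names and the 80% threshold are arbitrary assumptions.

```python
from typing import Dict, List

# Hand-written, abbreviated sample; real report output is longer and
# its exact field names may vary by Hadoop version.
SAMPLE_REPORT = """\
DFS Used%: 85.50%
Under replicated blocks: 4
Missing blocks: 0
"""


def parse_report(report: str) -> Dict[str, str]:
    """Collect the first occurrence of each 'Key: value' line."""
    fields: Dict[str, str] = {}
    for line in report.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key and key not in fields:
            fields[key] = value.strip()
    return fields


def find_alerts(fields: Dict[str, str], max_used_pct: float = 80.0) -> List[str]:
    """Return human-readable alerts for block problems and high disk usage."""
    alerts: List[str] = []
    for key in ("Missing blocks", "Under replicated blocks"):
        value = fields.get(key, "0")
        if value.isdigit() and int(value) > 0:
            alerts.append(f"{key}: {value}")
    try:
        used_pct = float(fields.get("DFS Used%", "").rstrip("%"))
    except ValueError:
        used_pct = 0.0  # field absent or unparseable
    if used_pct > max_used_pct:
        alerts.append(f"DFS Used% is {used_pct}, above {max_used_pct}")
    return alerts


if __name__ == "__main__":
    for alert in find_alerts(parse_report(SAMPLE_REPORT)):
        print(alert)
```

In practice you would feed the function the real command output on a schedule (cron, for example) and forward any alerts to the monitoring system you already use.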

Conclusion

Big data solutions powered by Hadoop continue to transform enterprise IT. As demands rise, scalable and reliable frameworks become the mission-critical foundation beneath digital initiatives. With Vinchin, protecting those workloads is easier and safer, today and for the challenges of tomorrow.
