- What is MML in Backup?
- What Does Write Backup Piece Mean?
- Why Write Backup Piece Errors Occur?
- How to Troubleshoot Write Backup Piece Issues?
- Diagnosing Network and Hardware Bottlenecks
- How to Prevent Write Backup Piece Failures?
- Tuning RMAN Configuration for MML Performance
- Simplifying Oracle Data Protection with Vinchin Backup & Recovery
- Backup MML Write Backup Piece FAQs
- Conclusion

For Oracle DBAs managing backups to tape or cloud storage, the wait event Backup: MML write backup piece can cause confusion—and sometimes frustration. This event often appears during slow or stuck backups. What does it mean? Why does it happen? In this article, we’ll explain what’s going on behind the scenes when you see this message. We’ll walk through how to diagnose problems step by step—from basic checks to advanced troubleshooting—and share proven ways to prevent issues in your backup environment. Finally, you’ll learn how Vinchin can help simplify Oracle data protection.

What is MML in Backup?

The Media Management Layer (MML) acts as a bridge between Oracle Recovery Manager (RMAN) and external storage devices. When you use RMAN to back up your database, it can write directly to disk—or hand off data to third-party media management software through MML. This lets you store backups on tape libraries or cloud storage instead of just local disks.

MML handles tasks like writing data blocks, labeling backup pieces, tracking their location on physical media, and reporting status back to RMAN. By separating these duties from core database operations, Oracle ensures that backups can scale across many types of enterprise storage systems.

What Does Write Backup Piece Mean?

When you see Backup: MML write backup piece as a wait event in Oracle monitoring tools or logs, it means RMAN has handed over a chunk of backup data—a “backup piece”—to the Media Management Layer for writing onto external storage.

This process is normal whenever your backup destination uses tape drives or remote/cloud targets managed by third-party software. The event signals that Oracle itself is waiting while the external device writes data out. Usually this happens quickly—but if it takes too long or stalls entirely, something may be wrong with your storage path.

Why does this matter? Because long waits here slow down your entire backup job and may even lead to failures if not resolved promptly.

Why Write Backup Piece Errors Occur?

Most problems related to write backup piece are not caused by bugs in Oracle itself—they’re usually symptoms of trouble elsewhere in your infrastructure.

Common causes include:

Tape drives that are busy handling other jobs or have reached their performance limits

Storage devices (like NAS appliances or cloud gateways) suffering from high latency or downtime

Network congestion between your database server and remote storage targets

Incorrectly configured channels or parameters within RMAN/MML settings

Hardware failures such as faulty cables, failing disks/tapes, or exhausted system resources on either end

When any part of this chain slows down, whether due to hardware overload or misconfiguration, the Media Management Layer cannot keep up with incoming data from RMAN sessions. As a result, those sessions wait until the media manager can accept more data.

Sometimes these waits resolve themselves after a few seconds; other times they persist until manual intervention is required.

How to Troubleshoot Write Backup Piece Issues?

Troubleshooting starts with pinpointing where delays are happening—inside Oracle itself or somewhere along the path out to external storage.

Beginner admins should start by checking current wait events inside Oracle:

Run this SQL command:

SELECT SID, EVENT, SECONDS_IN_WAIT, STATE FROM V$SESSION_WAIT WHERE EVENT LIKE '%MML%';

If you see active sessions waiting on Backup: MML write backup piece, note how long they’ve been stuck there (SECONDS_IN_WAIT). Long waits suggest an issue outside core database processing—usually at the device level.
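To make "long waits" concrete, here is a minimal Python sketch that filters rows shaped like the query result above. The SessionWait type, the find_stuck_mml_sessions helper, the 300-second threshold, and the sample rows are all assumptions for illustration; pick a threshold that fits your own backup windows.

```python
from typing import NamedTuple


class SessionWait(NamedTuple):
    """One row of the V$SESSION_WAIT query above (hypothetical container)."""
    sid: int
    event: str
    seconds_in_wait: int
    state: str


def find_stuck_mml_sessions(rows, threshold_seconds=300):
    """Return sessions still waiting on an MML event for at least the threshold.

    The 300-second default is an assumed starting point, not an Oracle value.
    """
    return [
        r for r in rows
        if "MML" in r.event
        and r.state == "WAITING"
        and r.seconds_in_wait >= threshold_seconds
    ]


# Hypothetical sample rows, not real output.
sample = [
    SessionWait(101, "Backup: MML write backup piece", 1250, "WAITING"),
    SessionWait(102, "Backup: MML write backup piece", 4, "WAITING"),
    SessionWait(103, "Backup: MML write backup piece", 900, "WAITED SHORT TIME"),
]
stuck = find_stuck_mml_sessions(sample)
print([s.sid for s in stuck])
```

Only sessions whose STATE is still WAITING are kept, because SECONDS_IN_WAIT on a session that has already finished waiting does not describe a current stall.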

Next steps depend on what kind of device backs your backups:

For tape libraries:

Check if all drives are online using vendor tools.

Look for queued jobs that might be blocking access.

Review logs for hardware errors like failed mounts or full tapes.

For network/cloud-based storage:

Test connectivity using standard OS commands (ping, traceroute).

Measure bandwidth usage during active backups; look for spikes that indicate congestion.

Check system logs for dropped packets or timeouts affecting NFS/CIFS mounts.

Intermediate users should also review both RMAN output logs and media manager logs side-by-side:

Search for error messages about failed writes (“I/O error”, “timeout”, etc.).

Confirm whether errors appear only during certain times (peak hours?)—this hints at resource contention rather than outright failure.
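One way to check whether errors cluster at certain times is to count them per hour. The sketch below assumes log lines that start with a "YYYY-MM-DD HH:MM:SS" timestamp; that layout, the errors_by_hour helper, and the sample lines are hypothetical, so adapt the pattern to what your media manager actually writes.

```python
import re
from collections import Counter

# Assumed line shape: "YYYY-MM-DD HH:MM:SS message". This is an illustration,
# not the format of any particular media manager.
LINE_RE = re.compile(r"^\d{4}-\d{2}-\d{2} (\d{2}):\d{2}:\d{2} (.*)$")
ERROR_MARKERS = ("i/o error", "timeout")


def errors_by_hour(lines):
    """Count lines containing an error marker, grouped by hour of day."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip lines that do not follow the assumed layout
        hour, message = int(match.group(1)), match.group(2).lower()
        if any(marker in message for marker in ERROR_MARKERS):
            counts[hour] += 1
    return counts


# Hypothetical sample lines, not real log output.
sample_log = [
    "2024-05-01 02:14:09 write failed: I/O error on drive 2",
    "2024-05-01 02:47:31 write timeout after 600s",
    "2024-05-01 03:05:00 backup piece written",
    "2024-05-01 14:20:11 write timeout",
]
hourly = errors_by_hour(sample_log)
print(hourly.most_common())
```

If most errors fall into the same one or two hours, resource contention during that window is a more likely explanation than a failed device.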

If you suspect configuration issues:

Double-check channel definitions in your RMAN scripts (ALLOCATE CHANNEL statements).

Make sure parallelism settings match what your hardware can handle without overloading any single component.

Verify all relevant parameters passed into the media management layer are correct per vendor documentation.

Advanced users may need to kill stuck processes if jobs refuse to complete:

1. Identify session/process IDs using:

SELECT s.SID, p.SPID, s.PROGRAM FROM V$PROCESS p JOIN V$SESSION s ON p.ADDR = s.PADDR WHERE s.PROGRAM LIKE '%rman%';

2. Use operating system commands (such as kill on Linux) targeting those SPIDs—but always check first whether terminating will disrupt other critical tasks running under that process ID!

Remember: Always consult official documentation before force-killing jobs managed by third-party software; improper termination could leave orphaned files behind needing cleanup later.

Diagnosing Network and Hardware Bottlenecks

Many persistent write backup piece waits trace back not just to software but also physical infrastructure bottlenecks—especially when backing up large databases over shared networks or aging tape systems.

Start diagnosis at the operating system level:

On Linux/Unix servers:

Use iostat during active backups; watch for high %iowait values indicating disk bottlenecks.

Run sar reports hourly/daily; spot trends where throughput drops below expected norms during scheduled jobs.

For NFS-mounted shares used as targets: run nfsstat before/during/after backups; rising retransmits signal network instability affecting file writes.
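To turn "rising retransmits" into a number, you can compare two snapshots of the client RPC counters (the calls and retrans figures that nfsstat reports) taken before and after a backup. The retransmit_rate helper and the sample counter values below are hypothetical; this is a sketch of the arithmetic, not a parser for nfsstat output.

```python
def retransmit_rate(before, after):
    """Fraction of RPC calls retransmitted between two counter snapshots.

    Each snapshot is a (calls, retrans) tuple copied by hand from the
    client RPC statistics. Returns 0.0 if no calls were made in between.
    """
    calls = after[0] - before[0]
    retrans = after[1] - before[1]
    if calls <= 0:
        return 0.0
    return retrans / calls


# Hypothetical counters: 20,000 calls during the backup, 200 retransmitted.
rate = retransmit_rate(before=(1_000, 5), after=(21_000, 205))
print(f"{rate:.2%} of RPC calls were retransmitted")
```

Comparing this figure for a backup window against a quiet period shows whether the backup traffic itself is what destabilizes the mount.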

Check network health directly:

Use continuous ping tests (ping <storage_ip>) while running test writes; packet loss above zero suggests unstable links.

Try traceroute/mtr tools between DB server and target device; increased hop latency points toward overloaded routers/switches en route.
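If you capture ping output to a file during test writes, a small helper can pull out the loss figure for scripting. The sketch assumes the summary line contains the text "N% packet loss", as common Linux ping builds print it; other platforms word the summary differently. The packet_loss_percent name and the sample summary are hypothetical.

```python
import re

# Assumes a summary containing "<number>% packet loss"; wording varies by OS.
LOSS_RE = re.compile(r"(\d+(?:\.\d+)?)% packet loss")


def packet_loss_percent(ping_output):
    """Return the packet loss percentage from ping output, or None if absent."""
    match = LOSS_RE.search(ping_output)
    return float(match.group(1)) if match else None


# Hypothetical summary line for illustration.
summary = "20 packets transmitted, 19 received, 5% packet loss, time 19028ms"
loss = packet_loss_percent(summary)
if loss is not None and loss > 0:
    print(f"Unstable link suspected: {loss}% packet loss")
```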

Tape library admins should log into their management consoles regularly:

Inspect drive status dashboards for signs of wear/failure (“cleaning required”, “media error”).

Review throughput statistics vs manufacturer specs—if actual MB/s rates fall well below rated speeds even after cleaning/maintenance cycles, consider replacing aging drives/media sooner rather than later!
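The comparison against manufacturer specs is simple arithmetic. Here is a minimal sketch, assuming you know how many bytes a drive wrote and how long it took; the drive_efficiency helper, the 300 MB/s rated speed, and the 60% review threshold are illustrative assumptions, not vendor figures.

```python
def drive_efficiency(bytes_written, elapsed_seconds, rated_mb_per_s):
    """Return (observed MB/s, fraction of rated speed) for one backup run.

    Uses decimal megabytes (1 MB = 1,000,000 bytes), the unit drive
    data sheets usually quote.
    """
    if elapsed_seconds <= 0 or rated_mb_per_s <= 0:
        raise ValueError("elapsed_seconds and rated_mb_per_s must be positive")
    observed = bytes_written / elapsed_seconds / 1_000_000
    return observed, observed / rated_mb_per_s


# Hypothetical run: 540 GB written in one hour on a drive rated at 300 MB/s.
observed, ratio = drive_efficiency(540_000_000_000, 3600, rated_mb_per_s=300)
print(f"{observed:.0f} MB/s, {ratio:.0%} of rated speed")
if ratio < 0.6:  # assumed review threshold
    print("Throughput well below rated speed: inspect drive and media")
```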

Correlate findings across layers: If both OS-level metrics AND high Backup: MML write backup piece waits spike together, you’ve likely found your culprit.
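"Spike together" can be checked with a plain correlation coefficient over samples taken at the same moments. The sketch below computes Pearson's r by hand; the sample values are hypothetical, and a high r only suggests a relationship worth investigating, it does not prove the cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sample lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 samples")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no correlation information
    return cov / sqrt(var_x * var_y)


# Hypothetical samples taken at the same five points in time.
iowait_percent = [2, 3, 25, 30, 4]    # OS-level %iowait
mml_wait_seconds = [1, 2, 40, 55, 3]  # time spent in the MML write wait
r = pearson(iowait_percent, mml_wait_seconds)
print(f"correlation: {r:.2f}")
```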

How to Prevent Write Backup Piece Failures?

Prevention means building resilience into every link between source database and final destination media—and monitoring proactively so small hiccups don’t become big outages later!

Run regular health checks: Ensure all tape drives and storage appliances follow vendor maintenance schedules, including cleaning cycles, firmware updates, and periodic replacement of worn-out parts, cables, disks, and tapes. Deferred maintenance creates hidden risks.

Tune channel configurations thoughtfully:

Too many parallel channels overwhelm slow devices; too few waste available bandwidth. Adjust PARALLELISM upward gradually while watching real-time performance metrics via V$SESSION_WAIT until diminishing returns set in, then dial back slightly for stability.

Set sensible limits using MAXPIECESIZE so individual backup pieces never exceed what downstream devices handle efficiently. This is especially important when archiving large databases onto older tapes or cloud endpoints prone to timeouts mid-transfer.

Monitor everything continuously, not just after failures:

Query views like V$SESSION, V$SESSION_WAIT, and V$RMAN_STATUS daily, weekly, or monthly depending on environment size and risk profile, and set up alerts via scripts or tools whenever abnormal spikes occur around key events like “MML write”.

Keep all supporting software and drivers patched and up to date; outdated versions introduce subtle incompatibilities and performance regressions that are hard to diagnose otherwise.
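For the alerting idea above, "abnormal" needs a baseline. One simple approach, sketched here under the assumption that you record the total MML write wait time of each backup run, is to flag a run that exceeds the historical mean by a few standard deviations. The is_abnormal helper, the factor of 3, and the sample history are hypothetical choices.

```python
from statistics import mean, pstdev


def is_abnormal(history, current, k=3.0):
    """True if current exceeds the historical mean by more than k std deviations.

    history holds wait totals from earlier runs; with fewer than two
    samples there is no usable baseline, so nothing is flagged.
    """
    if len(history) < 2:
        return False
    return current > mean(history) + k * pstdev(history)


# Hypothetical wait totals (seconds) from five earlier backup runs.
history = [12, 15, 11, 14, 13]
print(is_abnormal(history, 95))  # far above the baseline
print(is_abnormal(history, 14))  # within the normal range
```

A baseline relative to your own history adapts to each environment, whereas a fixed threshold would need retuning for every database size.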

Tuning RMAN Configuration for MML Performance

Fine-tuning RMAN settings makes a big difference when working with complex media management setups—especially at scale!

Start simple: List the current device and channel configuration inside the RMAN shell using:

RMAN> SHOW DEVICE TYPE;

RMAN> SHOW CHANNEL;

This confirms which device types, including SBT (System Backup Tape), are configured, with what parallelism and which channel parameters, and helps spot misconfigurations early before they cause production headaches.

Adjust parallelism based on observed results, not guesswork. Begin with one channel per physical drive or network endpoint, then increase incrementally only as long as no new bottlenecks appear in OS/network stats and “MML write” waits do not rise.
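The incremental approach can be written down as a small rule: keep adding channels while each step still improves throughput by a meaningful margin. The sketch below assumes you have measured throughput for several channel counts yourself; the pick_channel_count helper, the 10% minimum gain, and the measurements are hypothetical.

```python
def pick_channel_count(throughput_by_channels, min_gain=0.10):
    """Return the largest channel count whose step up still gained min_gain.

    throughput_by_channels maps a channel count to the MB/s you measured
    with that many channels. Stops at the first step that gains too little.
    """
    if not throughput_by_channels:
        raise ValueError("no measurements supplied")
    counts = sorted(throughput_by_channels)
    best = counts[0]
    for prev, cur in zip(counts, counts[1:]):
        before, after = throughput_by_channels[prev], throughput_by_channels[cur]
        if (after - before) / before < min_gain:
            break  # diminishing returns: stay at the previous count
        best = cur
    return best


# Hypothetical measurements: throughput flattens after three channels.
measured = {1: 80, 2: 150, 3: 200, 4: 205, 5: 190}
print(pick_channel_count(measured))
```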

Use explicit rate limiting where needed via:

ALLOCATE CHANNEL c1 DEVICE TYPE sbt PARMS 'SBT_LIBRARY=...' RATE=50M;

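RATE caps how many bytes per second RMAN reads on that channel, which in turn throttles transfer speed so slower endpoints aren’t overwhelmed by bursts from fast CPUs/disks upstream. Note that ALLOCATE CHANNEL is issued inside a RUN block.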
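Before setting a rate limit, it helps to check what it does to the backup window. The sketch below estimates the minimum duration for a given per-channel limit; the min_backup_hours helper and the 2 TB database size are assumptions, and real runs will take longer because of compression, mounts, and other overhead.

```python
def min_backup_hours(total_gb, rate_mb_per_s, channels=1):
    """Lower bound on backup duration when every channel runs at its rate cap.

    Uses binary units throughout (1 GB = 1024 MB), so the estimate is only
    as precise as the size figure you feed in.
    """
    if rate_mb_per_s <= 0 or channels <= 0:
        raise ValueError("rate and channel count must be positive")
    total_mb = total_gb * 1024
    return total_mb / (rate_mb_per_s * channels) / 3600


# Hypothetical 2 TB database with the 50M limit from the example above.
print(f"1 channel:  {min_backup_hours(2048, 50):.1f} h")
print(f"4 channels: {min_backup_hours(2048, 50, channels=4):.1f} h")
```

If the estimate already exceeds your backup window, the limit is too low for that channel count, however gentle it is on the storage endpoint.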

For very large datafiles, consider splitting them into sections with the SECTION SIZE parameter, so multiple channels can back up the same file concurrently without exceeding per-file or per-device limits.

Example syntax:

BACKUP AS BACKUPSET DATABASE SECTION SIZE = 10G;
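To see how a section size divides a file, the sketch below lists the resulting section sizes for a given datafile. The section_sizes helper and the 95 GB file are hypothetical, and the calculation ignores any adjustments RMAN itself may make to the section size.

```python
import math


def section_sizes(datafile_gb, section_size_gb):
    """Split a datafile into sections; only the last one may be smaller."""
    if datafile_gb <= 0 or section_size_gb <= 0:
        raise ValueError("sizes must be positive")
    count = math.ceil(datafile_gb / section_size_gb)
    last = datafile_gb - section_size_gb * (count - 1)
    return [section_size_gb] * (count - 1) + [last]


# Hypothetical 95 GB datafile with the 10G section size from the example above.
sections = section_sizes(95, 10)
print(len(sections), "sections, last one", sections[-1], "GB")
```

Section sizes matter for parallelism: with fewer sections than channels, some channels sit idle for that file.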

Always validate changes against real-world workloads—not just lab tests—to ensure reliability holds under peak conditions!

Simplifying Oracle Data Protection with Vinchin Backup & Recovery

To further enhance reliability and efficiency when protecting Oracle environments against "Backup: MML write backup piece" issues, organizations can use Vinchin Backup & Recovery, a professional enterprise-level solution supporting today’s mainstream databases including Oracle (as well as MySQL, SQL Server, MariaDB, PostgreSQL, PostgresPro, and TiDB). For Oracle users concerned about robust protection during complex operations like incremental backups and batch database jobs involving external media managers (MML), Vinchin Backup & Recovery offers incremental backup, batch database backup, flexible retention policies including GFS retention, and integrity checks that verify the recoverability of each job through SQL script verification tests before restore operations. These features are designed to provide operational assurance while controlling costs and meeting compliance needs across hybrid infrastructures.

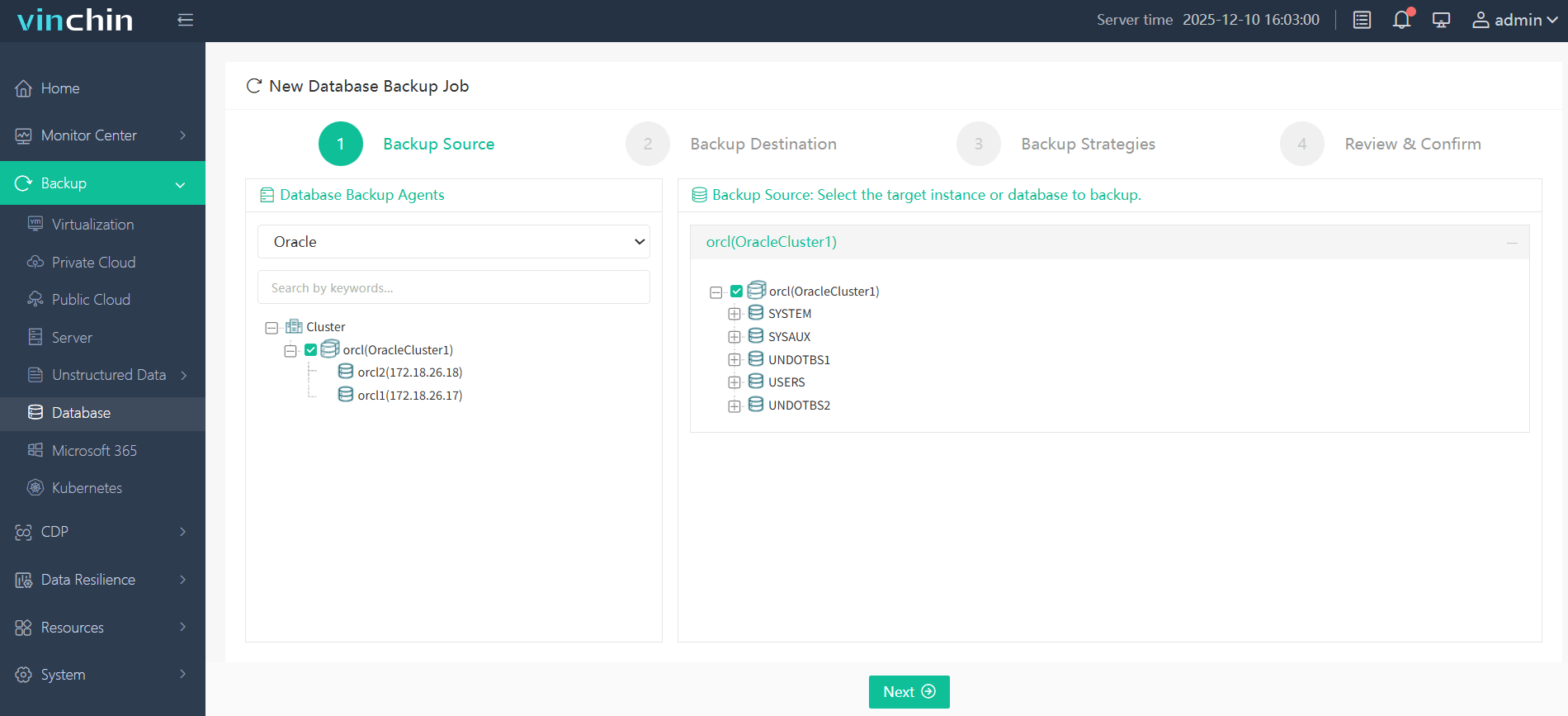

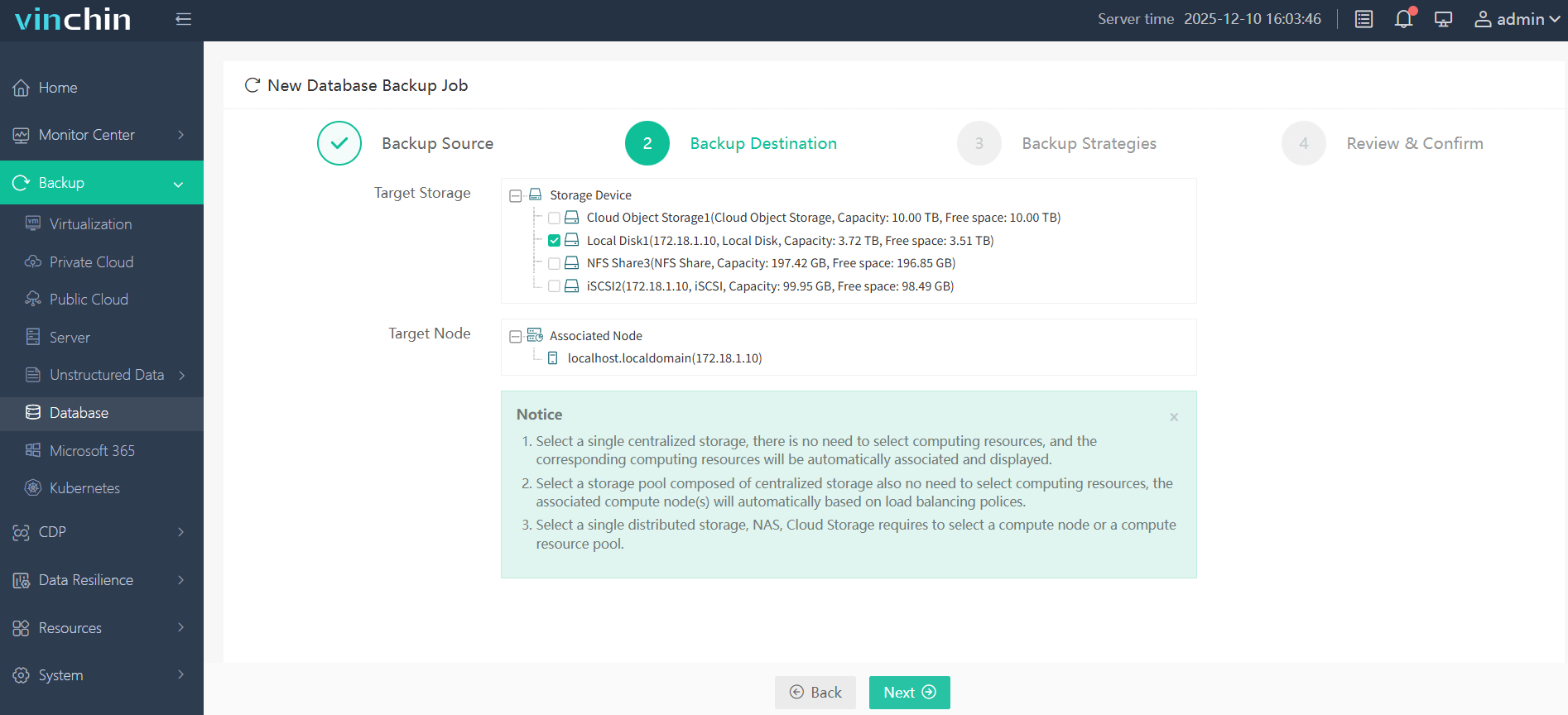

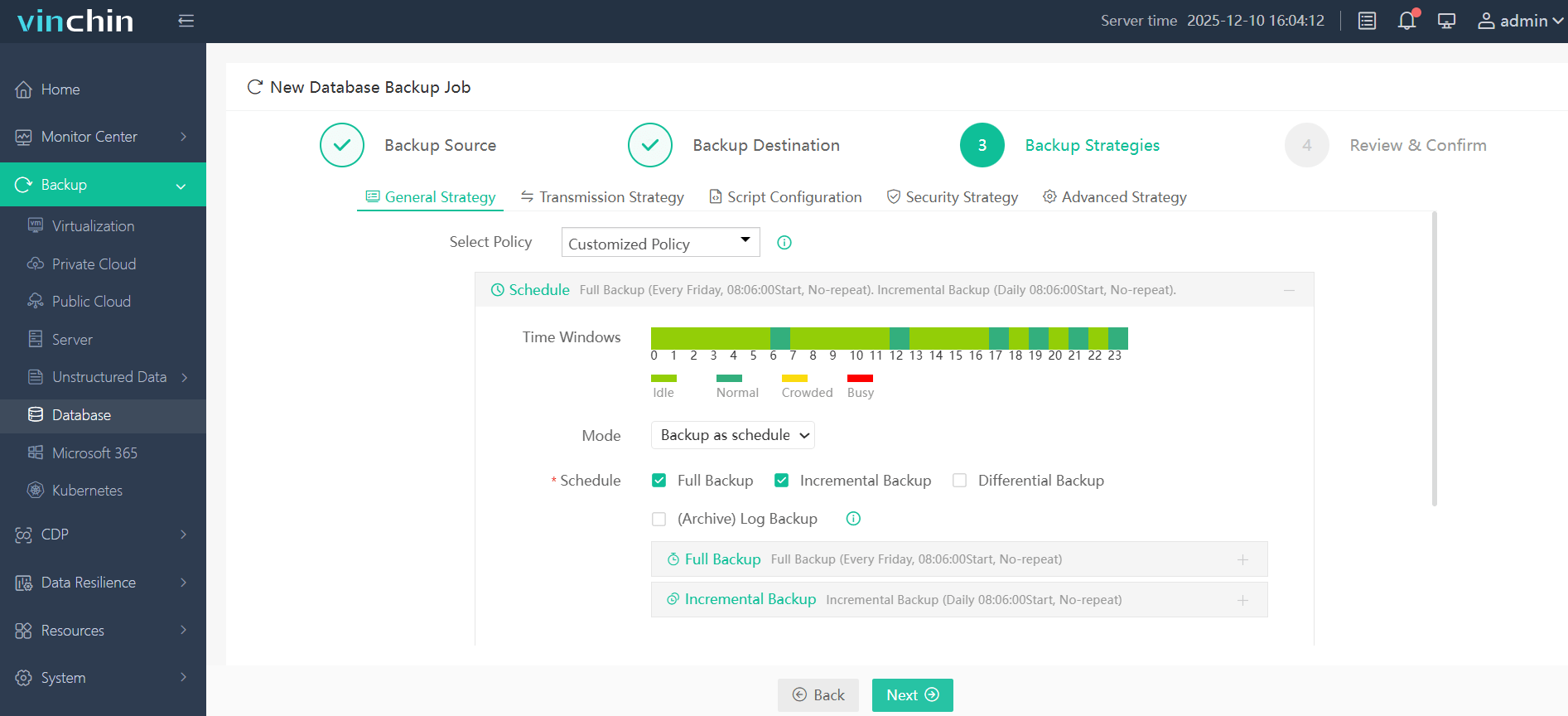

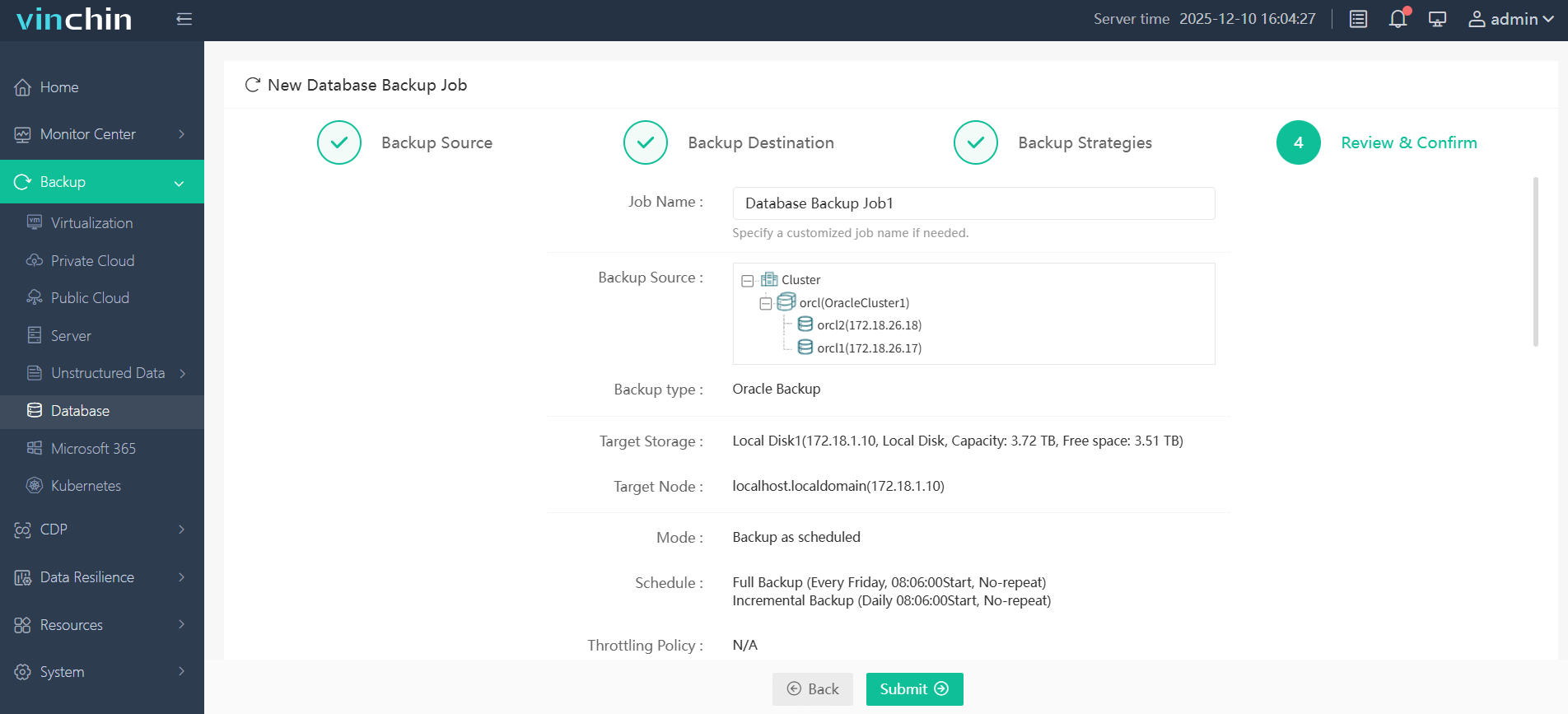

The intuitive web console streamlines every operation—in four straightforward steps tailored for Oracle environments:

Step 1. Select the Oracle database to back up

Step 2. Choose the backup storage

Step 3. Define the backup strategy

Step 4. Submit the job

Vinchin Backup & Recovery is recognized globally with top ratings among enterprise customers seeking reliable data protection, and thousands already rely on it. You can try it risk-free with a full-featured 60-day free trial.

Backup MML Write Backup Piece FAQs

Q1: How do I know if my issue relates specifically to "Backup: MML write" versus another type of I/O wait?

Check session waits in V$SESSION_WAIT. An "MML write" event indicates delays writing out via the media manager, while datafile I/O waits point toward reading source files instead.

Q2: Can I safely restart an interrupted job stuck on "write backup piece" without risking corruption?

Yes—as long as incomplete pieces are cleaned up first per vendor guidelines before rerunning affected jobs from scratch.

Q3: What quick checks help rule out basic network problems causing slow writes?

Test connectivity using ping <storage_ip>, then monitor iostat/nfsstat output during sample transfers.

Conclusion

Understanding why "Backup: MML write backup piece" occurs helps administrators troubleshoot slowdowns quickly, from checking device health through tuning advanced parameters inside RMAN itself. With proactive monitoring plus robust solutions like Vinchin, keeping mission-critical backups safe becomes much easier.
