- What Is S3 Command Line?
- How to Install AWS CLI?
- How to Upload Files Using S3 Command Line?
- How to Download Files Using S3 Command Line?
- How to List Objects in S3 Using Command Line?
- Protecting Your Amazon S3 Data with Vinchin Backup & Recovery
- Frequently Asked Questions About The S3 Command Line
- Conclusion

Managing data in Amazon S3 is a core task for IT operations teams everywhere. The S3 command line gives you direct control over your cloud storage without relying on web interfaces or manual clicks. Why does this matter? Because using the command line saves time, reduces mistakes from repetitive tasks, and opens doors to automation through scripts or scheduled jobs.

If you’re responsible for backups or large-scale file transfers—or just want to work smarter—mastering these commands is essential. In this guide, we start with basics and build up to advanced techniques so you can handle any S3 job with confidence.

What Is S3 Command Line?

The s3 command line means using text-based tools to interact with Amazon Simple Storage Service (S3). The most popular tool is the AWS Command Line Interface (AWS CLI), which lets you manage buckets and objects straight from your terminal or command prompt.

With the AWS CLI’s s3 commands, you can upload files in bulk, download entire directories at once, list bucket contents quickly, and automate workflows that would take hours to repeat by hand.

There are other tools like s3cmd or rclone, but AWS CLI is official and widely supported. It works well across Windows, Linux, and macOS systems.

Why choose the command line? It’s fast—especially when dealing with hundreds of files—and perfect for scripting routine jobs like nightly backups or data migrations between regions.

How to Install AWS CLI?

Before running S3 commands, you need to install AWS CLI on your system—a quick process that works across all major platforms.

On Windows:

Go to the official AWS site and download the installer package named “AWSCLIV2.msi.” Double-click it and follow the prompts until finished.

On Linux:

You have two main choices: direct download or using a package manager like apt. For direct download:

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install

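Or, if you prefer a package manager, use the commands below. Be aware that distribution packages such as Ubuntu's awscli often provide the older AWS CLI version 1, and the package may be missing on newer releases.

sudo apt update
sudo apt install awscli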

On macOS:

You can use either direct download:

curl "https://awscli.amazonaws.com/AWSCLIV2.pkg" -o "AWSCLIV2.pkg"
sudo installer -pkg AWSCLIV2.pkg -target /

Or Homebrew if installed:

brew install awscli

After installing on any platform, run aws --version in your terminal to confirm that the installation succeeded.
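If you install the CLI on many machines from a script, it can help to confirm two things: that aws is on the PATH, and that it is version 2, since some distribution packages still ship version 1. The POSIX shell sketch below assumes the usual aws --version output format (for example aws-cli/2.15.30 Python/3.11.8 ...). The function names are hypothetical helpers, not part of the AWS CLI.

```sh
#!/bin/sh
# Sketch: verify that the AWS CLI is installed and is major version 2.
# Assumes "aws --version" prints something like "aws-cli/2.15.30 Python/3.11.8 ...".
set -eu

# Print the major version number from an "aws --version" string (empty if unrecognized).
aws_major_version() {
  printf '%s\n' "$1" | sed -n 's|^aws-cli/\([0-9][0-9]*\)\..*|\1|p'
}

# Check that aws is on PATH and warn if it is not version 2.
check_aws_cli() {
  if ! command -v aws >/dev/null 2>&1; then
    echo "aws not found on PATH" >&2
    return 1
  fi
  # Some older v1 builds print the version to stderr, so capture both streams.
  version_string="$(aws --version 2>&1)"
  major="$(aws_major_version "$version_string")"
  if [ "$major" != "2" ]; then
    echo "warning: expected AWS CLI v2, found: $version_string" >&2
  fi
  printf '%s\n' "$version_string"
}
```

Call check_aws_cli right after installation. It returns a non-zero status only when aws cannot be found.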

The next step is configuring credentials so AWS knows who you are:

Run aws configure

Enter your Access Key ID

Enter your Secret Access Key

Set the default region name (for example, us-east-1)

Choose the output format (json is common)
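The same settings can be applied without the interactive prompts, which is handy when provisioning servers from a script. This sketch uses the real aws configure set and aws sts get-caller-identity commands. The profile name backup and the run/DRY_RUN wrapper are illustrative assumptions. The access keys are left to the interactive aws configure --profile prompt, or to the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables, so they never appear in the script.

```sh
#!/bin/sh
# Sketch: set a profile's region and output format non-interactively,
# then confirm which AWS identity the CLI will use.
set -eu

# Run a command, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

configure_defaults() {
  profile="$1"
  region="$2"
  output="${3:-json}"
  run aws configure set region "$region" --profile "$profile"
  run aws configure set output "$output" --profile "$profile"
  # Prints the account ID and ARN behind the profile's credentials.
  run aws sts get-caller-identity --profile "$profile"
}
```

For example, DRY_RUN=1 configure_defaults backup us-east-1 prints the three commands instead of running them.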

Now your computer is ready for s3 command line work!

How to Upload Files Using S3 Command Line?

Uploading data into S3 buckets is one of the most common tasks—and where many admins start their journey with AWS CLI.

Uploading Single Files vs Folders

To upload a single file from your local machine into an S3 bucket:

aws s3 cp myfile.txt s3://my-bucket/

This puts “myfile.txt” at the root of “my-bucket.”

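Want it inside a folder? Add the folder path (S3 calls it a prefix) after the bucket name and end it with a slash:

aws s3 cp myfile.txt s3://my-bucket/reports/

The trailing slash tells the CLI to keep the original file name, so the object is stored as reports/myfile.txt. Without the slash, the file would be saved as an object named reports.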

For uploading an entire directory—including all its files/subfolders—use the --recursive flag:

aws s3 cp /local/folder s3://my-bucket/folder --recursive
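Because these are ordinary shell commands, a recursive upload drops easily into a backup script. The sketch below copies a directory into a date-stamped prefix. The backups/HOST/DATE/ layout, the function names and the DRY_RUN switch are hypothetical choices, not AWS conventions.

```sh
#!/bin/sh
# Sketch: upload a local directory to a date-stamped S3 prefix.
set -eu

# Print instead of executing when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Build s3://BUCKET/backups/HOST/DATE/ (the trailing slash marks a prefix).
backup_uri() {
  printf 's3://%s/backups/%s/%s/\n' "$1" "$2" "$3"
}

upload_backup() {
  src="$1"
  bucket="$2"
  dest="$(backup_uri "$bucket" "$(uname -n)" "$(date +%Y-%m-%d)")"
  run aws s3 cp "$src" "$dest" --recursive
}
```

Running upload_backup /srv/www my-bucket once a night (from cron, for instance) keeps each day's copy under its own prefix.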

Filtering Which Files Get Uploaded

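Sometimes you only want certain types of files moved, for example only images ending in .jpg. Use --exclude and --include together. Filters are applied in the order given and later ones take precedence, so exclude everything first and then include the pattern you want: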

aws s3 cp /local/folder s3://my-bucket/photos --recursive --exclude "*" --include "*.jpg"
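Why does the order matter? The AWS CLI documentation states that all files are included by default and that filters appearing later in the command take precedence over earlier ones. The POSIX shell function below is a simplified model of that rule, written only to illustrate precedence. It relies on the shell's own glob matching, which supports the same *, ?, and [...] wildcards. It is not how the CLI is implemented, and it ignores details such as how the CLI anchors patterns to the source path.

```sh
#!/bin/sh
# Simplified model of --exclude/--include precedence (illustration only):
# every file starts out included, filters are checked left to right,
# and the last filter whose pattern matches decides.
set -eu

filter_decision() {
  path="$1"
  shift
  decision="include"
  while [ "$#" -ge 2 ]; do
    flag="$1"
    pattern="$2"
    shift 2
    # Unquoted $pattern is used as a glob; in case patterns * also matches "/".
    case "$path" in
      $pattern)
        case "$flag" in
          --exclude) decision="exclude" ;;
          --include) decision="include" ;;
        esac
        ;;
    esac
  done
  printf '%s\n' "$decision"
}
```

With --exclude "*" --include "*.jpg", a path like vacation/beach.jpg comes out as include. Reversing the flags to --include "*.jpg" --exclude "*" excludes everything, because the catch-all exclude now comes last.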

This flexibility helps target exactly what needs uploading while skipping unnecessary files—saving bandwidth and time during large transfers.

Advanced Upload Options

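Need public access right away? Add the --acl public-read flag during upload:

aws s3 cp myfile.txt s3://my-bucket/ --acl public-read

This only works on buckets that still allow ACLs and permit public access. New buckets have ACLs disabled (Object Ownership set to "Bucket owner enforced") and S3 Block Public Access turned on by default. On such buckets the request is rejected.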

Want faster uploads of big files? No extra command is needed. The AWS CLI automatically switches to multipart uploads for large files (by default, files of 8 MB or more) and uploads the parts in parallel.
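If the defaults are not fast enough on a high-bandwidth link, you can raise the CLI's s3 transfer settings with aws configure set. The setting names below (max_concurrent_requests, multipart_threshold, multipart_chunksize) are documented AWS CLI s3 configuration keys, with defaults of 10 requests, 8MB and 8MB. The specific values are only an example to benchmark against, and the run/DRY_RUN wrapper is a hypothetical helper.

```sh
#!/bin/sh
# Sketch: raise the default profile's S3 transfer settings.
# Example values only; measure before and after on your own link.
set -eu

# Print instead of executing when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

tune_s3_transfers() {
  concurrency="${1:-20}"  # parallel requests (CLI default: 10)
  threshold="${2:-64MB}"  # size at which multipart upload starts (default: 8MB)
  chunk="${3:-16MB}"      # size of each uploaded part (default: 8MB)
  run aws configure set default.s3.max_concurrent_requests "$concurrency"
  run aws configure set default.s3.multipart_threshold "$threshold"
  run aws configure set default.s3.multipart_chunksize "$chunk"
}
```

More concurrency is not always faster. On a slow or shared connection, extra parallel requests mostly compete with each other, so change one value at a time and compare.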

How to Download Files Using S3 Command Line?

Downloading from S3 to local storage uses the same commands with the source and destination swapped.

Downloading Single Files vs Folders

To grab one file from an S3 bucket onto your machine:

aws s3 cp s3://my-bucket/myfile.txt ./

This copies “myfile.txt” into your current working directory locally.

For downloading whole folders—including everything inside them—again use --recursive:

aws s3 cp s3://my-bucket/project-data /local/project-data --recursive
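Before pulling down a large prefix, you can preview the transfer with the CLI's --dryrun flag, which prints one line per planned operation without copying anything. This sketch counts those lines. It assumes each planned download line begins with "(dryrun) download:", which matches the CLI output we are aware of but is worth confirming against your version. The function names are hypothetical.

```sh
#!/bin/sh
# Sketch: count how many objects a recursive download would fetch.
set -eu

# Read --dryrun output on stdin and print the number of planned downloads.
count_planned_downloads() {
  # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps set -e happy.
  grep -c '^(dryrun) download:' || true
}

preview_download() {
  src="$1"
  dest="$2"
  n="$(aws s3 cp "$src" "$dest" --recursive --dryrun | count_planned_downloads)"
  printf '%s object(s) would be downloaded from %s\n' "$n" "$src"
}
```

For example, preview_download s3://my-bucket/project-data /local/project-data reports the count without transferring any data.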

Filtering Downloads by File Type

Maybe you only need CSV reports out of thousands of mixed documents stored remotely? Combine filters like before:

aws s3 cp s3://my-bucket/data /local/data --recursive --exclude "*" --include "*.csv"

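This keeps downloads small even in buckets full of mixed content. Note that filtering happens on your machine: the CLI still lists every object under the source prefix, so pointing the command at a narrower prefix is faster for very large buckets.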

Syncing Local Folders With Buckets

For ongoing projects where files change often, the sync command is the better fit.

To copy new and changed files from a local folder into an S3 bucket prefix:

aws s3 sync /local/source-folder s3://my-bucket/destination-folder

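To sync in the other direction, swap the two arguments. Each run is one-way: it copies from source to destination and does not merge changes made on both sides.

Sync compares each file's size and last-modified time, so only new or changed files are transferred on each run. This saves a lot of time over repeated full uploads or downloads. By default, sync never deletes anything at the destination. Add --delete if you want files removed from the source to be removed there too.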
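A common use of sync is a one-way nightly mirror. This sketch adds two real sync options: --delete, which removes destination objects that no longer exist in the source, and --exclude. Because --delete removes data, preview the command with --dryrun before scheduling it. The directory, bucket, prefix, exclusion pattern and DRY_RUN wrapper are placeholders.

```sh
#!/bin/sh
# Sketch: one-way mirror of a local directory into an S3 prefix.
set -eu

# Print instead of executing when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

mirror_to_s3() {
  src="$1"
  bucket="$2"
  prefix="$3"
  # --delete: remove objects under the prefix that were deleted locally.
  # --exclude: skip temporary files (example pattern).
  run aws s3 sync "$src" "s3://$bucket/$prefix" --delete --exclude "*.tmp"
}
```

A crontab entry such as 0 2 * * * /usr/local/bin/mirror-reports.sh would run it at 02:00 every day, where mirror-reports.sh is a hypothetical wrapper script that calls mirror_to_s3.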

How to List Objects in S3 Using Command Line?

Keeping track of what’s stored where matters as much as moving data itself! Listing features help admins audit usage fast without logging into consoles every time something changes.

To see all buckets under your account at once:

aws s3 ls

List everything inside a specific bucket’s root level?

aws s3 ls s3://my-bucket/

Drill deeper into subfolders (“prefixes”) within that bucket?

aws s3 ls s3://my-bucket/reports/

To list every object under a prefix, including those in nested folders, with human-readable sizes and a total object count and size at the end, combine these flags:

aws s3 ls s3://my-bucket/archive/ --recursive --human-readable --summarize

Want even more control? Pipe results through grep or awk on Unix systems—or redirect output into logfiles for later review.
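As an example of piping ls output through awk, the function below totals object sizes per top-level prefix. It assumes the plain aws s3 ls --recursive format: date, time, size in bytes, then the object key. Leave out --human-readable, which changes the size column; summary lines from --summarize are skipped by the field check. The function name is hypothetical.

```sh
#!/bin/sh
# Sketch: total object sizes per top-level prefix from
# "aws s3 ls s3://BUCKET/ --recursive" output (without --human-readable).
set -eu

sum_by_prefix() {
  awk 'NF >= 4 && $3 ~ /^[0-9]+$/ {
    size = $3
    key = $0
    # Strip the date, time and size columns; this keeps keys containing spaces.
    sub(/^[^ ]+ +[^ ]+ +[0-9]+ /, "", key)
    slash = index(key, "/")
    prefix = (slash > 0) ? substr(key, 1, slash - 1) : "(root)"
    total[prefix] += size
  }
  END { for (p in total) printf "%s %.0f\n", p, total[p] }' | LC_ALL=C sort
}
```

For example: aws s3 ls s3://my-bucket/ --recursive | sum_by_prefix prints one "prefix bytes" line per top-level folder, with objects at the bucket root grouped under (root).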

Protecting Your Amazon S3 Data with Vinchin Backup & Recovery

Beyond mastering daily operations via command-line tools, it's crucial to ensure long-term protection for critical Amazon S3 data. Vinchin Backup & Recovery stands out as a professional enterprise-grade file backup solution supporting nearly all mainstream file storage platforms—including Amazon S3 object storage, Windows/Linux file servers, and NAS devices. For organizations managing vast amounts of cloud-based content, Vinchin Backup & Recovery delivers exceptionally fast backup speeds compared to competitors thanks to its advanced architecture tailored for high-volume environments.

Leveraging proprietary technologies such as simultaneous scanning plus data transfer and merged file transmission, Vinchin Backup & Recovery achieves industry-leading performance that dramatically shortens backup windows even for massive datasets. Among its robust feature set are incremental backup support, wildcard filtering capabilities, multi-level compression options, cross-platform restore flexibility (store backups on any supported platform), and strong encryption—all designed for efficiency, security, and operational simplicity.

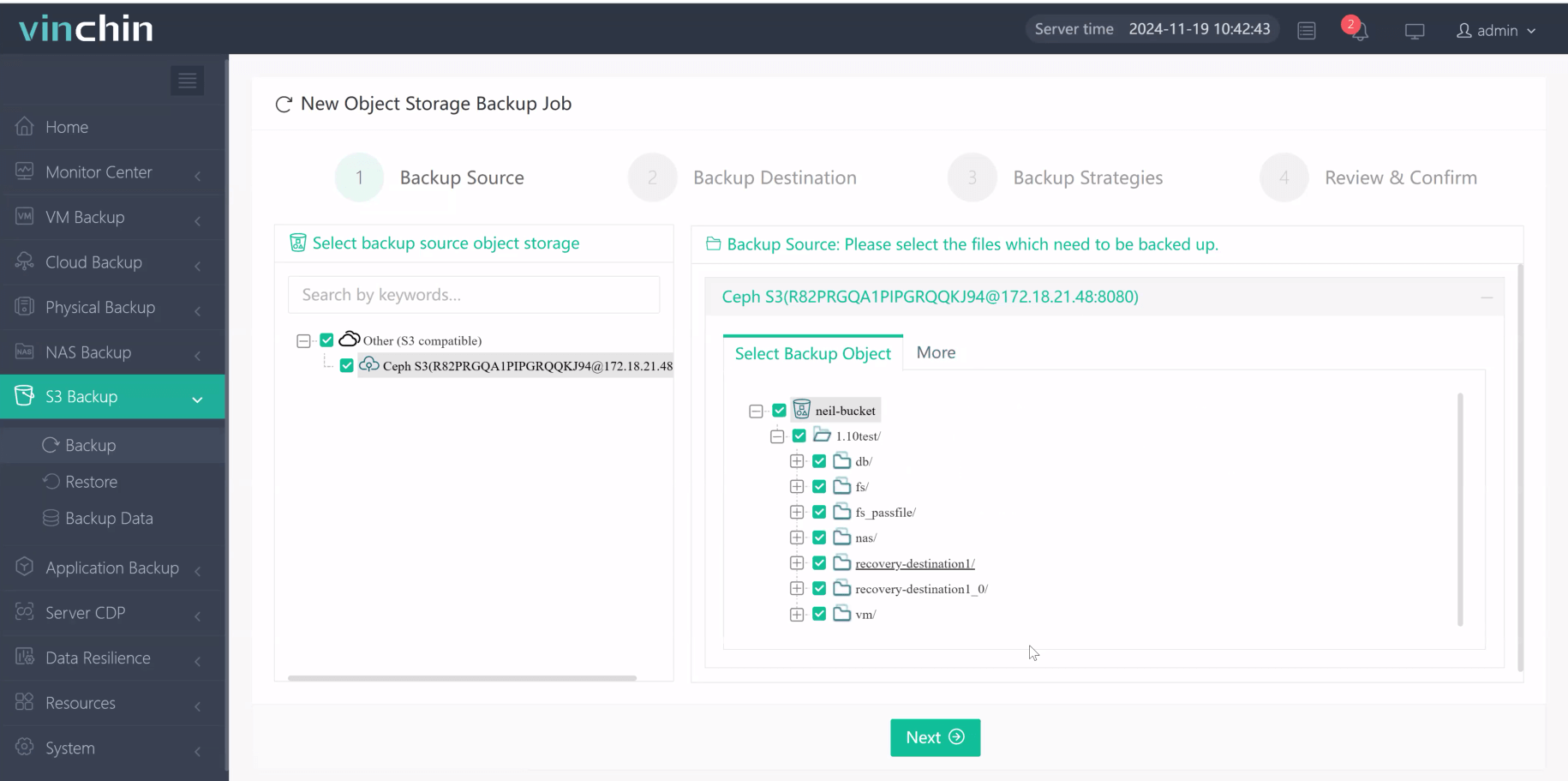

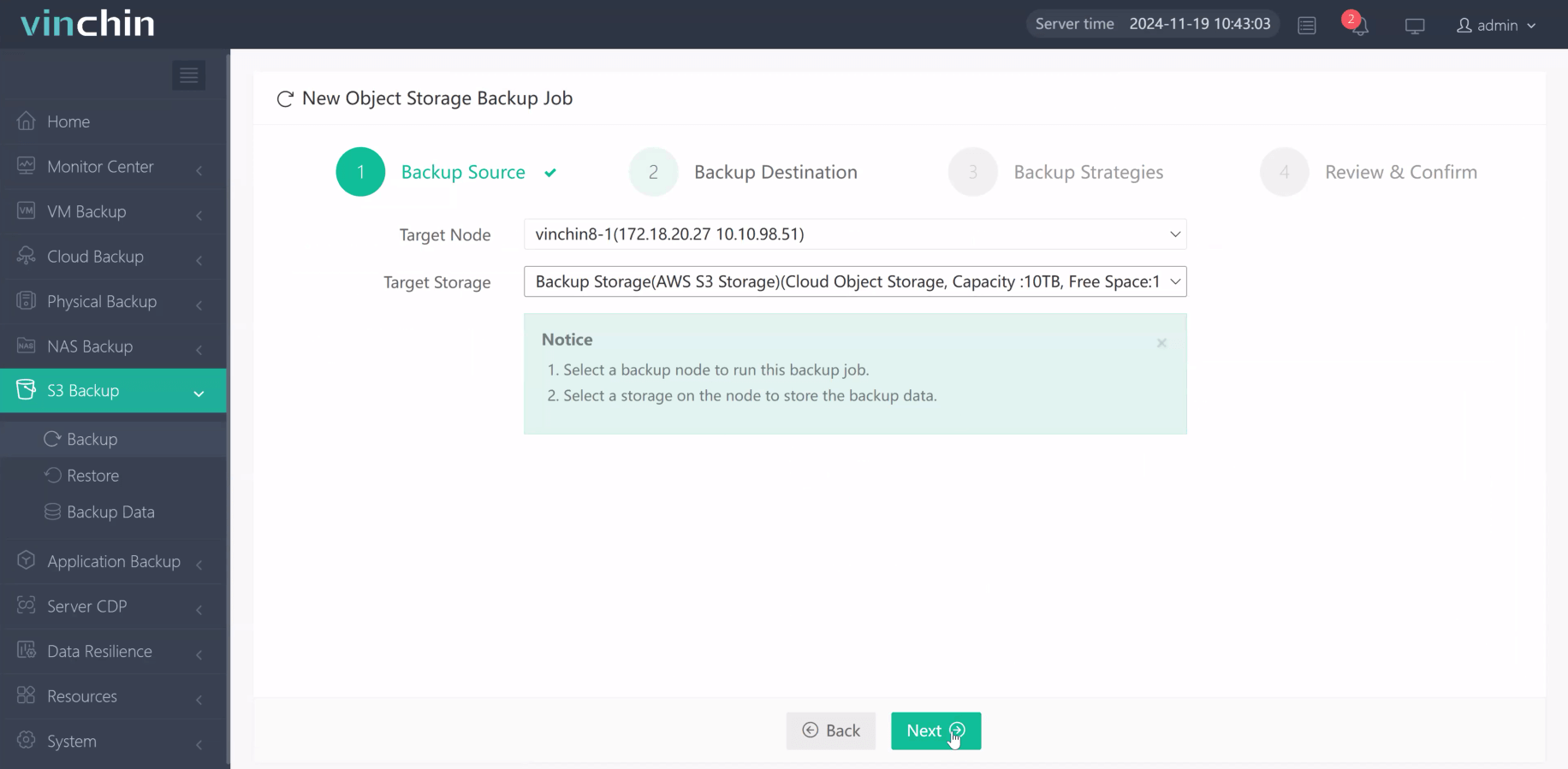

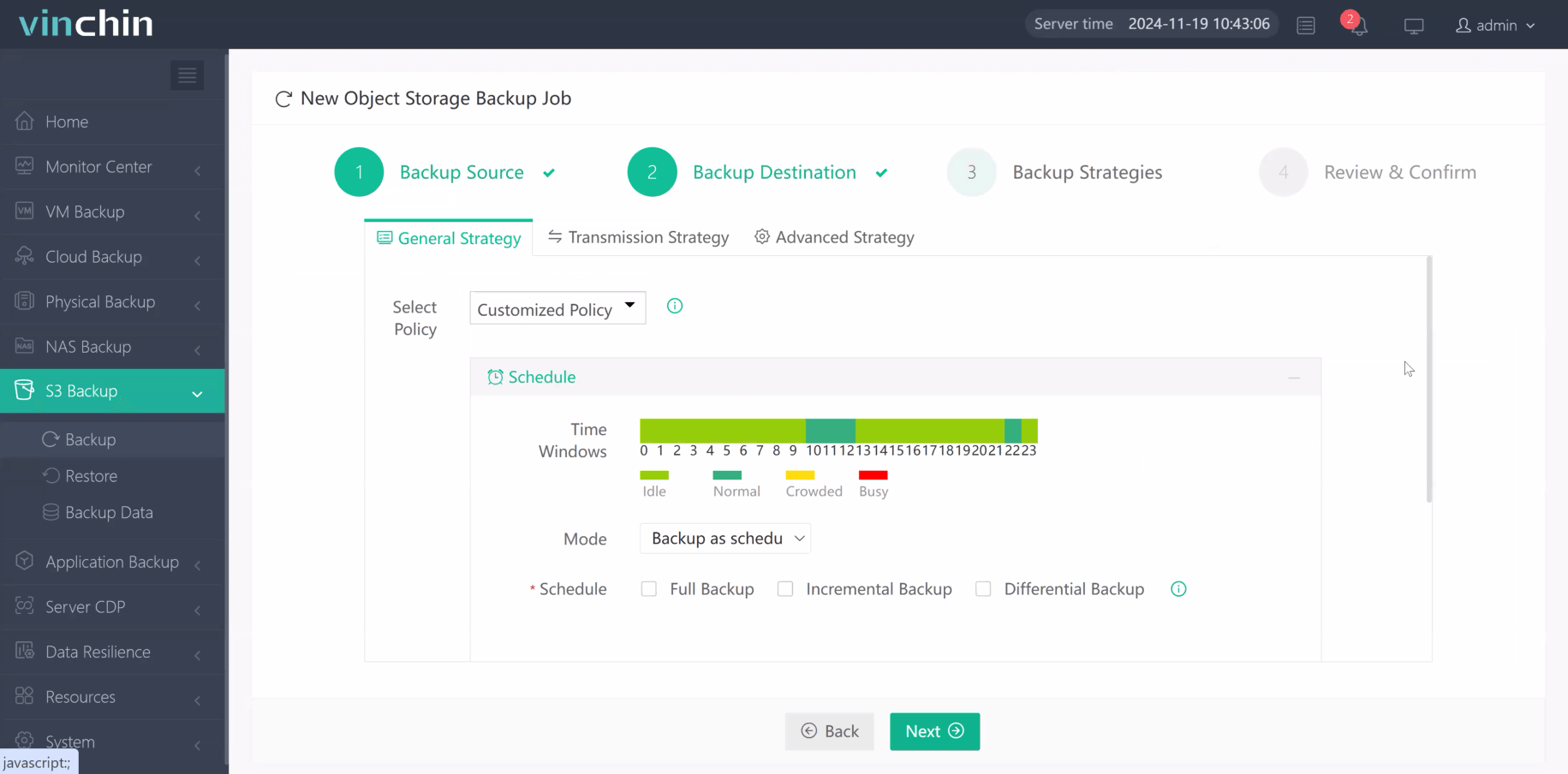

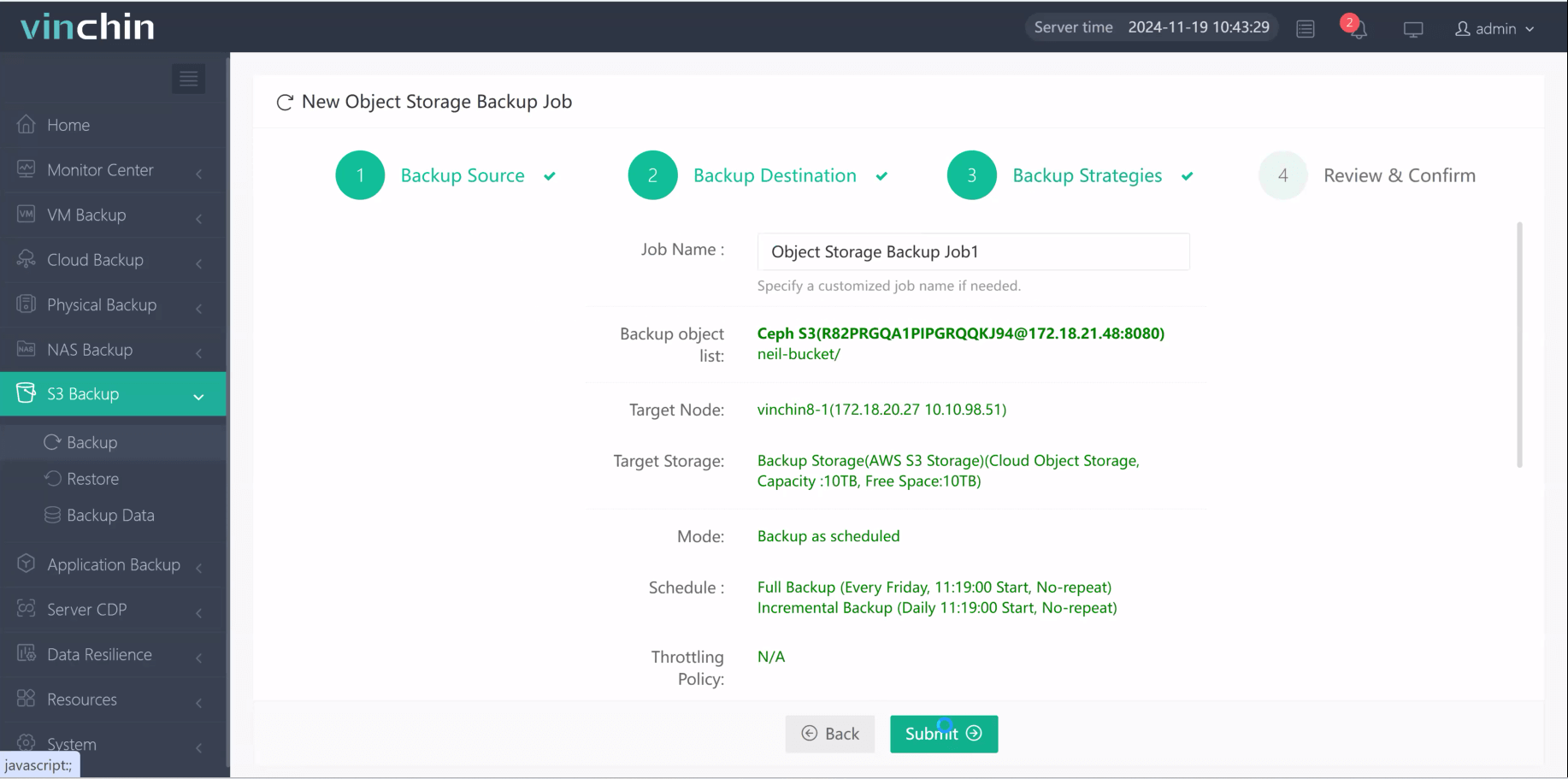

The intuitive web console makes protecting Amazon S3 objects straightforward. Typically you'll complete four steps:

Step 1. Select the Amazon S3 files to back up;

Step 2. Choose backup storage;

Step 3. Define backup strategy;

Step 4. Submit job.

Recognized with top ratings among enterprise users worldwide, Vinchin Backup & Recovery offers a fully featured free trial valid for 60 days. Try it to see why leading organizations trust Vinchin Backup & Recovery with their data protection needs.

Frequently Asked Questions About The S3 Command Line

Q1: How do I sync a folder on my PC, Mac, or Linux machine with an Amazon S3 bucket?

A1: Run aws s3 sync /source/path s3://bucket-name/dest-path. To sync in the other direction, swap the two arguments.

Q2: Can uploaded objects be made public immediately?

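A2: Yes. Add --acl public-read to the upload command to grant public read access. This only works if the bucket allows ACLs and its Block Public Access settings permit public objects. New buckets disable ACLs and block public access by default.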

Q3: What should I check first if uploads/downloads feel unusually slow?

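A3: Check your internet bandwidth, try smaller batches, and use a bucket in a region physically close to you. If you suspect an SSL/TLS problem (for example, a proxy that inspects traffic), you can add --no-verify-ssl briefly to test. Because it turns off certificate verification, never leave it in scripts.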

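Q4: How do I safely delete individual objects or buckets with the CLI?

A4: Run aws s3 rm s3://bucket/objectname.ext to delete a single object. To delete a bucket, run aws s3 rb s3://bucketname, which only works on an empty bucket. Adding --force deletes every object in the bucket first and then removes the bucket. Always double-check the target before running either command, for example by running aws s3 rm with --dryrun first.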
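One way to build the double-check habit into scripts is a small guard. It refuses to recursively delete a whole bucket, and it previews everything else with --dryrun before asking for confirmation. The aws s3 rm command and its --recursive and --dryrun options are real; the function names and the confirmation prompt are hypothetical.

```sh
#!/bin/sh
# Sketch: guarded recursive delete under an S3 prefix.
set -eu

# Succeeds (returns 0) when the URI names a bucket with no key or prefix.
is_bucket_root() {
  case "$1" in
    s3://*/?*) return 1 ;;  # something follows the bucket name
    s3://*) return 0 ;;     # s3://bucket or s3://bucket/
    *) return 1 ;;
  esac
}

safe_rm_prefix() {
  uri="$1"
  if is_bucket_root "$uri"; then
    echo "refusing to delete an entire bucket: $uri" >&2
    return 1
  fi
  # Show what would be deleted without deleting anything.
  aws s3 rm "$uri" --recursive --dryrun
  printf 'Delete the objects listed above? [y/N] '
  answer=""
  read -r answer || true
  if [ "$answer" != "y" ]; then
    echo "aborted" >&2
    return 1
  fi
  aws s3 rm "$uri" --recursive
}
```

For example, safe_rm_prefix s3://my-bucket/old-logs/ lists the matching objects and deletes them only after you type y. safe_rm_prefix s3://my-bucket stops immediately.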

Conclusion

The power of the S3 command line lies in its speed and flexibility, from simple uploads and downloads to scripted automation, all while reducing day-to-day manual effort. For complete protection alongside efficient operations, consider pairing these skills with secure backups from Vinchin Backup & Recovery.
