- What Is Kubernetes Database Backup?
- Why Back Up Databases in Kubernetes?
- Method 1: Back Up Databases in Kubernetes Using Velero
- Method 2: Back Up Databases in Kubernetes Using Database-Native Utilities
- Enterprise-Level Protection with Vinchin Backup & Recovery
- Kubernetes Database Backup FAQs
- Conclusion

Kubernetes has changed how we deploy and manage applications at scale. But as more organizations run databases inside Kubernetes clusters, protecting that data becomes critical, because losing it can mean lost revenue, compliance violations, or even business failure.

A solid Kubernetes database backup strategy is not just a checkbox; it is your safety net when disaster strikes or mistakes happen. In this guide, you’ll learn what makes Kubernetes database backups unique, why they matter, and how to implement them using both open-source tools and enterprise solutions.

What Is Kubernetes Database Backup?

A Kubernetes database backup is a reliable copy of your database data together with the Kubernetes resources that run it: pods, persistent volumes (PVs), persistent volume claims (PVCs), ConfigMaps, Secrets, and Services.

In traditional environments, you might back up a single server or virtual machine running your database. In Kubernetes, everything is distributed: your database runs inside pods, its storage lives on PVs bound through PVCs, and configuration details are stored in objects such as Secrets and ConfigMaps.

To truly protect a stateful application in Kubernetes, you must capture both the actual data (on disk) and all the objects that define how it runs.

This lets you restore not just the raw tables but also credentials, connection settings, and even automated failover logic, if present.

If you only back up one part (say just the PV), recovery could be incomplete or fail entirely.
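Before you plan a backup, it helps to see which objects a database deployment actually consists of. The sketch below lists them for one namespace. It assumes kubectl is configured for your cluster. The function name list_db_objects is hypothetical, and the KUBECTL override exists only so the function can be dry-run without a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the namespaced objects a database deployment
# typically depends on. PersistentVolumes are cluster-scoped, so list them
# separately with `kubectl get pv`.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
list_db_objects() {
  local ns="$1"
  "${KUBECTL:-kubectl}" get \
    statefulsets,deployments,persistentvolumeclaims,configmaps,secrets,services \
    --namespace "$ns" -o name
}

# Usage (requires cluster access):
#   list_db_objects my-database-namespace
```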

Why Back Up Databases in Kubernetes?

Backing up databases in Kubernetes is essential for several reasons—and some are unique to containerized environments.

First off: containers are ephemeral by design. Pods come and go as nodes fail or get upgraded; PVCs might be deleted accidentally; namespaces can disappear due to human error or automation gone wrong.

Second: without regular Kubernetes database backups, you risk permanent data loss from hardware failures, ransomware attacks (which target cloud workloads too), software bugs that corrupt storage layers, or even simple typos during routine maintenance.

Third: regulatory compliance often requires proof of recoverability for sensitive information stored in databases—think GDPR or HIPAA mandates.

Fourth: backups make migrations safer when moving between clusters or cloud providers—a common scenario as companies modernize infrastructure.

Finally: testing new features safely often means restoring production-like datasets into isolated namespaces—a process made possible by robust backup/restore workflows.

In short, if your business depends on its data (and whose doesn’t?), regular Kubernetes database backup is non-negotiable insurance against downtime and disaster.

Method 1: Back Up Databases in Kubernetes Using Velero

Velero is an open-source tool for backing up and restoring Kubernetes resources and the persistent volumes attached to them, whether for an entire cluster or for selected namespaces. It stores resource manifests in object storage and snapshots the underlying volumes when your cloud provider or CSI driver supports it.

Before starting with Velero:

- Make sure it’s installed on your cluster
- Confirm access to object storage like AWS S3
- Check that your StorageClass supports volume snapshots
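You can check the first two prerequisites from a shell before creating any backups. This sketch assumes the velero CLI is on your PATH and can reach the Velero server. The names velero_preflight and the VELERO override variable are hypothetical. The third prerequisite, snapshot support, depends on your storage driver and is worth checking separately.

```shell
#!/usr/bin/env bash
# Hypothetical preflight check for the Velero prerequisites.
# VELERO can be overridden (e.g. VELERO=echo) to dry-run the commands.
velero_preflight() {
  local velero="${VELERO:-velero}"

  # 1. CLI installed and server reachable (prints client and server versions)
  "$velero" version ||
    { echo "velero is not installed or the server is unreachable" >&2; return 1; }

  # 2. List configured backup storage locations (e.g. an S3 bucket);
  #    review the output to confirm the location you expect is there
  "$velero" backup-location get ||
    { echo "could not list backup storage locations" >&2; return 1; }

  echo "preflight OK"
}
```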

Handling Persistent Volumes with Velero

Persistent volumes store the actual database files, the heart of any Kubernetes database backup. Not every StorageClass supports snapshots out of the box, so check compatibility first, either in your vendor’s documentation or with kubectl get storageclass, which shows the provisioner (storage driver) behind each class.

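If supported, Velero snapshots the volumes alongside the resource manifests. These snapshots are crash-consistent, so use Velero backup hooks to quiesce the database first if you need application-level consistency.

Otherwise, consider Velero’s file-system backup (Kopia or Restic) or the native dump utilities described in the next method.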

You can verify available snapshot locations with:

```shell
velero get snapshot-locations
```

This helps ensure your backups include the disk data, not just the YAML definitions.
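To go one step beyond kubectl get storageclass, the sketch below reports whether a VolumeSnapshotClass exists for each StorageClass's provisioner. It assumes the CSI snapshot CRDs are installed; if they are not, the first kubectl call fails. It only covers CSI snapshots, because provider snapshot plugins are configured through Velero snapshot locations instead. The name check_snapshot_support is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each StorageClass, report whether a
# VolumeSnapshotClass uses the same CSI driver as the class's provisioner.
# KUBECTL can be overridden for testing.
check_snapshot_support() {
  local kc="${KUBECTL:-kubectl}" drivers
  drivers=$("$kc" get volumesnapshotclass \
    -o jsonpath='{range .items[*]}{.driver}{"\n"}{end}') || return 1

  "$kc" get storageclass \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.provisioner}{"\n"}{end}' |
  while read -r name provisioner; do
    [ -n "$name" ] || continue
    if printf '%s\n' "$drivers" | grep -qxF "$provisioner"; then
      echo "$name: snapshot class available ($provisioner)"
    else
      echo "$name: no VolumeSnapshotClass for $provisioner"
    fi
  done
}
```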

Backing Up a Database Namespace with Velero

To back up an entire namespace containing your stateful app:

```shell
velero backup create my-db-backup --include-namespaces my-database-namespace --snapshot-volumes
```

This command tells Velero to capture everything in the namespace, including the Deployment or StatefulSet running the database engine and the associated PVCs and PVs holding the actual records.
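In scripts, it helps to wait for the backup to finish and then confirm its phase rather than assume it succeeded. This sketch assumes Velero runs in its default velero namespace. The name backup_namespace and the VELERO/KUBECTL overrides are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: back up one namespace, wait for completion,
# then fail unless the Backup resource reports phase "Completed"
# (other phases include "PartiallyFailed" and "Failed").
backup_namespace() {
  local name="$1" ns="$2"
  local velero="${VELERO:-velero}" kc="${KUBECTL:-kubectl}" phase

  "$velero" backup create "$name" \
    --include-namespaces "$ns" --snapshot-volumes --wait || return 1

  # Backup objects live in the Velero install namespace (assumed: velero)
  phase=$("$kc" -n velero get backups.velero.io "$name" \
    -o jsonpath='{.status.phase}') || return 1

  if [ "$phase" != "Completed" ]; then
    echo "backup $name ended in phase '$phase'; see: velero backup describe $name --details" >&2
    return 1
  fi
  echo "backup $name completed"
}
```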

Want more control? Limit scope further:

```shell
velero backup create my-db-backup \
  --include-namespaces my-database-namespace \
  --include-resources statefulsets,deployments,persistentvolumeclaims,persistentvolumes,secrets \
  --snapshot-volumes
```

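This limits the backup to the workloads running the database (Deployments or StatefulSets), the PVCs and PVs storing tables, indexes, and logs, and the Secrets holding passwords and connection strings needed at restore time. Pods are not listed because the StatefulSet or Deployment recreates them after a restore.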

Restoring Your Database from a Velero Backup

When disaster strikes, or during migration and testing, you can bring everything back with:

```shell
velero restore create --from-backup my-db-backup
```

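Velero recreates the captured resources and restores PV contents from snapshots when available. By default, it skips resources that already exist in the cluster instead of overwriting them, so restore into a namespace that is empty or has been deleted. To restore into a different namespace, for example for testing, remap it with --namespace-mappings.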
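For a restore test, you might map the production namespace onto a scratch namespace and then inspect what came back. This is a sketch: restore_to_namespace and the "<backup>-to-<namespace>" restore naming are hypothetical, while --namespace-mappings takes source:target pairs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restore a Velero backup into a different namespace
# and list the recreated workloads and volume claims.
restore_to_namespace() {
  local backup="$1" src_ns="$2" dst_ns="$3"
  local velero="${VELERO:-velero}" kc="${KUBECTL:-kubectl}"

  "$velero" restore create "${backup}-to-${dst_ns}" \
    --from-backup "$backup" \
    --namespace-mappings "${src_ns}:${dst_ns}" \
    --wait || return 1

  "$kc" get statefulsets,pods,persistentvolumeclaims --namespace "$dst_ns"
}

# Usage:
#   restore_to_namespace my-db-backup my-database-namespace my-database-test
```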

Scheduling Regular Backups with Velero

Automate daily protection by scheduling jobs via cron syntax:

```shell
velero schedule create daily-db-backup \
  --schedule "0 2 * * *" \
  --include-namespaces my-database-namespace \
  --snapshot-volumes
```

This creates a fresh backup every day at 2 AM (in the Velero server’s time zone, which is usually UTC), helping you meet retention policies without manual effort.
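Two options pair well with a schedule. A time-to-live makes old backups expire, and an on-demand run confirms the schedule works without waiting for the next cron slot. This is a sketch: the 30-day TTL (720h) and the function name are assumptions to adjust to your retention policy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a daily schedule whose backups expire after
# a TTL, then trigger one backup from it immediately as a smoke test.
create_daily_schedule() {
  local ns="$1" ttl="${2:-720h}"   # assumed default: keep backups for 30 days
  local velero="${VELERO:-velero}"

  "$velero" schedule create daily-db-backup \
    --schedule "0 2 * * *" \
    --include-namespaces "$ns" \
    --snapshot-volumes \
    --ttl "$ttl" || return 1

  # Run a backup now using the schedule's settings
  "$velero" backup create --from-schedule daily-db-backup
}
```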

Method 2: Back Up Databases in Kubernetes Using Database-Native Utilities

Sometimes native tools like mysqldump (for MySQL and MariaDB) or pg_dump (for PostgreSQL) offer more flexibility than cluster-wide snapshots, especially if you need logical exports that are portable across versions and cloud providers, or table-level granularity during restores.

These utilities export schema and data directly from the running database pods. You can then upload the results anywhere, from NFS shares to object stores such as Amazon S3, Azure Blob Storage, or Google Cloud Storage.
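For a one-off logical export, you can run the dump tool inside the database pod and stream the result back to your workstation. This PostgreSQL sketch assumes pg_dump inside the pod can authenticate as the postgres user without a password prompt, for example through local trust or a PGPASSWORD set in the container. The function name and arguments are hypothetical. The custom format (--format=custom) is what enables selective, table-level restores with pg_restore.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dump one PostgreSQL database from a running pod
# to a local file in pg_dump's custom (compressed, pg_restore-able) format.
dump_postgres() {
  local ns="$1" pod="$2" db="$3" out="$4"
  # No -t flag: a TTY would mangle the binary output stream
  "${KUBECTL:-kubectl}" exec --namespace "$ns" "$pod" -- \
    pg_dump -U postgres --format=custom "$db" > "$out" || {
    rm -f "$out"   # do not leave a partial dump behind
    return 1
  }
}

# Usage:
#   dump_postgres my-database-namespace postgres-0 appdb appdb.dump
# Later, restore a single table from the dump:
#   pg_restore -d appdb --table=orders appdb.dump
```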

Ensuring Backup Consistency During High Write Activity

Consistency matters most when users are writing new records while a dump runs. Both MySQL (mysqldump) and PostgreSQL (pg_dump) support transaction-consistent exports:

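- For MySQL, add the --single-transaction flag, which dumps from a consistent snapshot without locking tables. This guarantee holds only for transactional engines such as InnoDB.
- For PostgreSQL, pg_dump is consistent by default: it reads everything from a single transaction snapshot and takes only ACCESS SHARE locks, so normal reads and writes continue, although schema changes such as ALTER TABLE must wait until the dump finishes.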

For extra safety, consider pausing writes briefly during scheduled jobs, or run dumps against a read replica, if you have one, so the primary is not affected.
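Because --single-transaction only protects transactional tables, check whether a database still contains tables on another engine (MyISAM, for example) before relying on it. This sketch assumes the mysql client is available, DB_USER and DB_HOST are set, and the password is supplied through MYSQL_PWD. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: list base tables in a schema that do not use InnoDB,
# since mysqldump --single-transaction cannot give them a consistent view.
# Expects DB_USER and DB_HOST to be set and the password in MYSQL_PWD.
non_innodb_tables() {
  local schema="$1"  # interpolated into SQL: pass trusted values only
  "${MYSQL:-mysql}" -N -B -u "$DB_USER" -h "$DB_HOST" -e \
    "SELECT table_name, engine
       FROM information_schema.tables
      WHERE table_schema = '${schema}'
        AND table_type = 'BASE TABLE'
        AND engine <> 'InnoDB';"
}

# Usage (empty output means every table uses InnoDB):
#   non_innodb_tables appdb
```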

Example Workflow: Automating MySQL Dumps via CronJob

Here’s how you might automate nightly logical exports using CronJobs inside Kubernetes:

1. Write Secure Dump Script

Store credentials as environment variables injected from Secrets rather than hardcoding them. Example script (backup.sh):

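```bash
#!/bin/bash
set -euo pipefail  # Exit on errors, unset variables, and pipeline failures

# The password comes from a Secret-injected environment variable;
# MYSQL_PWD keeps it off the mysqldump command line.
export MYSQL_PWD="${DB_PASSWORD}"

# /tmp is usually writable even in locked-down containers
BACKUP_FILENAME="/tmp/backup_$(date -u +%Y%m%d_%H%M%S).sql"
trap 'rm -f "$BACKUP_FILENAME"' EXIT  # Remove the local copy even if a step fails

mysqldump --single-transaction -u "$DB_USER" -h "$DB_HOST" "$DB_NAME" > "$BACKUP_FILENAME"

aws s3 cp "$BACKUP_FILENAME" "s3://${S3_BUCKET}/"
```

Note: Because the script sets MYSQL_PWD, the password won’t appear in process lists. Never echo passwords directly. Newer MySQL releases deprecate MYSQL_PWD; an option file passed with --defaults-extra-file is the longer-term alternative.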

2. Build Container Image

Include the required binaries (mysqldump and the AWS CLI), then push the image to a private registry that your cluster nodes can pull from.
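Before pushing, confirm that the image actually contains both tools. This sketch assumes Docker and a Dockerfile in the current directory. The name build_backup_image is hypothetical, and the image reference follows the placeholder used in the CronJob manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the backup image, verify the required
# binaries run inside it, and push only if every step succeeds.
build_backup_image() {
  local image="$1" docker="${DOCKER:-docker}"
  "$docker" build -t "$image" . &&
    "$docker" run --rm --entrypoint mysqldump "$image" --version &&
    "$docker" run --rm --entrypoint aws "$image" --version &&
    "$docker" push "$image"
}

# Usage:
#   build_backup_image <your-registry>/mysql-backup-image:v1
```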

3. Create CronJob Resource

The sample manifest below schedules the job nightly at 1 AM UTC:

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: mysql-backup-job
spec:
  schedule: "0 1 * * *"
  timeZone: "Etc/UTC"        # Pins the schedule to UTC (spec.timeZone is GA in Kubernetes 1.27)
  concurrencyPolicy: Forbid  # Never start a backup while the previous one is still running
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: mysql-backup-container
              image: <your-registry>/mysql-backup-image:v1  # Replace accordingly
              envFrom:
                - secretRef:
                    name: mysql-backup-secrets  # Store DB credentials and S3 keys here
          restartPolicy: OnFailure  # Restart the container if the backup fails
```

Tip: Use kubectl create secret generic mysql-backup-secrets ... to inject sensitive values safely.
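One way to create that Secret without typing values on the command line is to keep them in a local env file and apply the CronJob into the same namespace. This is a sketch: the file names backup.env and mysql-backup-cronjob.yaml and the function name are hypothetical. The keys must match the variables backup.sh reads (DB_USER, DB_PASSWORD, DB_HOST, DB_NAME, S3_BUCKET), plus whatever credentials your AWS CLI needs.

```shell
#!/usr/bin/env bash
# Hypothetical deployment helper. backup.env contains KEY=value lines such as
#   DB_USER=backup
#   DB_NAME=appdb
# plus DB_PASSWORD, DB_HOST, S3_BUCKET and AWS credentials
# (e.g. AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY). Keep it out of version control.
deploy_backup_cronjob() {
  local ns="$1" kc="${KUBECTL:-kubectl}"

  "$kc" create secret generic mysql-backup-secrets \
    --namespace "$ns" --from-env-file=backup.env || return 1

  "$kc" apply --namespace "$ns" -f mysql-backup-cronjob.yaml
}
```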

4. Monitor Job Status

Check logs regularly via:

```shell
kubectl logs job/<job-name>
kubectl get cronjob/mysql-backup-job
```

Set alerts on failure events so missed backups never go unnoticed!
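Job logs explain why a run failed, but they cannot tell you that a run never happened. A complementary check alerts when the CronJob's last successful run is too old. This sketch assumes GNU date and a Kubernetes version that reports .status.lastSuccessfulTime on CronJobs. The 26-hour threshold (a daily job plus two hours of slack) and the function names are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical freshness check for a daily backup CronJob.

# Succeeds (exit 0) when the backup is stale: no recorded success,
# or the last success is more than max_age seconds before now_epoch.
is_backup_stale() {
  local last_success="$1" now_epoch="$2" max_age="$3" last_epoch
  [ -n "$last_success" ] || return 0
  last_epoch=$(date -u -d "$last_success" +%s) || return 0
  [ $(( now_epoch - last_epoch )) -gt "$max_age" ]
}

check_backup_freshness() {
  local name="$1" ns="$2" last
  last=$("${KUBECTL:-kubectl}" get cronjob "$name" --namespace "$ns" \
    -o jsonpath='{.status.lastSuccessfulTime}') || return 1
  if is_backup_stale "$last" "$(date -u +%s)" $(( 26 * 3600 )); then
    echo "ALERT: $name has no successful run in 26h (last: ${last:-never})" >&2
    return 1
  fi
  echo "OK: $name last succeeded at $last"
}
```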

5. Restoring Data from Logical Dumps

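Download the SQL file from S3 or another object store, then import it into the target database instance:

```shell
# MYSQL_PWD keeps the password out of the process list, unlike -p<password>
MYSQL_PWD="$DB_PASSWORD" mysql -u "$DB_USER" -h "$DB_HOST" "$DB_NAME" < /path/to/backup_file.sql
```

For other database engines, use the equivalent client, such as psql or pg_restore for PostgreSQL.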
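If the target database runs in a pod, you can also stream a dump from S3 straight into it without storing it locally. This sketch assumes the container has MYSQL_ROOT_PASSWORD in its environment, as deployments of the official MySQL image typically do, and that AWS CLI credentials are available on your workstation. The function name and arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stream a SQL dump from S3 into a MySQL pod.
# Run with `set -o pipefail` so a failed download is not masked by kubectl.
restore_mysql_from_s3() {
  local s3_uri="$1" ns="$2" pod="$3" db="$4"
  # "aws s3 cp <uri> -" writes the object to stdout; "kubectl exec -i" forwards
  # stdin to the pod, where the password is read from the container's own env.
  "${AWS:-aws}" s3 cp "$s3_uri" - |
    "${KUBECTL:-kubectl}" exec -i --namespace "$ns" "$pod" -- \
      sh -c 'export MYSQL_PWD="$MYSQL_ROOT_PASSWORD"; exec mysql -u root "$1"' sh "$db"
}

# Usage:
#   restore_mysql_from_s3 "s3://${S3_BUCKET}/backup_file.sql" my-database-namespace mysql-0 appdb
```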

Enterprise-Level Protection with Vinchin Backup & Recovery

For organizations seeking streamlined management and capabilities beyond native tools, an enterprise-grade solution can help. Vinchin Backup & Recovery is a professional platform built for comprehensive Kubernetes backup at scale. It offers full and incremental backups; fine-grained protection down to the namespace, application, PVC, or resource level; policy-based automation with both scheduled and one-off jobs; cross-cluster and cross-version recovery, even across heterogeneous environments; and security features such as encryption and WORM protection. Together, these features support efficient, reliable, and flexible operations in complex production environments.

The intuitive web console of Vinchin Backup & Recovery makes protecting your Kubernetes databases straightforward in four steps:

Step 1. Select the backup source

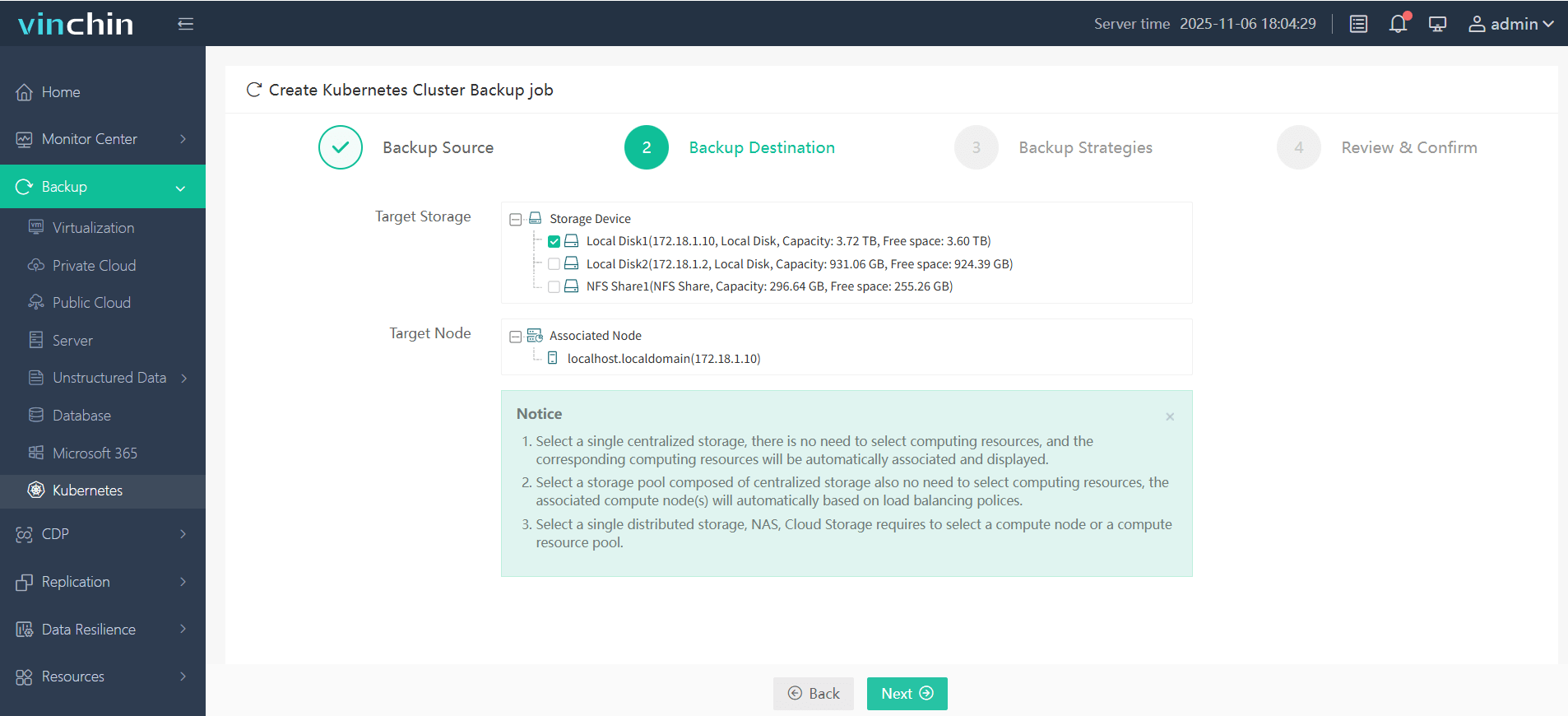

Step 2. Choose the backup storage location

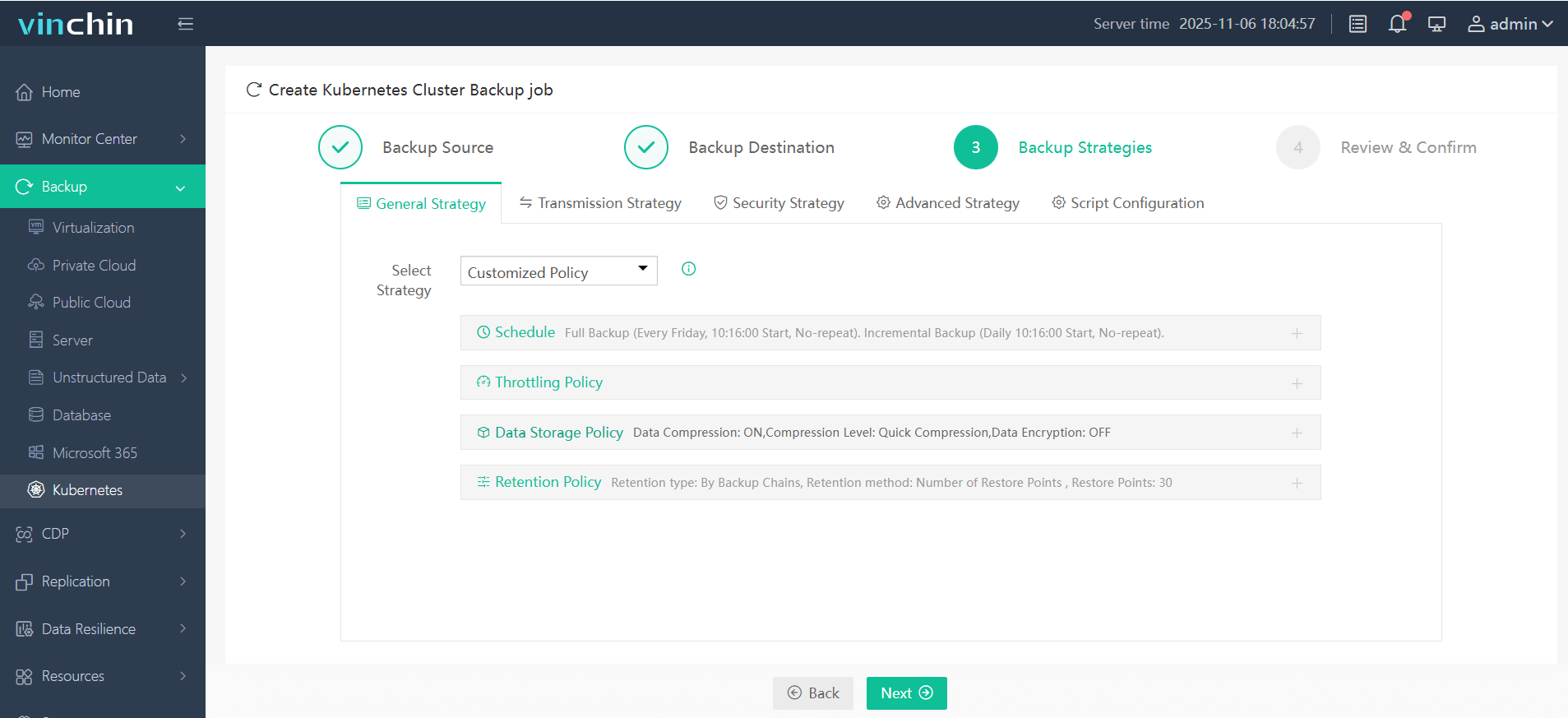

Step 3. Define the backup strategy

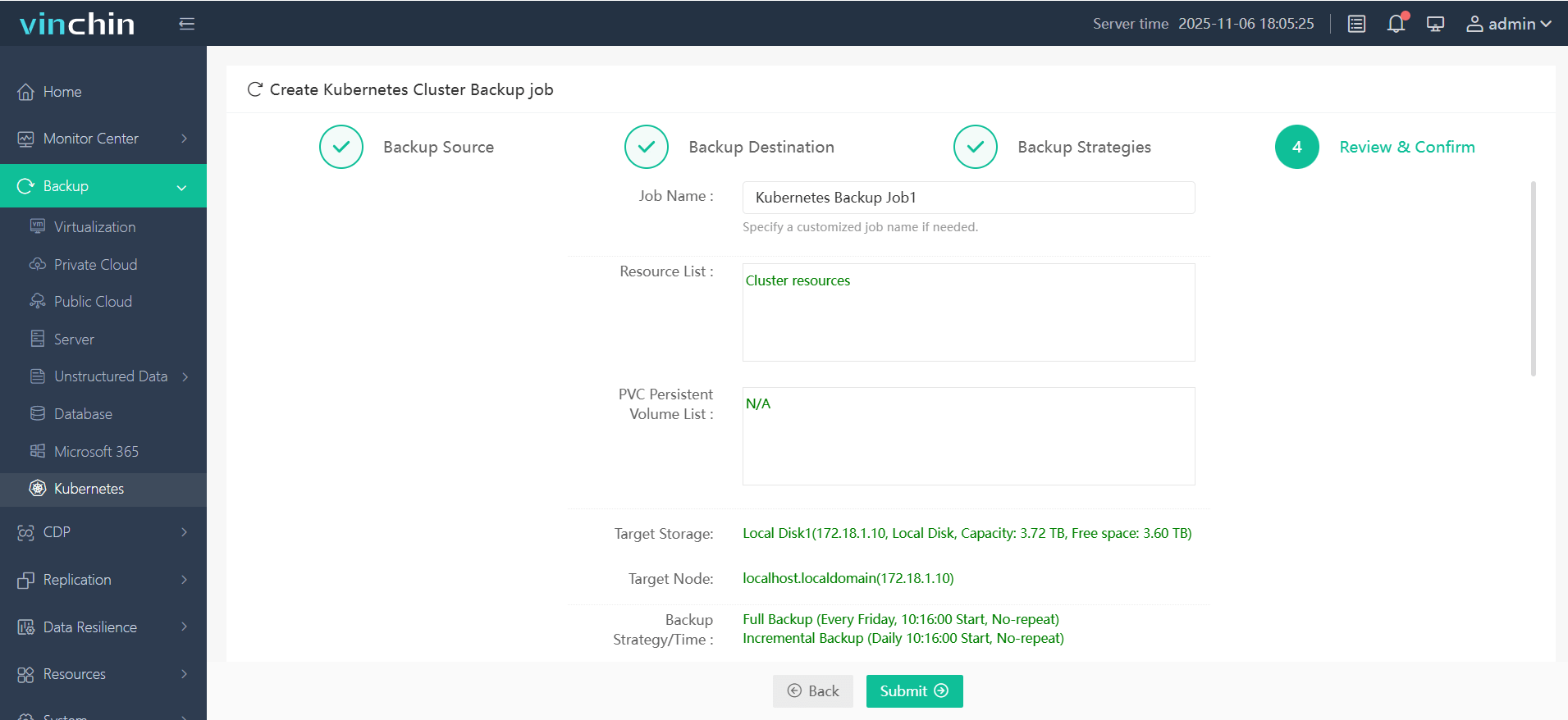

Step 4. Submit the job

Vinchin Backup & Recovery is trusted by enterprises of all sizes worldwide, with top ratings for reliability and support. It also offers a free, full-featured 60-day trial so you can experience its enterprise-grade protection firsthand.

Kubernetes Database Backup FAQs

Q1. How do I handle multi-tenancy when backing up databases across several namespaces?

A1. Use label selectors or namespace filters in your chosen tool, or Vinchin’s fine-grained selection, to isolate each tenant’s resources without overlap.
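With Velero, for example, the simplest per-tenant approach is one backup per tenant namespace, so each tenant can be restored independently. This sketch assumes each tenant lives in its own namespace. The function name and the "<namespace>-db-<timestamp>" naming scheme are hypothetical. If you also add a --selector, make sure the PVCs and Secrets carry the label too.

```shell
#!/usr/bin/env bash
# Hypothetical helper: one Velero backup per tenant namespace.
backup_tenants() {
  local velero="${VELERO:-velero}" stamp ns
  stamp=$(date -u +%Y%m%d%H%M)   # shared timestamp for this backup round
  for ns in "$@"; do
    "$velero" backup create "${ns}-db-${stamp}" \
      --include-namespaces "$ns" --snapshot-volumes || return 1
  done
}

# Usage:
#   backup_tenants tenant-a tenant-b tenant-c
```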

Q2. Can I encrypt my Kubernetes database backup before sending it offsite?

A2. Yes. Enable the encryption options provided by your backup tool or object storage service, and encrypt sensitive SQL dumps before uploading them.
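For client-side encryption of a SQL dump, one option is OpenSSL's enc command with a passphrase-derived key. This sketch assumes OpenSSL 1.1.1 or later (for -pbkdf2) and a passphrase in an exported variable named BACKUP_PASSPHRASE, ideally injected from a Secret. Both the variable and the function names are hypothetical. Store the passphrase somewhere other than the backup bucket, or the encryption adds little.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: encrypt a dump before upload, decrypt after download.
# -pass env:BACKUP_PASSPHRASE reads the (exported) passphrase from the
# environment, keeping it off the command line. CBC mode provides
# confidentiality but no tamper detection.
encrypt_dump() {
  openssl enc -aes-256-cbc -salt -pbkdf2 -pass env:BACKUP_PASSPHRASE \
    -in "$1" -out "$1.enc"
}

decrypt_dump() {
  case "$1" in
    *.enc) ;;
    *) echo "expected a .enc file: $1" >&2; return 1 ;;
  esac
  openssl enc -d -aes-256-cbc -pbkdf2 -pass env:BACKUP_PASSPHRASE \
    -in "$1" -out "${1%.enc}"
}

# Usage:
#   encrypt_dump backup_20240101.sql
#   aws s3 cp backup_20240101.sql.enc "s3://${S3_BUCKET}/"
```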

Q3. What should I do if my scheduled CronJob fails unexpectedly?

A3. Check the pod logs immediately with kubectl logs job/<job-name>, review the environment variables, verify that the Secret is mounted correctly, and trigger a new run of the job if needed.
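The checks in A3 can be run in one go. This is a sketch: the namespace argument and the function name are hypothetical. A failed Job cannot be restarted, so the last command creates a fresh Job from the CronJob template instead.

```shell
#!/usr/bin/env bash
# Hypothetical troubleshooting helper for a failed backup Job.
debug_backup_job() {
  local job="$1" ns="$2" kc="${KUBECTL:-kubectl}"

  "$kc" logs "job/$job" --namespace "$ns"       # script output and errors
  "$kc" describe job "$job" --namespace "$ns"   # status, conditions, pod events
  # Confirm the Secret exists with the expected keys (values are not shown)
  "$kc" describe secret mysql-backup-secrets --namespace "$ns"

  # Jobs cannot be restarted; start a fresh one from the CronJob template
  "$kc" create job "mysql-backup-retry-$(date +%s)" \
    --from=cronjob/mysql-backup-job --namespace "$ns"
}

# Usage:
#   debug_backup_job <job-name> my-database-namespace
```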

Conclusion

Protecting databases inside containers demands careful planning, but it pays off when things go wrong. A sound Kubernetes database backup strategy keeps your business safe, and Vinchin offers an easy yet powerful way to make sure your data never gets left behind. Try it today!
