
Proxmox VE is an open-source virtualization management platform built on the KVM and LXC virtualization technologies. It supports a variety of storage types, including local storage, LVM, NFS, iSCSI, CephFS, RBD, and ZFS. Ceph is a distributed storage system, and when Proxmox VE is integrated with Ceph, it can deliver a virtualization environment with higher performance and high availability.

What is Ceph?

Ceph is an open-source distributed storage system designed for high reliability, performance, and scalability. It uses a data distribution algorithm called CRUSH (Controlled Replication Under Scalable Hashing) to spread data pseudo-randomly but evenly across multiple storage nodes. Because every client can compute where a piece of data lives, no central lookup table is needed, and placing copies on different nodes provides data redundancy and fault tolerance.
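To make the CRUSH idea more concrete, here is a simplified Python sketch of Ceph's two-step lookup: an object name is hashed into a placement group (PG, a bucket of objects), and the PG is then mapped to a set of OSDs (the daemons that store data on disks) by a deterministic, weight-aware draw similar in spirit to CRUSH's straw2 buckets. It is an illustration under stated assumptions, not Ceph's implementation: SHA-256 stands in for Ceph's own hash, and the function names and the example cluster are hypothetical.

```python
from __future__ import annotations

import hashlib
import math


def stable_hash(*parts: str) -> int:
    """Deterministic 64-bit hash; a stand-in for the hash Ceph actually uses."""
    digest = hashlib.sha256("/".join(parts).encode()).digest()
    return int.from_bytes(digest[:8], "big")


def object_to_pg(object_name: str, pg_num: int) -> int:
    """Step 1: hash the object name into one of pg_num placement groups."""
    return stable_hash(object_name) % pg_num


def pg_to_osds(pg_id: int, osd_weights: dict[str, float], replicas: int) -> list[str]:
    """Step 2: choose `replicas` distinct OSDs for a PG with a straw2-style draw.

    Every OSD draws ln(u) / weight, where u in (0, 1] is derived from hashing
    (pg_id, osd). The largest draws win, so an OSD with twice the weight is
    picked as primary about twice as often, and every client that runs this
    function gets the same answer without consulting a lookup table.
    """
    draws = []
    for osd, weight in osd_weights.items():
        u = (stable_hash(str(pg_id), osd) + 1) / 2**64  # maps the hash into (0, 1]
        draws.append((math.log(u) / weight, osd))
    draws.sort(reverse=True)  # highest draw first
    return [osd for _, osd in draws[:replicas]]


# Hypothetical cluster: osd.2 has a disk twice as large as the others.
osds = {"osd.0": 1.0, "osd.1": 1.0, "osd.2": 2.0, "osd.3": 1.0}
pg = object_to_pg("vm-100-disk-0-chunk-42", pg_num=128)
print(pg, pg_to_osds(pg, osds, replicas=3))
```

Real CRUSH rules also walk the cluster hierarchy (hosts, racks) so that each replica lands in a separate failure domain; this sketch simply takes the top draws across all OSDs.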

The core components of Ceph

1. RADOS (Reliable Autonomic Distributed Object Store): RADOS is the foundation of the Ceph storage cluster and provides its object storage. It is responsible for managing data distribution and redundancy, and for repairing data automatically.

2. RADOS Block Device (RBD): RBD lets users create block devices, similar to traditional block storage, that are backed by objects in RADOS. This allows Ceph to serve as backend storage for workloads that need block devices, such as virtual machines and containers; Proxmox VE can store VM and container disks on RBD.

3. Ceph File System (CephFS): CephFS is a distributed file system that offers an interface and features similar to those of conventional file systems. It supports concurrent access from multiple clients and provides high performance and scalability.
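RBD builds on RADOS by cutting each image into fixed-size objects. The sketch below shows how a byte offset inside a VM disk maps to a RADOS object, assuming a format 2 image with the default 4 MiB object size and default striping. The `rbd_data.<image id>.<16-digit hex index>` naming follows the pattern format 2 images use for their data objects, but treat it as illustrative; the image id and disk size are made up.

```python
from __future__ import annotations

MiB = 1024 * 1024
DEFAULT_OBJECT_SIZE = 4 * MiB  # RBD default object size (order 22)


def rbd_object_for_offset(image_id: str, offset: int,
                          object_size: int = DEFAULT_OBJECT_SIZE) -> tuple[str, int]:
    """Map a byte offset in an RBD image to (RADOS object name, offset within it).

    Assumes a format 2 image with default striping (one object per stripe unit).
    """
    if offset < 0:
        raise ValueError("offset must be non-negative")
    object_no = offset // object_size
    return f"rbd_data.{image_id}.{object_no:016x}", offset % object_size


def max_objects(image_size: int, object_size: int = DEFAULT_OBJECT_SIZE) -> int:
    """Upper bound on data objects; RBD is thin-provisioned, so unwritten ranges have none."""
    return -(-image_size // object_size)  # ceiling division


# "1a2b3c4d5e6f" is a made-up image id; the 32 GiB disk is also hypothetical.
print(rbd_object_for_offset("1a2b3c4d5e6f", 10 * MiB))
print(max_objects(32 * 1024 * MiB))
```

Because each 4 MiB object is placed independently, a single VM disk ends up spread over many OSDs, which is where RBD gets its parallelism.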

The characteristics of Ceph

Scalability: Ceph scales horizontally: adding storage nodes increases both capacity and throughput. Because the CRUSH algorithm computes data placement instead of relying on a central lookup table, data stays evenly distributed as the cluster grows, and only a proportional share of it has to move when nodes are added.
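Why computed, hash-based placement scales well can be checked with a toy experiment. The sketch below compares naive `hash mod N` placement with rendezvous hashing (a simple relative of the draw-based selection CRUSH uses) when a fifth OSD joins four existing ones. The OSD names, the PG count, and the helper functions are arbitrary assumptions for illustration.

```python
from __future__ import annotations

import hashlib
from typing import Callable


def stable_hash(*parts: str) -> int:
    """Deterministic 64-bit hash of the joined parts."""
    return int.from_bytes(hashlib.sha256("/".join(parts).encode()).digest()[:8], "big")


def place_modulo(pg: int, osds: list[str]) -> str:
    """Naive placement: hash the PG and take it modulo the number of OSDs."""
    return osds[stable_hash(str(pg)) % len(osds)]


def place_rendezvous(pg: int, osds: list[str]) -> str:
    """Rendezvous hashing: every OSD scores the PG and the highest score wins."""
    return max(osds, key=lambda osd: stable_hash(str(pg), osd))


def moved_fraction(place: Callable[[int, list[str]], str],
                   before: list[str], after: list[str], pg_num: int = 4096) -> float:
    """Share of PGs whose OSD changes when the OSD set changes from before to after."""
    moved = sum(place(pg, before) != place(pg, after) for pg in range(pg_num))
    return moved / pg_num


before = [f"osd.{i}" for i in range(4)]
after = before + ["osd.4"]  # scale out by one OSD
print(f"modulo:     {moved_fraction(place_modulo, before, after):.1%} of PGs move")
print(f"rendezvous: {moved_fraction(place_rendezvous, before, after):.1%} of PGs move")
```

With modulo placement a PG stays put only when its hash leaves the same remainder for 4 and 5 OSDs, so roughly 80% of PGs move. With rendezvous hashing a PG moves only if the new OSD wins its draw, about 1 in 5, and every moved PG lands on the new OSD.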

Data Redundancy and Fault Tolerance: Ceph protects data with either replication or erasure coding. It can tolerate storage node failures and automatically repairs and rebalances data to restore the configured level of redundancy.
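The trade-off between the two redundancy methods is mostly arithmetic. Assuming a hypothetical 30 TiB of raw OSD capacity, the sketch below compares a replicated pool with Ceph's default of three copies against an erasure-coded pool with k = 4 data chunks and m = 2 coding chunks. The helper names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Protection:
    usable_tib: float          # capacity left for data after redundancy overhead
    failures_tolerated: int    # copies or chunks that can be lost without data loss


def replicated(raw_tib: float, size: int = 3) -> Protection:
    """Replicated pool: every object is stored `size` times."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return Protection(raw_tib / size, size - 1)


def erasure_coded(raw_tib: float, k: int, m: int) -> Protection:
    """Erasure-coded pool: each object becomes k data chunks plus m coding chunks."""
    if k < 1 or m < 1:
        raise ValueError("k and m must be at least 1")
    return Protection(raw_tib * k / (k + m), m)


r = replicated(30.0)
e = erasure_coded(30.0, k=4, m=2)
print(f"3x replication: {r.usable_tib:.1f} TiB usable, survives {r.failures_tolerated} lost copies")
print(f"EC 4+2:         {e.usable_tib:.1f} TiB usable, survives {e.failures_tolerated} lost chunks")
```

Both layouts survive two failures, but erasure coding leaves twice the usable space here. Some caveats: a three-copy pool pauses I/O to affected placement groups when fewer than two copies (its default `min_size`) are available; an EC 4+2 pool needs at least six failure domains, such as six hosts, to keep every chunk separate, which is more than a typical three-node Proxmox VE cluster has; and real usable space is lower still because OSDs should stay below the default 85% nearfull threshold.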

Self-Managing and Auto-Recovery: Ceph handles much of its own management and adjusts data placement as the cluster changes. It detects failed storage daemons (OSDs) and recovers their data onto the remaining ones, and it periodically scrubs stored data to detect and repair corrupted or lost copies, which improves system availability.

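High Performance: Ceph achieves high performance through parallel, distributed data access. Clients compute data locations with CRUSH and talk directly to the storage daemons that hold the data, so I/O is spread across many disks and nodes instead of passing through a central gateway. Caching features, such as the RBD client cache, can further improve read and write performance.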

Community Support: As an open-source project, Ceph has an active community and a dedicated development team. Users can get help from the community, share experiences, and contribute to its further development.

How to install Ceph in Proxmox VE?

Requirements:

Proxmox VE Nodes: You need at least one Proxmox VE node, but for a production environment, it’s recommended to have at least three nodes for redundancy and high availability.

Hardware: Each Proxmox VE node should have enough CPU, RAM, and storage for your workload. For Ceph, use dedicated disks (SSD or HDD). Ceph can run with a modest amount of RAM, but performance improves with more; by default each OSD daemon aims to use about 4 GiB of memory (`osd_memory_target`), so budget for that on top of what your VMs need. A fast network connection (10GbE) is also recommended.

Network: Each Proxmox VE node should have a static IP address. A separate network for Ceph traffic is also recommended.
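When Ceph shares nodes with your VMs, the RAM requirement is easiest to reason about as a per-node budget. The sketch below adds up guest RAM, the default 4 GiB `osd_memory_target` for each OSD, and two placeholder allowances for the monitor/manager daemons and the Proxmox VE host itself. Those two allowances are assumptions, not official figures, and the node in the example is hypothetical.

```python
from __future__ import annotations

OSD_MEMORY_TARGET_GIB = 4.0  # Ceph's default osd_memory_target per OSD daemon


def node_ram_gib(vm_ram_gib: float, osds_on_node: int, runs_mon_mgr: bool = True,
                 mon_mgr_gib: float = 2.0, host_gib: float = 4.0) -> float:
    """Rough RAM budget for one Proxmox VE node that also runs Ceph.

    mon_mgr_gib and host_gib are placeholder assumptions, not official figures;
    adjust them to your own measurements.
    """
    if osds_on_node < 0:
        raise ValueError("osds_on_node must be non-negative")
    ceph_gib = osds_on_node * OSD_MEMORY_TARGET_GIB + (mon_mgr_gib if runs_mon_mgr else 0.0)
    return vm_ram_gib + ceph_gib + host_gib


# Hypothetical node: 64 GiB of guest RAM and four OSD disks.
print(node_ram_gib(vm_ram_gib=64, osds_on_node=4))
```

`osd_memory_target` is a target rather than a hard limit, and OSDs can use more during recovery, so leave some headroom beyond this estimate.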

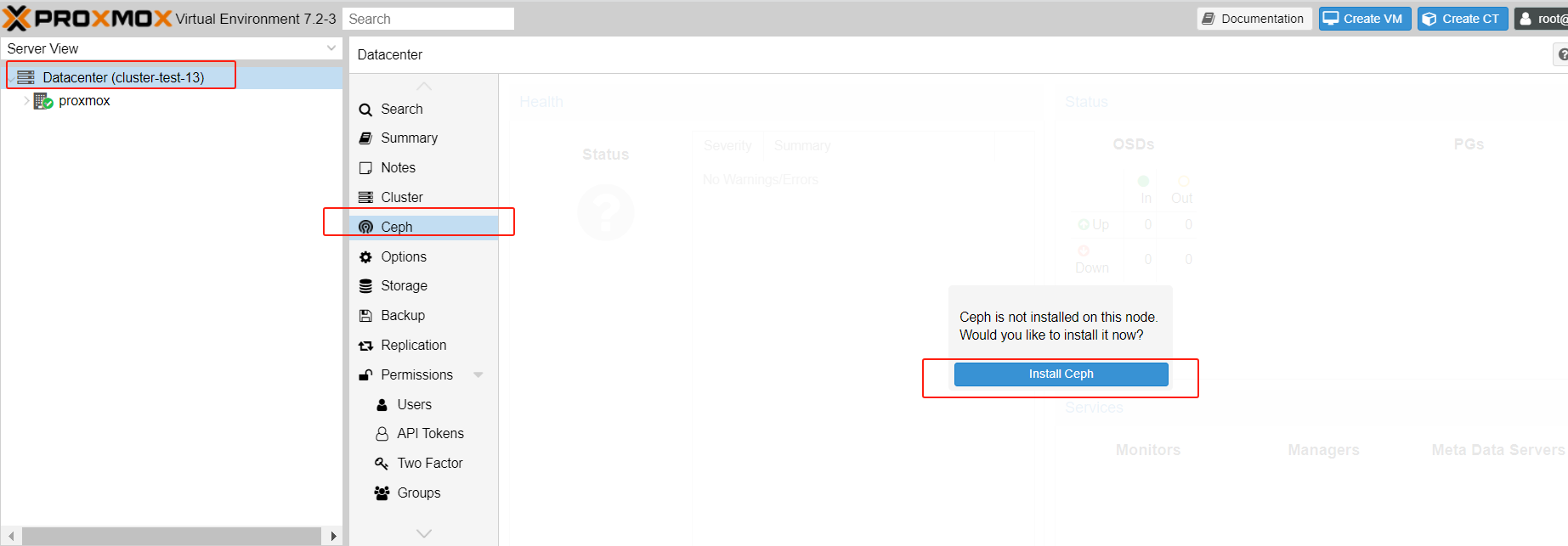

Go to Datacenter > Ceph, click Install Ceph

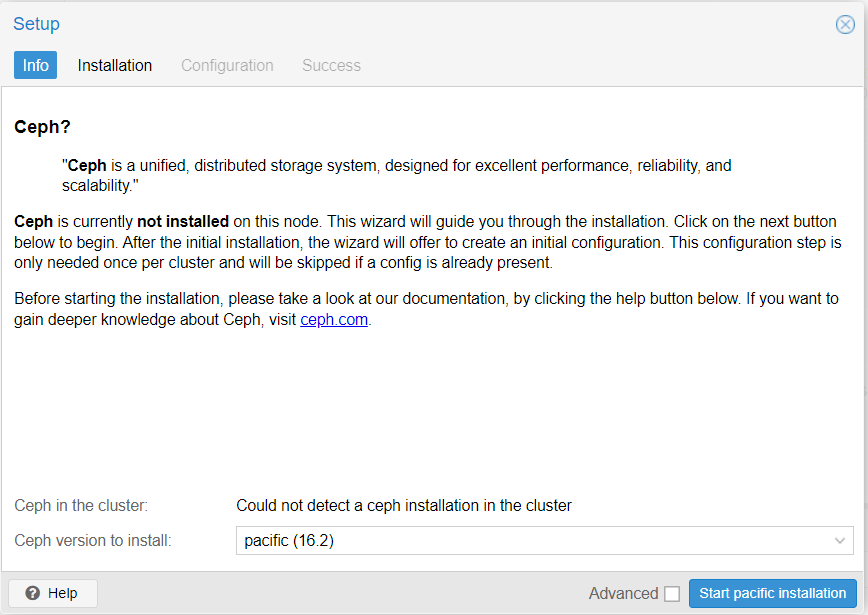

Choose the Ceph version to install, then click Start installation

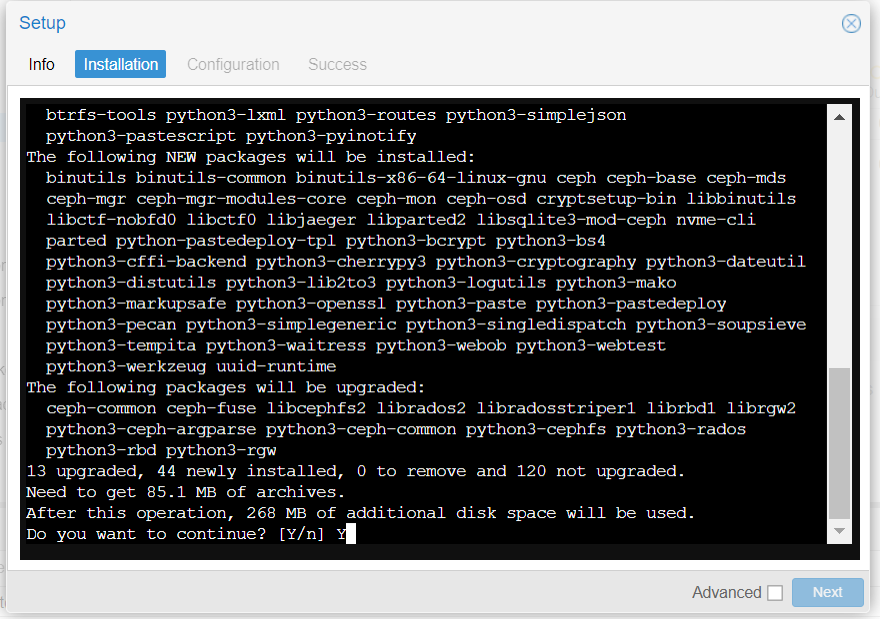

Enter Y to continue the process

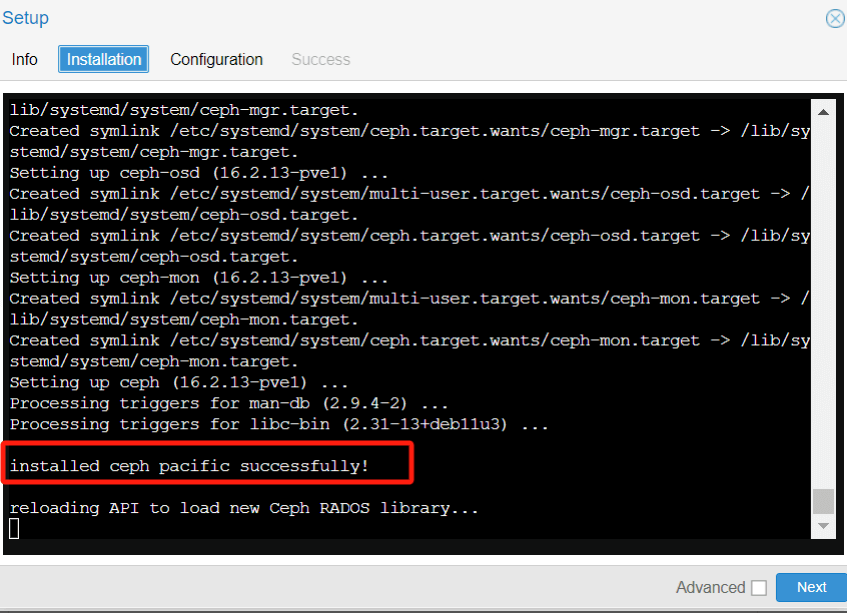

Wait for installation to complete. Then click Next.

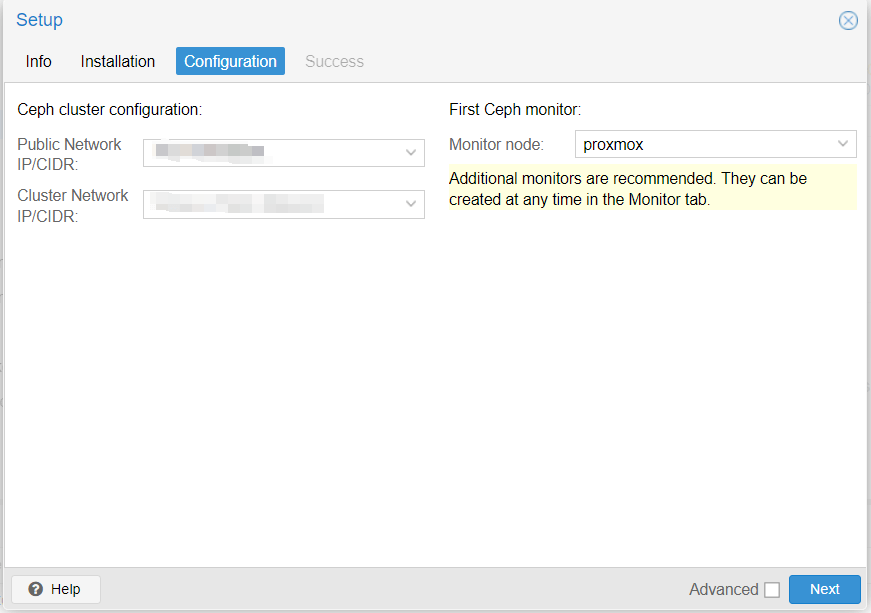

Set network information.

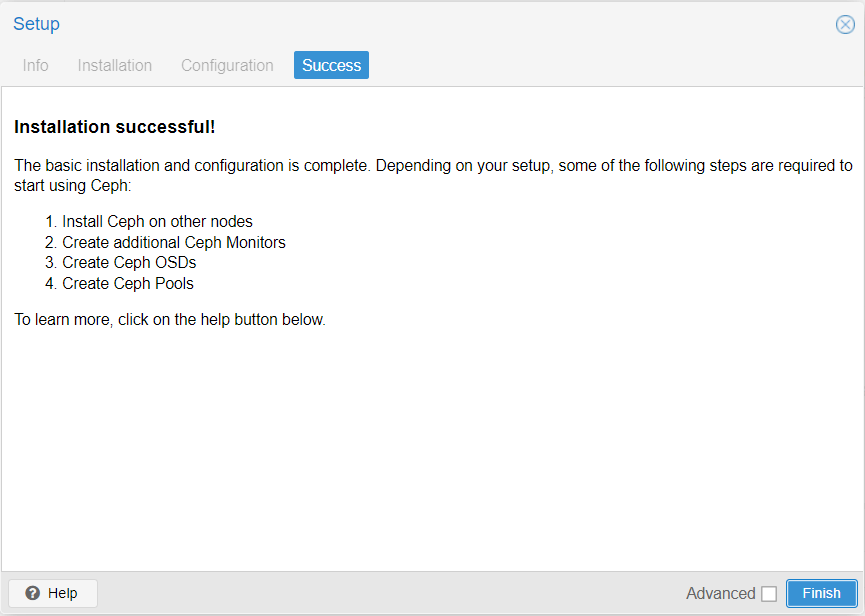

The installation is complete.
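The wizard steps above roughly correspond to the `pveceph` command-line tool that ships with Proxmox VE. The Python sketch below only builds and prints the commands (a dry run) so you can review them before typing them on a node. The subnets and disk names are placeholders, and the flag names are assumed to match current Proxmox VE releases; confirm them with `man pveceph` on your version.

```python
from __future__ import annotations

import ipaddress
import shlex


def pveceph_plan(public_net: str, osd_disks: list[str],
                 cluster_net: str | None = None) -> list[list[str]]:
    """Return pveceph commands that mirror the GUI wizard; nothing is executed.

    Validates the CIDR strings so a typo fails here instead of on the node.
    """
    ipaddress.ip_network(public_net)  # raises ValueError for a malformed network
    init = ["pveceph", "init", "--network", public_net]
    if cluster_net is not None:
        ipaddress.ip_network(cluster_net)
        init += ["--cluster-network", cluster_net]
    commands = [["pveceph", "install"], init, ["pveceph", "mon", "create"]]
    commands += [["pveceph", "osd", "create", disk] for disk in osd_disks]
    return commands


# Placeholder subnets and disks; replace them with your own.
for cmd in pveceph_plan("10.10.10.0/24", ["/dev/sdb", "/dev/sdc"],
                        cluster_net="10.10.20.0/24"):
    print(shlex.join(cmd))
```

In a real cluster, `pveceph install` runs on every node, `pveceph init` runs once because it writes the cluster-wide Ceph configuration, and `pveceph mon create` and `pveceph osd create` run on each node that should host a monitor or OSDs.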

You can then configure Ceph further, including creating monitors and OSDs. For a production environment, at least three monitors and at least three OSDs, spread across different nodes, are recommended.
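The recommendation of three monitors comes from quorum: Ceph monitors use the Paxos consensus algorithm, and the cluster only stays available while a majority of them can reach each other. The short sketch below (function names are illustrative) computes the majority size and how many monitor failures each count survives.

```python
def quorum_size(num_mons: int) -> int:
    """Smallest majority of monitors that must agree for the cluster to operate."""
    if num_mons < 1:
        raise ValueError("a Ceph cluster needs at least one monitor")
    return num_mons // 2 + 1


def tolerated_mon_failures(num_mons: int) -> int:
    """How many monitors can fail while a majority is still reachable."""
    return num_mons - quorum_size(num_mons)


for n in range(1, 6):
    print(f"{n} monitors: quorum {quorum_size(n)}, survives {tolerated_mon_failures(n)} failure(s)")
```

Three monitors survive one failure, while a fourth adds no extra tolerance, which is why odd monitor counts are preferred.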

After the installation, you can check the status of your Ceph cluster in the Proxmox VE web interface. Make sure that all monitors and OSDs are up and running.

How to monitor Proxmox Ceph?

To keep Ceph running smoothly in a Proxmox VE deployment, you need to monitor the Ceph cluster. Here are some common Ceph monitoring parameters:

1. Monitoring OSD status: In a Proxmox Ceph setup, OSDs (Object Storage Daemons) store the data and handle its replication and recovery. Monitoring OSD status lets you check each OSD's capacity and whether it is up and running.

2. Monitoring PG status: A Placement Group (PG) is a logical container that groups objects within the Ceph cluster. Monitoring PG status lets you check the number of PGs and their health, for example whether they are active+clean or degraded.

3. Monitoring data availability: This involves verifying that the cluster has enough free space to hold your data and that the pools have enough placement groups for the data they store.

4. Checking Ceph monitor status: A Ceph monitor is a daemon that maintains the cluster map and tracks the state of the cluster. Monitoring the monitors lets you confirm that they are running and in quorum, and detect any malfunction promptly.

5. Checking bandwidth utilization: This helps confirm that network transmission is working normally. Sustained, unusually high bandwidth usage can indicate heavy recovery or rebalancing traffic, or that the network is becoming a bottleneck.

6. IOPS: The IOPS (Input/Output Operations Per Second) of a Ceph cluster is a key performance metric. Monitoring IOPS lets you evaluate whether the cluster's performance meets expectations.
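Most of the parameters listed above can be read from a single command, `ceph status --format json`, run on a Proxmox VE node with Ceph admin access. The Python sketch below condenses that JSON into the six checks. The field names reflect recent Ceph releases as far as we know (older releases nest the OSD counters one level deeper, which the code tolerates), the I/O rate fields may be absent when the cluster is idle, and the sample data is invented, so compare against your own output before relying on it.

```python
from __future__ import annotations

import json
import subprocess


def fetch_status() -> dict:
    """Run `ceph status --format json` (requires a node with Ceph admin access)."""
    out = subprocess.run(["ceph", "status", "--format", "json"],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)


def summarize(status: dict) -> dict:
    """Reduce the status JSON to OSD, PG, capacity, monitor, bandwidth and IOPS figures."""
    osdmap = status.get("osdmap", {})
    osdmap = osdmap.get("osdmap", osdmap)  # older releases nest the counters one level deeper
    pgmap = status.get("pgmap", {})
    num_pgs = pgmap.get("num_pgs", 0)
    clean = sum(s["count"] for s in pgmap.get("pgs_by_state", [])
                if s["state_name"] == "active+clean")
    total = pgmap.get("bytes_total", 0)
    return {
        "health": status.get("health", {}).get("status", "UNKNOWN"),
        "mons_in_quorum": len(status.get("quorum_names", [])),
        "osds_up": f'{osdmap.get("num_up_osds", 0)}/{osdmap.get("num_osds", 0)}',
        "pgs_clean_pct": 100.0 * clean / num_pgs if num_pgs else 0.0,
        "used_pct": 100.0 * pgmap.get("bytes_used", 0) / total if total else 0.0,
        "read_MBps": pgmap.get("read_bytes_sec", 0) / 1e6,
        "write_MBps": pgmap.get("write_bytes_sec", 0) / 1e6,
        "iops": pgmap.get("read_op_per_sec", 0) + pgmap.get("write_op_per_sec", 0),
    }


# Invented, trimmed sample; on a real node use summarize(fetch_status()).
sample = {
    "health": {"status": "HEALTH_WARN"},
    "quorum_names": ["pve1", "pve2", "pve3"],
    "osdmap": {"num_osds": 6, "num_up_osds": 5, "num_in_osds": 5},
    "pgmap": {
        "num_pgs": 128,
        "pgs_by_state": [{"state_name": "active+clean", "count": 120},
                         {"state_name": "active+undersized+degraded", "count": 8}],
        "bytes_used": 3 * 1024**4, "bytes_total": 12 * 1024**4,
        "read_bytes_sec": 52_000_000, "write_bytes_sec": 18_000_000,
        "read_op_per_sec": 900, "write_op_per_sec": 350,
    },
}
print(summarize(sample))
```

Keeping `fetch_status` separate from `summarize` makes the parsing testable without a live cluster, and the summary dictionary can be fed into whatever alerting tool you already use.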

Simplify your Proxmox VE VM protection

Proxmox and Ceph together provide a flexible and scalable solution for virtualization and storage needs. But to better protect your Proxmox environment, it is always recommended to back up your PVE VMs and critical data with a professional solution.

Vinchin Backup & Recovery is a robust Proxmox VE protection solution that provides advanced backup features, including automatic VM backup, agentless backup, LAN/LAN-Free backup, offsite copy, effective data reduction, and cloud archive. It strictly follows the 3-2-1 golden backup rule to comprehensively protect your data and its integrity.

For recovery, Vinchin Backup & Recovery provides instant VM recovery, which can shorten RTO to 15 seconds by running the VM directly from its backup. You can also choose file-level granular restore to extract specific files from a Proxmox VE VM backup.

In addition, data encryption and anti-ransomware protection give you dual insurance for your Proxmox VE VM backups. You can also easily migrate data from a Proxmox host to another virtualization platform and vice versa.

Vinchin Backup & Recovery has been selected by thousands of companies, and you can start using this powerful system with a 60-day full-featured trial. You can also contact us with your requirements to receive a solution tailored to your IT environment.

Conclusion

Proxmox and Ceph enable you to build highly available and resilient infrastructure while benefiting from the advantages of open-source software. Whether you are running a small virtualization environment or a large-scale data center, Proxmox and Ceph offer a powerful combination for managing your virtual machines and storage resources efficiently.
