- What Is SQL Server Database Compression?
- Why Use SQL Server Database Compression?
- Types of Compression in SQL Server
- Method 1 Row-Level Compression in SQL Server
- Method 2 Page-Level Compression in SQL Server
- Protecting Compressed SQL Server Databases with Vinchin Backup & Recovery
- SQL Server Database Compression FAQs
- Conclusion

As your SQL Server databases grow over time, storage costs can rise fast—and so can query response times. Have you ever faced slow reports or warnings about low disk space? Many IT teams do. SQL Server database compression offers a built-in way to shrink data size without buying more hardware or sacrificing performance.

For example, one company compressed a 500 GB reporting table and saw query times drop by 30%. But remember: compression is not a magic fix for every problem. It works best alongside good indexing and query tuning—not as a replacement.

In this guide, we’ll explore what SQL Server database compression is, why it matters for operations administrators like you, how to use it step by step—and how to manage its impact in real-world environments.

What Is SQL Server Database Compression?

SQL Server database compression reduces the size of tables and indexes by storing data more efficiently on disk. Instead of saving every value in its full form, SQL Server uses algorithms such as dictionary-based encoding and prefix matching (for page-level compression) to shrink repeated values within rows or pages.

This means less disk space is used overall. More data fits into memory buffers too—which can speed up queries because fewer pages need to be read from disk into RAM.

Compression happens at storage level but is transparent to applications; your queries work just as before. You choose which tables or indexes get compressed—so you control where savings happen most.

Not every edition and version of SQL Server supports compression. Row- and page-level compression were introduced in SQL Server 2008 for Enterprise and Developer editions only; starting with SQL Server 2016 SP1 they are available in all editions, including Standard. Always check your version and licensing before planning large-scale changes.
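A quick way to see which edition and build an instance is running is to query `SERVERPROPERTY`. This is a minimal sketch; the column aliases are illustrative only.

```sql
-- Report edition, version number, and service-pack / release level
-- of the instance you are connected to.
SELECT SERVERPROPERTY('Edition')        AS edition,
       SERVERPROPERTY('ProductVersion') AS product_version,
       SERVERPROPERTY('ProductLevel')   AS product_level;
```

Compare the result against the edition requirements described above before scheduling any rebuilds.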

Why Use SQL Server Database Compression?

Why should you care about compressing your databases? There are three main reasons: saving storage space, improving input/output (I/O) performance, and reducing memory usage during heavy workloads.

When data is compressed on disk:

Fewer pages are needed for each table or index.

More rows fit into buffer pool memory.

Queries often run faster because less data must be read from storage devices.

Backups may also become smaller since they copy compressed pages directly.

However—there’s always a trade-off! Compressing (and decompressing) data uses extra CPU cycles whenever records are read or written. For many analytic workloads with lots of reads but few writes (like reporting tables), this cost is small compared to the benefits gained from faster queries and lower storage needs.

If you run high-volume OLTP systems with frequent inserts and updates, test carefully first. Heavy write activity can increase CPU load enough to offset gains elsewhere.

Types of Compression in SQL Server

SQL Server offers several types of database compression—but let’s focus on those most relevant for rowstore tables:

Row-level compression stores fixed-length columns using variable-length formats when possible; it omits unnecessary zeros/nulls entirely.

Page-level compression builds upon row-level methods by adding prefix/dictionary techniques within each 8KB page—storing common values once per page instead of repeating them across rows.

Columnstore compression exists too—but it applies only to columnstore indexes used mainly for analytics workloads rather than transactional ones.

You can apply row/page compression at table level—or even just specific partitions if needed! Not all datatypes benefit equally though: large objects stored off-row (like images/blobs) aren’t compressed using these methods at all.
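As a sketch of partition-level compression, the statement below rebuilds a single partition with page compression and leaves the others untouched. The partition number `1` is a hypothetical choice, and `schema_name.table_name` is the same placeholder used elsewhere in this guide; the table is assumed to be partitioned.

```sql
-- Rebuild only partition 1 with page compression.
-- Other partitions keep their current compression setting.
ALTER TABLE schema_name.table_name
REBUILD PARTITION = 1
WITH (DATA_COMPRESSION = PAGE);
```

This pattern suits tables where older, rarely updated partitions can take heavier compression while the active partition stays on a lighter setting.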

Method 1 Row-Level Compression in SQL Server

Row-level compression is usually the simplest option—and often provides quick wins for mixed workloads without much risk. It works by converting fixed-length fields into variable-length ones wherever possible; nulls/zeros take up no physical space at all!

Before applying row-level compression:

Make sure you have ALTER permission on target tables/indexes.

Confirm that your edition supports this feature.

Plan maintenance windows carefully—rebuilding large objects may require significant downtime plus extra transaction log space!
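The checks above can be partly scripted. The sketch below tests for ALTER permission on a placeholder table and reports current transaction log usage for the current database. It assumes SQL Server 2012 or later, where `sys.dm_db_log_space_usage` is available.

```sql
-- Returns 1 if the current login holds ALTER permission on the table, 0 if not.
SELECT HAS_PERMS_BY_NAME(N'schema_name.table_name', N'OBJECT', N'ALTER') AS can_alter;

-- Show how much transaction log space the current database is using,
-- to judge headroom before a large rebuild.
SELECT total_log_size_in_bytes / 1048576 AS log_size_mb,
       used_log_space_in_bytes / 1048576 AS log_used_mb,
       used_log_space_in_percent
FROM sys.dm_db_log_space_usage;
```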

If your edition supports online index operations (Enterprise Edition, for example), consider adding ONLINE = ON to the rebuild to minimize disruption:

ALTER TABLE schema_name.table_name REBUILD WITH (DATA_COMPRESSION = ROW, ONLINE = ON);

Here’s how you enable row-level compression via Transact-SQL:

1. Connect to your server using tools like sqlcmd or SQL Server Management Studio.

2. Choose which table or index needs compressing based on size/redundancy analysis.

3. Run this command for tables:

ALTER TABLE schema_name.table_name REBUILD WITH (DATA_COMPRESSION = ROW);

4. Or use this command for indexes:

ALTER INDEX index_name ON schema_name.table_name REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = ROW);

Want an estimate before making changes? Try:

EXEC sp_estimate_data_compression_savings @schema_name = 'schema_name', @object_name = 'table_name', @index_id = NULL, @partition_number = NULL, @data_compression = 'ROW';

After applying changes—or just checking status later—you can verify current settings with:

SELECT data_compression_desc FROM sys.partitions WHERE object_id = OBJECT_ID('schema_name.table_name');

Method 2 Page-Level Compression in SQL Server

Page-level compression takes things further than row-only methods—it starts with variable-length conversion but then looks inside each physical page for repeated patterns among values stored there together.

This approach uses two main tricks:

1. Prefix compression looks at each column within a page, finds a leading byte pattern shared by its values, and stores that prefix once;

2. Dictionary compression then replaces values repeated anywhere on the page with references to a single stored copy.

The result? Even greater savings—especially when working with lookup/reference tables filled with repeated codes/descriptions!
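To see the effect on repetitive data, a small experiment like the following can help. It is only a sketch: `dbo.CompressionDemo`, its columns, and the sample values are all hypothetical, and it should be run in a scratch database rather than production. The actual savings depend on your data, so no expected figures are given.

```sql
-- Hypothetical demo table holding a few heavily repeated values.
CREATE TABLE dbo.CompressionDemo (
    id          INT IDENTITY(1,1) PRIMARY KEY,
    status_code CHAR(10)     NOT NULL,
    description VARCHAR(100) NOT NULL
);

-- Generate 100,000 rows that cycle through a small set of values.
WITH n AS (
    SELECT TOP (100000)
           ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) AS rn
    FROM sys.all_objects AS a
    CROSS JOIN sys.all_objects AS b
)
INSERT INTO dbo.CompressionDemo (status_code, description)
SELECT CASE rn % 3 WHEN 0 THEN 'OPEN' WHEN 1 THEN 'CLOSED' ELSE 'PENDING' END,
       'Order status description, batch ' + CAST(rn % 5 AS VARCHAR(10))
FROM n;

-- Size before compression.
EXEC sp_spaceused N'dbo.CompressionDemo';

-- Apply page compression, then measure again.
ALTER TABLE dbo.CompressionDemo REBUILD WITH (DATA_COMPRESSION = PAGE);
EXEC sp_spaceused N'dbo.CompressionDemo';

-- Clean up the demo table.
DROP TABLE dbo.CompressionDemo;
```

Comparing the two `sp_spaceused` results shows how much the shared prefixes and repeated values shrink on this synthetic data set.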

To enable page-level compression:

1. Connect as usual via preferred tool;

2. Identify target table/index based on redundancy analysis;

3. Run this command for entire tables:

ALTER TABLE schema_name.table_name REBUILD WITH (DATA_COMPRESSION = PAGE);

4. Or apply it across all partitions of an index like so:

ALTER INDEX index_name ON schema_name.table_name REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

Estimating potential gains first makes sense—compare both options side-by-side using:

EXEC sp_estimate_data_compression_savings @schema_name='schema_name', @object_name='table_name', @index_id=NULL, @partition_number=NULL, @data_compression='PAGE';
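One possible way to put both estimates side by side is to capture each result set in a temporary table. This sketch assumes the procedure returns its documented eight-column result set; the temp table, its column names, and the `requested_mode` tag are illustrative, not part of the procedure.

```sql
-- Holding table for the estimates; requested_mode is filled in after each run.
CREATE TABLE #estimate (
    obj_name                 sysname,
    sch_name                 sysname,
    index_id                 INT,
    partition_number         INT,
    current_size_kb          BIGINT,
    requested_size_kb        BIGINT,
    sample_current_size_kb   BIGINT,
    sample_requested_size_kb BIGINT,
    requested_mode           VARCHAR(4) NULL
);

-- Estimate for ROW compression.
INSERT INTO #estimate (obj_name, sch_name, index_id, partition_number,
                       current_size_kb, requested_size_kb,
                       sample_current_size_kb, sample_requested_size_kb)
EXEC sp_estimate_data_compression_savings
     @schema_name = 'schema_name', @object_name = 'table_name',
     @index_id = NULL, @partition_number = NULL, @data_compression = 'ROW';
UPDATE #estimate SET requested_mode = 'ROW' WHERE requested_mode IS NULL;

-- Estimate for PAGE compression.
INSERT INTO #estimate (obj_name, sch_name, index_id, partition_number,
                       current_size_kb, requested_size_kb,
                       sample_current_size_kb, sample_requested_size_kb)
EXEC sp_estimate_data_compression_savings
     @schema_name = 'schema_name', @object_name = 'table_name',
     @index_id = NULL, @partition_number = NULL, @data_compression = 'PAGE';
UPDATE #estimate SET requested_mode = 'PAGE' WHERE requested_mode IS NULL;

-- Compare estimated savings per index and partition.
SELECT requested_mode, index_id, partition_number,
       current_size_kb, requested_size_kb,
       CAST(100.0 * (current_size_kb - requested_size_kb)
            / NULLIF(current_size_kb, 0) AS DECIMAL(6,1)) AS savings_pct
FROM #estimate
ORDER BY index_id, partition_number, requested_mode;

DROP TABLE #estimate;
```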

If the estimate shows many repeated values across rows and pages, page compression will likely deliver the larger savings. Be aware, though, that CPU overhead is somewhat higher than with row compression, because reads and writes must also work through the prefix and dictionary structures on each page.

Always test both modes against real workload samples before standardizing policies organization-wide!
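A simple way to run such a comparison is to capture I/O and timing statistics for a representative query before and after each rebuild. The sketch below uses a plain row count against the placeholder table; substitute a query from your own workload.

```sql
-- Turn on per-statement I/O and timing output (shown in the Messages tab).
SET STATISTICS IO ON;
SET STATISTICS TIME ON;

-- Stand-in for a representative workload query.
SELECT COUNT(*) FROM schema_name.table_name;

-- Turn the extra output off again.
SET STATISTICS IO OFF;
SET STATISTICS TIME OFF;
```

Logical reads should fall after compression, while CPU time may rise; comparing both figures across NONE, ROW, and PAGE gives a rough picture of the trade-off for that query.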

Protecting Compressed SQL Server Databases with Vinchin Backup & Recovery

To ensure reliable protection alongside advanced features like database compression, organizations turn to professional solutions such as Vinchin Backup & Recovery—a robust enterprise-grade platform supporting today’s mainstream databases including Oracle, MySQL, SQL Server, MariaDB, PostgreSQL, PostgresPro, and MongoDB—with full compatibility for compressed Microsoft SQL Server environments specifically highlighted here. Among its extensive capabilities are incremental backup, batch database backup, multiple level data compression, GFS retention policy management, and ransomware protection—all designed to maximize efficiency while minimizing risk across diverse infrastructures.

Vinchin Backup & Recovery streamlines backup tasks through an intuitive web console requiring just four steps:

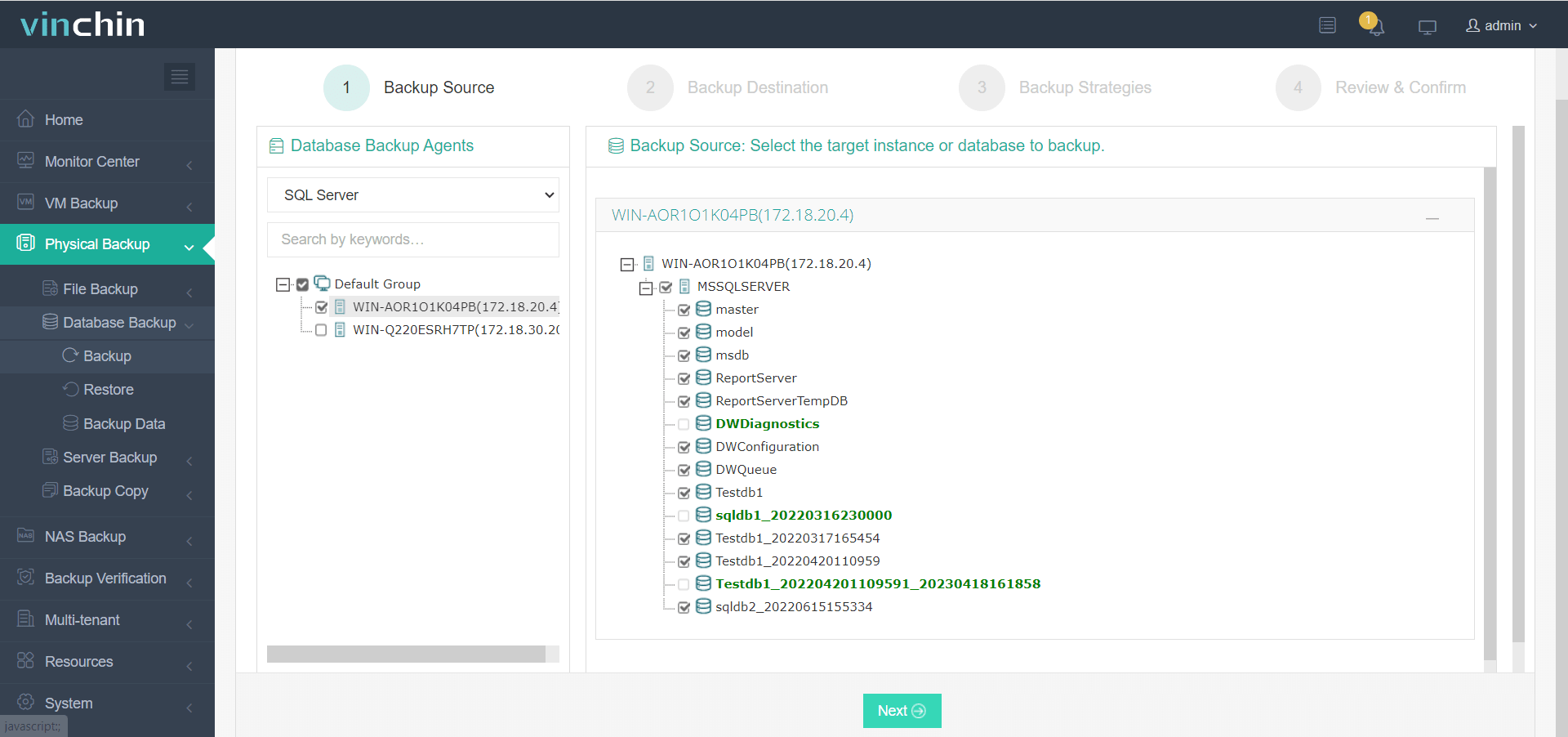

Step 1—Select the Microsoft SQL Server instance you wish to back up;

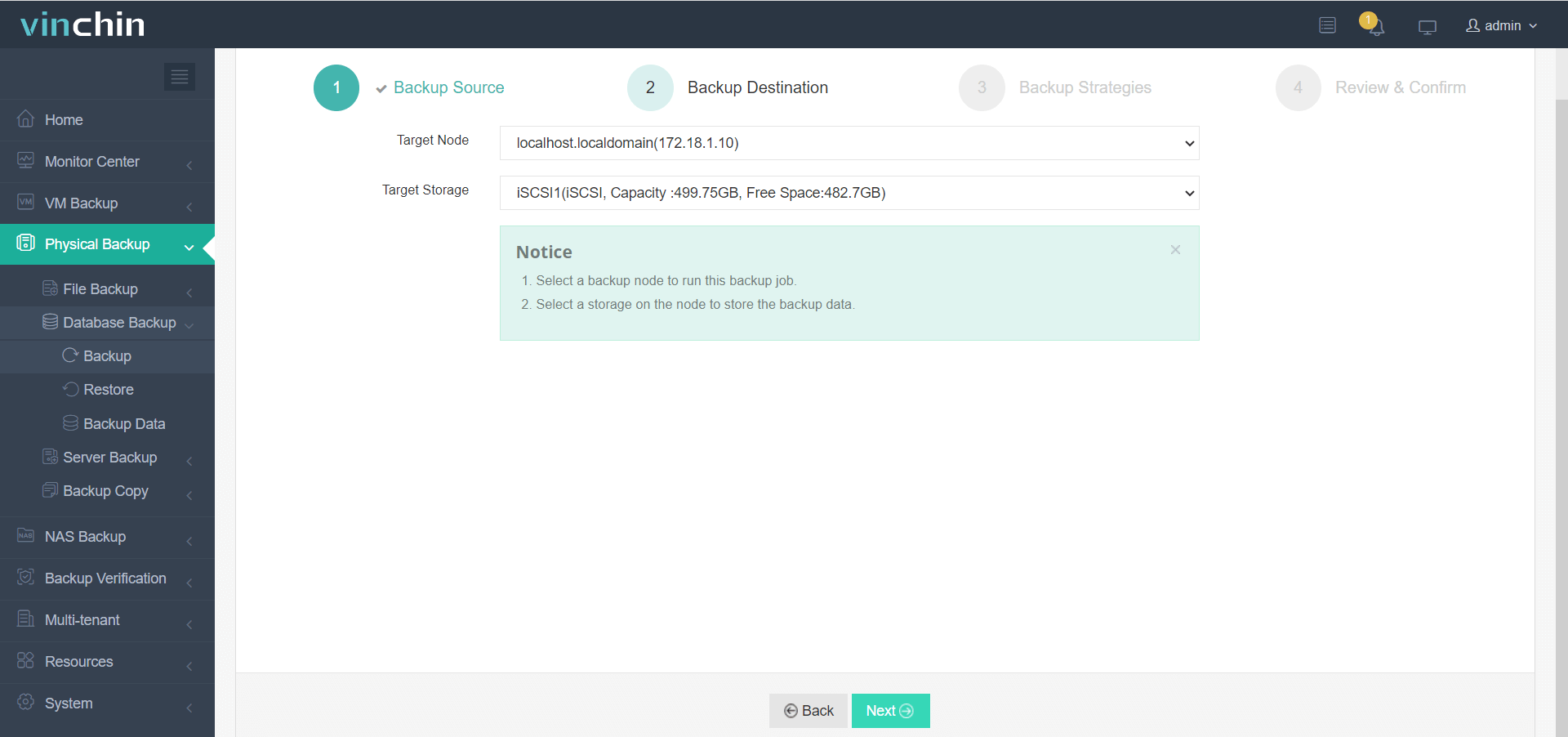

Step 2—Choose the desired storage target;

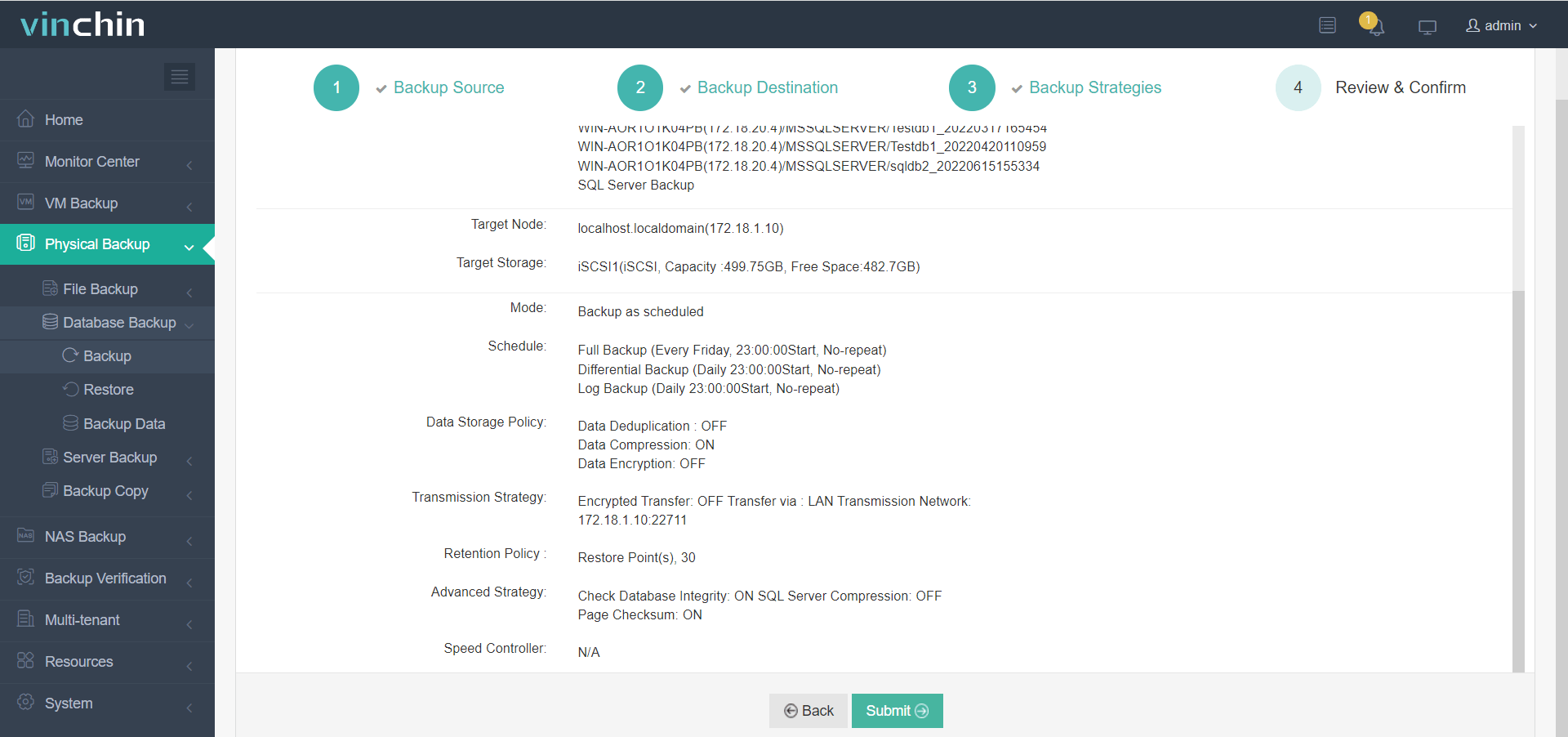

Step 3—Define scheduling and retention strategies according to business requirements;

Step 4—Submit the job.

Trusted worldwide by thousands of enterprises and highly rated by industry experts, Vinchin Backup & Recovery offers a fully featured free trial valid for sixty days.

SQL Server Database Compression FAQs

Q1: Can I revert a table back from compressed state?

A1: Yes—run ALTER TABLE schema.table REBUILD WITH (DATA_COMPRESSION=NONE) or similar command on indexes/tables needing decompression.
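After a rebuild, one way to confirm that every index and partition reports the expected setting is to join `sys.partitions` to `sys.indexes`. This is a sketch using the same placeholder table name as the rest of this guide.

```sql
-- List the compression setting of each index and partition of one table.
SELECT i.name AS index_name,
       p.index_id,
       p.partition_number,
       p.data_compression_desc,
       p.rows
FROM sys.partitions AS p
JOIN sys.indexes AS i
  ON i.object_id = p.object_id
 AND i.index_id  = p.index_id
WHERE p.object_id = OBJECT_ID(N'schema_name.table_name')
ORDER BY p.index_id, p.partition_number;
```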

Q2: Will restoring a backup containing compressed tables fail if my destination server lacks proper licensing?

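A2: It can. On versions before SQL Server 2016 SP1, a database that contains compressed objects cannot be brought online on an edition that lacks data compression, so the restore fails. From SQL Server 2016 SP1 onward, every edition supports row and page compression, so the edition is no longer a barrier for this feature.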
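Before moving a database to another instance, it may help to check whether it uses edition-restricted features. A minimal sketch, run in the source database:

```sql
-- Lists edition-specific features persisted in the current database.
-- An empty result means no such features are in use.
SELECT feature_name, feature_id
FROM sys.dm_db_persisted_sku_features;
```

What this view reports varies by SQL Server version, so treat the output as a hint rather than a guarantee.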

Q3: How do I identify which objects offer highest potential savings from further compressions?

A3: Run the sp_estimate_data_compression_savings procedure against your largest and most heavily used tables and indexes, which you can identify via sys.dm_db_partition_stats.
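As a starting point for finding candidates, the query below lists the largest user tables in the current database by reserved space. It is a sketch; the `TOP (20)` cutoff and the column aliases are arbitrary choices.

```sql
-- Largest user tables by reserved space (all indexes and partitions combined).
SELECT TOP (20)
       OBJECT_SCHEMA_NAME(ps.object_id)       AS schema_name,
       OBJECT_NAME(ps.object_id)              AS table_name,
       SUM(ps.reserved_page_count) * 8 / 1024 AS reserved_mb,
       -- Count rows from the heap or clustered index only, to avoid double counting.
       SUM(CASE WHEN ps.index_id IN (0, 1) THEN ps.row_count ELSE 0 END) AS row_count
FROM sys.dm_db_partition_stats AS ps
WHERE OBJECTPROPERTY(ps.object_id, 'IsUserTable') = 1
GROUP BY ps.object_id
ORDER BY reserved_mb DESC;
```

Feed the top results into sp_estimate_data_compression_savings one at a time to see which ones are worth rebuilding.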

Conclusion

SQL Server database compression helps save space while boosting performance when applied wisely—with careful testing beforehand recommended every time! Vinchin ensures backups stay seamless regardless of chosen settings so admins sleep easier knowing their critical assets remain safe yet efficient day-to-day.
