- What Is Hadoop Migration?
- Why Migrate Your Hadoop Environment?
- Method 1: Migrating On-Premises Hadoop to Cloud Using AWS Database Migration Service or Azure Data Factory
- Method 2: Upgrading or Switching Hadoop Distributions Using Apache DistCp
- Challenges in Hadoop Migration Projects
- How to Protect Hadoop Data with Vinchin Backup & Recovery?
- Hadoop Migration FAQs
- Conclusion

Hadoop has powered big data workloads for over a decade. But technology never stands still. Many organizations now face aging on-premises hardware, rising support costs for legacy Hadoop distributions, or pressure to integrate with modern cloud services. These drivers make Hadoop migration an urgent project for IT operations teams. In this guide, we’ll break down what Hadoop migration means, why it matters operationally, and how you can approach it—whether moving to the cloud, switching distributions, or migrating virtual machines.

What Is Hadoop Migration?

Hadoop migration is the process of moving not just your data but also jobs (like MapReduce or Spark), metadata (such as Hive schemas), workflows (Oozie schedules), and sometimes entire clusters from one environment to another. This could mean shifting from on-premises Hadoop to a cloud service or upgrading to a new distribution. The aim? Improve performance, reduce costs, enable new features—or simply keep up with business demands.

Why Migrate Your Hadoop Environment?

Why do so many organizations consider Hadoop migration? For starters, cloud platforms offer better scalability and lower maintenance overheads than traditional setups. Newer Hadoop distributions or alternative platforms bring improved performance and stronger security controls. Hardware refresh cycles can be expensive; older clusters often cost more in support than they’re worth. Migrating also unlocks advanced analytics capabilities like machine learning integration or seamless connections with modern data tools. Operational efficiency improves too—less time spent patching servers means more time delivering value.

Method 1: Migrating On-Premises Hadoop to Cloud Using AWS Database Migration Service or Azure Data Factory

Moving your on-premises Hadoop environment into the cloud is one of the most common—and challenging—migration paths today. Cloud providers such as AWS and Azure offer dedicated tools designed specifically for large-scale data transfer projects.

Pre-Migration Assessment and Planning

Before you touch any data transfer tool, take stock of your current environment. Inventory all components: HDFS storage volumes; YARN resource manager settings; Hive metastore databases; HBase tables; Oozie workflows; custom scripts; even user permissions. Use commands like hdfs dfs -count -q /data to measure file counts and sizes accurately.
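To make the inventory easier to read, the output of hdfs dfs -count can be piped through a small formatter. The following is a minimal sketch, assuming the plain form of the command (without -q), whose columns are directory count, file count, content size in bytes, and path; with -q, four quota columns come first and the field numbers would need shifting. The function name summarize_count is hypothetical.

```shell
#!/usr/bin/env bash
# Summarize `hdfs dfs -count <path>` output (no -q flag), one line per path.
# Expected columns: DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME.
summarize_count() {
  awk '{
    gib = $3 / (1024 * 1024 * 1024)   # bytes -> GiB
    printf "%s: %d dirs, %d files, %.1f GiB\n", $4, $1, $2, gib
  }'
}

# Example usage on a live cluster (the /data path is a placeholder):
#   hdfs dfs -count /data | summarize_count
```

Running it per top-level directory gives a quick table of where the bulk of the data sits, which helps when deciding the order in which to migrate.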

Profile job history using built-in logs or tools like Ambari Metrics so you understand peak loads and access patterns. Identify dependencies between jobs—are there nightly ETL pipelines that must run without fail? Document everything clearly before planning your move.

Security policies matter too: note any Kerberos authentication settings or encryption at rest/in transit requirements that must be preserved after migration.

Using AWS Database Migration Service (DMS) and AWS Schema Conversion Tool (SCT)

AWS offers several ways to migrate big data workloads, but not every tool fits every use case. AWS DMS focuses mainly on moving relational databases into targets such as Amazon RDS or Redshift; in some scenarios it is used alongside AWS SCT, which handles schema conversion tasks when the target is Amazon EMR.

For bulk HDFS-to-S3 migrations—which most ops teams need—the recommended approach is often using S3 DistCp (s3-dist-cp) rather than DMS alone because it handles massive parallel transfers efficiently.

Here’s how you might proceed using these tools:

1. Connect source clusters:

Launch AWS SCT and create a new project with the CreateProject command. Connect both your source Hadoop cluster (on-premises) and your target Amazon EMR cluster with the AddSourceCluster and AddTargetCluster commands.

2. Set mapping rules:

Define which databases/tables should move by setting up server mappings through AddServerMapping.

3. Generate assessment report:

Run an assessment (CreateMigrationReport) before starting actual transfers—it highlights unsupported types/functions so you can fix issues early.

4. Migrate cluster:

Save the commands in a .scts script, then run it in SCT’s batch mode: RunSCTBatch.cmd --pathtoscts "C:\script_path\hadoop.scts"

5. Monitor progress:

Track status with MigrationStatus; resume interrupted jobs if needed with ResumeMigration.

6. Validate results:

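After completion, compare file counts and total sizes between the source and target locations using CLI tools (for example hdfs dfs -du -s and aws s3 ls --recursive --summarize), and spot-check row counts with sample queries. Adjust compute resources if bottlenecks appear during validation runs.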
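The validation step above can be partly automated by comparing file listings. The sketch below assumes the usual column layout of hdfs dfs -ls -R (permissions, replication, owner, group, size, date, time, path) and of aws s3 ls --recursive (date, time, size, key). The function names are hypothetical, and paths containing spaces are not handled. It compares names and sizes only, not checksums.

```shell
#!/usr/bin/env bash
# Normalize `hdfs dfs -ls -R <root>` output to sorted "relative_path<TAB>size" lines.
# Directories (permission string starting with "d") are skipped.
normalize_hdfs_ls() {
  local root="$1"
  awk -v root="$root" '$1 !~ /^d/ && NF >= 8 {
    path = $8
    if (index(path, root "/") == 1) path = substr(path, length(root) + 2)
    print path "\t" $5
  }' | sort
}

# Normalize `aws s3 ls --recursive s3://bucket/<prefix>/` output the same way.
normalize_s3_ls() {
  local prefix="$1"
  awk -v prefix="$prefix" 'NF >= 4 {
    key = $4
    if (index(key, prefix "/") == 1) key = substr(key, length(prefix) + 2)
    print key "\t" $3
  }' | sort
}

# Compare two normalized listings. Lines starting with "<" exist only in the
# first file (source); lines starting with ">" only in the second (target).
# Returns 0 when the listings match and 1 otherwise.
compare_listings() {
  if diff "$1" "$2" > /dev/null; then
    echo "listings match"
  else
    diff "$1" "$2" | grep '^[<>]'
    return 1
  fi
}

# Example usage (paths and bucket name are placeholders):
#   hdfs dfs -ls -R /data | normalize_hdfs_ls /data > src.tsv
#   aws s3 ls --recursive s3://your-bucket/data/ | normalize_s3_ls data > dst.tsv
#   compare_listings src.tsv dst.tsv
```

A size match does not prove the content is identical, so it is worth pairing this with sample queries on the migrated tables.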

If you prefer direct file-level copying at scale and want fine-grained control over throughput, consider running Apache DistCp from a cluster node and writing straight to S3 through the s3a connector (on EMR, s3-dist-cp is the Amazon-provided variant and uses its own option syntax):

hadoop distcp -m 50 -update -delete hdfs://namenode/data s3a://your-bucket/data

This command copies files in parallel (-m 50 caps the number of mappers at 50), copies only files that are missing or changed on the target (-update), and deletes target files that no longer exist on the source (-delete). Note that the options come before the source and target paths. Always test first with non-production datasets!

Using Azure Data Factory

Azure Data Factory (ADF) supports two main modes for migrating HDFS content into Azure Blob Storage or Data Lake Storage Gen2: DistCp mode leverages your existing cluster’s compute power while native integration runtime mode uses ADF-managed resources instead.

In DistCp mode ADF submits distributed copy jobs directly onto your cluster—a good fit if network bandwidth isn’t constrained internally but external links are limited.

Native integration runtime mode has ADF's integration runtime read from your HDFS endpoints itself. For an on-premises cluster this typically means a self-hosted integration runtime with network access to the NameNode and DataNodes, so firewall ports must be opened accordingly, which may involve coordination across networking and security teams.

Both methods let you partition large datasets by folder/date range so incremental syncs are possible without downtime risk:

1. Set up linked services in the ADF portal pointing at both the source HDFS endpoint and the destination storage account.

2. Author pipeline activities specifying either “Copy activity” (for native runtime) or “HDInsight activity” running a DistCp script.

3. Schedule incremental runs based on last-modified timestamps.

4. Monitor progress and errors via the built-in dashboard and alerts.

Always validate transferred files by comparing record counts/checksums before decommissioning old storage systems.

Method 2: Upgrading or Switching Hadoop Distributions Using Apache DistCp

Sometimes staying on-premises makes sense—but maybe you need better performance through newer hardware/software stacks or want vendor support changes that require switching distributions entirely.

Apache DistCp remains the go-to utility for moving petabyte-scale datasets between compatible clusters quickly thanks to its parallelism features:

1. Prepare target cluster:

Deploy new distribution/version ensuring network connectivity exists between old/new NameNodes/DataNodes

2. Run enhanced DistCp command:

hadoop distcp -update -delete -pbugpax -m 100 hdfs://source-cluster/path hdfs://target-cluster/path

Here -update copies only files that are missing or differ on the target; -delete removes target files that no longer exist on the source; -pbugpax preserves block size, user, group, permissions, ACLs, and extended attributes; -m 100 caps the number of mappers, which you should size to the available cores and network speed.

3. Migrate Hive metadata carefully:

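For minor version upgrades, dumping and restoring the metastore's backing database may suffice. Assuming a MySQL-backed metastore in a database named metastore (adjust both for your setup), dump on the old host and load on the new one:

mysqldump metastore > meta.sql
mysql metastore < meta.sql

Then rewrite table locations to the new cluster with hive --service metatool -updateLocation hdfs://target-cluster hdfs://source-cluster (new location first, old location second).

For major jumps (e.g., Hive 1.x → 3.x) consult the upgrade guides; Hive's schematool -upgradeSchema, manual schema evolution scripts, or the HMS-Mirror utility are sometimes required due to breaking changes.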

4. Validate thoroughly:

Compare directory/file counts pre/post-migration (hdfs dfs -count) plus sample queries against migrated tables/jobs before cutover

5. Cut over users/applications once satisfied—all traffic should point at new endpoints only after final delta sync completes successfully

DistCp also works well in hybrid workflows: an initial bulk load happens offline, then periodic incremental syncs keep the two environments aligned until switchover day arrives.
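One way to organize the bulk load and the follow-up incremental syncs is to generate the same DistCp command per directory and rerun the plan on a schedule; because -update only copies files that are missing or differ, repeated passes transfer only the delta. The sketch below only prints the commands. The function name print_sync_plan, the SRC_NN and DST_NN variables, and the directory names are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Placeholder NameNode URIs; override them in the environment for a real cluster.
SRC_NN="${SRC_NN:-hdfs://source-cluster}"
DST_NN="${DST_NN:-hdfs://target-cluster}"

# Print (do not run) one DistCp command per directory so each dataset can be
# synced, validated, and re-synced independently.
# Usage: print_sync_plan <mappers> <dir> [<dir> ...]
print_sync_plan() {
  local mappers="$1"; shift
  local dir
  for dir in "$@"; do
    echo "hadoop distcp -update -delete -pbugpax -m ${mappers} ${SRC_NN}${dir} ${DST_NN}${dir}"
  done
}

# Example: review the plan first, then pipe it to `sh` to execute it.
#   print_sync_plan 100 /data/sales /data/finance
```

Printing before executing keeps a record of exactly what ran, which can go straight into the migration runbook.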

Challenges in Hadoop Migration Projects

No two migrations look alike—but certain pain points crop up again and again:

Large data volumes slow things down unless you phase the work (“move by business unit,” anyone?) or throttle transfers during busy windows. Network bandwidth constraints demand careful scheduling outside peak hours, or even physical disk shipping if links are too slow!

Metadata headaches abound, especially around Hive schemas and custom UDF compatibility across versions and distributions, so always dry-run upgrades first! Reconciling applications and scripts takes time, since hard-coded paths and configurations rarely match perfectly between old and new environments (“grep ‘/old/path’ *.sh” saves lives).

Testing and validation cannot be skipped. Run checksum comparisons and sample queries regularly throughout the project rather than waiting until the end, when rollback gets harder each day! User training and change management need attention too, since even small UI tweaks confuse long-time operators.

Mitigation strategies include:

Throttle transfer rates during peak hours;

Use pilot migrations for timing estimates;

Automate validation scripts wherever possible;

Maintain detailed runbooks documenting every change made along the way.

Have you ever underestimated how much documentation helps future-you?
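The first mitigation, throttling during peak hours, can be sketched with DistCp's -bandwidth option, which limits each map task in MB per second. The peak window (08:00 to 19:59), both caps, the mapper count, and the function names below are illustrative assumptions, not recommended values.

```shell
#!/usr/bin/env bash
# Pick a per-mapper bandwidth cap in MB/s from the hour of day (0-23).
bandwidth_for_hour() {
  local hour="$1"
  if [ "$hour" -ge 8 ] && [ "$hour" -lt 20 ]; then
    echo 10     # business hours: leave headroom for production jobs
  else
    echo 100    # off-peak: allow faster transfers
  fi
}

# Print a throttled DistCp command for the given hour, source, and target.
throttled_distcp_cmd() {
  local hour="$1" src="$2" dst="$3"
  echo "hadoop distcp -update -bandwidth $(bandwidth_for_hour "$hour") -m 20 $src $dst"
}

# Example usage (cluster URIs are placeholders):
#   throttled_distcp_cmd "$(date +%H)" hdfs://source-cluster/data hdfs://target-cluster/data
```

The effective ceiling is roughly the per-mapper cap multiplied by the mapper count, so both numbers should be tuned together against a pilot run.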

How to Protect Hadoop Data with Vinchin Backup & Recovery?

While robust storage architectures like Hadoop HDFS provide inherent resilience, comprehensive backup remains essential for true data protection. Vinchin Backup & Recovery is an enterprise-grade solution purpose-built for safeguarding mainstream file storage—including Hadoop HDFS environments—as well as Windows/Linux file servers, NAS devices, and S3-compatible object storage. Specifically optimized for large-scale platforms like Hadoop HDFS, Vinchin Backup & Recovery delivers exceptionally fast backup speeds that surpass competing products thanks to advanced technologies such as simultaneous scanning/data transfer and merged file transmission.

Among its extensive capabilities, five stand out as particularly valuable for protecting critical big-data assets: incremental backup (capturing only changed files), wildcard filtering (targeting specific datasets), multi-level compression (reducing space usage), cross-platform restore (recovering backups onto any supported target including other file servers/NAS/Hadoop/object storage), and integrity check (verifying backups remain unchanged). Together these features ensure efficient operations while maximizing security and flexibility across diverse infrastructures.

Vinchin Backup & Recovery offers an intuitive web console designed for simplicity. To back up your Hadoop HDFS files:

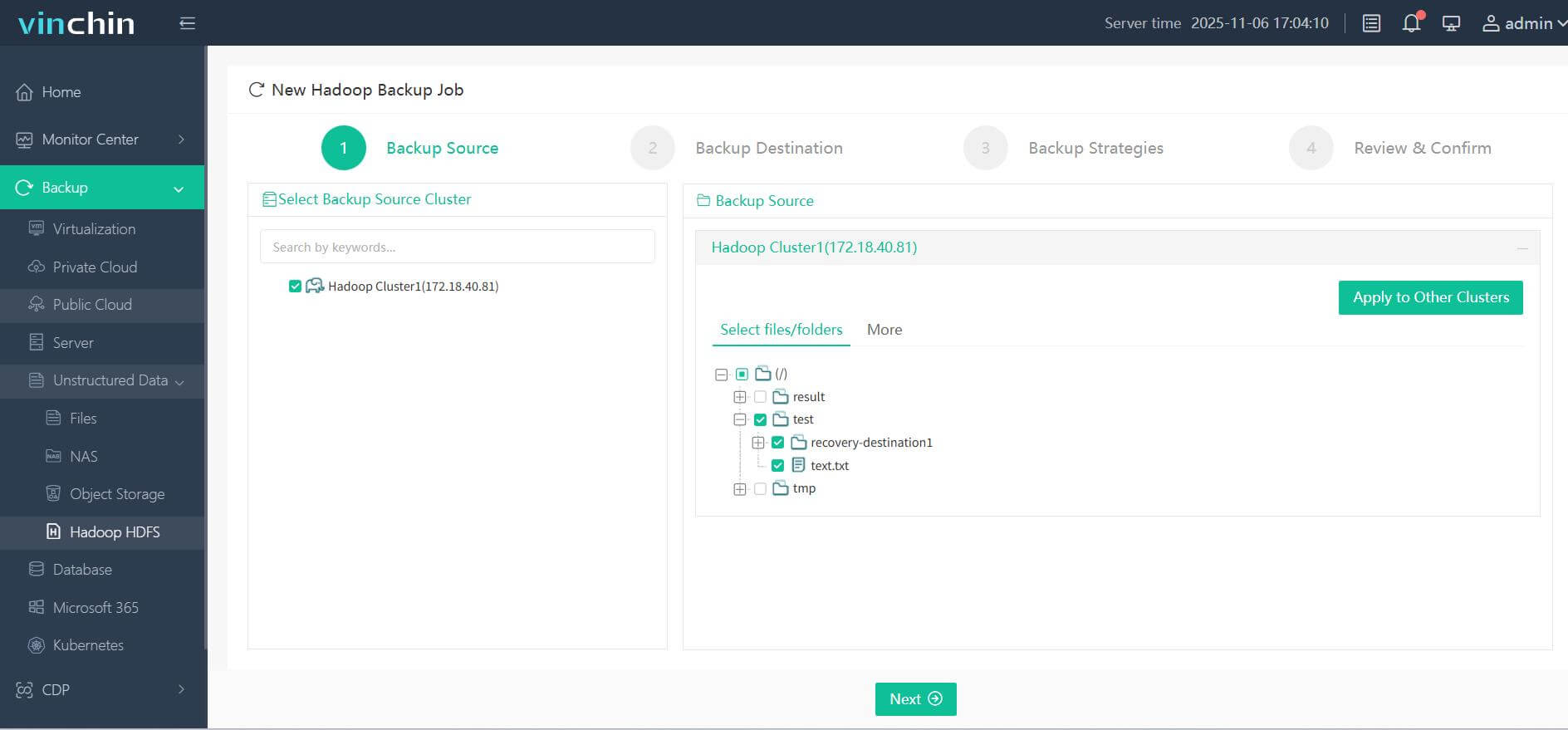

Step 1. Select the Hadoop HDFS files you wish to back up

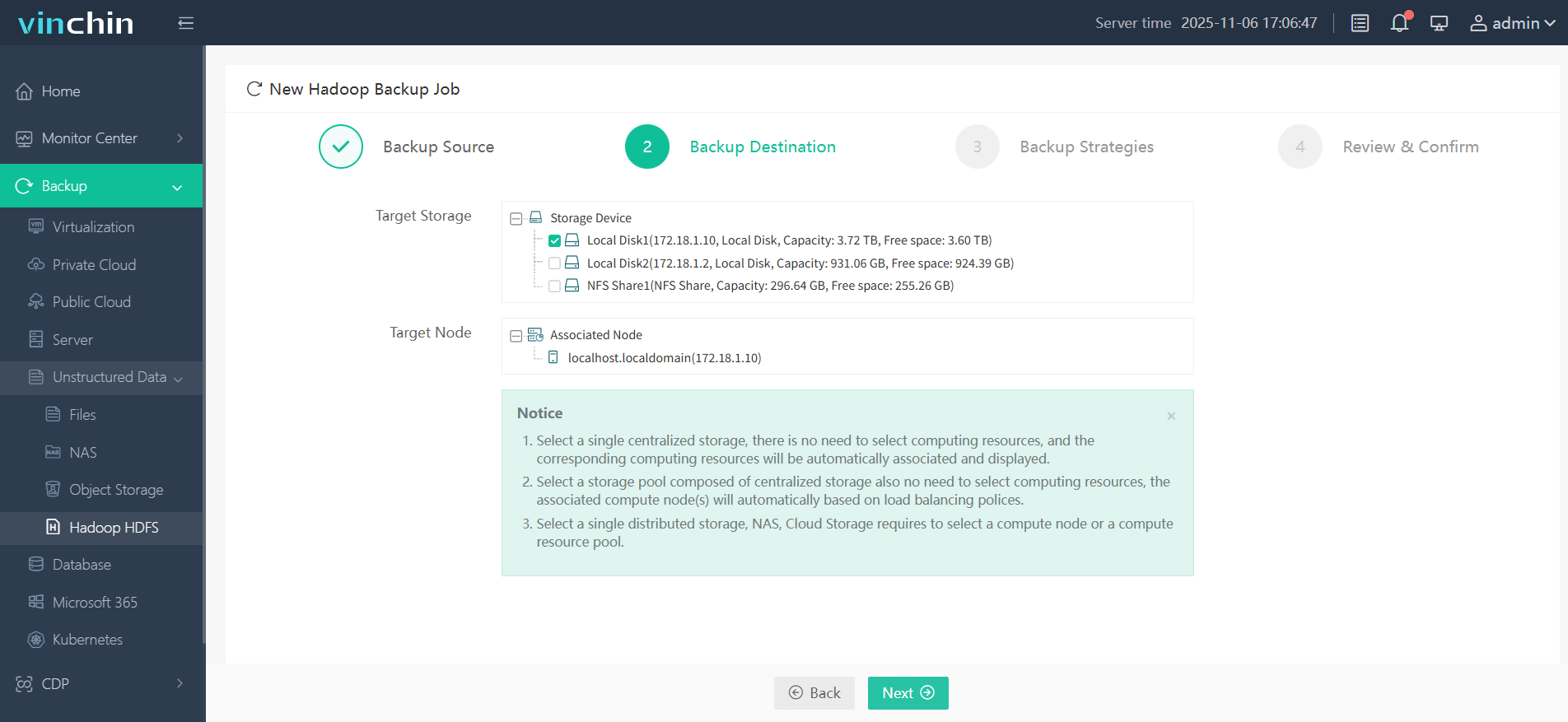

Step 2. Choose your desired backup destination

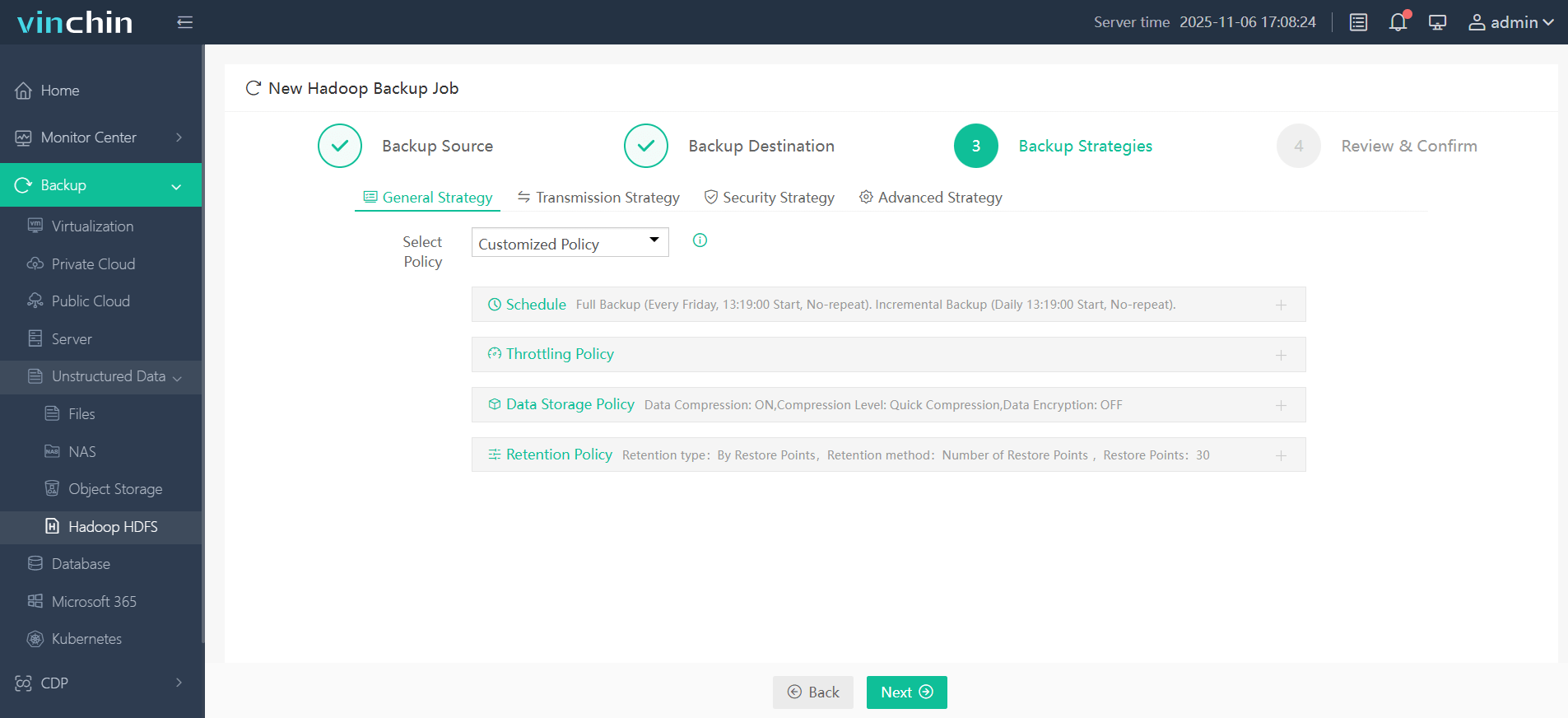

Step 3. Define backup strategies tailored for your needs

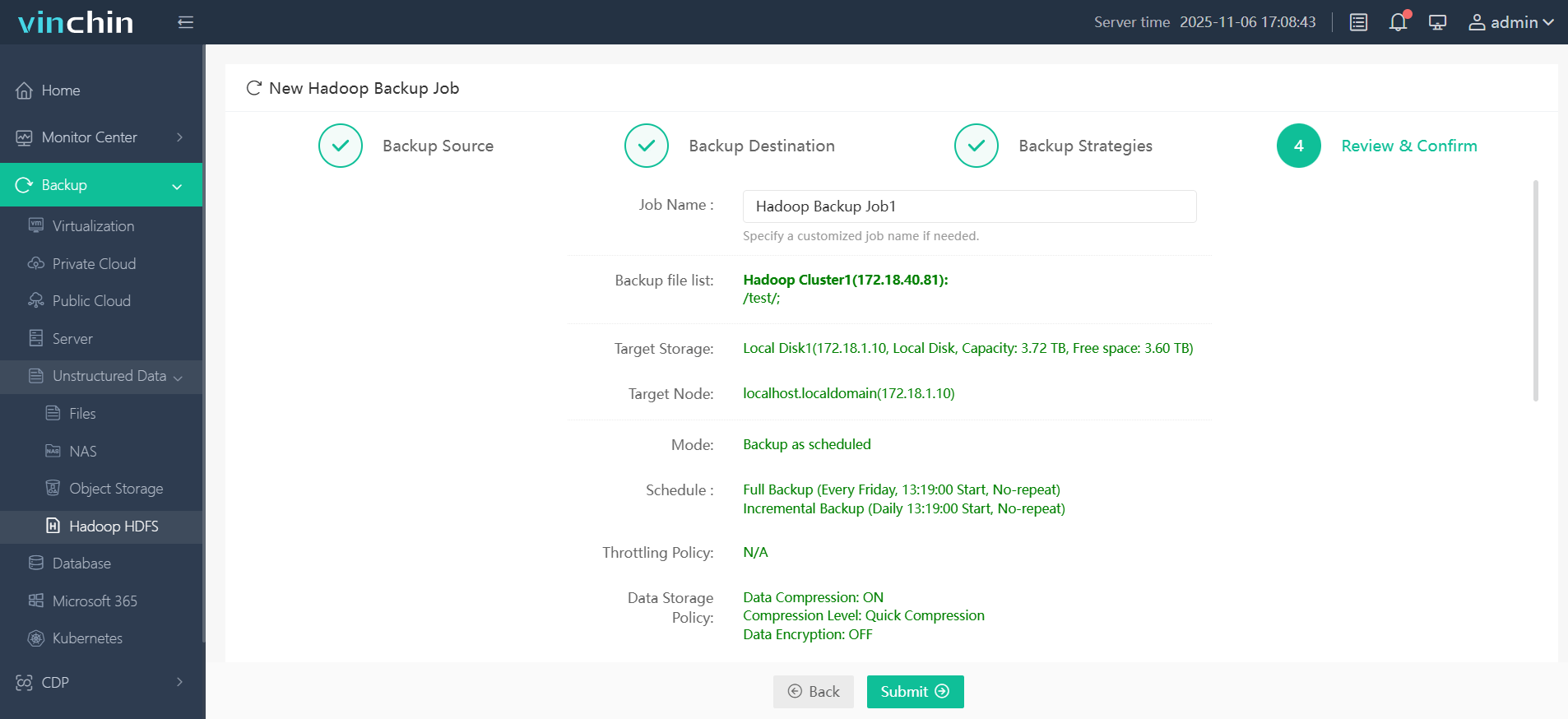

Step 4. Submit the job

Join thousands of global enterprises who trust Vinchin Backup & Recovery—renowned worldwide with top ratings—for reliable data protection. Try all features free with a 60-day trial; click below to get started!

Hadoop Migration FAQs

Q1: How do I handle encrypted HDFS blocks during cross-cloud migrations?

A1: Enable SSL/TLS encryption during transfer processes such as DistCp or leverage cloud-native key management services when configuring destination storage accounts.

Q2: What’s an effective way to estimate total project duration?

A2: Perform a pilot run transferring about one terabyte, then extrapolate from the measured throughput, adding buffer time for retries and testing phases as needed.
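The extrapolation is simple arithmetic: scale the pilot duration by the ratio of total to pilot volume, then add the buffer. Here is a minimal sketch; the function name estimate_hours and the sample figures are hypothetical, and it assumes throughput stays roughly constant at full scale.

```shell
#!/usr/bin/env bash
# Estimate total transfer time in hours from a pilot run.
# Usage: estimate_hours <total_tb> <pilot_tb> <pilot_minutes> <buffer_percent>
estimate_hours() {
  local total_tb="$1" pilot_tb="$2" pilot_minutes="$3" buffer_pct="$4"
  awk -v t="$total_tb" -v p="$pilot_tb" -v m="$pilot_minutes" -v b="$buffer_pct" \
    'BEGIN { printf "%.1f\n", (t / p) * (m / 60) * (1 + b / 100) }'
}

# Example: 500 TB in total, a 1 TB pilot that took 30 minutes, 20% buffer.
#   estimate_hours 500 1 30 20    # prints 300.0
```

In practice throughput tends to vary with file sizes and cluster load, so rerunning the pilot on a dataset with many small files gives a more conservative bound.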

Q3: How should I reconcile custom shell scripts referencing old paths after cutover?

A3: Search recursively through code repositories using grep or find, update the hard-coded references, and test thoroughly before the production switch-over.
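The recursive search can be wrapped in a small helper. This sketch assumes a grep that supports -r and --include (GNU and BSD grep both do); the function name find_old_paths and the /old/path prefix are placeholders.

```shell
#!/usr/bin/env bash
# List every shell script line under <repo_dir> that mentions <old_prefix>.
# Output format is grep's usual file:line_number:matching_line.
# -F treats the prefix as a literal string rather than a regular expression.
find_old_paths() {
  local repo_dir="$1" old_prefix="$2"
  grep -rnF --include='*.sh' -- "$old_prefix" "$repo_dir"
}

# Example usage (directory and prefix are placeholders):
#   find_old_paths ~/etl-scripts /old/path
```

Reviewing the matches before editing anything avoids rewriting strings that only look like paths, such as comments or log messages.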

Conclusion

Hadoop migration takes planning, from assessment through execution, to ensure success without surprises along the way. Whether you are moving workloads to the cloud, switching distributions, or onboarding VMs, cautious stepwise testing pays off. Vinchin simplifies VM moves. Download their free trial today and experience seamless transitions firsthand!
