
Containers have changed how we build and run applications across environments. With Docker, you can package software so it runs the same way everywhere, from your laptop to production servers. Kubernetes lets you manage those containers at scale, with automation and resilience built in. But what does this mean for operations administrators? Let's explore, step by step, how Docker and Kubernetes work together.

What Is Docker?

Docker is an open-source platform that makes building, packaging, and running containerized applications simple. Containers are lightweight units that bundle everything an app needs (code, libraries, and settings), so the same container runs on any system with Docker installed.

The core of Docker is the Docker Engine, which creates and manages containers on your host machine or server. You define how to build your application image using a text file called a Dockerfile. Once built, this image can be shared through registries like Docker Hub or private repositories.

For multi-container setups, such as a web server plus a database, you can use Docker Compose to define all the services in a single YAML file (docker-compose.yml). This helps developers reproduce a production-like stack locally while giving ops teams predictable deployments in staging or production environments.
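A minimal docker-compose.yml for the web-plus-database case might look like the sketch below. The service names (web, db), the postgres:16 image, the port mapping, and the password value are illustrative assumptions, and build: . assumes a Dockerfile sits in the same directory.

```yaml
# docker-compose.yml: hypothetical two-service setup (web app + database)
services:
  web:
    build: .                # build the image from the Dockerfile in this directory
    ports:
      - "8080:80"           # host port 8080 -> container port 80
    environment:
      DATABASE_HOST: db     # services reach each other by service name
    depends_on:
      - db                  # start db before web (start order only, not readiness)
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example   # placeholder only; use proper secrets in real deployments
    volumes:
      - db-data:/var/lib/postgresql/data   # keep database files across container restarts

volumes:
  db-data:
```

Running docker compose up -d in that directory would build the web image and start both containers on a shared network.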

What Is Kubernetes?

Kubernetes is an open-source system designed to automate deploying, scaling, networking, and managing containerized applications across clusters of machines (nodes). Originally created by engineers at Google, which was running billions of containers a week, Kubernetes has become the industry standard for container orchestration.

A typical cluster includes:

A control plane that schedules workloads,

Worker nodes that actually run your containers inside pods,

Networking components that connect everything together,

And controllers that monitor health and enforce desired state automatically.

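If a container crashes, Kubernetes restarts it, and if a pod is lost, the controller that owns it (for example, a Deployment) creates a replacement, with no manual intervention needed. If demand spikes, Kubernetes can scale out replicas automatically based on autoscaling policies you set ahead of time.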
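Scaling on demand is typically configured with a HorizontalPodAutoscaler. The sketch below assumes a Deployment named my-app, a cluster with metrics-server installed, and CPU requests set on the pods (utilization is measured against those requests); the 70% target and the 2 to 10 replica range are arbitrary example values.

```yaml
# Hypothetical autoscaling policy for a Deployment named my-app
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:            # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2             # never scale below this
  maxReplicas: 10            # never scale above this
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # aim for 70% of the pods' requested CPU on average
```

Once metrics are flowing, kubectl get hpa shows current versus target utilization and the replica count the autoscaler has chosen.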

Key Kubernetes Concepts for Ops

Understanding some basic terms helps:

A pod is the smallest deployable unit—a wrapper around one or more containers.

Nodes are physical or virtual machines running pods.

The control plane manages scheduling decisions.

Services expose pods internally or externally via stable endpoints.

Namespaces let you segment resources logically within a cluster—for example by team or environment.

Mastering these concepts makes troubleshooting much easier down the line!
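To see how namespaces and pods map to real objects, here is a minimal sketch: a namespace and a single-container pod inside it. The names team-a and web and the nginx:1.27 image are illustrative assumptions.

```yaml
# Hypothetical example: a namespace and one pod placed inside it
apiVersion: v1
kind: Namespace
metadata:
  name: team-a               # logical boundary, e.g. per team or environment
---
apiVersion: v1
kind: Pod
metadata:
  name: web
  namespace: team-a          # the pod lives in the namespace defined above
  labels:
    app: web                 # labels let Services and controllers select this pod
spec:
  containers:                # a pod wraps one or more containers
    - name: web
      image: nginx:1.27
      ports:
        - containerPort: 80  # port the container listens on
```

Applying this with kubectl apply -f creates both objects, and kubectl get pods -n team-a lists the pod. In practice you would usually let a Deployment create pods rather than defining bare pods, as in the kubectl walkthrough later in this article.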

Why Use Docker With Kubernetes?

Why pair Docker with Kubernetes instead of using just one? Each solves a different problem:

Docker simplifies packaging apps into portable units, which is great for consistency between developers' laptops and cloud servers. But as soon as you need high availability across multiple hosts, or automated scaling, managing containers by hand gets complex fast.

That's where Kubernetes shines: it orchestrates many containers across a cluster, placing them on nodes, replacing failed instances, and continuously steering the cluster back to the state you declared.

For example, imagine deploying a microservices app with dozens of components (APIs, databases, and so on). With Docker and Kubernetes working together:

Developers push new features as updated images,

Operators update deployments safely using rolling updates,

Traffic routing adapts automatically if nodes go down,

Security policies isolate workloads by namespace,

And all of this happens while the application keeps serving users.
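The rolling updates mentioned above are controlled by the strategy field of a Deployment. The sketch below is illustrative: the replica count, the image registry.example.com/my-app:1.4.2, and the surge settings are assumptions (when you set nothing, Kubernetes uses 25% for both maxSurge and maxUnavailable).

```yaml
# Hypothetical Deployment showing how a rolling update is paced
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 4
  strategy:
    type: RollingUpdate        # the default strategy for Deployments
    rollingUpdate:
      maxSurge: 1              # at most 1 extra pod above the desired count during an update
      maxUnavailable: 0        # never drop below the desired number of available pods
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: registry.example.com/my-app:1.4.2   # hypothetical image; changing this tag triggers a rollout
```

After changing the image tag and reapplying, kubectl rollout status deployment/my-app follows the rollout, and kubectl rollout undo deployment/my-app returns to the previous revision.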

Common integration benefits include rapid rollouts/rollbacks (using image tags), simplified CI/CD pipelines (build once/deploy anywhere), robust self-healing mechanisms (auto-restart failed pods), plus granular access controls via RBAC.
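The RBAC controls mentioned above are defined with Role and RoleBinding objects. As a hedged sketch, the following grants a hypothetical user named jane read-only access to pods and their logs in a hypothetical team-a namespace; the user name must match whatever identity your cluster's authentication reports.

```yaml
# Hypothetical read-only pod access for one user in one namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: team-a
rules:
  - apiGroups: [""]                      # "" is the core API group, where pods live
    resources: ["pods", "pods/log"]
    verbs: ["get", "list", "watch"]      # read-only verbs
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: team-a                      # the binding only applies inside this namespace
subjects:
  - kind: User
    name: jane                           # hypothetical user name
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

An administrator can check the effect with kubectl auth can-i list pods --namespace team-a --as jane.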

Is it possible to use only Docker? Yes, but managing hundreds of containers by hand isn't sustainable in the long term. That's why production teams typically combine the two: Docker (or a compatible tool) to build images and Kubernetes to run them.

How to Deploy Containers on Kubernetes Using Kubectl?

Deploying Docker containers onto a live cluster starts with building your image and pushing it to a registry the cluster can pull from, and ends with exposing your service outside the cluster if needed. Here's how admins typically do it:

First, confirm access with kubectl cluster-info, and make sure your kubeconfig points at the right cluster context (kubectl config current-context shows which one is active) before proceeding.

Next, create a Deployment manifest (app-deployment.yaml). Here's an annotated example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 2                          # Number of pod copies for high availability
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: your-docker-image:latest   # Replace with your actual repo/image:tag
          ports:
            - containerPort: 80        # App listens here inside the container
          resources:
            requests:
              cpu: "100m"
              memory: "128Mi"
            limits:
              cpu: "500m"
              memory: "256Mi"
```

Apply this manifest using:

```bash
kubectl apply -f app-deployment.yaml
```

Check status:

```bash
kubectl get deployments
kubectl get pods
```

If everything looks good (the READY column shows the expected count, 2/2 for this Deployment), expose your deployment so users can reach it from outside the cluster:

```bash
kubectl expose deployment my-app --type=NodePort --port=80 --name=my-app-service
kubectl get services
```

Find the assigned NodePort in the PORT(S) column (the number after the colon, in the default range 30000-32767), then open http://<node-ip>:<node-port> from any machine that can reach the node.
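If you prefer to keep everything in version-controlled manifests, roughly the same Service can be written declaratively and applied with kubectl apply -f. This is a sketch that assumes the app: my-app labels from the Deployment above; the fixed nodePort of 30080 is an assumption and must be free in your cluster.

```yaml
# my-app-service.yaml: declarative counterpart of the kubectl expose command (sketch)
apiVersion: v1
kind: Service
metadata:
  name: my-app-service
spec:
  type: NodePort
  selector:
    app: my-app          # must match the pod labels in the Deployment template
  ports:
    - port: 80           # Service port inside the cluster
      targetPort: 80     # containerPort of the pods
      nodePort: 30080    # optional; omit it to let Kubernetes pick a free port
```

Keeping the Service in a file means the same definition can be reviewed, versioned, and reapplied to other clusters.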

Remember to clean up test resources when done:

```bash
kubectl delete service my-app-service && kubectl delete deployment my-app
```

How to Deploy Containers on Kubernetes Using Helm?

Helm streamlines complex deployments by templating manifests into reusable packages called charts—a huge win when managing multi-tier apps!

Start by installing Helm from its official site; verify installation with helm version.

Add trusted chart repositories next—for instance Bitnami offers many prebuilt charts:

```bash
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo update
```

To install NGINX as an example web server:

```bash
helm install my-nginx bitnami/nginx --set service.type=NodePort --set service.nodePorts.http=30080
```

This command creates all the required objects (Deployment, Service, and so on) automatically. Validate success using:

```bash
kubectl get all | grep nginx
helm list
```

Want custom configs? Pass extra flags such as --set replicaCount=3 during install, or collect your overrides in a values file and pass it with -f. To change the settings of a release that is already running, upgrade it:

```bash
helm upgrade my-nginx bitnami/nginx --set replicaCount=5
```
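Instead of stacking --set flags, the same overrides can live in a values file. The sketch below is a hypothetical my-values.yaml whose keys mirror the --set flags used earlier for bitnami/nginx; key names are chart-specific, so confirm them against helm show values bitnami/nginx for the chart version you install.

```yaml
# my-values.yaml: hypothetical overrides for the bitnami/nginx chart
replicaCount: 3          # same key as --set replicaCount=3
service:
  type: NodePort         # same as --set service.type=NodePort
  nodePorts:
    http: 30080          # same as --set service.nodePorts.http=30080
```

Something like helm upgrade --install my-nginx bitnami/nginx -f my-values.yaml would then install the release or update it in place, and the file can be kept under version control.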

To remove everything cleanly:

```bash
helm uninstall my-nginx
```

Helm can also lint a chart before you install it (helm lint <chart>), which catches typos and template errors early, and roll back a release (helm rollback <release> <revision>) if an upgrade fails; helm history <release> lists the available revisions.
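For charts you maintain yourself, helm create <name> scaffolds a chart directory that helm lint can check. Its core is Chart.yaml; a minimal hypothetical version for the my-app example might look like this, with the description and version numbers as placeholders.

```yaml
# my-app/Chart.yaml: hypothetical metadata for a self-maintained chart
apiVersion: v2             # chart API version used by Helm 3
name: my-app
description: Example chart for the my-app Deployment and Service
type: application
version: 0.1.0             # chart version (SemVer); bump it whenever the chart changes
appVersion: "1.4.2"        # version of the application being deployed (informational)
```

Manifests such as the Deployment and Service go into my-app/templates/, after which helm lint ./my-app and helm install my-app ./my-app would validate and deploy the chart.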

Vinchin Backup & Recovery – Enterprise-Level Protection for Your Kubernetes Workloads

Once your Docker and Kubernetes clusters are running reliably, safeguarding their data becomes essential for business continuity and compliance. Vinchin Backup & Recovery is a professional, enterprise-level solution purpose-built for backup and recovery in modern Kubernetes environments. Its capabilities include full and incremental backups; fine-grained restores at the cluster, namespace, application, and PVC levels; policy-based automation alongside one-off jobs; cross-cluster and cross-version recovery, even between heterogeneous storage backends; and encrypted transmission throughout every process. Together, these features let organizations build flexible protection strategies while improving efficiency and reducing risk across dynamic container infrastructure.

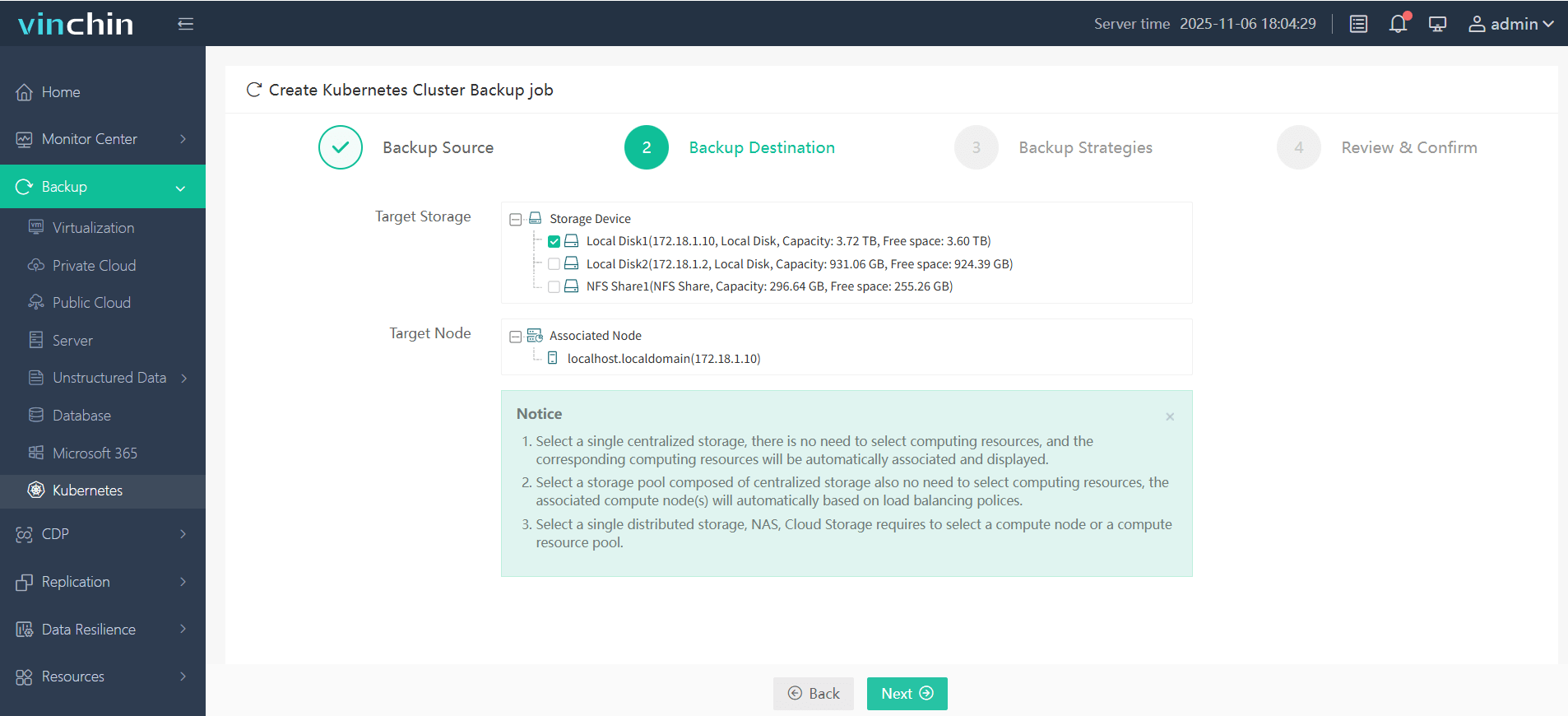

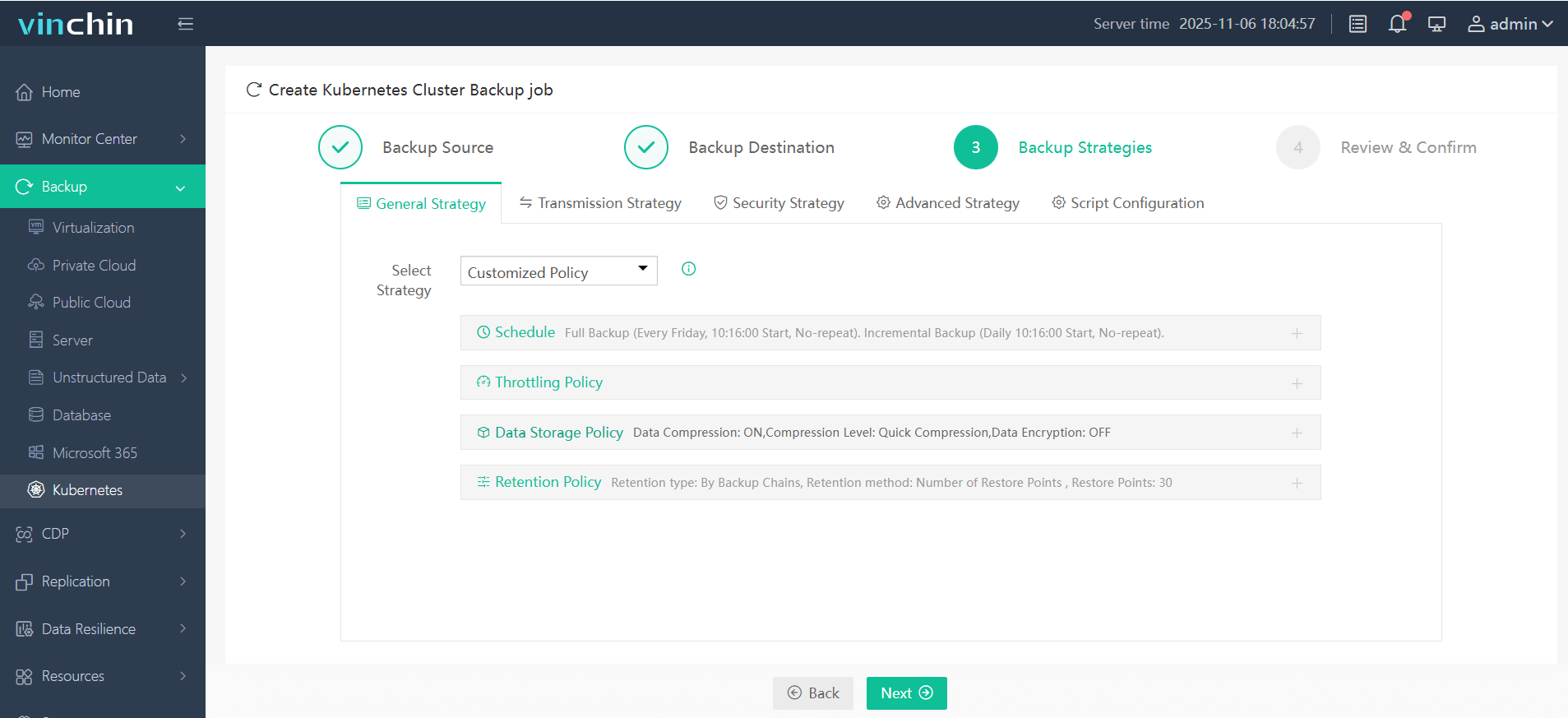

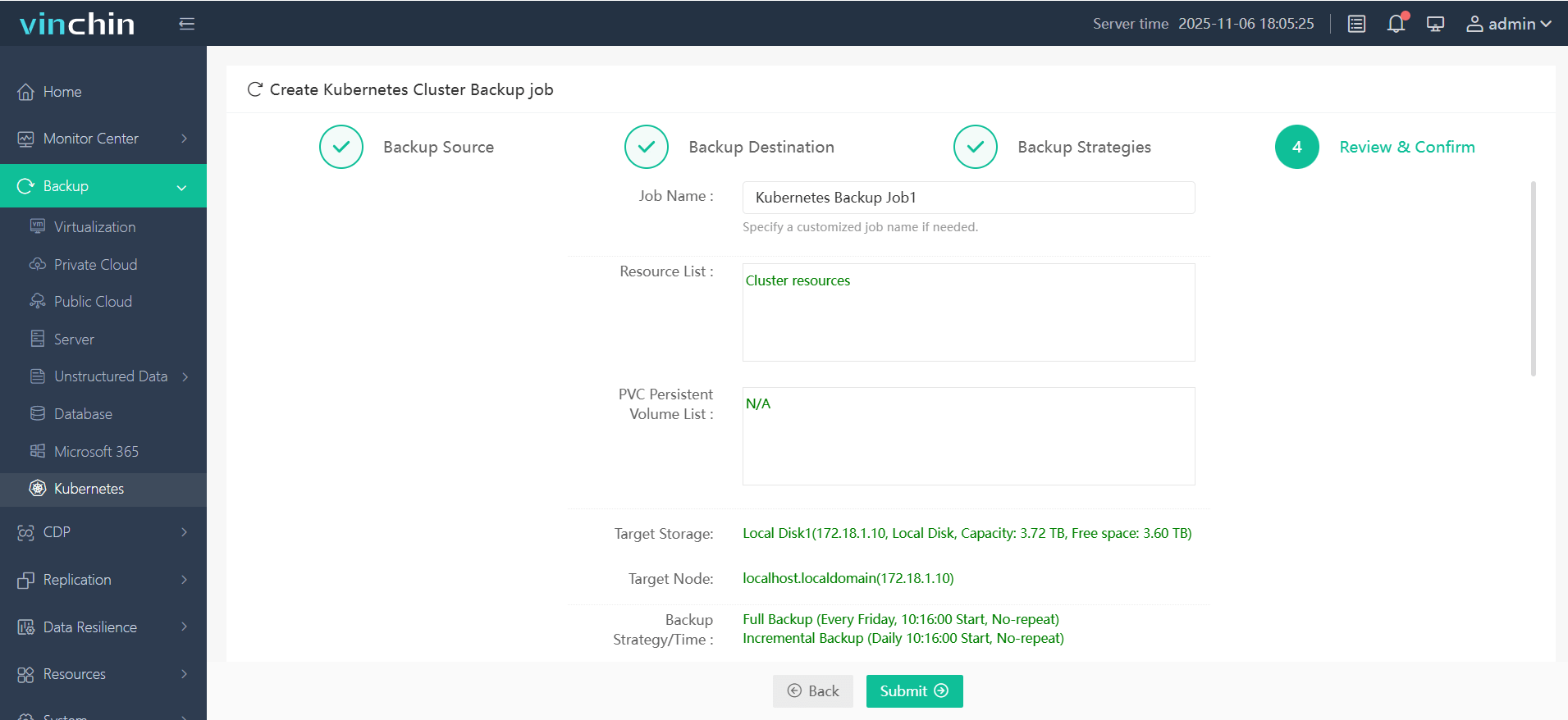

Vinchin Backup & Recovery offers an intuitive web console designed for operational simplicity, even at scale, and streamlines Kubernetes backup workflows into four steps:

Step 1. Select the backup source

Step 2. Choose the backup storage

Step 3. Define the backup strategy

Step 4. Submit the job

Recognized globally among enterprise data-protection leaders, with top ratings from customers worldwide, Vinchin Backup & Recovery offers a fully featured free trial valid for 60 days so you can experience its protection firsthand.

Docker Kubernetes FAQs

Q1: Can I still use existing Docker images now that dockershim has been deprecated and removed from Kubernetes?

A1: Yes. Images built with Docker follow the OCI image format, so container runtimes such as containerd and CRI-O, which now run containers in place of Docker Engine, can pull and run them unchanged [source].

Q2: What should I do if my pod stays stuck in ImagePullBackOff?

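A2: First check the image name, tag, and registry credentials; kubectl describe pod <pod-name> shows the exact pull error in its Events section. If those are correct and pulls keep failing, check the node's network access and firewall rules toward the registry.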
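When the cause is a private registry, the usual fix is a registry-credentials Secret referenced from the pod spec. The sketch assumes a Secret named regcred created beforehand with kubectl create secret docker-registry regcred --docker-server=registry.example.com --docker-username=<user> --docker-password=<password>; the registry host and image name are hypothetical.

```yaml
# Hypothetical pod that pulls a private image using stored registry credentials
apiVersion: v1
kind: Pod
metadata:
  name: private-app
spec:
  imagePullSecrets:
    - name: regcred          # Secret of type kubernetes.io/dockerconfigjson in the same namespace
  containers:
    - name: private-app
      image: registry.example.com/team/private-app:1.0.0   # hypothetical private image
```

The same imagePullSecrets field works inside a Deployment's pod template (spec.template.spec).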

Q3: How do I monitor resource usage per pod?

A3: Install the metrics-server add-on, then run kubectl top pod; for deeper analytics and long-term trends, integrate a Prometheus and Grafana stack.

Q4: Can I perform zero-downtime updates with Docker and Kubernetes?

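A4: Yes. Deployments use rolling updates by default, so you can update the image tag in the Deployment manifest and reapply it (or run kubectl set image). Kubernetes then replaces pods gradually, and as long as readiness probes are configured, traffic moves to new pods only once they are ready, without user impact.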
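Rolling updates can only avoid downtime if Kubernetes knows when a new pod is ready to serve. A readiness probe provides that signal; the fragment below belongs under spec.template.spec of the my-app Deployment and assumes the app exposes a hypothetical /healthz endpoint on port 80.

```yaml
# Fragment for spec.template.spec in the my-app Deployment (hypothetical health endpoint)
containers:
  - name: my-app
    image: your-docker-image:1.0.1    # placeholder for the new version being rolled out
    ports:
      - containerPort: 80
    readinessProbe:
      httpGet:
        path: /healthz                # assumes the app answers 200 here when ready
        port: 80
      initialDelaySeconds: 5          # wait before the first check
      periodSeconds: 5                # re-check every 5 seconds
```

While a pod fails its readiness probe it is left out of Service endpoints, and kubectl rollout status deployment/my-app reports when the rollout has finished.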

Conclusion

Using Docker and Kubernetes together gives you consistent packaging and fast iteration in development, plus resilient, automated operations in production. For peace of mind around backup and recovery, Vinchin delivers proven protection purpose-built for modern clusters; try its free trial today.
