- What Are S3 Lifecycle Policies?
- Why Use S3 Lifecycle Policies?
- Method 1: Setting Up S3 Lifecycle Policies Using AWS Console
- Method 2: Configuring S3 Lifecycle Policies with AWS CLI
- How to Protect Object Storage with Vinchin Backup & Recovery?
- FAQs About Managing S3 Lifecycle Policies
- Conclusion

Managing cloud storage can feel overwhelming as data grows every day. Costs rise fast if you do not control what stays or goes in your buckets. S3 lifecycle policies help automate this process by moving or deleting objects based on rules you set—saving money while keeping your data organized and compliant.

What Are S3 Lifecycle Policies?

S3 lifecycle policies are automated rules that manage how objects are stored or deleted within Amazon S3 buckets over time. These rules let you move files between storage classes or remove them after a certain period—without manual effort.

You can apply these policies to all objects in a bucket or target specific objects using filters such as prefixes (for folders), tags (for labeled objects), or object size (to include only objects above or below a given size). For example, you might set a policy that moves logs from S3 Standard to S3 Glacier Flexible Retrieval after 90 days and then deletes them after one year, with no manual work at all.
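To make the logs example concrete, here is a minimal sketch that builds it as a Python dictionary in the shape of an S3 lifecycle rule (the same structure the AWS CLI's JSON file uses). The helper name `build_log_rule` and the rule ID are hypothetical; `GLACIER` is the storage-class value for S3 Glacier Flexible Retrieval.

```python
import json


def build_log_rule(prefix: str = "logs/", archive_days: int = 90,
                   expire_days: int = 365) -> dict:
    """Hypothetical helper: one rule that archives, then expires, objects under `prefix`.

    Keys follow the S3 lifecycle configuration schema used by the AWS CLI and SDKs.
    """
    # Sanity check added by this sketch: expiring on or before the transition
    # day would make the archive step pointless.
    if expire_days <= archive_days:
        raise ValueError("expire_days must be greater than archive_days")
    return {
        "ID": "ArchiveThenExpireLogs",  # hypothetical rule name
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},  # only keys starting with this prefix
        # "GLACIER" is the storage-class value for S3 Glacier Flexible Retrieval
        "Transitions": [{"Days": archive_days, "StorageClass": "GLACIER"}],
        "Expiration": {"Days": expire_days},  # delete after this many days
    }


if __name__ == "__main__":
    print(json.dumps({"Rules": [build_log_rule()]}, indent=2))
```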

Lifecycle policies work for both versioned and non-versioned buckets but behave differently depending on your setup:

In versioned buckets, you can control both current versions (the latest file) and noncurrent versions (older copies).

In non-versioned buckets, actions only affect the single copy of each object.
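In the configuration itself, the versioned case shows up as separate keys. The sketch below assumes a versioned bucket and uses a hypothetical helper and rule ID. `Expiration` on a current version adds a delete marker, so the object's data becomes a noncurrent version; `NoncurrentVersionExpiration` then permanently removes noncurrent versions a set number of days after they stopped being current. In a non-versioned bucket, `Expiration` alone deletes the object outright.

```python
import json


def build_version_cleanup_rule(prefix: str, current_days: int, noncurrent_days: int) -> dict:
    """Hypothetical helper for a versioned bucket."""
    return {
        "ID": "ExpireThenPurgeOldVersions",  # hypothetical rule name
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},
        # Current versions: in a versioned bucket, S3 adds a delete marker after
        # `current_days`, so the object's data becomes a noncurrent version.
        "Expiration": {"Days": current_days},
        # Noncurrent versions: permanently deleted `noncurrent_days` after they
        # stopped being the current version.
        "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
    }


if __name__ == "__main__":
    rule = build_version_cleanup_rule("docs/", 180, 30)
    print(json.dumps({"Rules": [rule]}, indent=2))
```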

Why Use S3 Lifecycle Policies?

S3 lifecycle policies offer several advantages for operations teams who want efficient data management:

They automate transitions between storage classes, so data that reaches a set age (and is rarely read after that) moves to cheaper options like S3 Glacier automatically.

They delete outdated logs or temporary files without manual cleanup.

They help enforce compliance by ensuring sensitive data is removed after its retention period ends.

They reduce human error by handling repetitive tasks through automation.

These benefits make it easier to meet organizational needs around cost savings and regulatory requirements—especially when managing large-scale environments with thousands of objects across many buckets.

Policies also support fine-grained control through filters such as prefixes and tags, so you can apply different actions to different types of data within the same bucket, which is essential for the complex workflows common in enterprise IT.
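As a sketch of such a combined filter: when a rule uses more than one condition (for example a prefix plus tags), S3 expects them wrapped in an `And` element, and an object must satisfy all of them. The helper name, rule ID, prefix, tag, and size below are hypothetical example values.

```python
import json


def build_filtered_rule(prefix: str, tags: dict, min_size_bytes: int, expire_days: int) -> dict:
    """Hypothetical helper: expire objects matching ALL of prefix, tags, and size."""
    return {
        "ID": "ExpireLargeTaggedReports",  # hypothetical rule name
        "Status": "Enabled",
        "Filter": {
            # "And" wraps multiple conditions; every one must match
            "And": {
                "Prefix": prefix,
                "Tags": [{"Key": k, "Value": v} for k, v in tags.items()],
                "ObjectSizeGreaterThan": min_size_bytes,  # bytes
            }
        },
        "Expiration": {"Days": expire_days},
    }


if __name__ == "__main__":
    rule = build_filtered_rule("reports/", {"retention": "short"}, 1_048_576, 180)
    print(json.dumps({"Rules": [rule]}, indent=2))
```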

Method 1: Setting Up S3 Lifecycle Policies Using AWS Console

The AWS Management Console offers an easy way to create S3 lifecycle policies through a visual interface—no coding required! Here’s how:

First, sign in to your AWS account and open the S3 console. From your list of buckets, click the name of the bucket where you want to add a policy.

Next, click the Management tab in the bucket view; this is where lifecycle rules are configured.

Click Create lifecycle rule to start building your policy:

1. Enter a descriptive name under Lifecycle rule name so others know what this rule does later.

2. Define which objects it applies to:

Use Apply to all objects in the bucket if you want broad coverage,

Or select filters such as:

Prefix: Targets files whose names start with certain text (like “logs/”),

Tags: Applies only if an object has specific metadata labels,

Object size greater than/less than: Limits action based on file size.
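Before saving a rule, it can help to check which of your objects a filter would actually catch. The sketch below is a rough local approximation of the filter choices above, not S3's own evaluator: `SampleObject` and `matches_filter` are hypothetical names, conditions are combined with AND, tags must match key and value exactly, and the size limits are treated as strict.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SampleObject:
    """A stand-in for an S3 object when dry-running a filter locally (hypothetical)."""
    key: str
    size: int  # bytes
    tags: Dict[str, str] = field(default_factory=dict)


def matches_filter(obj: SampleObject, prefix: str = "",
                   tags: Optional[Dict[str, str]] = None,
                   size_greater_than: Optional[int] = None,
                   size_less_than: Optional[int] = None) -> bool:
    """Return True if `obj` satisfies every condition given (conditions are ANDed)."""
    if not obj.key.startswith(prefix):  # Prefix: key must start with this text
        return False
    for key, value in (tags or {}).items():  # Tags: each key/value must match exactly
        if obj.tags.get(key) != value:
            return False
    if size_greater_than is not None and obj.size <= size_greater_than:
        return False  # must be strictly larger than the limit
    if size_less_than is not None and obj.size >= size_less_than:
        return False  # must be strictly smaller than the limit
    return True


if __name__ == "__main__":
    samples = [
        SampleObject("logs/2024/app.log", 5_000),
        SampleObject("logs/tiny.log", 10),
        SampleObject("images/cat.png", 200_000, {"team": "web"}),
    ]
    # ['logs/2024/app.log']
    print([o.key for o in samples if matches_filter(o, prefix="logs/", size_greater_than=128)])
```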

Now choose what happens during each stage of an object’s life:

Under Lifecycle rule actions, pick one or more options such as:

Transition current versions between storage classes—for example move items older than 30 days into S3 Glacier Instant Retrieval,

Expire current versions—to delete them after their useful life,

Permanently delete noncurrent versions—in versioned buckets only,

Delete expired object delete markers/incomplete multipart uploads—to clean up unused space.
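If you later manage the same rules from the CLI, each console action corresponds to an element in the lifecycle JSON. The sketch below records that mapping (console wording paraphrased from the list above) and builds a hypothetical cleanup rule for the last action. S3 does not allow `ExpiredObjectDeleteMarker` in the same `Expiration` element as `Days` or `Date`, so this rule has no day-based expiration.

```python
import json

# Console action (paraphrased) -> lifecycle configuration element it produces
CONSOLE_TO_JSON = {
    "Transition current versions": "Transitions",
    "Expire current versions": "Expiration",
    "Permanently delete noncurrent versions": "NoncurrentVersionExpiration",
    "Delete expired object delete markers": "Expiration.ExpiredObjectDeleteMarker",
    "Delete incomplete multipart uploads": "AbortIncompleteMultipartUpload",
}


def build_cleanup_rule(upload_days: int = 7) -> dict:
    """Hypothetical whole-bucket cleanup rule."""
    return {
        "ID": "CleanupMarkersAndUploads",  # hypothetical rule name
        "Status": "Enabled",
        "Filter": {"Prefix": ""},  # empty prefix matches every object
        # Remove delete markers that have no noncurrent versions left behind them
        "Expiration": {"ExpiredObjectDeleteMarker": True},
        # Abort multipart uploads still incomplete N days after they started
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": upload_days},
    }


if __name__ == "__main__":
    print(json.dumps({"Rules": [build_cleanup_rule()]}, indent=2))
```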

Set timing details next; enter how many days after creation each action should occur (e.g., transition at 30 days; expire at 365 days).
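AWS documents how it counts these days: it adds the number of days to the object's creation time and rounds the result up to midnight UTC at the start of the next day (for noncurrent-version actions, the count starts when the version becomes noncurrent). The action itself can run some time after that point. The hypothetical helper below applies that rule to the 30-day and 365-day example.

```python
from datetime import datetime, timedelta, timezone


def eligible_at(created: datetime, days: int) -> datetime:
    """Approximate when an object becomes eligible for a day-based lifecycle action.

    Adds `days` to the creation time, then rounds up to midnight UTC at the
    start of the next day. S3 may actually run the action later than this.
    """
    if created.tzinfo is None:
        raise ValueError("created must be timezone-aware")
    target = created.astimezone(timezone.utc) + timedelta(days=days)
    next_day = target.date() + timedelta(days=1)
    return datetime(next_day.year, next_day.month, next_day.day, tzinfo=timezone.utc)


if __name__ == "__main__":
    uploaded = datetime(2024, 1, 15, 10, 30, tzinfo=timezone.utc)  # example upload time
    print("transition eligible:", eligible_at(uploaded, 30))   # 2024-02-15 00:00:00+00:00
    print("expiration eligible:", eligible_at(uploaded, 365))  # 2025-01-15 00:00:00+00:00
```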

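Review everything carefully before clicking Create rule at the bottom right. The rule is active as soon as it is saved, but S3 applies lifecycle actions asynchronously, so there can be a delay before objects are actually transitioned or deleted.

If you plan to use noncurrent-version actions, turn on versioning first (Properties > Bucket Versioning): actions such as permanently deleting noncurrent versions only have something to act on in buckets that keep older versions.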

Always test new rules on sample data before applying them broadly—you don’t want accidental deletions!

Method 2: Configuring S3 Lifecycle Policies with AWS CLI

For those comfortable with command-line tools—or who need precise control across many buckets—the AWS CLI lets you define S3 lifecycle policies using JSON files applied via simple commands.

Start by creating a JSON file called lifecycle.json describing your desired policy structure:

```json
{
  "Rules": [
    {
      "ID": "MoveLogsToStandardIA",
      "Status": "Enabled",
      "Filter": {
        "Prefix": "logs/"
      },
      "Transitions": [
        {
          "Days": 30,
          "StorageClass": "STANDARD_IA"
        }
      ],
      "Expiration": {
        "Days": 365
      }
    }
  ]
}
```

This example moves any object whose key starts with “logs/” into S3 Standard-IA after thirty days, then deletes it after one year, which is perfect for rotating log archives!

To apply this policy, run:

```shell
aws s3api put-bucket-lifecycle-configuration --bucket YOUR_BUCKET_NAME --lifecycle-configuration file://lifecycle.json
```

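Replace YOUR_BUCKET_NAME with your actual bucket name, and make sure your credentials have the s3:PutLifecycleConfiguration permission. This command replaces any lifecycle configuration the bucket already has, so include every rule you want to keep in the file.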
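If you prefer scripting in Python, the boto3 SDK's S3 client has a `put_bucket_lifecycle_configuration` method that accepts the same JSON structure and, like the CLI command, replaces the existing configuration. In this sketch the function names are hypothetical, and the client is passed in (assumed to be `boto3.client("s3")`) so the code can be tried with a stub and no AWS credentials.

```python
import json
from pathlib import Path


def load_config(path: str = "lifecycle.json") -> dict:
    """Read a lifecycle configuration file such as the lifecycle.json example."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def apply_config(client, bucket: str, config: dict) -> None:
    """Send `config` to `bucket` through an S3 client.

    `client` is assumed to be a boto3 S3 client; the call replaces the bucket's
    existing lifecycle rules, just like the CLI command.
    """
    if not config.get("Rules"):
        raise ValueError("config must contain a non-empty 'Rules' list")
    client.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


# Usage with real credentials (requires `pip install boto3`):
#   import boto3
#   apply_config(boto3.client("s3"), "YOUR_BUCKET_NAME", load_config())
```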

You can check existing configurations anytime using:

```shell
aws s3api get-bucket-lifecycle-configuration --bucket YOUR_BUCKET_NAME
```

To remove the bucket's entire lifecycle configuration (every rule at once), run:

```shell
aws s3api delete-bucket-lifecycle --bucket YOUR_BUCKET_NAME
```
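Because delete-bucket-lifecycle removes every rule, dropping just one rule means fetching the configuration with get-bucket-lifecycle-configuration, removing that rule, and applying the rest with put-bucket-lifecycle-configuration. The hypothetical helper below handles the middle step; it keeps only the `Rules` list from the fetched output, and if no rules would remain it tells you to delete the configuration instead.

```python
import json


def without_rule(config: dict, rule_id: str) -> dict:
    """Return a copy of `config` with the rule whose ID is `rule_id` removed.

    Only the Rules list is carried over from the fetched configuration.
    """
    rules = config.get("Rules", [])
    remaining = [rule for rule in rules if rule.get("ID") != rule_id]
    if len(remaining) == len(rules):
        raise KeyError(f"no rule with ID {rule_id!r}")
    if not remaining:
        raise ValueError("no rules left; use delete-bucket-lifecycle instead")
    return {"Rules": remaining}


# Workflow sketch (file names are hypothetical):
#   aws s3api get-bucket-lifecycle-configuration --bucket YOUR_BUCKET_NAME > current.json
#   then in Python:
#       with open("current.json") as src, open("lifecycle.json", "w") as dst:
#           json.dump(without_rule(json.load(src), "MoveLogsToStandardIA"), dst, indent=2)
#   aws s3api put-bucket-lifecycle-configuration --bucket YOUR_BUCKET_NAME \
#       --lifecycle-configuration file://lifecycle.json
```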

Remember: always test new JSON configurations against staging or test buckets first! Mistakes here can cause permanent data loss, especially when rules are applied automatically across multiple environments through scripts or CI/CD pipelines.
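A quick local pre-flight check can catch a few common mistakes before a file even reaches a staging bucket. The `check_lifecycle` helper below is hypothetical and covers only a handful of constraints (valid `Status` values, unique IDs, the 1,000-rule limit, and the 30-day minimum for Days-based transitions to Standard-IA or One Zone-IA), plus one heuristic of its own; S3 remains the authority on what it accepts.

```python
IA_CLASSES = {"STANDARD_IA", "ONEZONE_IA"}  # Days-based transitions need Days >= 30


def check_lifecycle(config: dict) -> list:
    """Return human-readable problems found in a lifecycle configuration."""
    problems = []
    rules = config.get("Rules", [])
    if not rules:
        problems.append("configuration has no rules")
    if len(rules) > 1000:  # S3 limit on rules per lifecycle configuration
        problems.append("more than 1,000 rules")
    seen_ids = set()
    for index, rule in enumerate(rules):
        rule_id = rule.get("ID")
        name = rule_id if rule_id is not None else f"rule #{index}"
        if rule_id is not None:
            if rule_id in seen_ids:  # rule IDs must be unique
                problems.append(f"{name}: duplicate rule ID")
            seen_ids.add(rule_id)
        if rule.get("Status") not in ("Enabled", "Disabled"):
            problems.append(f"{name}: Status must be 'Enabled' or 'Disabled'")
        expire_days = rule.get("Expiration", {}).get("Days")
        for t in rule.get("Transitions", []):
            days = t.get("Days")
            if days is None:
                continue  # date-based transitions are not checked here
            if t.get("StorageClass") in IA_CLASSES and days < 30:
                problems.append(f"{name}: {t['StorageClass']} transition needs Days >= 30")
            # Heuristic of this sketch: a transition on or after expiration is likely a mistake
            if expire_days is not None and days >= expire_days:
                problems.append(f"{name}: transition at day {days} is not before "
                                f"expiration at day {expire_days}")
    return problems


# Usage sketch: check_lifecycle(json.load(open("lifecycle.json"))) -> [] when nothing is flagged
```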

How to Protect Object Storage with Vinchin Backup & Recovery?

After implementing S3 lifecycle policies, it's important to protect critical cloud data beyond what native automation alone provides. Vinchin Backup & Recovery is an enterprise-grade file backup solution that supports most mainstream file storage platforms, including S3-compatible object storage, Windows/Linux servers, and NAS devices, with performance tailored for demanding environments. Its proprietary technologies, such as simultaneous scanning/data transfer and merged file transmission, deliver backup speeds far exceeding those offered by other vendors.

Vinchin Backup & Recovery stands out thanks to features like incremental backup, wildcard filtering, multi-level compression, robust data encryption, and cross-platform restore, which lets data from any backup job be restored to a file server, NAS, or object storage destination. Together these features maximize efficiency while keeping recovery secure and flexible across diverse infrastructures.

The web console is designed for simplicity:

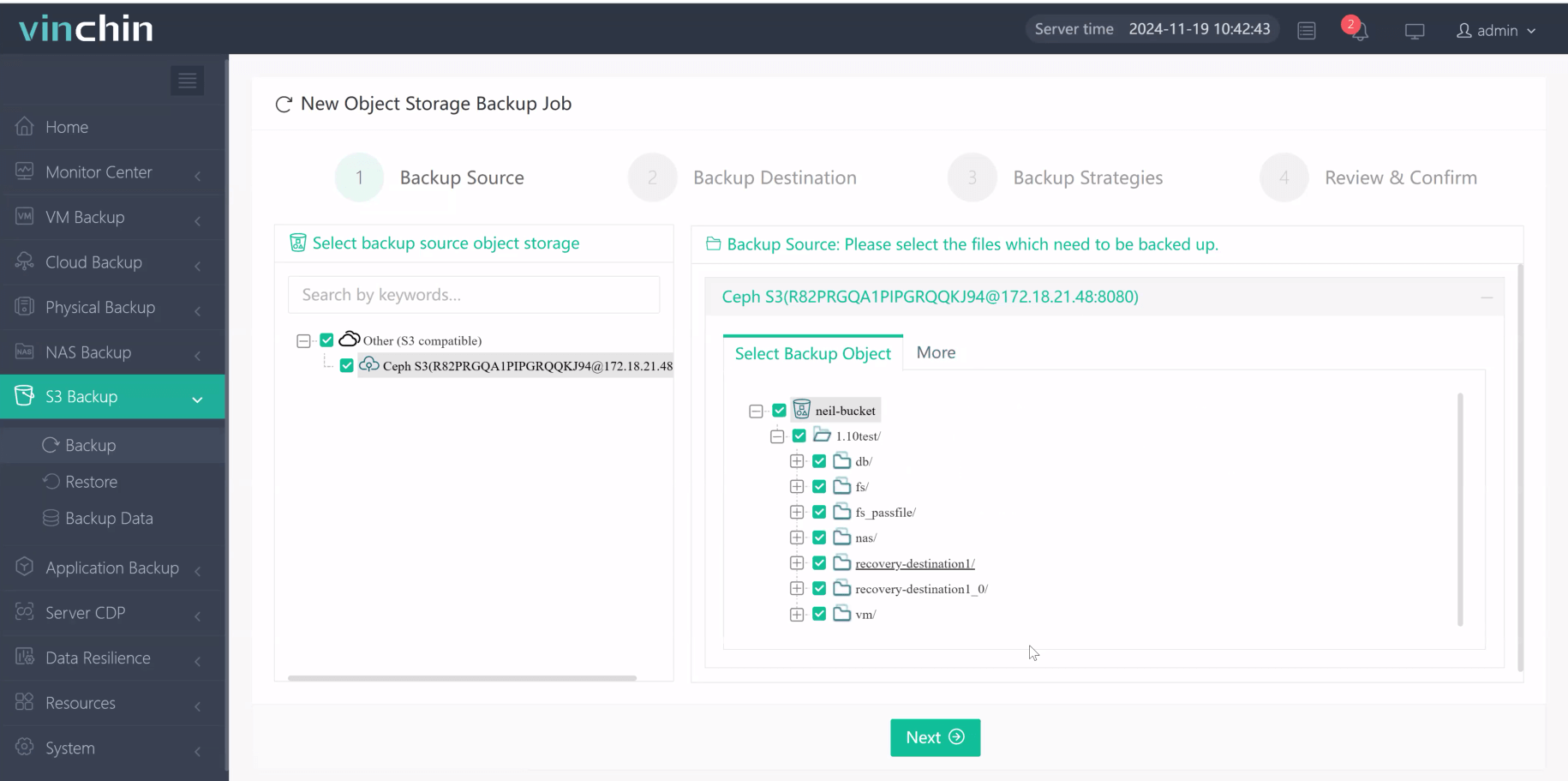

Step 1. Select the object storage files to back up

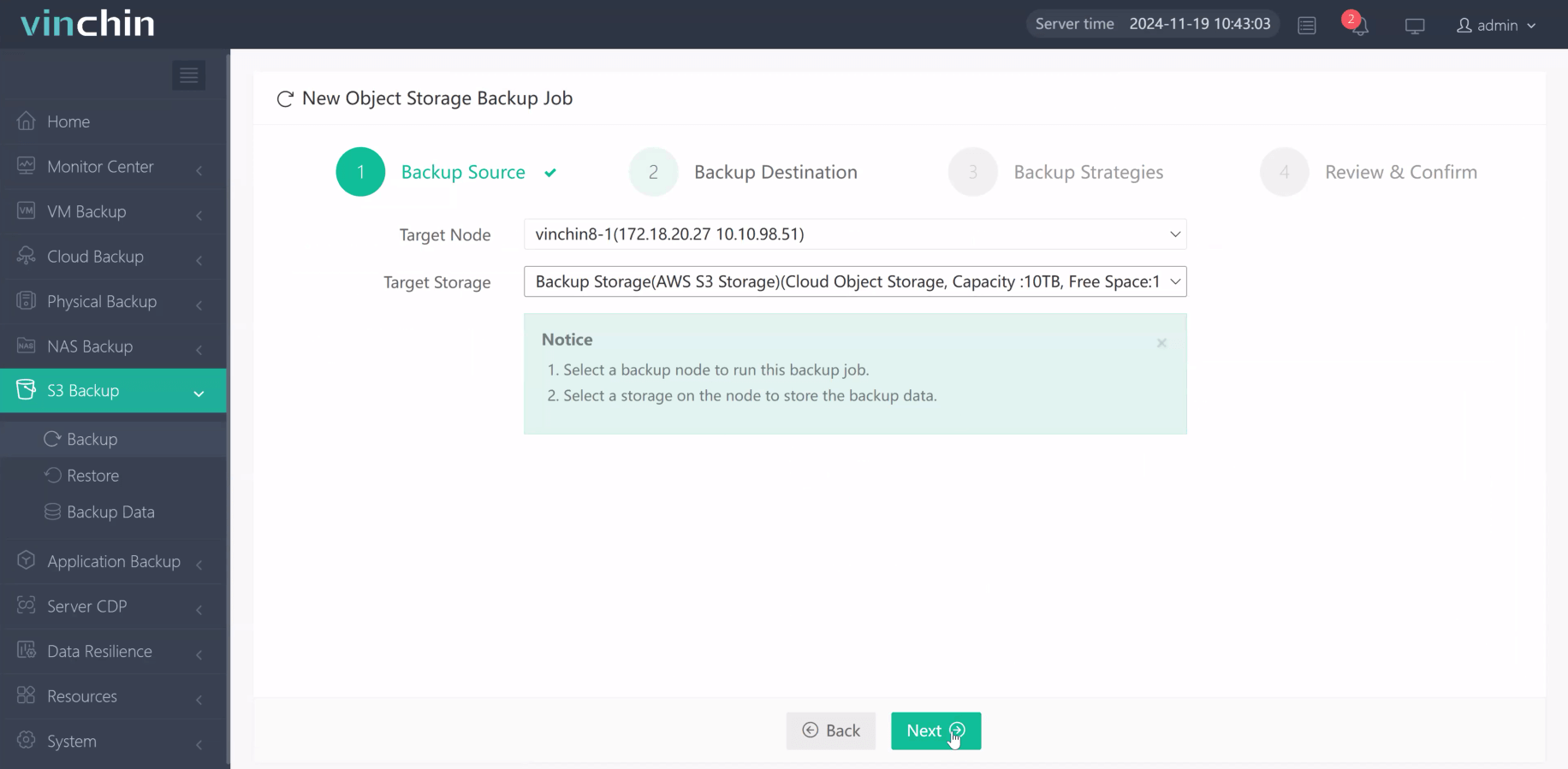

Step 2. Choose backup storage

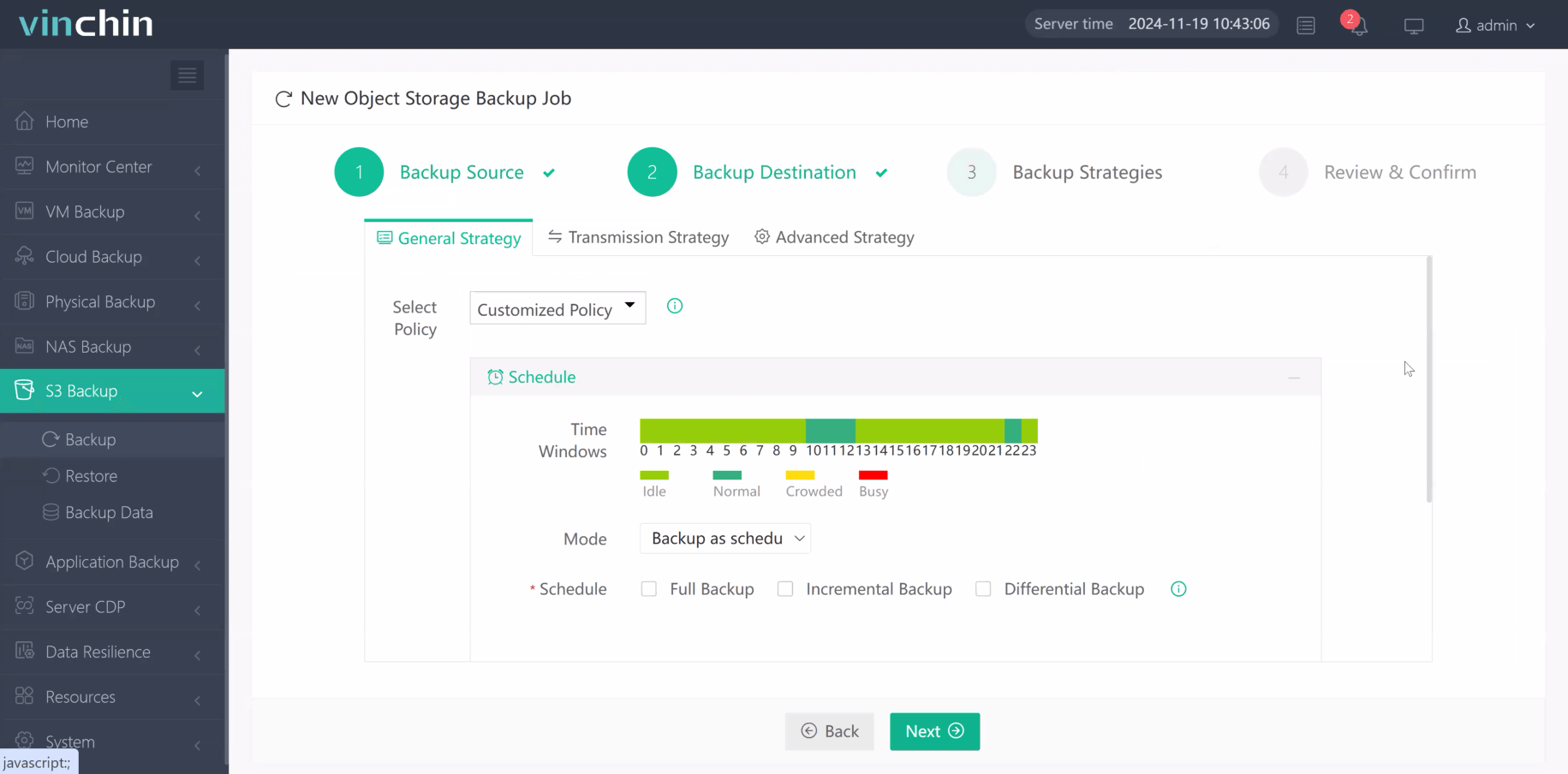

Step 3. Define backup strategy

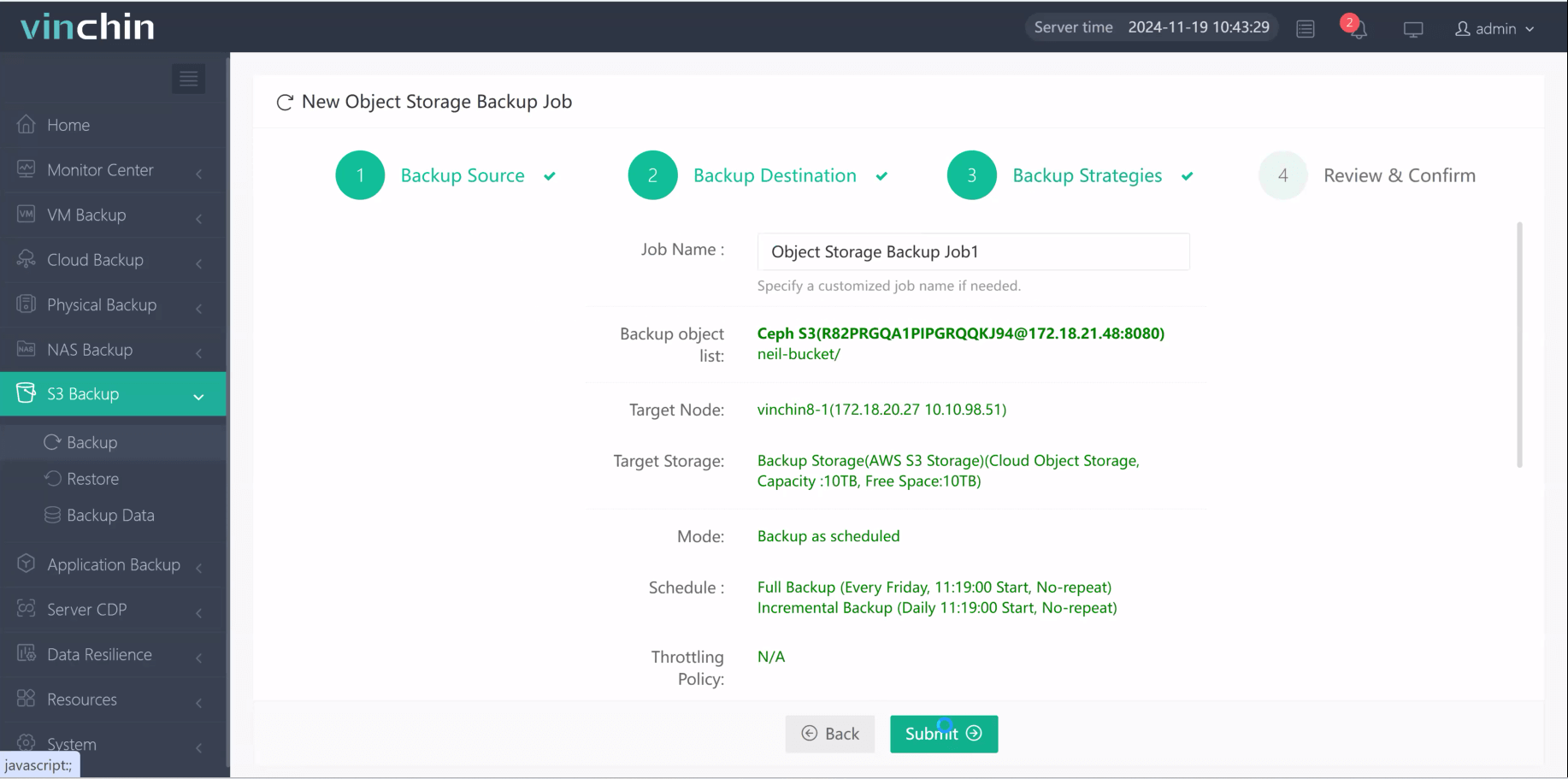

Step 4. Submit job

Recognized globally with high customer satisfaction ratings and trusted by enterprises worldwide, Vinchin Backup & Recovery offers a full-featured 60-day free trial so you can experience its protection firsthand.

FAQs About Managing S3 Lifecycle Policies

Q1: Can I apply different S3 lifecycle policies to different folders inside my bucket?

Yes. S3 has no real folders, but key prefixes such as “logs/” or “tmp/” act like them, so you can create a separate rule for each folder with a Prefix filter that matches its path.
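As a sketch of the one-rule-per-folder approach, the hypothetical helper below turns a mapping of prefixes to retention days into one rule each; the prefixes, day counts, and ID scheme are example values. Keeping the prefixes from overlapping (for example, not pairing “logs/” with “logs/app/”) means each object is governed by a single rule, which is easier to reason about than relying on how S3 resolves overlapping rules.

```python
import json


def rules_per_prefix(expiry_by_prefix: dict) -> dict:
    """Hypothetical helper: one expiration rule per folder-like prefix."""
    rules = []
    for prefix, days in expiry_by_prefix.items():
        rules.append({
            # e.g. "exports/daily/" -> "expire-exports-daily" (hypothetical ID scheme)
            "ID": "expire-" + prefix.strip("/").replace("/", "-"),
            "Status": "Enabled",
            "Filter": {"Prefix": prefix},
            "Expiration": {"Days": days},
        })
    return {"Rules": rules}


if __name__ == "__main__":
    config = rules_per_prefix({"logs/": 365, "tmp/": 7, "exports/daily/": 30})
    print(json.dumps(config, indent=2))
```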

Q2: How do I prevent accidental deletion of important business records?

Design and test your filters carefully, and enable extra safeguards such as S3 Versioning and S3 Object Lock before you activate expiration rules broadly. MFA Delete also protects object versions, but AWS notes that it cannot be used together with lifecycle configurations.

Q3: Do S3 lifecycle policies carry over automatically to other regions when Cross-Region Replication is enabled?

No. Lifecycle rules are configured per bucket and are not replicated, so you must set up rules on each destination bucket separately; they do not have to match the source bucket's rules.

Conclusion

S3 lifecycle policies give operations teams powerful automation for managing cloud storage efficiently and cutting costs over the long term, while backup solutions like Vinchin add protection against modern threats and seamless recovery options for enterprise-scale workloads.
