-

What Is MySQL Database Backup?

-

Why Use Command Line for Backup?

-

Backup MySQL with mysqldump

-

Restoring a Backup from Command Line

-

Backup MySQL with mysqlpump

-

Automating MySQL Backups with Scripts & Scheduling

-

Vinchin Backup & Recovery: Enterprise-Level Protection for Your MySQL Backups

-

Backup MySQL database command line FAQs

-

Conclusion

Backing up your MySQL database is not just a best practice—it is essential for every IT operations team. Data loss can strike at any time due to hardware failure, human error, or cyberattacks. Without a reliable backup strategy, you risk losing critical business data. For many organizations today, command-line tools are the backbone of automated backup workflows. They fit seamlessly into DevOps pipelines and disaster recovery plans because they offer direct control and easy scripting.

In this article, you will learn how to back up MySQL databases using command-line tools—from basic commands to advanced techniques that suit both small businesses and enterprise environments. We’ll also cover automation strategies, validation methods to ensure your backups work when needed most, and how Vinchin can help protect your MySQL data.

What Is MySQL Database Backup?

A MySQL database backup is a copy of your database’s structure and data saved in a format that allows you to restore it if needed. There are two main types: logical backups (like SQL statements) and physical backups (raw data files). Logical backups are common because they’re portable across systems—they contain SQL commands that recreate tables and insert data during restoration.

Physical backups copy actual database files from disk but often require matching server versions for recovery. Most administrators use logical backups for flexibility unless strict performance or point-in-time recovery needs dictate otherwise.

Why Use Command Line for Backup?

The command line gives you direct control over backup processes—no need for graphical interfaces or extra software layers. This approach offers several advantages:

First, it’s flexible; you can script complex tasks or integrate them into larger workflows using shell scripts or automation tools like Ansible or Jenkins. Second, command-line utilities run on any platform where MySQL operates—Linux, Windows, macOS—so there’s no vendor lock-in.

Finally, automation becomes simple: schedule regular jobs with cron or systemd timers so that no one has to remember manual steps each day. For large environments managing dozens—or hundreds—of databases across servers, these benefits add up quickly.

Backup MySQL with mysqldump

The mysqldump tool is the standard way to create logical backups of MySQL databases. It generates SQL statements that can recreate your database structure and data exactly as they were at backup time.

Let’s walk through its usage step by step:

Basic Backup of a Single Database

To back up a single database interactively:

mysqldump -u username -p database_name > backup_file.sql

This prompts you for your password before exporting all tables in database_name into backup_file.sql. Replace username, database_name, and backup_file.sql as needed.

Back Up Multiple Databases

If you need more than one database in one file:

mysqldump -u username -p --databases db1 db2 > multi_backup.sql

The --databases option includes necessary CREATE DATABASE statements so each schema can be restored separately later.

Full Server Backup: All Databases

For disaster recovery or migration purposes:

mysqldump -u username -p --all-databases > all_databases_backup.sql

This captures every schema on your server—including system schemas like mysql or information_schema if present.

Schema Only (No Data)

Sometimes only table definitions matter—for example when cloning an environment without sensitive production records:

mysqldump -u username -p --no-data database_name > schema_backup.sql

The --no-data flag skips row inserts entirely; only DDL statements appear in the output file.

Data Only (No Table Definitions)

If you want just raw records without recreating tables:

mysqldump -u username -p --no-create-info database_name > data_only_backup.sql

Here --no-create-info omits table creation lines; only INSERTs remain.

Back Up Specific Tables

You don’t always need everything—sometimes just key tables matter:

mysqldump -u username -p database_name table1 table2 > tables_backup.sql

List as many tables after the schema name as required; only those get exported.

Restoring a Backup from Command Line

Restoration is just as important as making good backups! Here’s how it works step by step:

1. If restoring onto an empty server—or creating new schemas—first log into mysql client:

mysql -u username -p

Then run:

CREATE DATABASE IF NOT EXISTS target_database; EXIT;

2. Next import your .sql file:

mysql -u username -p target_database < backup_file.sql

If your dump contains multiple databases (using --databases), omit specifying a target schema—the dump itself handles creation automatically during import:

mysql -u username -p < multi_backup.sql

Watch out for errors about missing users or privileges; review logs carefully after restores!

For very large files consider piping through compression utilities like gzip:

gunzip < compressed_backup.sql.gz | mysql -u username -p target_database

Or compress during export:

mysqldump ... | gzip > mybackup_$(date +%F).sql.gz

and decompress during restore as above.

Backup MySQL with mysqlpump

mysqlpump is another official tool designed by Oracle/MySQL engineers to improve upon some limitations of mysqldump—especially speed via parallel processing threads . It’s available starting from MySQL 5.7+ but not earlier versions; check compatibility before relying on it in production!

Here’s what makes it different—and sometimes better—for large-scale jobs:

Basic Usage Example

To back up one schema:

mysqlpump -u username -p database_name > pump_backup_file.sql

Like mysqldump this prompts interactively for credentials unless stored securely.

Multiple Databases at Once

Just list them after --databases:

mysqlpump -u username –p --databases db1 db2 > multi_pumped_backup.sql

All included schemas get their own DDL/DML blocks.

Parallel Processing Explained

By default mysqlpump uses multiple threads per job—which speeds things up dramatically compared to single-threaded mysqldump especially when dealing with many independent tables! Control thread count using --default-parallelism=4 (or higher/lower):

mysqlpump –u user –p --default-parallelism=4 big_db > fast_parallel_dump.sql

Be careful though: more threads mean higher CPU/disk load while running!

Automating MySQL Backups with Scripts & Scheduling

Manual commands work fine once—but real-world ops teams automate everything possible! Automation reduces human error risk while ensuring regular coverage even overnight/weekends/holidays.

Start simple—with shell scripts wrapping core logic plus logging/error handling:

Sample Bash Script With Logging & Rotation

Here’s an example script saving daily dumps plus rotating old ones offsite weekly:

#!/bin/bash

BACKUP_DIR="/var/backups/mysql"

DATE=$(date +%F)

LOGFILE="$BACKUP_DIR/backup_$DATE.log"

DB_USER="youruser"

DB_PASS="yourpass" # Or use .my.cnf!

DATABASE="production"

mkdir –p $BACKUP_DIR

echo "Starting backup at $(date)" >> $LOGFILE

if mysqldump –u $DB_USER –p$DB_PASS $DATABASE | gzip > $BACKUP_DIR/${DATABASE}_$DATE.sql.gz ; then

echo "Backup successful" >> $LOGFILE

else

echo "Backup failed!" >> $LOGFILE

fi

# Remove files older than 14 days

find $BACKUP_DIR/*.gz –mtime +14 –delete

echo "Cleanup done" >> $LOGFILE

echo "Finished at $(date)" >> $LOGFILE

exit 0Store this script somewhere secure; make executable (chmod +x) then schedule via cron:

Scheduling With Cron

Edit crontab (crontab –e) then add something like this line for daily runs at 2am:

0 2 * /path/to/your_script.sh

Cron handles timing reliably—even across reboots—as long as permissions allow access.

Offsite Copies & Retention Policies

For added safety sync completed dumps offsite regularly using rsync/scp/cloud CLI tools such as AWS S3 CLI depending on company policy.

Automated retention policies prevent disk bloat by deleting oldest files after N days/weeks/months based on compliance requirements.

Vinchin Backup & Recovery: Enterprise-Level Protection for Your MySQL Backups

While manual scripting provides flexibility, organizations seeking robust protection often turn to professional solutions tailored specifically for enterprise needs. Vinchin Backup & Recovery stands out as an advanced platform supporting most mainstream databases—including first-class support for MySQL alongside Oracle, SQL Server, MariaDB, PostgreSQL, PostgresPro, and MongoDB—to deliver comprehensive coverage across diverse IT environments.

Vinchin Backup & Recovery offers features highly relevant to modern operations teams such as incremental backup capabilities tailored to minimize storage usage and network load; batch database backup management; flexible data retention policies including GFS retention policy support; cloud backup integration with tape archiving options; and ransomware protection built-in by design—all streamlining compliance while reducing administrative overhead.

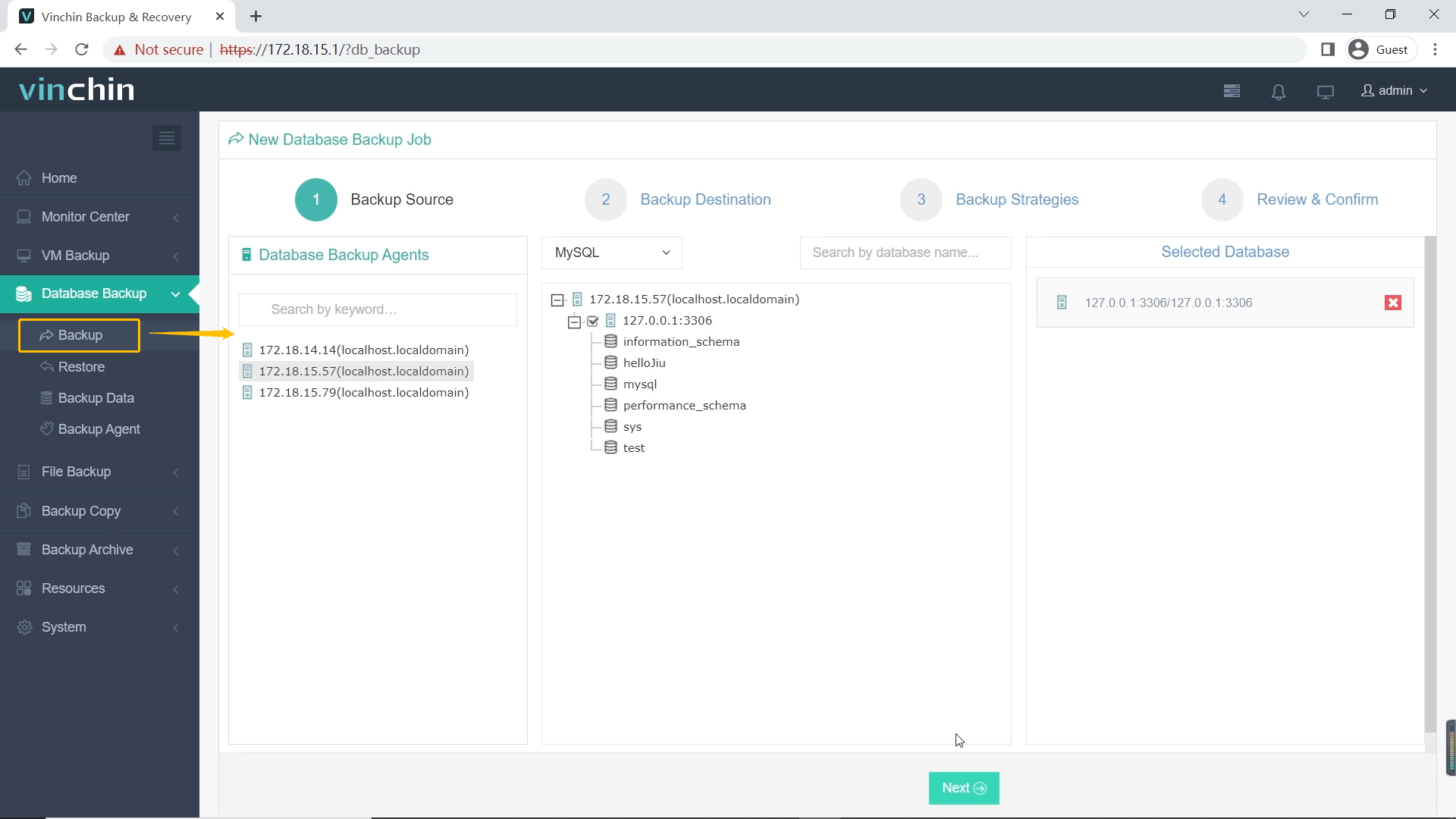

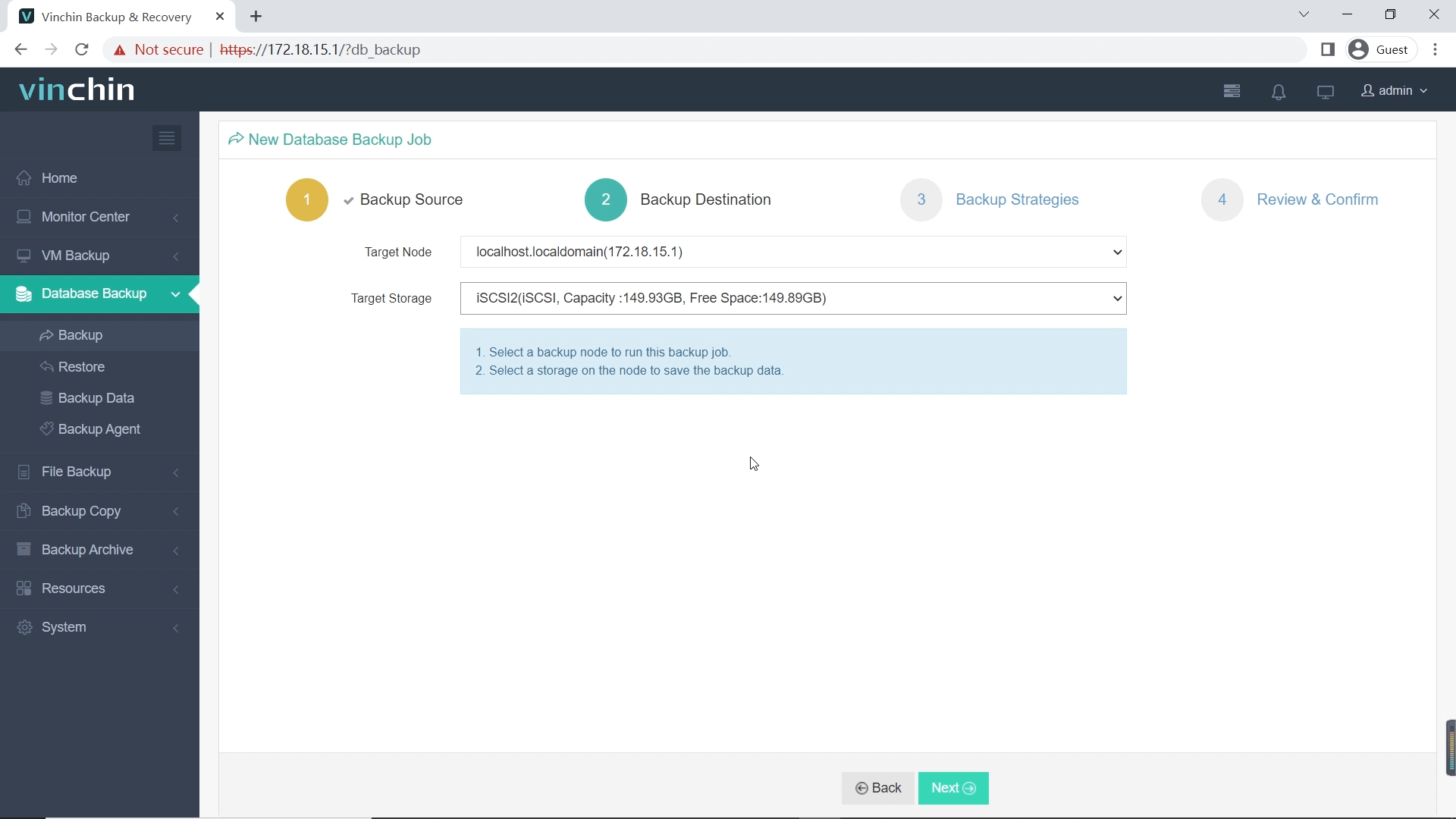

Its web console makes safeguarding your critical assets remarkably straightforward:

Step 1. Select the MySQL database to back up;

Step 2. Choose the appropriate storage destination;

Step 3. Define the desired backup strategy according to business requirements;

Step 4. Submit the job—all within an intuitive interface accessible from anywhere.

Trusted globally by thousands of enterprises—with top ratings from industry analysts—Vinchin Backup & Recovery offers a fully featured 60-day free trial so you can experience its power firsthand before committing further.

Backup MySQL database command line FAQs

Q1: How do I alert my team automatically if nightly backups fail?

A1: Add email notifications within scripts using mailx/sendmail utilities triggered when exit codes indicate failure—or integrate alerts into monitoring platforms like Prometheus/Nagios via webhook calls.

Q2: Can I minimize downtime during large production exports?

A2: Use replicas/read-only slaves dedicated solely to running heavy export jobs so primary workloads stay unaffected—even better combine snapshot-based approaches where supported.

Conclusion

Command-line tools give operations teams unmatched power over their backup routines—from scripting basics with mysqldump/mysqlpump through robust automation/testing frameworks ready for enterprise scale-out needs today! For streamlined management beyond DIY scripting Vinchin delivers agentless protection purpose-built around modern IT realities—start safeguarding critical data now before disaster hits tomorrow!

Share on: