- What is S3 Live Replication?
- Why Use S3 Live Replication?
- Method 1: Enable S3 Live Replication via AWS Console
- Method 2: Set Up S3 Live Replication With AWS CLI
- Enterprise File Backup Solution for Amazon S3 Object Storage
- S3 Live Replication FAQs
- Conclusion

Data loss can cripple business operations in seconds. Downtime frustrates users and costs money every minute it drags on. That’s why many organizations rely on cloud storage solutions like Amazon S3, but even these need extra protection against accidents or disasters. Enter S3 live replication: a powerful tool to help you keep your data safe, available, and compliant across regions or accounts.

In this guide, you’ll learn what S3 live replication does behind the scenes, why it matters for your organization’s resilience strategy, how to set it up using both graphical tools and command-line automation, and how to avoid common mistakes along the way. We’ll also show where native S3 features end and where specialized backup solutions like Vinchin step in to fill critical gaps.

What is S3 Live Replication?

S3 live replication automatically copies new or updated objects from one Amazon S3 bucket (the source) to another bucket (the destination). This process works asynchronously—meaning changes are detected quickly but may take seconds or minutes to appear at the destination depending on system load or network conditions. This model is called eventual consistency: all changes are guaranteed to arrive but not instantly synchronized.

Replication can happen within a single region (Same-Region Replication, SRR) or between different regions (Cross-Region Replication, CRR). You can also replicate between buckets owned by different AWS accounts if permissions allow it.

A few important points:

Only objects created after you enable a replication rule are copied automatically.

Existing objects require separate action using S3 Batch Replication.

Both source and destination buckets must have versioning enabled before setting up any rules; turning off versioning later breaks ongoing replication.

Object metadata and object tags are replicated along with the object data; prefixes and tags can also be used in a rule's filter to limit which objects replicate.

For full technical details about supported features and limitations, see AWS documentation.

Why Use S3 Live Replication?

Why do so many organizations invest time configuring S3 live replication? Because it solves several real-world problems:

First comes compliance: Many regulations require storing certain data within specific geographic boundaries—or maintaining redundant copies across locations for legal reasons.

Second is disaster recovery: If one region suffers an outage due to hardware failure or natural disaster, having replicas elsewhere means your business keeps running with minimal interruption.

Third is performance optimization: By replicating data closer to end-users around the world (for example from Europe to Asia), you reduce latency when they access files—making applications feel faster everywhere.

Fourth comes operational flexibility: You might want production data available instantly in test environments—or share information securely across departments without manual copying chores.

Finally, and perhaps most importantly, live replication helps protect against accidental deletion or overwrites. Permanent deletions of specific object versions are not replicated, delete markers replicate only if you opt in, and versioning keeps earlier versions of overwritten objects, so another copy stays under your control.

Of course, these benefits come with trade-offs: cross-region transfers incur additional costs for bandwidth and requests; managing multiple buckets adds complexity; and not every object replicates without extra configuration (objects encrypted with SSE-KMS, for example, replicate only if the rule explicitly opts in). For most admins responsible for uptime and compliance, though, the added resilience is worth the cost.

Method 1: Enable S3 Live Replication via AWS Console

Setting up S3 live replication through the AWS Management Console is straightforward, but each step must be followed carefully.

Before starting:

Make sure both source AND destination buckets have versioning enabled; otherwise you cannot proceed.

Here’s how you do it:

1. Log in to the AWS Management Console then open the S3 service

2. Select your source bucket from the list

3. Click on the Management tab then scroll down to find Replication rules

4. Click on Create replication rule

5. Enter a name for your rule then set its status as Enabled

6. Under Choose a rule scope, pick either “Apply to all objects in the bucket” if you want everything copied—or define filters using prefixes/tags if only certain files should replicate

7. In the Destination section select your target bucket; if it's owned by another account enter their Account ID plus Bucket Name

8. If prompted that versioning isn’t enabled yet click on Enable

9. Under IAM role choose either “Create a new role” (recommended unless you already have one) OR select an existing role with proper permissions

10. (Optional) Adjust settings such as changing object ownership at destination (“Change object ownership to destination bucket owner”), replicating delete markers (“Replicate delete markers”), or altering storage class (“Change storage class”) as needed

11. (Optional) Enable “Replication Time Control” if compliance requires predictable timing; it is designed to replicate most objects within 15 minutes and is backed by an SLA

12. Review all settings then click on Save

Once done upload any new file into your source bucket—it should appear automatically at its replica location within minutes! Remember though: only newly added/modified files get copied going forward unless batch jobs are used retroactively.
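The optional settings in steps 10 and 11 correspond to fields in the replication configuration that the console writes on your behalf. As a rough sketch of that mapping, the function below prints a configuration with all four options switched on: delete marker replication, ownership change, a different storage class, and Replication Time Control (which also requires the Metrics block). The function name, the rule ID, and the STANDARD_IA storage class are illustrative assumptions, not values taken from the console.

```shell
#!/bin/sh
# print_advanced_config ROLE_ARN DEST_BUCKET DEST_ACCOUNT_ID
# Hypothetical helper: prints a replication configuration in which
#   DeleteMarkerReplication  = "Replicate delete markers"
#   AccessControlTranslation = "Change object ownership to destination bucket owner"
#   StorageClass             = "Change storage class" (STANDARD_IA is only an example)
#   ReplicationTime/Metrics  = "Replication Time Control"
print_advanced_config() {
    role_arn="$1"
    dest_bucket="$2"
    dest_account="$3"
    cat <<EOF
{
  "Role": "$role_arn",
  "Rules": [
    {
      "ID": "AdvancedOptionsExample",
      "Status": "Enabled",
      "Priority": 1,
      "Filter": {"Prefix": ""},
      "DeleteMarkerReplication": {"Status": "Enabled"},
      "Destination": {
        "Bucket": "arn:aws:s3:::$dest_bucket",
        "Account": "$dest_account",
        "AccessControlTranslation": {"Owner": "Destination"},
        "StorageClass": "STANDARD_IA",
        "ReplicationTime": {"Status": "Enabled", "Time": {"Minutes": 15}},
        "Metrics": {"Status": "Enabled", "EventThreshold": {"Minutes": 15}}
      }
    }
  ]
}
EOF
}

# Usage (all three arguments are placeholders):
#   print_advanced_config arn:aws:iam::ACCOUNT_ID:role/REPLICATION_ROLE \
#       DESTINATION_BUCKET DEST_ACCOUNT_ID > replication-advanced.json
```

Comparing this output with what `aws s3api get-bucket-replication` returns for a console-created rule is a quick way to confirm which options were actually saved.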

Method 2: Set Up S3 Live Replication With AWS CLI

Prefer scripting deployments? The AWS Command Line Interface gives full control over setup—even allowing bulk automation across dozens of buckets/accounts simultaneously!

Before proceeding:

Confirm that both the source and destination buckets have versioning turned on:

aws s3api get-bucket-versioning --bucket SOURCE_BUCKET

aws s3api get-bucket-versioning --bucket DESTINATION_BUCKET

If either command does not report "Status": "Enabled", run:

aws s3api put-bucket-versioning --bucket SOURCE_BUCKET --versioning-configuration Status=Enabled

aws s3api put-bucket-versioning --bucket DESTINATION_BUCKET --versioning-configuration Status=Enabled
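When the same check has to run against many buckets, the check-then-enable step can be wrapped in a small helper. The sketch below assumes the AWS CLI is installed and configured; `ensure_versioning` is a hypothetical function name, not part of the CLI.

```shell
#!/bin/sh
# ensure_versioning BUCKET
# Enables versioning on BUCKET unless it is already enabled.
# Hypothetical helper that only wraps the two s3api calls shown above.
ensure_versioning() {
    bucket="$1"
    # With --query and --output text the CLI prints "Enabled", "Suspended",
    # or "None" (None means versioning was never configured on the bucket).
    status=$(aws s3api get-bucket-versioning --bucket "$bucket" \
        --query 'Status' --output text)
    if [ "$status" = "Enabled" ]; then
        echo "$bucket: versioning already enabled"
    else
        aws s3api put-bucket-versioning --bucket "$bucket" \
            --versioning-configuration Status=Enabled
        echo "$bucket: versioning enabled (was: $status)"
    fi
}

# Usage (bucket names are placeholders):
#   ensure_versioning SOURCE_BUCKET
#   ensure_versioning DESTINATION_BUCKET
```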

Next create an IAM role granting permission for cross-bucket writes—a common stumbling block! Here’s an example trust policy snippet:

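    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {"Service": "s3.amazonaws.com"},
          "Action": "sts:AssumeRole"
        }
      ]
    }

Then attach a permissions policy to the role. It should allow s3:GetReplicationConfiguration and s3:ListBucket on the source bucket; s3:GetObjectVersionForReplication, s3:GetObjectVersionAcl, and s3:GetObjectVersionTagging on the source objects; and s3:ReplicateObject, s3:ReplicateDelete, and s3:ReplicateTags on the destination objects.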
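Since IAM permissions are the common stumbling block here, it may help to see a complete permissions policy generated for a given bucket pair. The following is a minimal sketch that mirrors the usual three-statement layout (source bucket, source objects, destination objects); `print_replication_policy`, the file names, and the policy name in the usage notes are hypothetical.

```shell
#!/bin/sh
# print_replication_policy SOURCE_BUCKET DESTINATION_BUCKET
# Hypothetical helper: prints an IAM permissions policy for the
# replication role to stdout.
print_replication_policy() {
    src="$1"
    dst="$2"
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetReplicationConfiguration", "s3:ListBucket"],
      "Resource": "arn:aws:s3:::$src"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObjectVersionForReplication",
        "s3:GetObjectVersionAcl",
        "s3:GetObjectVersionTagging"
      ],
      "Resource": "arn:aws:s3:::$src/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:ReplicateObject", "s3:ReplicateDelete", "s3:ReplicateTags"],
      "Resource": "arn:aws:s3:::$dst/*"
    }
  ]
}
EOF
}

# Usage, assuming the trust policy above was saved as trust-policy.json:
#   print_replication_policy SOURCE_BUCKET DESTINATION_BUCKET > permissions.json
#   aws iam create-role --role-name REPLICATION_ROLE \
#       --assume-role-policy-document file://trust-policy.json
#   aws iam put-role-policy --role-name REPLICATION_ROLE \
#       --policy-name s3-replication-permissions \
#       --policy-document file://permissions.json
```

For cross-account replication the destination bucket owner also has to grant the role access through a bucket policy, which this sketch does not cover.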

Now build your actual configuration file (replication.json):

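    {
      "Role": "arn:aws:iam::ACCOUNT_ID:role/REPLICATION_ROLE",
      "Rules": [
        {
          "ID": "S3LiveReplicationRule",
          "Status": "Enabled",
          "Priority": 1,
          "Filter": {"Prefix": ""},
          "DeleteMarkerReplication": {"Status": "Disabled"},
          "Destination": {
            "Bucket": "arn:aws:s3:::DESTINATION_BUCKET"
          }
        }
      ]
    }

Replace the placeholders with your own values. Because the rule uses a Filter element, it must also set Priority and DeleteMarkerReplication; without them the configuration is rejected.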

Apply this config using:

aws s3api put-bucket-replication --bucket SOURCE_BUCKET --replication-configuration file://replication.json
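For the bulk automation mentioned at the start of this method, the same command can be driven from a list of bucket pairs. The sketch below assumes `replication.json` still contains the literal `DESTINATION_BUCKET` placeholder (with the role ARN already filled in) and that the role has permissions on every pair; both function names and the list file format are hypothetical.

```shell
#!/bin/sh
# apply_replication SOURCE_BUCKET DESTINATION_BUCKET [TEMPLATE]
# Renders the template for one destination and applies it to the source.
apply_replication() {
    src="$1"
    dst="$2"
    template="${3:-replication.json}"
    rendered=$(mktemp)
    # Bucket names cannot contain "/", so "/" is a safe sed delimiter.
    sed "s/DESTINATION_BUCKET/$dst/g" "$template" > "$rendered"
    aws s3api put-bucket-replication --bucket "$src" \
        --replication-configuration "file://$rendered" < /dev/null
    rc=$?
    rm -f "$rendered"
    return "$rc"
}

# apply_from_list LIST_FILE [TEMPLATE]
# LIST_FILE holds one "source destination" pair per line.
apply_from_list() {
    list="$1"
    tmpl="${2:-replication.json}"
    while read -r src dst; do
        [ -n "$src" ] || continue   # skip blank lines
        if apply_replication "$src" "$dst" "$tmpl"; then
            echo "ok: $src -> $dst"
        else
            echo "failed: $src -> $dst" >&2
        fi
    done < "$list"
}

# Usage:
#   apply_from_list bucket-pairs.txt replication.json
```

Rendering into a temporary file keeps the original template untouched, so the same file can be reused for every pair.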

To check status run:

aws s3api get-bucket-replication --bucket SOURCE_BUCKET

Want proof that specific files made it? Upload something new then use:

aws s3api head-object --bucket DESTINATION_BUCKET --key OBJECT_KEY

If nothing appears after several minutes double-check IAM permissions first—they’re often at fault!
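Because replication is asynchronous, a single check right after an upload can give a false negative. One option is to poll the source object instead: `head-object` on the source reports a `ReplicationStatus` of PENDING, COMPLETED, or FAILED. The loop below is a minimal sketch of that idea; `wait_for_replication` and its default retry values are assumptions.

```shell
#!/bin/sh
# wait_for_replication SOURCE_BUCKET OBJECT_KEY [MAX_TRIES] [SLEEP_SECONDS]
# Polls the source object's ReplicationStatus until it leaves PENDING.
# Returns 0 on COMPLETED, 1 on FAILED or after MAX_TRIES attempts.
wait_for_replication() {
    bucket="$1"
    key="$2"
    max_tries="${3:-30}"
    pause="${4:-10}"
    i=0
    while [ "$i" -lt "$max_tries" ]; do
        status=$(aws s3api head-object --bucket "$bucket" --key "$key" \
            --query 'ReplicationStatus' --output text)
        case "$status" in
            COMPLETED) echo "replicated: $key"; return 0 ;;
            FAILED)    echo "replication failed: $key" >&2; return 1 ;;
        esac
        i=$((i + 1))
        sleep "$pause"
    done
    echo "timed out waiting for $key (last status: $status)" >&2
    return 1
}

# Usage:
#   wait_for_replication SOURCE_BUCKET OBJECT_KEY
```

A status of `None` that never changes usually means the object is not covered by any rule, for example because it was uploaded before the rule existed or falls outside the filter.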

For advanced filtering use "Filter" blocks specifying prefixes/tags rather than "Prefix" alone; see AWS CLI docs for syntax examples.
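As an illustration of such a Filter block, the sketch below prints a rule that replicates only objects under a given prefix that also carry a given tag, combined with an `And` element. The function name, rule ID, and the example prefix and tag in the usage note are hypothetical. Delete marker replication is set to Disabled because it is not supported for tag-based rules.

```shell
#!/bin/sh
# print_filtered_config ROLE_ARN DEST_BUCKET PREFIX TAG_KEY TAG_VALUE
# Hypothetical helper: prints a configuration whose rule matches only
# objects with the given prefix AND the given tag.
print_filtered_config() {
    role_arn="$1"
    dest_bucket="$2"
    prefix="$3"
    tag_key="$4"
    tag_value="$5"
    cat <<EOF
{
  "Role": "$role_arn",
  "Rules": [
    {
      "ID": "PrefixAndTagExample",
      "Status": "Enabled",
      "Priority": 1,
      "Filter": {
        "And": {
          "Prefix": "$prefix",
          "Tags": [{"Key": "$tag_key", "Value": "$tag_value"}]
        }
      },
      "DeleteMarkerReplication": {"Status": "Disabled"},
      "Destination": {"Bucket": "arn:aws:s3:::$dest_bucket"}
    }
  ]
}
EOF
}

# Usage (prefix and tag are examples only):
#   print_filtered_config arn:aws:iam::ACCOUNT_ID:role/REPLICATION_ROLE \
#       DESTINATION_BUCKET logs/ replicate true > replication-filtered.json
```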

Enterprise File Backup Solution for Amazon S3 Object Storage

While native S3 live replication provides valuable redundancy, it does not deliver true backup capabilities such as point-in-time recovery or comprehensive ransomware protection—which are essential safeguards against accidental deletions and sophisticated threats. To address these gaps, Vinchin Backup & Recovery stands out as a professional, enterprise-grade file backup solution supporting mainstream platforms including Amazon S3 object storage, Windows/Linux file servers, and NAS devices.

Vinchin Backup & Recovery leverages proprietary technologies like simultaneous scanning with data transfer and merged file transmission streams, enabling exceptionally fast backup speeds that surpass other vendors’ offerings in real-world scenarios.

Among its robust feature set, five highlights stand out as especially relevant here: incremental backups minimize unnecessary data transfer; wildcard filtering streamlines job setup; multi-level compression optimizes storage usage; cross-platform restore allows flexible recovery between file server/NAS/object storage targets; and strong encryption ensures sensitive information remains protected throughout backup cycles—all contributing toward efficient management and resilient security posture.

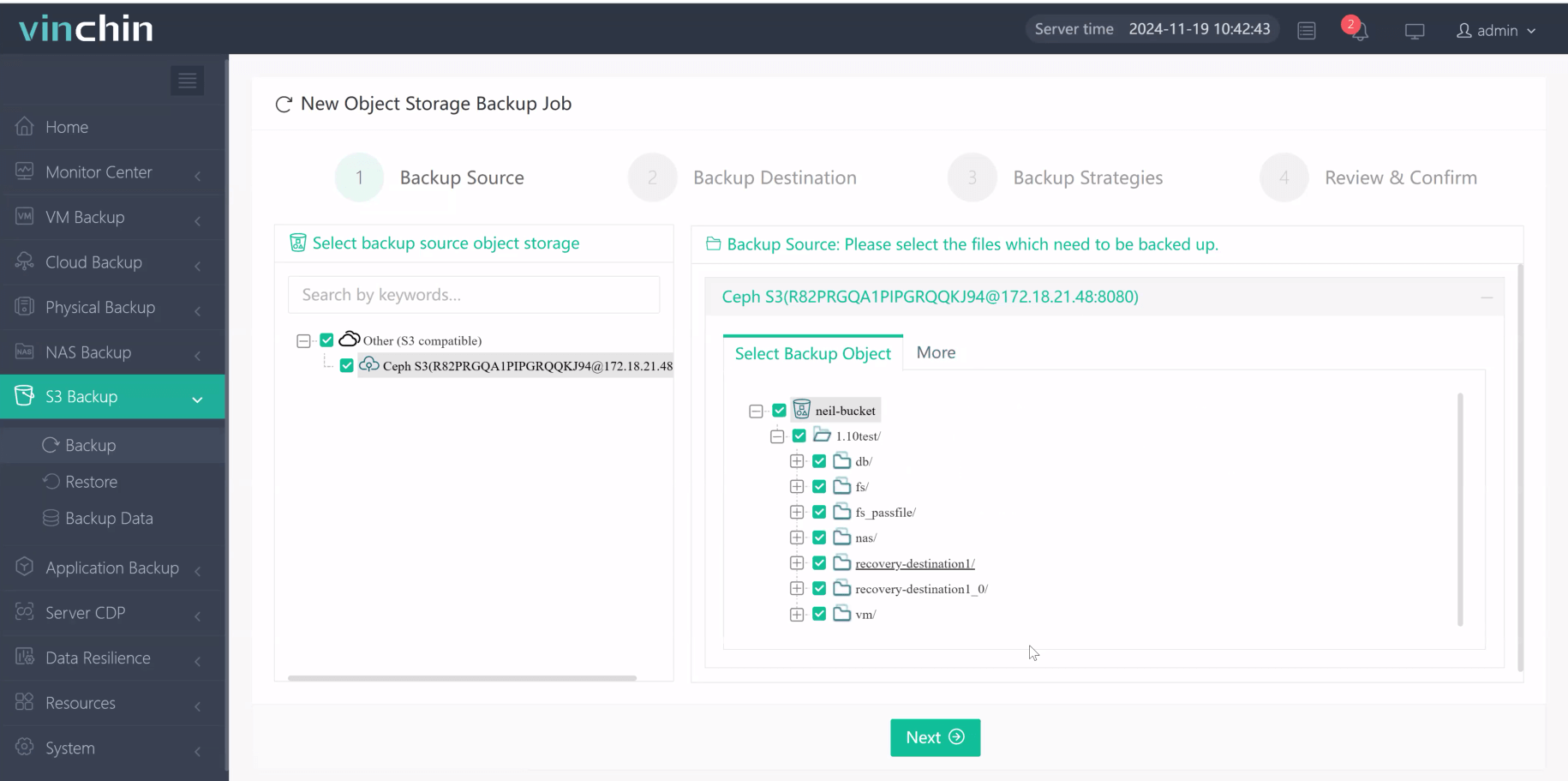

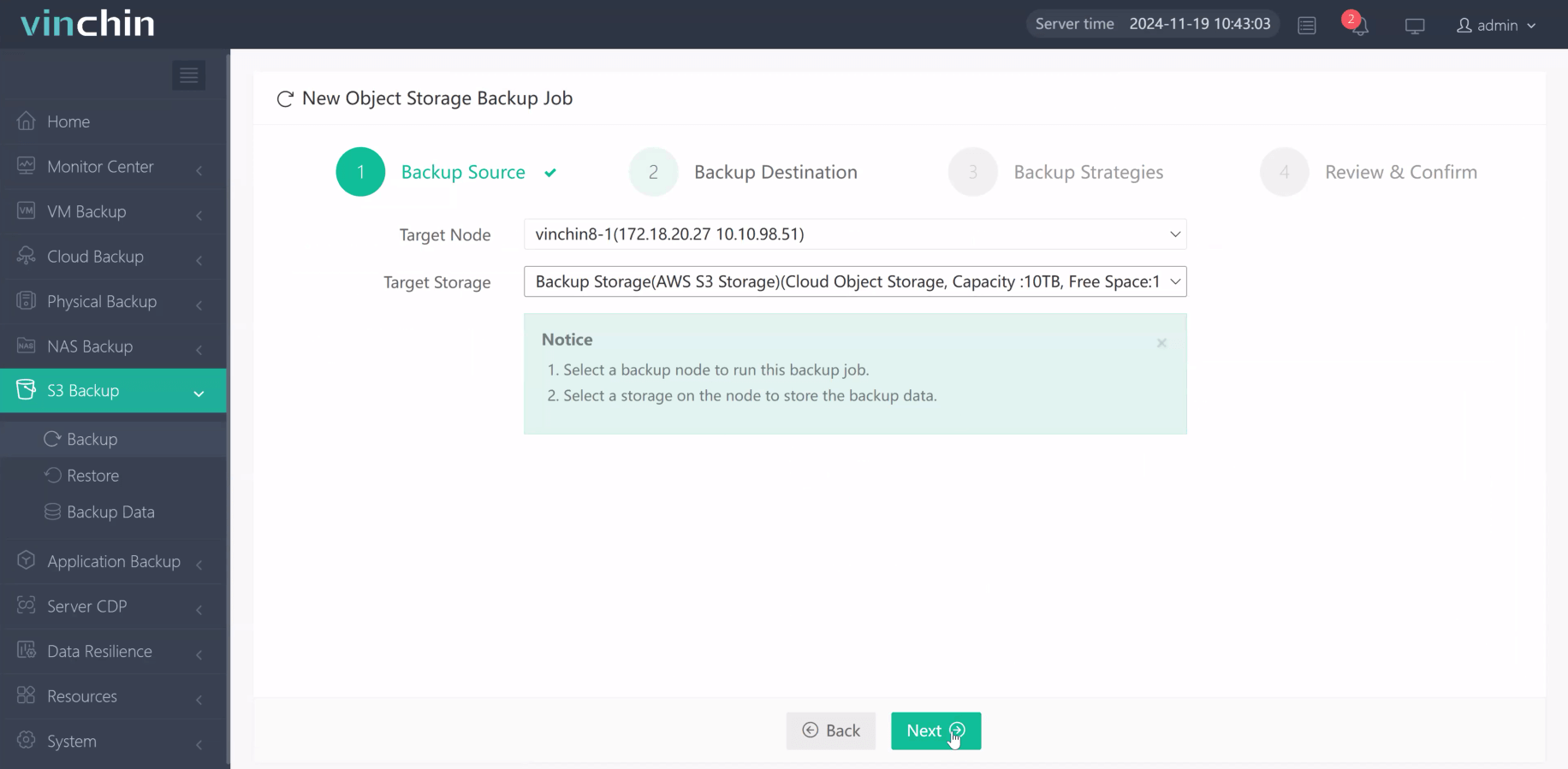

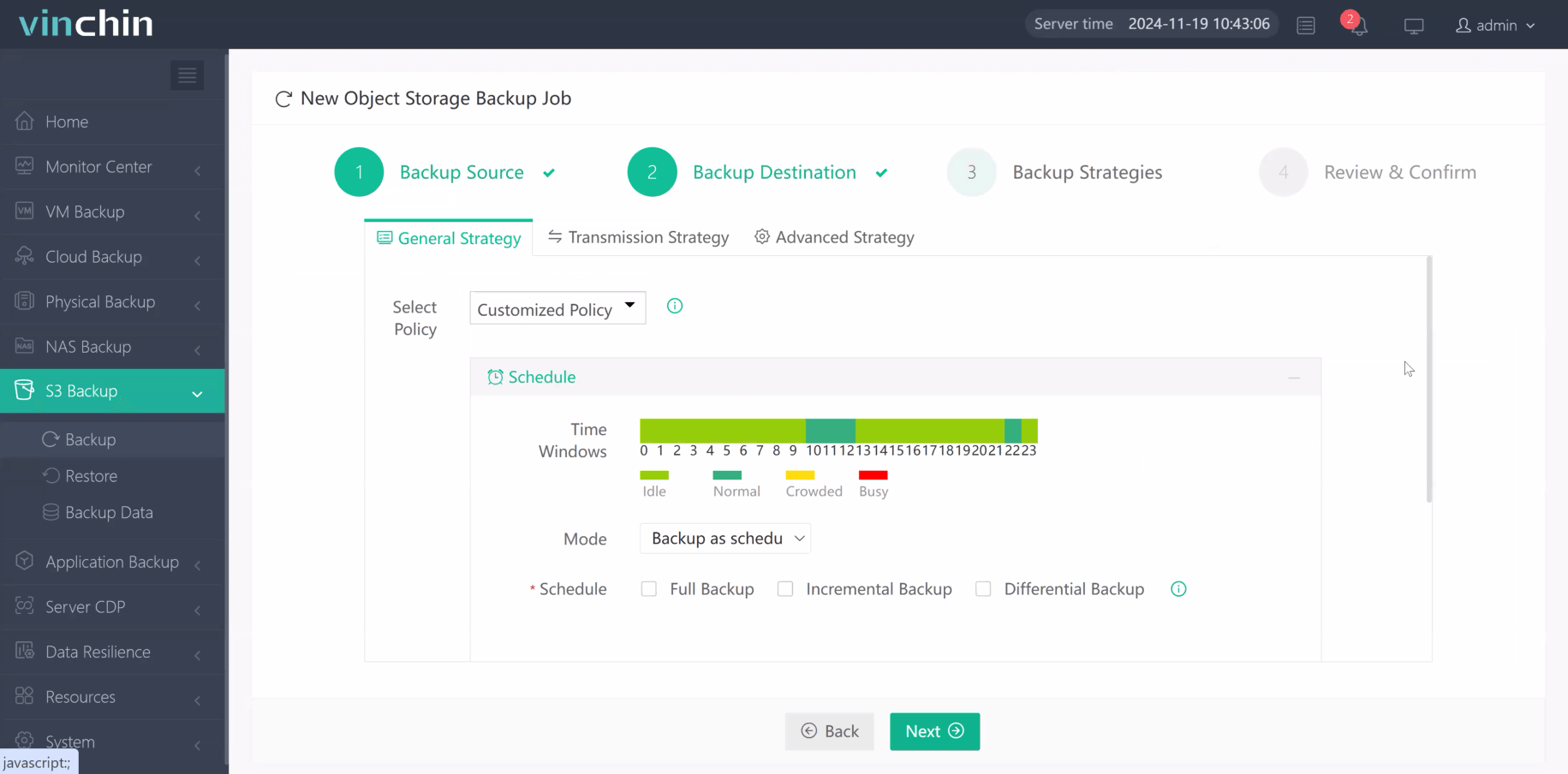

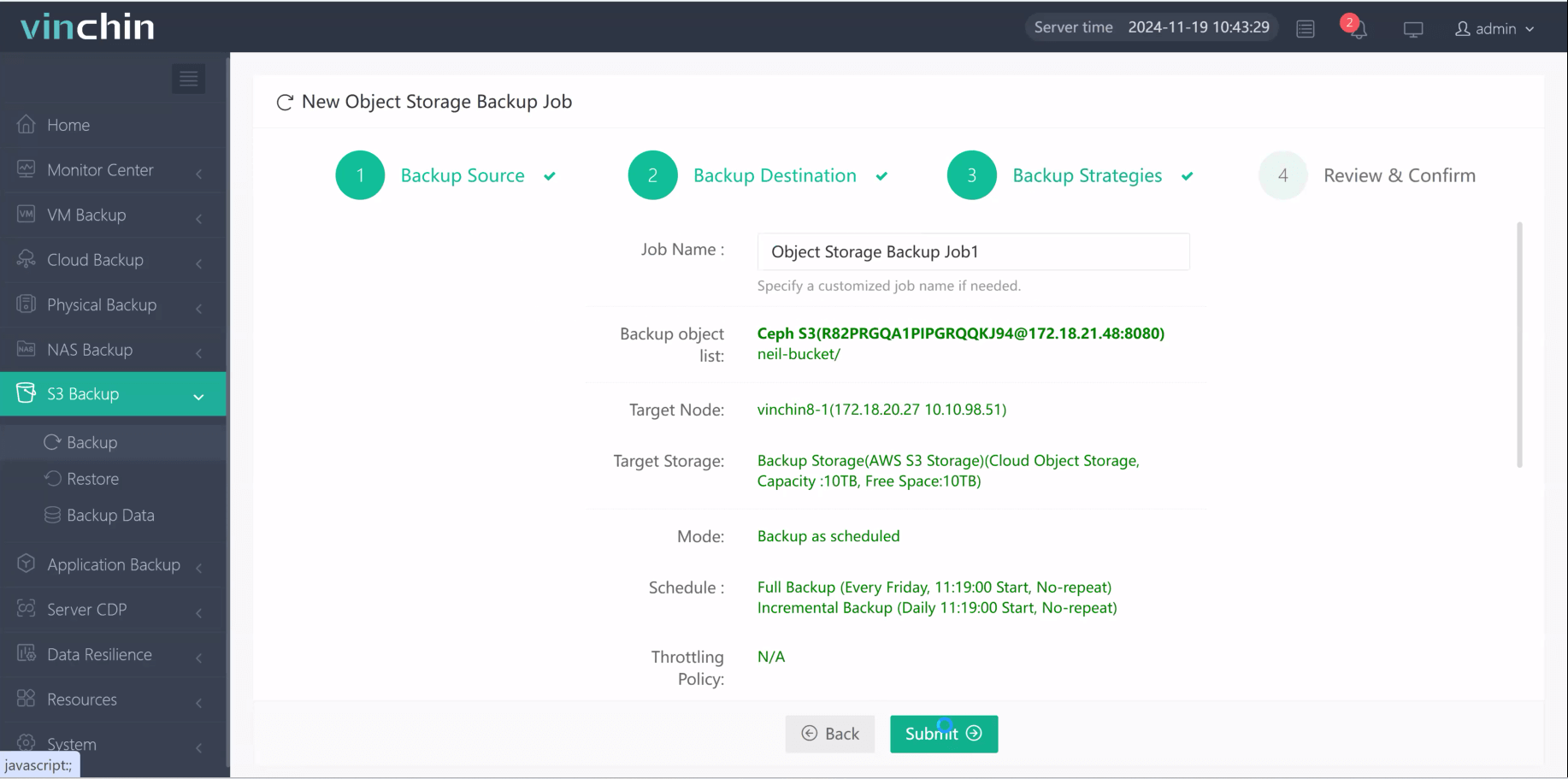

The web console of Vinchin Backup & Recovery is designed for simplicity. Backing up Amazon S3 takes four steps:

1. Select the object to back up

2. Select the backup destination

3. Select strategies

4. Submit the job

Recognized globally with thousands of satisfied customers and top industry ratings, Vinchin Backup & Recovery offers a fully featured free trial valid for 60 days, so you can experience enterprise-grade protection firsthand.

S3 Live Replication FAQs

Q1 Can delete markers be replicated between buckets?

A1 Yes—but only if you enable “Replicate delete markers” during rule creation; otherwise deletions remain local only.

Q2 Is there any way existing objects can be retroactively replicated without batch jobs?

A2 No. All pre-existing objects require explicit action via Batch Replication; standard rules affect only objects added or modified after the rule is enabled.

Conclusion

S3 live replication provides vital insurance against outages and data loss while improving compliance and performance worldwide, but remember that it is not a true backup. For comprehensive recovery, Vinchin offers fast, secure solutions tailored specifically for modern cloud environments.
