- What is SCSI Bus Sharing in VMware?
- How Does Storage Architecture Affect SCSI Bus Sharing?
- Setting Up SCSI Bus Sharing Step-by-Step
- Key Recommendations When Using SCSI Bus Sharing
- Enterprise-Class Backup Solution Across Virtual Platforms
- FAQs About SCSI Bus Sharing
- Conclusion

SCSI bus sharing is a VMware feature that lets multiple virtual machines (VMs) access the same disk at the same time. It is essential when you build high-availability guest clusters or need shared storage between VMs for mission-critical workloads. But what exactly does SCSI bus sharing do, how do you set it up safely, and what should you watch out for in production? This guide covers everything from basic concepts to advanced configuration tips, so you can use SCSI bus sharing confidently in your VMware infrastructure.

What is SCSI Bus Sharing in VMware?

At its core, SCSI (Small Computer System Interface) is the standard that defines how computers talk to storage devices such as disks and tape drives. In VMware environments, SCSI bus sharing lets two or more VMs access the same virtual disk or raw device mapping (RDM) simultaneously. Each VM attaches the disk to its own virtual SCSI controller, and that controller is configured for bus sharing.

This setup is crucial for guest clustering solutions such as Windows Server Failover Clustering (WSFC), including SQL Server Failover Cluster Instances, where nodes must coordinate access to shared data volumes. Without proper coordination at both the hypervisor and guest OS level, simultaneous writes could corrupt data.

When you enable SCSI bus sharing on a VM's controller in vSphere, you are telling VMware that every disk on that controller may be accessed by multiple VMs. Access is then arbitrated by the clustering software in the guests, not by vSphere's usual exclusive per-VM disk locking.

Understanding SCSI Reservations

Before going further, it helps to know how disk access is coordinated under the hood. When several systems share a physical LUN (Logical Unit Number), they use SCSI reservations. This mechanism grants one node ownership of the disk, or of a critical operation on it, so that only one node writes at a time. Windows Server failover clusters, for example, use SCSI-3 persistent reservations for this. Together with quorum, these reservations help guest clusters built with Windows Server or Linux HA tools avoid "split-brain" scenarios, in which two nodes write conflicting data.

If too many nodes or hosts contend for the same shared LUN without proper design (for example, many hosts repeatedly issuing reservations against one LUN), reservation conflicts pile up and performance drops sharply. This situation is sometimes called a reservation storm.

Three Modes of SCSI Bus Sharing

VMware offers three modes:

None: No sharing; each VM’s disks are private.

Virtual: Disks can be shared between VMs on the same ESXi host.

Physical: Disks can be shared between VMs running on different ESXi hosts—ideal for high availability across hosts.

Choosing the right mode depends on your cluster design:

For single-host clusters or test labs: Virtual mode suffices.

For production clusters spanning multiple hosts: Physical mode is required—and demands block-level shared storage accessible by all hosts at once.
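The mode decision above can be sketched as a small Python function that maps where the cluster nodes run to a bus sharing setting. The return value mirrors the values of the `scsiN.sharedBus` key in a VM's .vmx file ("none", "virtual" or "physical"). The function name and its input format (a dict of VM name to ESXi host name) are illustrative assumptions, not a VMware API.

```python
def choose_bus_sharing_mode(node_hosts: dict[str, str]) -> str:
    """Suggest a SCSI bus sharing mode from a {VM name: ESXi host} mapping.

    The return value matches the .vmx setting scsiN.sharedBus.
    """
    if len(node_hosts) < 2:
        # A single VM has nothing to share with, so its disks stay private.
        return "none"
    if len(set(node_hosts.values())) == 1:
        # All nodes run on one ESXi host (single-host cluster or test lab).
        return "virtual"
    # Nodes are spread across hosts: cross-host sharing needs Physical mode.
    return "physical"


print(choose_bus_sharing_mode({"sql-node1": "esx01", "sql-node2": "esx01"}))  # virtual
print(choose_bus_sharing_mode({"sql-node1": "esx01", "sql-node2": "esx02"}))  # physical
```

The sketch only looks at where nodes run today. A production cluster that is meant to survive a host failure should be planned for Physical mode from the start.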

Comparing SCSI Bus Sharing vs Multi-Writer Mode

It’s easy to confuse these features—but they serve different needs:

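| Feature | SCSI Bus Sharing | Multi-Writer Mode |
|---|---|---|
| Use Case | Guest-managed clustering (e.g., WSFC) | Parallelized apps needing concurrent writes (e.g., Oracle RAC) |
| Data Integrity | Managed by guest clustering software via SCSI reservations | Requires app-layer coordination |
| Host Requirements | Shared storage; Physical mode when nodes run on different hosts | Shared VMFS or vSAN datastore; VMs can run on different hosts |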

With bus sharing, clustering software inside each VM decides which node has write access at any moment, using mechanisms such as quorum disks and fencing agents. With multi-writer mode, multiple VMs may write concurrently, and application logic (such as a clustered database's own locking) must prevent corruption. VMware itself provides no arbitration.
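One practical way to tell the two features apart is to see where each is recorded in a VM's .vmx file. Bus sharing is a property of the controller (`scsiN.sharedBus`). Multi-writer is a property of an individual disk (`scsiN:M.sharing = "multi-writer"`). The minimal Python sketch below parses simple .vmx-style `key = "value"` lines and reports both. The parser is deliberately simplified, and the sample settings are made up.

```python
import re

# Matches simple .vmx lines such as: scsi1.sharedBus = "physical"
VMX_LINE = re.compile(r'^\s*([\w.:]+)\s*=\s*"(.*)"\s*$')


def parse_vmx(text: str) -> dict[str, str]:
    """Parse key = "value" lines into a dict with lower-cased keys."""
    config = {}
    for line in text.splitlines():
        match = VMX_LINE.match(line)
        if match:
            config[match.group(1).lower()] = match.group(2)
    return config


def sharing_summary(config: dict[str, str]) -> dict[str, list[str]]:
    """List controllers using bus sharing and disks flagged multi-writer."""
    bus_sharing = sorted(
        key.split(".")[0]  # "scsi1.sharedbus" -> "scsi1"
        for key, value in config.items()
        if key.endswith(".sharedbus") and value.lower() != "none"
    )
    multi_writer = sorted(
        key.rsplit(".", 1)[0]  # "scsi2:0.sharing" -> "scsi2:0"
        for key, value in config.items()
        if key.endswith(".sharing") and value.lower() == "multi-writer"
    )
    return {"bus_sharing": bus_sharing, "multi_writer": multi_writer}


sample = '''
scsi1.virtualDev = "lsisas1068"
scsi1.sharedBus = "physical"
scsi1:0.fileName = "quorum.vmdk"
scsi2.sharedBus = "none"
scsi2:0.fileName = "racdata.vmdk"
scsi2:0.sharing = "multi-writer"
'''
print(sharing_summary(parse_vmx(sample)))
# {'bus_sharing': ['scsi1'], 'multi_writer': ['scsi2:0']}
```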

How Does Storage Architecture Affect SCSI Bus Sharing?

The underlying storage platform largely determines whether your configuration works smoothly or causes problems later.

Designing Shared Storage for Clustered Disks

For physical-mode bus sharing across ESXi hosts:

All participating hosts must see exactly the same LUNs (the same NAA device identifiers). This requires consistent Fibre Channel zoning or iSCSI target mapping.

Block-based SAN storage (Fibre Channel or iSCSI) is required. NFS datastores won't work because they do not support the SCSI reservations that guest clusters rely on.

Local disks cannot be used unless all clustered VMs run on one host in Virtual mode, which defeats most HA goals.

Make sure your SAN administrator configures access control (LUN masking or Access Control Lists) so that only the authorized ESXi hosts can see cluster LUNs. This prevents accidental overwrites by unrelated workloads.
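To check both the "same LUNs everywhere" rule and the access-control rule, you can compare each host's set of visible device IDs with the set the cluster needs. The minimal Python sketch below assumes you have already collected, per host, the NAA IDs of the LUNs it can see (for example, from each host's storage device list). The host names and the shortened IDs are made up.

```python
def lun_visibility_gaps(host_luns: dict[str, set[str]], cluster_luns: set[str]) -> dict[str, set[str]]:
    """Per participating host, the cluster LUNs it cannot see (empty dict = all good)."""
    return {host: cluster_luns - seen for host, seen in host_luns.items() if cluster_luns - seen}


def unexpected_hosts(all_hosts: dict[str, set[str]], members: set[str], cluster_luns: set[str]) -> set[str]:
    """Hosts outside the cluster that can still see at least one cluster LUN."""
    return {host for host, seen in all_hosts.items() if host not in members and seen & cluster_luns}


# Made-up, shortened device IDs; real NAA IDs are much longer.
cluster_luns = {"naa.6001", "naa.6002"}
all_hosts = {
    "esx01": {"naa.6001", "naa.6002", "naa.6099"},
    "esx02": {"naa.6001"},  # zoning/masking missed naa.6002
    "esx09": {"naa.6002"},  # not a cluster host, yet it sees a cluster LUN
}
members = {"esx01", "esx02"}
members_view = {h: all_hosts[h] for h in sorted(members)}

print(lun_visibility_gaps(members_view, cluster_luns))  # {'esx02': {'naa.6002'}}
print(unexpected_hosts(all_hosts, members, cluster_luns))  # {'esx09'}
```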

VMFS vs vVols Considerations

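Most clusters run on traditional VMFS datastores. Configurations such as SQL Server Always On Failover Cluster Instances with Physical bus sharing use physical compatibility RDMs, or clustered VMDKs in vSphere 7.0 and later. vVols do not use RDMs at all, and shared-disk clustering on vVols or vSAN is supported only in specific vSphere releases and configurations. If you plan future upgrades involving vVols or vSAN: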

Double-check compatibility before migrating clustered workloads

Test thoroughly in non-production first!

Choosing Your Controller Type Wisely

Not all virtual controllers behave equally with clustering:

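Choose a controller type that your vSphere release and guest OS support for clustering. LSI Logic SAS has long been the safe default for shared disks. VMware Paravirtual (PVSCSI) is supported for WSFC only in newer vSphere releases, so check VMware's clustering guide for your version before using it.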

Use dedicated controllers just for shared disks—not mixed with OS/data volumes—to isolate queue depth settings and reduce contention risk.

Why isolate controllers? A separate controller lets you tune performance for each workload type. It also keeps competing I/O streams out of a single queue, a common bottleneck in busy clusters.
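Both controller rules can be checked against a VM's .vmx settings. The controller type is stored under `scsiN.virtualDev`: "lsisas1068" is LSI Logic SAS and "pvscsi" is VMware Paravirtual. Attached disks appear as `scsiN:M.fileName`. The minimal Python sketch below takes those settings as a dict with lower-cased keys. It flags bus-sharing controllers that also carry disks not meant to be shared, and asks you to verify any non-LSI-SAS type. The set of "meant to be shared" disks is an input you supply, which is an assumption of this example.

```python
def shared_controller_warnings(vmx: dict[str, str], shared_disks: set[str]) -> list[str]:
    """Check bus-sharing controllers in a .vmx settings dict (keys lower-cased).

    shared_disks: paths of the disks that are *meant* to be shared (your input).
    """
    warnings = []
    for key, mode in vmx.items():
        if not key.endswith(".sharedbus") or mode.lower() == "none":
            continue
        ctrl = key.split(".")[0]  # e.g. "scsi1"
        dev = vmx.get(f"{ctrl}.virtualdev", "unknown")
        if dev != "lsisas1068":
            warnings.append(f"{ctrl}: controller type {dev}, verify it is supported for shared disks")
        # Disks on this controller appear as scsi1:0.filename, scsi1:1.filename, ...
        for disk_key, path in vmx.items():
            if disk_key.startswith(f"{ctrl}:") and disk_key.endswith(".filename") and path not in shared_disks:
                warnings.append(f"{ctrl}: non-shared disk {path} on a bus-sharing controller")
    return sorted(warnings)


vmx = {
    "scsi0.virtualdev": "pvscsi", "scsi0.sharedbus": "none", "scsi0:0.filename": "node1.vmdk",
    "scsi1.virtualdev": "lsisas1068", "scsi1.sharedbus": "physical",
    "scsi1:0.filename": "quorum.vmdk",
    "scsi1:1.filename": "node1-logs.vmdk",  # belongs on scsi0 instead
}
print(shared_controller_warnings(vmx, {"quorum.vmdk"}))
# ['scsi1: non-shared disk node1-logs.vmdk on a bus-sharing controller']
```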

Setting Up SCSI Bus Sharing Step-by-Step

Let's break the configuration into clear stages, from planning through automation, so that even large-scale deployments stay manageable.

Start by confirming these basics:

1. You have two or more VMs that need simultaneous disk access, for example as part of a Windows Server Failover Cluster.

2. All involved ESXi hosts have identical storage paths (Fibre Channel or iSCSI) to your chosen SAN LUN(s).

3. The target virtual disk resides on block-based shared storage, not on local drives (except for single-host test setups).

4. Your vSphere edition and version support the clustering setup you plan, particularly cross-host (Physical) mode. Confirm licensing and support requirements in VMware's documentation for your release.

Remember: if you use RDMs instead of standard VMDKs (for instance, when an application needs direct access to hardware features), the process differs slightly. It follows the same principles for controller assignment and permissions.

Method 1: Configuring Controllers & Disks via vSphere Web Client

Once prerequisites are met:

1. Power off every VM that will share the disk. vSphere does not let you change a controller's bus sharing setting while the VM is powered on.

2. Open vSphere Web Client; right-click each VM > select Edit Settings.

3. Under Virtual Hardware, click Add New Device and choose SCSI Controller if the VM doesn't already have a spare one. Always dedicate one controller solely to cluster/shared disks.

4. Expand the new controller entry and set the SCSI Bus Sharing drop-down to Virtual (same host) or Physical (cross-host).

5. Add an existing hard disk that points to your pre-created .vmdk file on the shared SAN datastore, or map an RDM if the application needs it. Shared VMDKs for clustering must be thick provision eager zeroed.

6. Attach this disk to the newly created shared controller, never to the controller that holds boot or data volumes.

7. Repeat steps 2–6 on every cluster node. Use identical controller numbers and SCSI IDs (for example, SCSI 1:0) everywhere so the guest drivers see the same disk layout during failover events.

8. Power up all affected VMs after double-checking mappings match exactly across nodes.

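Why power off first? vSphere blocks bus sharing changes on a running VM. Reconfiguring all nodes while they are down also ensures that no node writes to the shared disk while another is still being set up. Otherwise, a guest could be unable to see its quorum or resource disks after a reboot.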
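Step 7 is easy to get wrong by hand on larger clusters. The minimal Python sketch below compares, for each shared disk, the SCSI slot (such as 1:0) and bus sharing mode recorded on every node. It reports any disk whose placement differs or that is missing from a node. The input shape, a dict of node name to {disk path: (slot, mode)}, is a hypothetical format that you would fill from the vSphere Client or a PowerCLI export.

```python
def placement_mismatches(
    nodes: dict[str, dict[str, tuple[str, str]]]
) -> dict[str, dict[str, tuple[str, str]]]:
    """Report shared disks whose (SCSI slot, bus sharing mode) differs between nodes.

    nodes maps node name -> {disk path: (slot such as "1:0", bus sharing mode)}.
    A disk absent from a node shows up as ("missing", "") for that node.
    """
    all_disks = {disk for disks in nodes.values() for disk in disks}
    mismatches = {}
    for disk in sorted(all_disks):
        seen = {node: disks.get(disk, ("missing", "")) for node, disks in nodes.items()}
        if len(set(seen.values())) > 1:
            mismatches[disk] = seen
    return mismatches


# Hypothetical inventory for a two-node cluster.
nodes = {
    "sql-node1": {"[san01] sql/quorum.vmdk": ("1:0", "physical")},
    "sql-node2": {"[san01] sql/quorum.vmdk": ("1:1", "physical")},  # wrong SCSI ID
}
print(placement_mismatches(nodes))
```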

If using RDMs:

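Map them the same way on every node. Clusters that span hosts (Physical bus sharing) need physical compatibility mode ("pass-through") RDMs. Virtual compatibility mode is only an option for single-host (Virtual bus sharing) clusters. In both cases, attach the RDMs to the dedicated controller configured above.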
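An RDM's compatibility mode is recorded in the `createType` field of its small text .vmdk descriptor. Physical compatibility mappings use `vmfsPassthroughRawDeviceMap`, and virtual compatibility mappings use `vmfsRawDeviceMap`. The minimal Python sketch below reads that field and flags a virtual compatibility RDM attached to a controller set to Physical bus sharing. The function names are illustrative, and the sample descriptor is abbreviated.

```python
import re

CREATE_TYPE = re.compile(r'^createType="([^"]+)"', re.MULTILINE)


def rdm_compatibility(descriptor: str) -> str:
    """Return "physical", "virtual" or "not-rdm" for a .vmdk descriptor's text."""
    match = CREATE_TYPE.search(descriptor)
    create_type = match.group(1) if match else ""
    if create_type == "vmfsPassthroughRawDeviceMap":
        return "physical"
    if create_type == "vmfsRawDeviceMap":
        return "virtual"
    return "not-rdm"


def rdm_fits_bus_mode(descriptor: str, bus_sharing: str) -> bool:
    """False for a virtual compatibility RDM on a Physical (cross-host) controller."""
    return not (bus_sharing.lower() == "physical" and rdm_compatibility(descriptor) == "virtual")


# Abbreviated descriptor; a real one contains more lines.
descriptor = '# Disk DescriptorFile\nversion=1\ncreateType="vmfsRawDeviceMap"\n'
print(rdm_compatibility(descriptor))              # virtual
print(rdm_fits_bus_mode(descriptor, "Physical"))  # False
```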

Method 2: Automating Validation & Reporting Using PowerCLI

In larger environments with dozens of clusters, automating compliance checks quickly pays off:

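First connect, and stop if vCenter can't be reached (the server and cluster names below are placeholders):

```powershell
# Placeholder values: replace with your own vCenter and cluster names.
$vcServer = 'vcenter.example.local'
$cluster  = 'Prod-Cluster'

try {
    Connect-VIServer -Server $vcServer -ErrorAction Stop | Out-Null
} catch {
    Write-Warning "Could not connect to ${vcServer}: $($_.Exception.Message)"
    exit 1
}
```

Then enumerate every controller with bus sharing enabled, together with the disks attached to it:

```powershell
$report = foreach ($vm in (Get-Cluster -Name $cluster | Get-VM)) {
    $disks  = Get-HardDisk -VM $vm
    $shared = Get-ScsiController -VM $vm | Where-Object { $_.BusSharingMode -ne 'NoSharing' }

    foreach ($ctrl in $shared) {
        # A disk belongs to this controller when its ControllerKey matches the controller's device key.
        $attached = $disks | Where-Object { $_.ExtensionData.ControllerKey -eq $ctrl.ExtensionData.Key }

        [pscustomobject]@{
            Name       = $vm.Name
            Host       = $vm.VMHost.Name
            Controller = $ctrl.Name
            Bus        = $ctrl.ExtensionData.BusNumber
            Type       = $ctrl.Type
            Mode       = $ctrl.BusSharingMode
            Disks      = ($attached.Filename -join '; ')
        }
    }
}

# Interactive, filterable table (needs a Windows desktop session).
$report | Out-GridView
```

Out-GridView pops up an interactive table showing which controllers and disks have bus sharing enabled. It is a fast way to spot misconfigurations before they cause outages. For scheduled or non-Windows runs, write the same objects to a file instead with `$report | Export-Csv -Path .\bus-sharing.csv -NoTypeInformation`.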

Want deeper validation? Extend the script to compare the LUN identifiers each host sees against the expected list, alert on any mismatch, or export the results to CSV reports that are emailed nightly.
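Once the report is in CSV form, you can post-process it offline. One useful check follows from the mode definitions: Virtual mode only shares disks between VMs on the same host, so a Virtual-mode disk whose VMs sit on different hosts is misconfigured. The minimal Python sketch below assumes the CSV has Name, Host, Mode and Disks columns, with Disks holding semicolon-separated VMDK paths, and was exported with `-NoTypeInformation`. Adjust the column names to whatever your script emits. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict


def virtual_mode_across_hosts(csv_text: str) -> dict[str, set[str]]:
    """Shared disks in Virtual mode whose VMs run on more than one ESXi host.

    Assumes Name, Host, Mode and Disks columns, with Disks holding "; "-separated paths.
    """
    hosts_per_disk = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["Mode"].strip().lower() != "virtual":
            continue
        for disk in (d.strip() for d in row["Disks"].split(";")):
            if disk:
                hosts_per_disk[disk].add(row["Host"])
    return {disk: hosts for disk, hosts in hosts_per_disk.items() if len(hosts) > 1}


report = '''"Name","Host","Mode","Disks"
"sql-node1","esx01","Virtual","[san01] sql/quorum.vmdk"
"sql-node2","esx02","Virtual","[san01] sql/quorum.vmdk"
"app-node1","esx01","Physical","[san01] app/data.vmdk"
'''
print(virtual_mode_across_hosts(report))
```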

Key Recommendations When Using SCSI Bus Sharing

Success comes from careful planning. Here are key recommendations based on real-world experience:

1. Always dedicate separate controllers just for clustered/shared disks rather than mixing them with other volumes; this isolates queue depths and avoids unnecessary contention during heavy loads.

2. Monitor reservation activity regularly with esxtop. In the disk device view (press u), enable the reservation statistics fields and watch RESV/s and CONS/s (reservation conflicts per second). Spikes in conflicts can indicate a reservation storm, for example when several nodes fail over or restart at the same time.

3. Document exact mappings—including controller numbers/bus IDs/LUN paths—in change management records so future upgrades/migrations don’t break dependencies unexpectedly.
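For recommendation 2, you can capture esxtop in batch mode (for example, `esxtop -b -d 5 -n 60 > capture.csv`) and scan the reservation-conflict counter for spikes offline. The column names in the batch CSV vary by ESXi version and device. The minimal Python sketch below therefore works on a plain list of per-interval CONS/s samples that you have already extracted. The "factor times the median" rule and its default values are arbitrary assumptions to tune for your environment.

```python
from statistics import median


def conflict_spikes(samples: list[float], factor: float = 5.0, floor: float = 1.0) -> list[int]:
    """Return indexes of samples well above the typical conflict rate.

    A sample counts as a spike when it exceeds both `floor` conflicts/s and
    `factor` times the median of all samples.
    """
    if not samples:
        return []
    threshold = max(floor, factor * median(samples))
    return [i for i, value in enumerate(samples) if value > threshold]


cons_per_sec = [0.0, 0.2, 0.0, 0.1, 6.5, 7.0, 0.1, 0.0]
print(conflict_spikes(cons_per_sec))  # [4, 5]
```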

Enterprise-Class Backup Solution Across Virtual Platforms

When you protect complex VMware infrastructures that use features like SCSI bus sharing, a robust backup solution becomes essential. That's where Vinchin excels, as an enterprise-class option tailored specifically to virtualization environments.

Vinchin Backup & Recovery provides professional-grade backup and disaster recovery for over 15 mainstream virtualization platforms, including VMware, Hyper-V, Proxmox VE, oVirt, OLVM, RHV, XCP-ng, XenServer, OpenStack and ZStack. All of them are managed from a unified console designed for operational simplicity and reliability.

For administrators managing advanced configurations such as SCSI bus sharing within VMware clusters, Vinchin delivers comprehensive protection through:

- forever-incremental backup, which minimizes backup windows and resource usage;
- built-in deduplication and compression, which maximize storage efficiency;
- cross-platform V2V migration between hypervisors and cloud platforms;
- granular restore down to individual files and folders, even within multi-node clustered workloads;
- flexible scheduling policies, including GFS retention strategies suited to enterprise compliance needs.

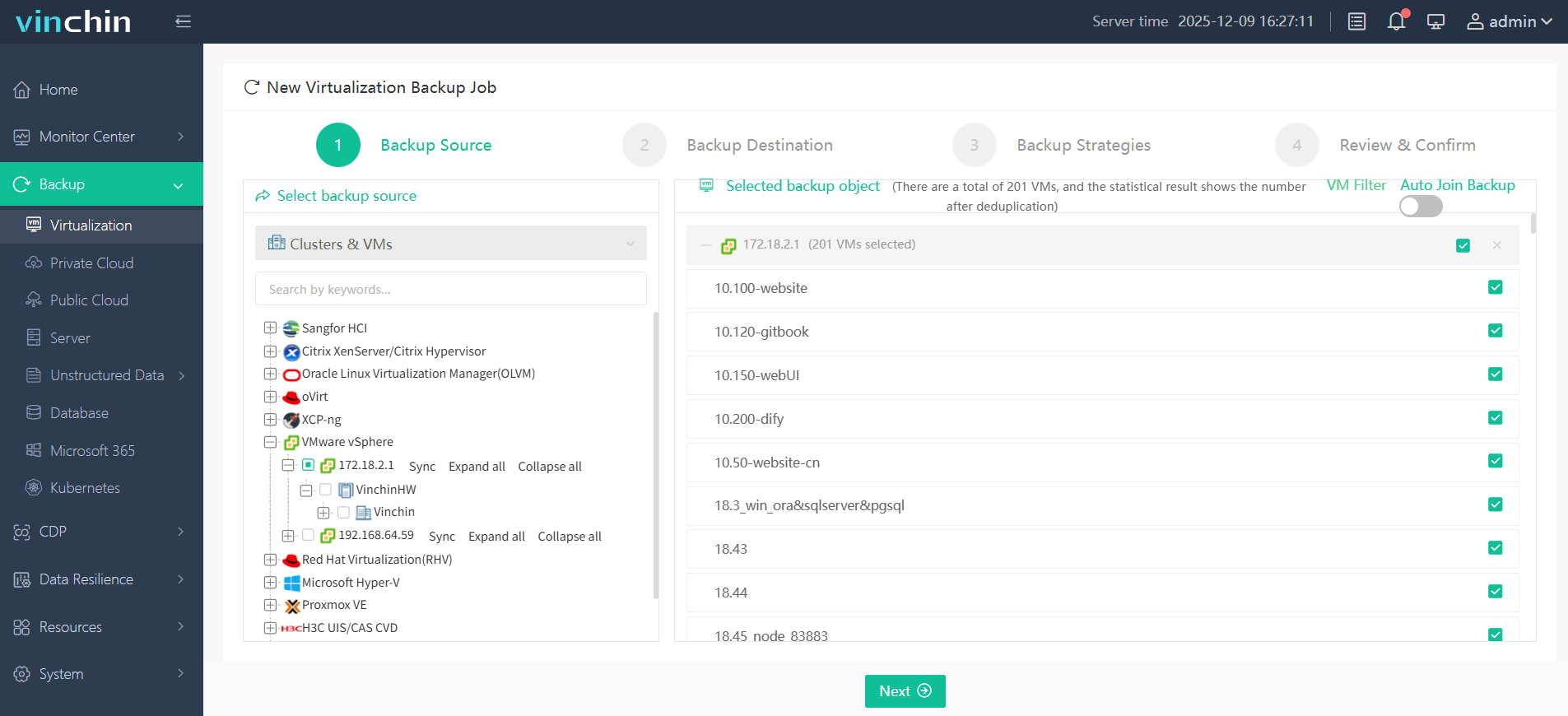

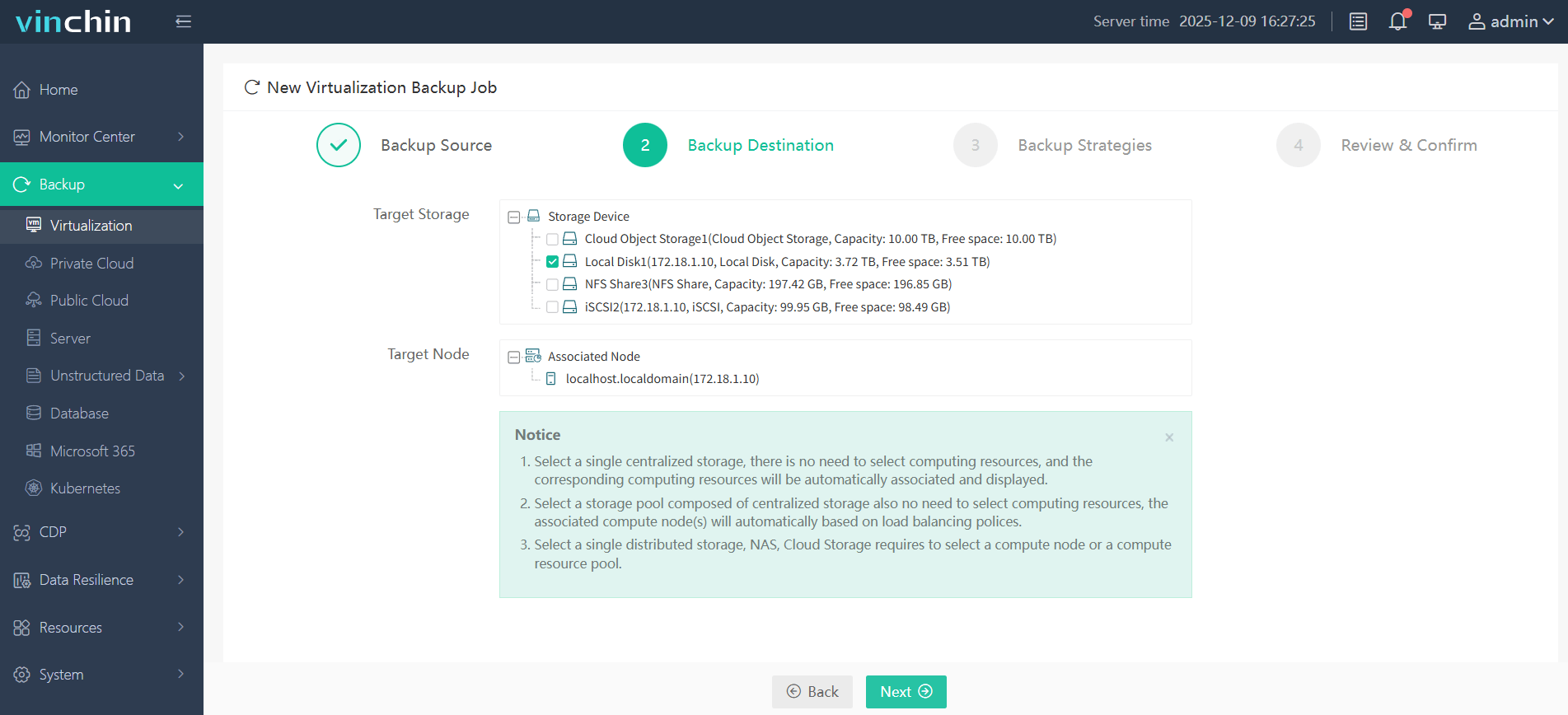

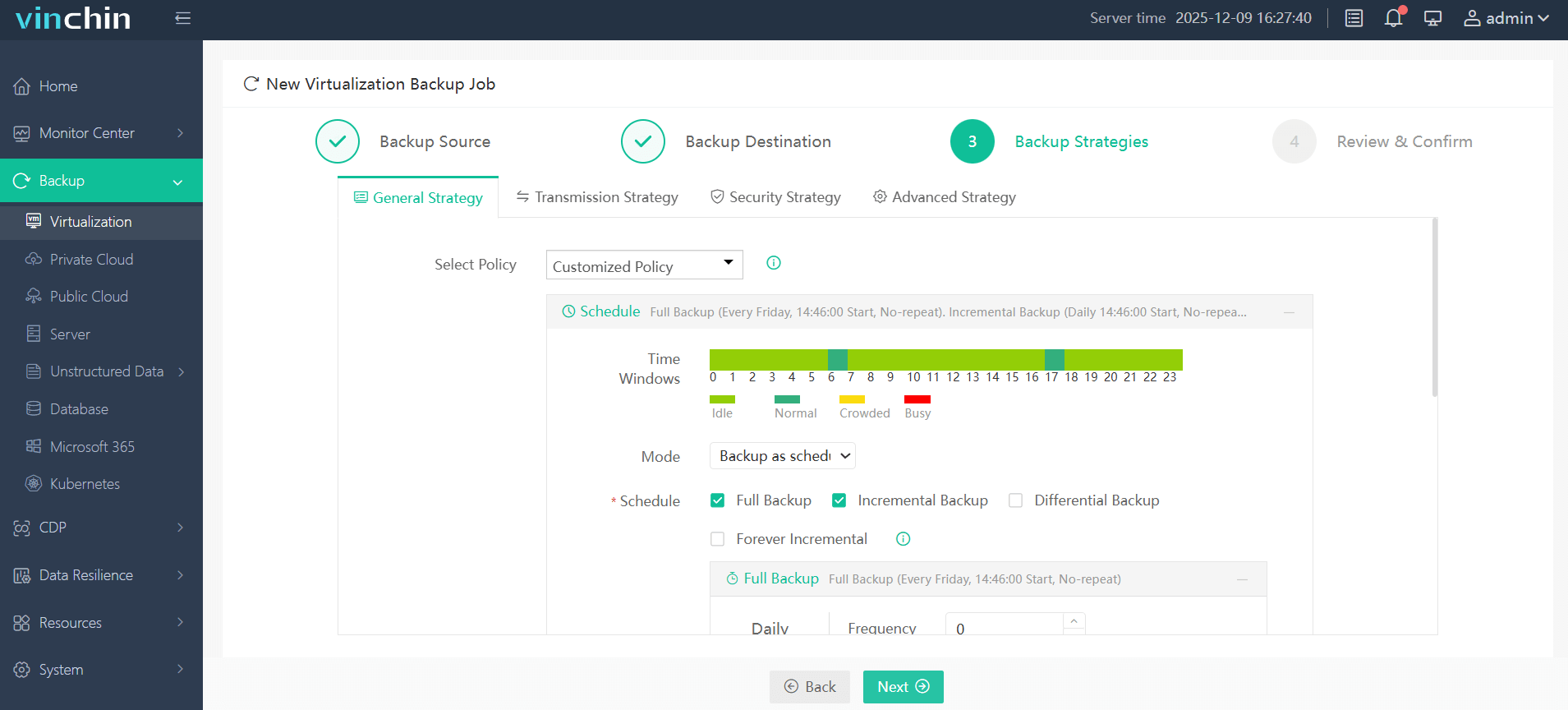

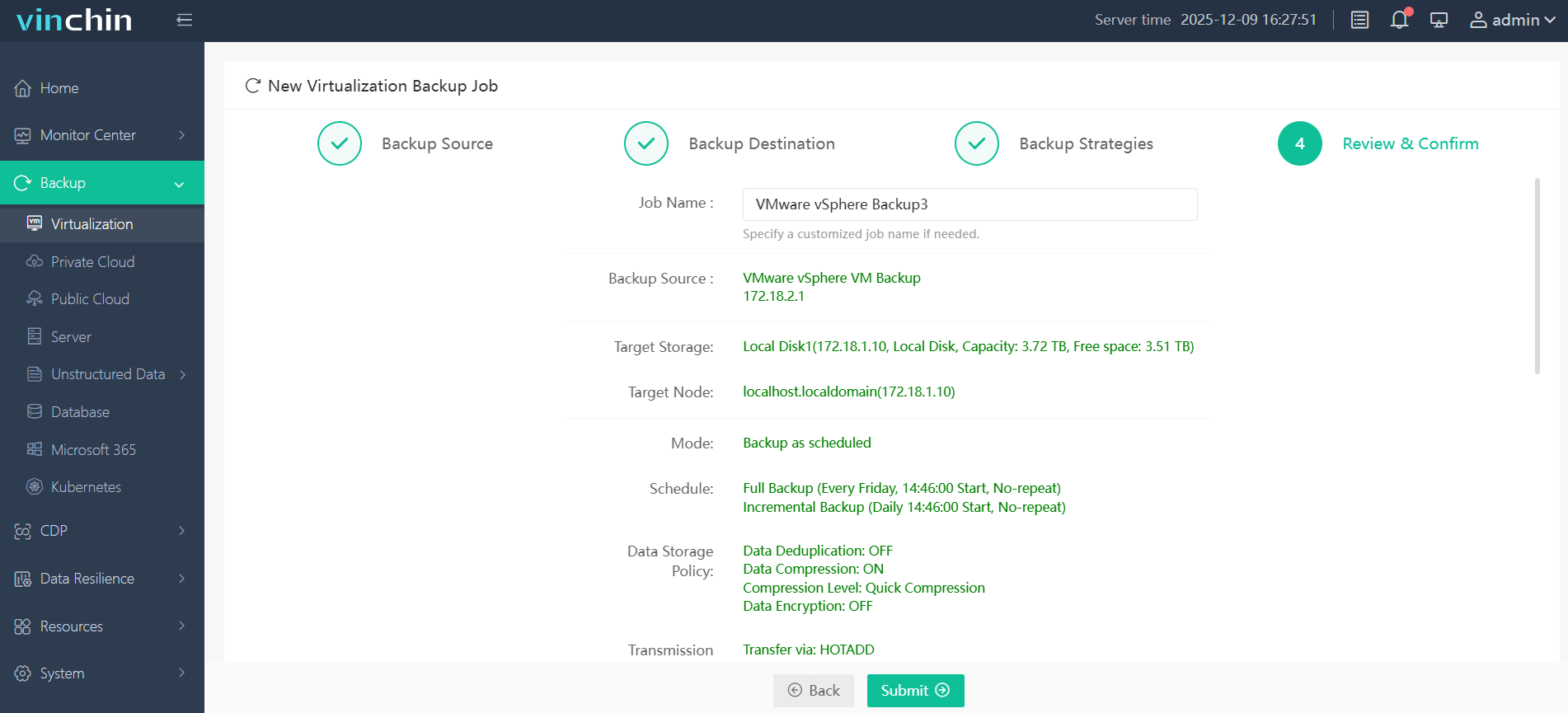

Backing up a specific VMware VM takes just four intuitive steps within Vinchin's web-based interface:

1. Select your target VMware VM(s);

2. Choose desired backup repository/storage location;

3. Define custom backup strategies;

4. Submit the job.

Vinchin is trusted across industries and earns strong customer satisfaction ratings. You can experience its full capabilities risk-free with a 60-day full-featured free trial and deploy enterprise-grade protection within minutes.

FAQs About SCSI Bus Sharing

Q1: Can I use SCSI bus sharing across different datastores?

A1: Each shared disk must live on a single block-based datastore (or LUN, for RDMs) that all participating ESXi hosts can access at the same time. A cluster can use several shared disks on different datastores, as long as each one meets that requirement.

Q2: How do I check which virtual machines currently have SCSI bus sharing enabled?

A2: Connect with `Connect-VIServer`, then run `Get-Cluster <cluster name> | Get-VM | Get-ScsiController` and check each controller's BusSharingMode value (NoSharing, Virtual or Physical).

Q3: What happens if someone tries to snapshot a VM that has a SCSI bus sharing controller?

A3: The snapshot task fails with an error, because vSphere does not support snapshots of VMs with a controller configured for SCSI bus sharing.

Conclusion

Mastering SCSI bus sharing unlocks powerful options for building resilient clusters in VMware environments, as long as you follow best practices for architecture design and operational safeguards. Vinchin makes protecting even complex multi-node deployments straightforward, thanks to deep integration with leading virtualization stacks and robust automation tools built for enterprise IT teams.
